Image restoration of motion artifacts in cardiac arteries and vessels based on a generative adversarial network

Introduction

Cardiovascular disease is the leading cause of disease-related death worldwide. For many years, coronary heart disease has been the leading cause of cardiovascular disease-related death among humans. Coronary computed tomography angiography (CCTA) is an effective screening method for arterial disease (1,2). It determines blood flow in the coronary arteries to facilitate the diagnosis of artery stenosis, occlusion, embolism, pseudoaneurysm, or dissection. Compared with other imaging technologies, CCTA has many advantages. Examinations with CCTA can be used to investigate the arteries and veins of the heart and identify stenosis for timely treatment. Moreover, the required contrast agent can be injected through a forearm vein, which is less traumatic and safer than other methods. Compared with magnetic resonance angiography (MRA), the anatomical details of blood vessels displayed by CCTA are more accurate, and the scan time is shorter. Therefore, research on CCTA imaging technology is of great significance.

However, CCTA also has limitations. While computed tomography (CT) projection data are acquired from different angles, the points (voxels or pixels) in the image matrix are displaced, producing motion artifacts in the CCTA image (3). The severity of the motion artifacts depends on the rate of displacement and on how well the image reconstruction algorithm corrects for it. Two situations commonly cause motion artifacts. First, when the time window selected for reconstruction is inappropriate, the coronary arteries are in a phase of high-speed displacement during imaging. Second, when the heart rate is too fast, the period in which the coronary arteries move slowly becomes too short for the temporal resolution of the CT acquisition.

In recent years, the rapid development of deep neural networks has made CCTA motion artifact correction feasible. Engineers at Philips Labs proposed using the residual network [ResNet; He et al. (4)] to identify and locate motion artifacts in coronary arteries (5). In another study, a 2.5-D convolutional neural network (CNN) was used to calculate the motion vector of motion artifacts in the coronary artery, thereby providing a reference for traditional artifact correction (6). Xie et al. (7) used residual learning with an improved GoogLeNet to learn the artifacts of sparse-view CT reconstruction, subtracted the learned artifacts from the sparsely reconstructed images, and thereby recovered clear corrected images.

Deep learning has a large number of applications in the image field. As a multi-scale CNN, UNet (University of Freiburg, Freiburg im Breisgau, Baden-Württemberg) is a model commonly used for medical image segmentation, and its variants have many applications in medical imaging (8-15). Generative adversarial networks (GANs) perform well in image generation, high-resolution image reconstruction, image style conversion, and other fields, and they offer more possibilities than ordinary CNNs (16). The Wasserstein GAN (WGAN) introduces the Wasserstein distance, which addresses the problem of unstable training and provides a reliable training progress indicator that is highly correlated with the quality of the generated samples (17). The WGAN and various improved models have many applications in medical image processing (18-21). The WGAN with a gradient penalty (WGAN-GP) is an improved version of the WGAN that provides higher performance in image generation (22). Zhang et al. (23) used a pixel2pixel GAN with V-Net as the generator to correct motion artifacts in the right coronary artery, confirming that GANs have the potential to provide a new method of removing motion artifacts in coronary artery images. Additionally, the cycle GAN provides an important deep learning method for medical image processing (24,25). In our previous research, the cycle least-squares GAN (LSGAN) was used to correct motion artifacts in CCTA clinical images, and multiple image indicators confirmed that the cycle LSGAN was more effective in correcting motion artifacts in global images than in regions of interest (ROIs) (26).

In this study, we retrospectively collected CCTA images of 60 patients scanned using retrospective electrocardiography (ECG) gating technology. We combined UNet, WGAN-GP, and cycle GAN to propose a method of cycle WGAN-GP constrained by multiple loss functions (L1 loss, perceptual loss, and Wasserstein loss) to correct motion artifacts in clinical CCTA images. The WGAN-GP method used UNet as a generator, and WGAN-GP was used as a unit for constructing the cycle WGAN-GP. In cycle WGAN-GP, we added a content discriminator to stabilize the cycle GAN. The performance of the method was assessed using image indicators, including the peak signal-to-noise ratio (PSNR), structural similarity (SSIM), normalized mean square error (NMSE), and radiologist clinical quantitative scores.

Methods

Network

Compared with the general GAN, the WGAN proposed in 2017 addressed the problems of vanishing gradients, gradient instability, and mode collapse. The WGAN-GP improved upon WGAN, providing higher performance in image generation. Cycle GAN uses 2 GANs that constrain each other to generate the target images more stably and reliably and to ensure correlation between the input images and generated images.

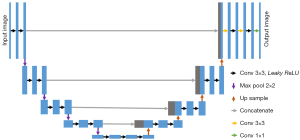

In this study, cycle WGAN-GP included 2 WGAN-GPs and a content discriminator. Each WGAN-GP contained a generator and a discriminator. The generator was UNet, and the discriminator was a CNN. The structure of the content discriminator is shown in Figure 1. The content discriminator contained 6 non-linear processing steps. The first 4 were convolutional layers with a convolution kernel of 3, a stride of 2, a padding of 1, and a leaky rectified linear unit (ReLU) activation function. These were followed by an adaptive average pooling layer and, finally, a convolutional layer with a convolution kernel of 1 and a stride of 1.
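The layer settings above determine how quickly the content discriminator shrinks its input. The following is a minimal sketch that applies the usual convolution output-size formula to the 6 steps; the input side length of 512 and the pooling target size of 1 are assumptions made for illustration and are not stated in the text.

```python
from typing import List


def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution (floor convention, dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def content_discriminator_sizes(size: int) -> List[int]:
    """Feature-map side length after each of the 6 steps described in the text."""
    sizes = []
    for _ in range(4):
        # Four convolutions with kernel 3, stride 2, padding 1.
        size = conv_out(size, kernel=3, stride=2, padding=1)
        sizes.append(size)
    # Adaptive average pooling; an output size of 1 is assumed here.
    size = 1
    sizes.append(size)
    # Final convolution with kernel 1 and stride 1 (no padding assumed).
    size = conv_out(size, kernel=1, stride=1, padding=0)
    sizes.append(size)
    return sizes


# Hypothetical 512 x 512 input.
print(content_discriminator_sizes(512))  # [256, 128, 64, 32, 1, 1]
```

Under these assumptions, each stride-2 convolution halves the side length, so the four convolutional layers reduce the input by a factor of 16 before pooling.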

A discriminator was designed to improve the generalization of the generator. The discriminator used 5 non-linear mappings, each containing a convolution layer, a leaky ReLU, and an average pooling layer. In each convolution layer, the size of the convolution kernel was 4, the stride was 2, and the padding was 1, applied as reflection padding. To perceive the various scales of the input images, the discriminator used the image feature matrix obtained from each non-linear mapping to calculate the loss. With this process, the discriminator had an improved ability to distinguish the overall features and local features of the input images.

The generator of the original WGAN was an ordinary CNN, which generates images by learning relevant features. This study used UNet as the generator to integrate information at different scales. The structure of UNet is shown in Figure 2. The overall structure included 5 scales. At each scale, the feature matrices from the encoder were concatenated with the feature matrices from the decoder. In this way, deep semantic information and shallow position information could be merged to extract more comprehensive image features and improve the effect of CCTA image reconstruction. The orange arrow in Figure 2 represents the convolutional layer with a convolution kernel of 3, padding of 1, and dilation of 2 (27). The orange and black arrows together form a convolutional layer with a receptive field of 7×7. In the last layer, leaky ReLU was used instead of ReLU. To prevent checkerboard artifacts in the generated image, the decoder used bilinear interpolation for upsampling instead of transposed convolution.
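The 7×7 receptive field quoted above can be checked with a short calculation. This sketch assumes that the black arrow denotes an ordinary 3×3 convolution (dilation 1) and that both layers use a stride of 1; neither detail is stated explicitly in the text.

```python
def receptive_field(layers):
    """Receptive field of a stack of stride-1 convolutions.

    Each layer is given as (kernel_size, dilation). A dilated kernel widens
    the receptive field by dilation * (kernel_size - 1) input positions.
    """
    rf = 1
    for kernel, dilation in layers:
        rf += dilation * (kernel - 1)
    return rf


# Assumed pairing: a plain 3x3 convolution followed by a 3x3 convolution with dilation 2.
print(receptive_field([(3, 1), (3, 2)]))  # 7
```

The plain convolution contributes 2 positions and the dilated one contributes 4, giving 1 + 2 + 4 = 7 along each axis.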

Loss function

In designing the loss function of the entire framework, we designed separate loss functions for the content discriminator, the discriminator, and the generator. First, the loss of the content discriminator in cycle WGAN-GP was designed to stabilize the training and convergence of the generator and to avoid the problems of vanishing gradients and mode collapse. Therefore, we used the least-squares loss in the content discriminator (28). The least-squares loss for training the content discriminator and the generator was determined as follows:

where x1 and x2 represent two different images.
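For orientation, the least-squares objective of Mao et al. (28) in its common 0/1-target form is sketched below. The symbol D_c for the content discriminator, the symbol L_LG for the generator-side term, and the assignment of target 1 to x1 and target 0 to x2 are assumptions made for illustration; they may differ from the formulation used in this study.

```latex
\begin{aligned}
L_{D_c} &= \tfrac{1}{2}\,\mathbb{E}_{x_1}\!\left[\left(D_c(x_1) - 1\right)^2\right]
         + \tfrac{1}{2}\,\mathbb{E}_{x_2}\!\left[D_c(x_2)^2\right] \\
L_{LG}  &= \tfrac{1}{2}\,\mathbb{E}_{x_2}\!\left[\left(D_c(x_2) - 1\right)^2\right]
\end{aligned}
```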

The loss function of the discriminator of WGAN-GP was the Wasserstein loss with a gradient penalty, which played an important role in preventing vanishing gradients and mode collapse. The formula was as follows:

where D and G represent the discriminator and generator, respectively, and λ is the gradient penalty coefficient, which was set to 10.
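The description above matches the standard WGAN-GP critic objective of Gulrajani et al. (22), which can be written as follows. The symbols a (image with motion artifacts), b (target image), and the interpolated sample \hat{b} are notation assumed here for illustration.

```latex
\begin{aligned}
L_D &= \mathbb{E}_{a}\!\left[D(G(a))\right] - \mathbb{E}_{b}\!\left[D(b)\right]
     + \lambda\,\mathbb{E}_{\hat{b}}\!\left[\left(\left\lVert \nabla_{\hat{b}} D(\hat{b}) \right\rVert_2 - 1\right)^2\right], \\
\hat{b} &= \epsilon\, b + (1 - \epsilon)\, G(a), \qquad \epsilon \sim U[0, 1], \qquad \lambda = 10
\end{aligned}
```

The penalty term pushes the gradient norm of the discriminator toward 1 on points sampled between real and generated images, which is how WGAN-GP enforces the Lipschitz constraint without weight clipping.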

After designing the loss function of the discriminator, we designed the loss function for the generator in the WGAN-GP cycle. First, the loss function of the generator itself in WGAN-GP was as follows:
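In the standard WGAN-GP formulation (22), the adversarial term of the generator is the negated discriminator score of the generated image. With a denoting an image with motion artifacts (notation assumed here), it reads:

```latex
L_G = -\,\mathbb{E}_{a}\!\left[D(G(a))\right]
```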

To improve the generator’s understanding of the CCTA image structure, we added perceptual loss and L1 loss. The perceptual loss was computed as the difference between the VGG19 feature maps of the synthesized images and those of the target images, and the generator was then optimized by back propagation (29). Perceptual loss can greatly improve the convergence speed of a generator, allowing it to learn the characteristics of the target image more quickly. The L1 loss was the per-pixel loss between the generated and target images, which allowed the generator to learn the distribution of the target images at the pixel level. The perceptual loss was determined as follows:
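As a reference point, the feature reconstruction loss of Johnson et al. (29) and a per-pixel L1 loss can be written as below. Here \phi_j denotes the feature map of a VGG19 layer j with shape C_j × H_j × W_j; the choice of layer, the squared L2 norm in the perceptual term, and the symbols a (input with artifacts) and b (target) are assumptions, since the text does not specify them.

```latex
\begin{aligned}
L_p &= \mathbb{E}_{a,b}\!\left[\frac{1}{C_j H_j W_j}
       \left\lVert \phi_j(G(a)) - \phi_j(b) \right\rVert_2^2\right] \\
L_1 &= \mathbb{E}_{a,b}\!\left[\left\lVert G(a) - b \right\rVert_1\right]
\end{aligned}
```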

To improve the stability of the generator in the loop structure, we used the L1 loss as the cycle consistency loss of the loop structure. The cycle consistency loss was determined as follows:
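A cycle consistency loss based on the L1 norm usually takes the form below. G_{a2b} corresponds to the generator that maps artifact images to corrected images; the reverse generator G_{b2a} and the symbols a and b for the two image domains are names assumed here for illustration.

```latex
L_C = \mathbb{E}_{a}\!\left[\left\lVert G_{b2a}(G_{a2b}(a)) - a \right\rVert_1\right]
    + \mathbb{E}_{b}\!\left[\left\lVert G_{a2b}(G_{b2a}(b)) - b \right\rVert_1\right]
```

Each term asks that an image translated to the other domain and back again returns to itself, which ties the generated image to the content of its input.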

When training a batch in cycle WGAN-GP, the content discriminator was trained 3 times, and the discriminator and generator were each trained once. When training the generator, its loss function was as follows:

where Wp, W1, WLG, WG and WC were 0.5, 1, 0.1, 0.1, and 1, respectively.
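The weights listed above suggest that the generator objective is a weighted sum of five terms. The sketch below shows one way to combine them; the mapping of WLG to the least-squares (content discriminator) term, WG to the Wasserstein generator term, and WC to the cycle consistency term is an interpretation of the subscripts, and the dictionary keys are hypothetical names.

```python
# Weights from the text: Wp, W1, WLG, WG, WC = 0.5, 1, 0.1, 0.1, 1.
# The term names on the left are hypothetical labels chosen for this sketch.
WEIGHTS = {
    "perceptual": 0.5,    # Wp
    "l1": 1.0,            # W1
    "least_squares": 0.1, # WLG (assumed: content discriminator term)
    "wasserstein": 0.1,   # WG  (assumed: WGAN-GP generator term)
    "cycle": 1.0,         # WC  (assumed: cycle consistency term)
}


def total_generator_loss(terms, weights=WEIGHTS):
    """Weighted sum of the individual generator loss terms."""
    missing = set(weights) - set(terms)
    if missing:
        raise KeyError(f"missing loss terms: {sorted(missing)}")
    return sum(weights[name] * terms[name] for name in weights)


# Made-up loss values for one batch, for illustration only.
example_terms = {
    "perceptual": 2.0,
    "l1": 0.5,
    "least_squares": 1.0,
    "wasserstein": -3.0,
    "cycle": 0.2,
}
print(round(total_generator_loss(example_terms), 6))
```

With these weights, the pixel-level and cycle terms dominate, while the two adversarial terms act as smaller corrections.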

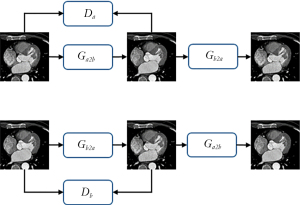

Throughout the process shown in Figure 3, all generators and discriminators used the adaptive moment estimation (Adam) optimizer, and the learning rate was set to 0.001 (30). The learning rate update strategy used was cosine annealing, and the maximum number of iterations was 25 (31). The hidden layer weight initialization method was the Kaiming method (32). The entire model was trained on an NVIDIA GeForce RTX 3090 (Nvidia, Santa Clara, CA, USA) on the Windows 10 operating system (Microsoft Corp., Redmond, WA, USA). During training, one pass over the entire data set constituted an epoch, and a total of 100 epochs were trained. During testing, Ga2b was used to correct motion artifacts in CCTA images, and corrected images without motion artifacts were output.
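The cosine annealing schedule mentioned above can be illustrated with its closed form (31). This sketch uses the stated initial learning rate of 0.001 and maximum of 25 iterations; the minimum learning rate of 0 and the unit of one step (epoch or batch) are assumptions, as the text does not give them.

```python
import math


def cosine_annealing_lr(step, base_lr=1e-3, t_max=25, eta_min=0.0):
    """Learning rate at `step` under cosine annealing without restarts.

    base_lr and t_max follow the text; eta_min = 0 is an assumed default.
    """
    return eta_min + 0.5 * (base_lr - eta_min) * (1.0 + math.cos(math.pi * step / t_max))


# Print the schedule at a few points between step 0 and step t_max.
for step in (0, 5, 10, 15, 20, 25):
    print(step, f"{cosine_annealing_lr(step):.6f}")
```

The rate starts at the initial value, falls slowly at first, fastest around the midpoint, and reaches the minimum at step 25.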

Data set

The CCTA images used in this study were clinical images collected by the Department of Radiology, Zhongnan Hospital of Wuhan University, on a Siemens SOMATOM Definition scanner (Siemens Healthineers, Erlangen, Germany) from December 2019 to October 2020. Clinicians used retrospective ECG gating technology to scan the cases and reconstructed 4 phases of images from the obtained data, 2 in diastole and 2 in systole. To focus the framework on correcting motion artifacts in CCTA images, we chose either the 2 diastolic-phase images or the 2 systolic-phase images as the data set. Of these, the CCTA images with motion artifacts were used as input data, and the clear CCTA images were used as ground-truth images. The data set included 60 patients with 3,410 pairs of two-dimensional (2D) CCTA images. Because the data set was small, we augmented it by rotating the images by −10°, −5°, and 5°, which quadrupled the amount of data and yielded a total of 13,640 image pairs.

Metrics

Clinical quantitative analysis index

We invited 2 radiologists from Zhongnan Hospital of Wuhan University to score the original, synthesized, and target images. We used the input image, corrected images generated by 5 methods, and the target image as a set. We randomly selected 50 sets of images in the test data set as the scoring data set. According to the effect of artifact correction, the scores ranged from 1 to 5. Scores of 1 to 3 indicated poor quality, and scores of 4 and 5 indicated better image quality.

Image quantitative analysis index

We used the PSNR, SSIM, and NMSE to analyze the images. The PSNR and SSIM are global image indicators that quantify noise and structural agreement, respectively. The NMSE is a dimensionless image index that compares the pixels of the corrected images with those of the target images.
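Two of these indicators are simple enough to sketch directly. The example below computes the PSNR and NMSE on flattened pixel lists using only the standard library; the NMSE definition used (squared error divided by the squared norm of the target) and the data range of 1.0 are common conventions assumed here, not details taken from this study.

```python
import math


def mse(pred, target):
    """Mean squared error between two equally sized pixel sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def psnr(pred, target, data_range=1.0):
    """Peak signal-to-noise ratio in dB; data_range is the maximum possible pixel value."""
    err = mse(pred, target)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(data_range ** 2 / err)


def nmse(pred, target):
    """Squared error normalized by the energy of the target (assumed definition)."""
    num = sum((p - t) ** 2 for p, t in zip(pred, target))
    den = sum(t ** 2 for t in target)
    return num / den


# Tiny made-up example: four pixels scaled to [0, 1].
target = [0.0, 0.5, 1.0, 0.5]
pred = [0.0, 0.5, 1.0, 0.0]
print(f"PSNR = {psnr(pred, target):.2f} dB, NMSE = {nmse(pred, target):.4f}")
```

Higher PSNR and lower NMSE both indicate that the corrected image is closer to the target image.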

The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the Institutional Ethics Committee of Zhongnan Hospital of Wuhan University. Informed consent was waived in this retrospective study.

Results

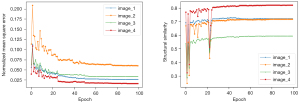

To monitor the stability of the model during the training process, we randomly selected 4 sets of images for use as supervised data. The curves of 2 indicators, the SSIM and NMSE, during the model training process are shown in Figure 4. In the first quarter of the training process, the curves converged quickly, after which the curves converged steadily throughout the remaining training process.

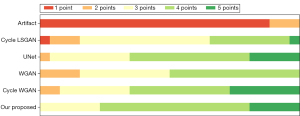

Table 1 shows the values of the image indicators on the test set for all methods. Among the methods, our proposed algorithm performed best in terms of PSNR and NMSE on the test set. Although cycle WGAN performed best in terms of SSIM, the SSIM difference between cycle WGAN and the proposed method was only 0.001. In addition, we invited 2 radiologists from Zhongnan Hospital of Wuhan University to rate the motion artifact-corrected images. Table 2 shows the statistical data of the radiologist scores for the corrected images. In Table 2, our proposed method received the highest total score. To observe the score distribution of each method more intuitively, when the 2 radiologists evaluated the same image, we selected the lower of the 2 scores, as shown in Figure 5. In Figure 5, no image processed by our proposed algorithm scored below 3 points, whereas the results from the other algorithms received scores of 2 points or even 1 point. In other words, our proposed algorithm was the most stable among the compared methods.

Table 1

| Method | PSNR (dB) | SSIM | NMSE |
|---|---|---|---|
| Artifact | 21.46±2.70 | 0.683±0.081 | 0.077±0.036 |
| Cycle LSGAN | 23.43±1.58 | 0.728±0.058 | 0.042±0.018 |
| UNet | 23.70±1.57 | 0.762±0.055 | 0.042±0.032 |
| WGAN | 24.41±1.73 | 0.767±0.055 | 0.035±0.021 |
| Cycle WGAN | 24.43±1.97 | 0.770±0.056 | 0.039±0.045 |
| Proposed | 24.96±1.54 | 0.769±0.055 | 0.031±0.023 |

PSNR, peak signal-to-noise ratio; SSIM, structural similarity; NMSE, normalized mean square error; LSGAN, least squares generative adversarial network; WGAN, Wasserstein generative adversarial network.

Table 2

| Method | Radiologist 1 | Radiologist 2 | Total |
|---|---|---|---|
| Artifact | 1.19±0.39 | 1.23±0.50 | 1.21±0.40 |
| Cycle LSGAN | 3.27±0.76 | 3.42±0.97 | 3.35±0.83 |
| UNet | 3.92±0.83 | 4.04±0.81 | 3.98±0.77 |
| WGAN | 3.35±0.73 | 3.88±0.80 | 3.62±0.72 |
| Cycle WGAN | 3.88±0.93 | 4.27±0.65 | 4.08±0.76 |
| Proposed | 4.19±0.62 | 4.04±0.71 | 4.12±0.61 |

LSGAN, least squares generative adversarial network; WGAN, Wasserstein generative adversarial network.

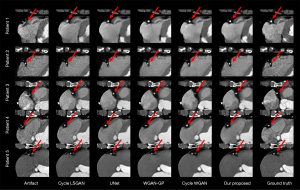

To observe the effects of motion artifact correction more intuitively, we compared the results of artifact correction using different methods among different patients. We randomly selected 5 patients and extracted the ROI of the right coronary artery. These regions are shown in Figure 6, in which the red arrows point to the coronary arteries in the CCTA images. The results showed that the correction effect of our proposed method was more stable than those of the other 4 methods.

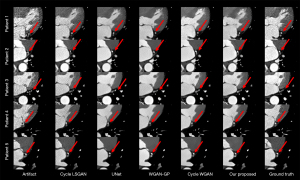

In addition, we randomly selected 5 patients and extracted images of the left circumflex coronary artery region, as shown in Figure 7. The left circumflex coronary artery is a smaller feature than the right coronary artery, and artifact correction in the left circumflex artery is more difficult than that in the right coronary artery. Nevertheless, most of the examined methods could correct the motion artifacts, and our proposed method achieved the most accurate correction.

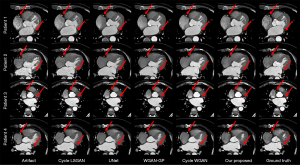

After viewing the images corrected for motion artifacts in the right coronary artery and left circumflex coronary artery, we examined additional images for motion artifact correction. We selected 4 images of patients with motion artifacts in both the right coronary artery and left circumflex coronary artery, as shown in Figure 8. In Figure 8, the right coronary artery of the third patient almost disappeared in the WGAN-GP and cycle WGAN results, while the other methods could correct the motion artifacts. Our proposed method could stably correct right coronary artery, left circumflex branch, and overall motion artifacts.

Discussion

In this study, we proposed using cycle WGAN-GP to correct motion artifacts in CCTA images. UNet, WGAN, and cycle GAN are relatively stable deep learning models that have many applications in magnetic resonance imaging (MRI), positron emission tomography (PET), and CT. We combined these models and added a content discriminator to construct a stable and convergent deep learning model. In our previous work using cycle LSGAN to correct motion artifacts in CCTA images, we found that using the entire image was better than extracting local coronary artery regions (26). Therefore, in this study, we also used the entire image for motion artifact correction. In addition, to maintain the overall characteristics of the images and provide more details in the coronary arteries, we used multiple loss functions to constrain the generator. The image indexes of CCTA images corrected by this method indicated a better performance than that of cycle LSGAN. In the clinical quantitative analysis, the total score of cycle WGAN-GP (4.12) was 0.77 higher than that of cycle LSGAN (3.35) and 0.04 higher than that of cycle WGAN (4.08). Apart from SSIM, for which cycle WGAN was higher by 0.001, all indexes indicated that our method was the best among those compared.

However, our method still has some limitations. First, because the left circumflex coronary artery has relatively few characteristic features, achieving model convergence was difficult. A small number of patients had severe left circumflex coronary artery motion artifacts that could not be corrected. Second, although correcting motion artifacts in the coronary arteries was our goal, the texture and edge information of the heart region is also very important. A deep learning method should preserve the texture and edge information of the heart while correcting motion artifacts, and algorithms for this purpose should be explored in future research. Third, the cycle WGAN-GP framework is very large, and it is difficult for ordinary machines to meet the training requirements. Although the hardware that we used is relatively advanced, it still had difficulty meeting the needs of network training.

Deep learning methods are very promising for artifact correction. In the task of correcting motion artifacts in CCTA images, our proposed cycle WGAN-GP was more stable than cycle LSGAN and performed better on small features. However, the proposed deep learning method should also more stably correct motion artifacts in the right coronary artery and left circumflex artery. In theory, deep learning methods can correct not only retrospective ECG-gated CCTA images, but also prospective ECG-gated CCTA images. However, whether motion artifacts in CCTA images collected by the prospective ECG gating technology can be corrected by deep learning has not been verified as no relevant data are currently available.

Conclusions

In conclusion, the cycle WGAN-GP proposed in this study could correct motion artifacts in CCTA images and is more stable than the general cycle WGAN-GP. In future work, the framework can also be used for other types of medical image processing. In addition, CCTA image motion artifact correction needs to be more stable, and appropriate algorithms for improving the overall and coronary artery characteristics are required.

Acknowledgments

Funding: This work was supported by the Shenzhen Excellent Technological Innovation Talent Training Project of China (No. RCJC20200714114436080) and the Natural Science Foundation of Guangdong Province in China (No. 2020A1515010733).

Footnote

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-20-1400/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the Institutional Ethics Committee of Zhongnan Hospital of Wuhan University. Informed consent was waived in this retrospective study.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Hoffmann U, Ferencik M, Cury RC, Pena AJ. Coronary CT angiography. J Nucl Med 2006;47:797-806. [PubMed]

- Nieman K, Rensing BJ, van Geuns RJ, Vos J, Pattynama PM, Krestin GP, Serruys PW, de Feyter PJ. Non-invasive coronary angiography with multislice spiral computed tomography: impact of heart rate. Heart 2002;88:470-4. [Crossref] [PubMed]

- Li Q, Du X, Li K. Related Methods and Applications to Eliminate Motion Artifact in MDCT Coronary Angiography Examination. China Medical Devices 2016;31:73-6.

- He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016:770-8.

- Lossau T, Nickisch H, Wissel T, Bippus R, Schmitt H, Morlock M, Grass M. Motion artifact recognition and quantification in coronary CT angiography using convolutional neural networks. Med Image Anal 2019;52:68-79. [Crossref] [PubMed]

- Lossau Née Elss T, Nickisch H, Wissel T, Bippus R, Schmitt H, Morlock M, Grass M. Motion estimation and correction in cardiac CT angiography images using convolutional neural networks. Comput Med Imaging Graph 2019;76:101640. [Crossref] [PubMed]

- Xie S, Zheng X, Chen Y, Xie L, Liu J, Zhang Y, Yan J, Zhu H, Hu Y. Artifact Removal using Improved GoogLeNet for Sparse-view CT Reconstruction. Sci Rep 2018;8:6700. [Crossref] [PubMed]

- Li X, Chen H, Qi X, Dou Q, Fu CW, Heng PA. H-DenseUNet: Hybrid Densely Connected UNet for Liver and Tumor Segmentation From CT Volumes. IEEE Trans Med Imaging 2018;37:2663-74. [Crossref] [PubMed]

- Ghodrati V, Shao J, Bydder M, Zhou Z, Yin W, Nguyen KL, Yang Y, Hu P. MR image reconstruction using deep learning: evaluation of network structure and loss functions. Quant Imaging Med Surg 2019;9:1516-27. [Crossref] [PubMed]

- Huang H, Lin L, Tong R, Hu H, Zhang Q, Iwamoto Y, Han X, Chen YW, Wu J. UNet 3+: A Full-Scale Connected UNet for Medical Image Segmentation. ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). 2020:1055-9.

- Guan S, Khan AA, Sikdar S, Chitnis PV. Fully Dense UNet for 2-D Sparse Photoacoustic Tomography Artifact Removal. IEEE J Biomed Health Inform 2020;24:568-76. [Crossref] [PubMed]

- Cai S, Tian Y, Lui H, Zeng H, Wu Y, Chen G. Dense-UNet: a novel multiphoton in vivo cellular image segmentation model based on a convolutional neural network. Quant Imaging Med Surg 2020;10:1275-85. [Crossref] [PubMed]

- Zhou Y, Huang W, Dong P, Xia Y, Wang S. D-UNet: A Dimension-Fusion U Shape Network for Chronic Stroke Lesion Segmentation. IEEE/ACM Trans Comput Biol Bioinform 2021;18:940-50.

- Gou M, Rao Y, Zhang M, Sun J, Cheng K. Automatic image annotation and deep learning for tooth CT image segmentation. International Conference on Image and Graphics. Springer, 2019:519-28.

- Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In: Navab N, Hornegger J, Wells WM, Frangi A. editors. Medical Image Computing and Computer-Assisted Intervention -- MICCAI 2015. Cham: Springer International Publishing, 2015:234-41.

- Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y. Generative adversarial nets. Advances in Neural Information Processing Systems 27 (NIPS 2014) 2014;27.

- Arjovsky M, Chintala S, Bottou L. Wasserstein generative adversarial networks. International conference on machine learning. PMLR; 2017:214-23.

- Wang Q, Zhou X, Wang C, Liu Z, Huang J, Zhou Y, Li C, Zhuang H, Cheng J. WGAN-Based Synthetic Minority Over-Sampling Technique: Improving Semantic Fine-Grained Classification for Lung Nodules in CT Images. IEEE Access 2019;7:18450-63.

- Zhou L, Schaefferkoetter JD, Tham IWK, Huang G, Yan J. Supervised learning with cyclegan for low-dose FDG PET image denoising. Med Image Anal 2020;65:101770. [Crossref] [PubMed]

- Negi A, Raj ANJ, Nersisson R, Zhuang Z, Murugappan M. RDA-UNET-WGAN: An Accurate Breast Ultrasound Lesion Segmentation Using Wasserstein Generative Adversarial Networks. Arab J Sci Eng 2020;45:6399-410. [Crossref]

- Ma J, Deng Y, Ma Z, Mao K, Chen Y. A Liver Segmentation Method Based on the Fusion of VNet and WGAN. Comput Math Methods Med 2021;2021:5536903. [Crossref] [PubMed]

- Gulrajani I, Ahmed F, Arjovsky M, Dumoulin V, Courville A. Improved training of Wasserstein GANs. arXiv:1704.00028v3, 2017.

- Zhang L, Chen Q, Jiang B, Ding Z, Zhang L, Xie X. Preliminary study on the removal of motion artifacts in coronary CT angiography based on generative countermeasure networks. Journal of Shanghai Jiaotong University (Medical Edition) 2020;40:1230-5.

- Liu Y, Chen A, Shi H, Huang S, Zheng W, Liu Z, Zhang Q, Yang X. CT synthesis from MRI using multi-cycle GAN for head-and-neck radiation therapy. Comput Med Imaging Graph 2021;91:101953. [Crossref] [PubMed]

- Harms J, Lei Y, Wang T, Zhang R, Zhou J, Tang X, Curran WJ, Liu T, Yang X. Paired cycle-GAN-based image correction for quantitative cone-beam computed tomography. Med Phys 2019;46:3998-4009. [Crossref] [PubMed]

- Deng F, Tie C, Zeng Y, Shi Y, Wu H, Wu Y, Liang D, Liu X, Zheng H, Zhang X, Hu Z. Correcting motion artifacts in coronary computed tomography angiography images using a dual-zone cycle generative adversarial network. J Xray Sci Technol 2021;29:577-95. [Crossref] [PubMed]

- Yu F, Koltun V. Multi-Scale Context Aggregation by Dilated Convolutions. arXiv:1511.07122v3 [cs.CV], 2016.

- Mao X, Li Q, Xie H, Lau RYK, Wang Z, Smolley SP. Least squares generative adversarial networks. 2017 IEEE International Conference on Computer Vision (ICCV) 2017:2813-21. doi: 10.1109/ICCV.2017.304.

- Johnson J, Alahi A, Fei-Fei L. Perceptual Losses for Real-Time Style Transfer and Super-Resolution. In: Leibe B, Matas J, Sebe N, Welling M. editors. Computer Vision – ECCV 2016. Cham: Springer International Publishing, 2016:694-711.

- Kingma DP, Ba J. Adam: A method for stochastic optimization. arXiv:1412.6980v9 [cs.LG], 2014.

- Loshchilov I, Hutter F. SGDR: Stochastic gradient descent with warm restarts. arXiv:1608.03983v5 [cs.LG], 2016.

- He K, Zhang X, Ren S, Sun J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. 2015 IEEE International Conference on Computer Vision (ICCV), 2015:1026-34.