Virtual reality-based surgical planning simulator for tumorous resection in FreeForm Modeling: an illustrative case of clinical teaching

Introduction

Medical theme

The treatment of bone tumors is particularly challenging because tumor cells progress, migrate, and disseminate quickly through intravasation and extravasation, with a high rate of recurrence. Surgery, namely intralesional curettage, is the best option for limb salvage (1). In conventional orthopedic tumor surgery, surgeons often integrate 2D and 3D surgical plans preoperatively and then translate these initial strategies to the operating room, for example through 3D printing (3DP)-assisted and computer-assisted tumor surgery, which delineate the soft tissue anatomy and extraosseous extension of orthopedic tumors. However, traditional planning can be so inaccurate that surgical outcomes are less than ideal even after cautious preparation. A preoperative strategy should therefore be mapped out by 3D computer-aided simulation to manage the complex operation. To realize our goal of individualized preplanning, our medical educators designed and performed a virtual operation in the software SensAble FreeForm Modeling (SFM). The purpose of this paper is to demonstrate our planning protocol for tumor intralesional curettage simulated with a surgical drill, and to present interns with a practical training platform in computer-aided engineering (CAE).

Virtual surgical planning (VSP) is a process that integrates CAE and computer-aided design (CAD) into treatment modalities by analyzing or visualizing anatomic images and performing virtual manipulations to reach idealized results. VSP defines surgical issues in 3D and creates practical plans that can be accurately replicated in the operating room, allowing surgeons to excise the lesional area exactly according to the template and enhancing patient satisfaction (2). This adds new dimensions to computational assistive tools, offering less costly and more accessible alternatives in orthopedic oncology and addressing the limitations of 3DP and current navigation. Multiview display and interaction with holographic models can supply surgeons with information on demand in real time and support them remotely at their workstation. These features have also been applied to oncologic surgery by localizing vital structures in complex transplantations (3). Direct overlay of the holographic models enables surgeons to “see through” to the internal anatomy of a patient while maintaining contact with physical reality, so that spatial localization beneath the skin is more understandable, enabling precise incisions or surgical access to the targeted tumors and mitigating surgical invasiveness. The complete workflow includes data acquisition, diagnosis, quantification, establishment of a preliminary plan, emulation, and determination of the final plan. Advances in the widely used SFM have allowed it to be applied to stereotactic navigation for implant placement, to the development of protocols for orthopedic professionals, and to the delivery of more than 140,000 patient-specific (PS) devices (4,5).

Educational theme

The delivery of medical education has changed with technological advancement, and virtual reality (VR) has been a popular option among educators around the world for decades because it keeps instructors in a lecturing mode and has a shallow learning curve. Slide-based lecturing can increase student interest when the lecture material is organized into slides that include effects such as sounds, animation, graphs, and colors. The shortcomings of this format are that learners may become overwhelmed by the volume of slides and that passive learning leaves little room for interaction, with a negative effect on student performance. Historically, lecture-based curricula have encouraged passive engagement, a teacher-centered approach in which teachers only deliver information and students acquire knowledge without conscious effort; although this approach is inexpensive and quick to produce, it does not stimulate critical thinking or active learning.

Over the past decades, surgical instruction has advanced from chalkboards to computer-assisted slideshows and beyond, because poor attendance has been observed for in-person lectures and high fees are associated with the acquisition, delivery, and maintenance of traditional cadavers. Additionally, logistical issues have led to a decline in cadaveric dissection; meanwhile, animal models are limited by anatomic variations and raise cultural and ethical concerns. In addition, to keep pace with advances in higher education, educators have begun to adapt their teaching patterns to engage students so that learners have ample opportunities to hone their skills. As quantitative and qualitative findings have shown that passive learning is not as effective as active learning, there is a need for other cost-effective opportunities for 3D learning, including interactive teaching modalities such as 3DP models. VR and augmented reality (AR) have been explored to address the limitations of traditional lectures with 2D graphics, with use in orthopedics and neurosurgery (3). SensAble products have also been used for decades to offer educational solutions that accelerate innovation.

Surgical techniques

Materials

A set of CT images of the hip joint was acquired in July 2022 from a randomly chosen 36-year-old male patient who had femoral sarcoma and was scheduled for tumor intralesional curettage at the Affiliated Jiangmen Traditional Chinese Medicine Hospital. All procedures in this study were in accordance with the ethical standards of Jinan University Committee (No. 2022YL03005) and with the Helsinki Declaration (as revised in 2013). Written informed consent was provided by the patient for publication of this article. A copy of the written consent is available for review by the editorial office of this journal. The equipment included a Siemens 64-row CT scanner (Erlangen, Germany), the software ProE-5.0 (Parametric Tech Corp, Boston, MA, USA) and Materialise Mimics-19 (Leuven, Belgium), and the haptic device Sensable FreeForm® Modeling Plus™ (SensAble™, Cambridge, MA, USA), which comes with a joystick, namely the Phantom (Premium 1.5 HF), for force feedback (4).

Methods

A virtual operation is composed of the following stages: (I) planning stage, in which a virtual resection of the hip is planned after discussion of the case; (II) modeling stage, in which an *.stl model and navigation are fabricated; and (III) surgical stage, in which lesional replacement is guided, as demonstrated in Figure 1.

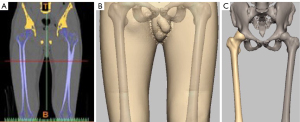

Reconstruction of femoral model

The dataset in Digital Imaging and Communications in Medicine (DICOM) format was imported into Mimics by the learners, with a threshold range of 1,250–2,732. The pelvis was separated by functions such as Thresholding, Plotting and Calculation, Erasure, Mask, Edit Mask, and Region Growing; a pair of femurs was then reconstructed and saved in *.stl format, as presented in Figure 2A. Similarly, the threshold range was set to 50–1,200 for the muscle and skin of the thigh, as shown in Figure 2B.
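The thresholding step can be illustrated with a minimal Python sketch. It is not the Mimics implementation; it only shows how an inclusive value window selects voxels. The two windows are the ranges quoted in the text (the first is assumed here to correspond to bone, since the femurs were reconstructed from it), while the function names and the demo voxel values are hypothetical.

```python
# Threshold windows quoted in the text, in the units of the software's threshold setting.
BONE_WINDOW = (1250, 2732)         # assumed to select bone (femur reconstruction)
SOFT_TISSUE_WINDOW = (50, 1200)    # muscle and skin of the thigh


def in_window(value, window):
    """Return True if value lies inside the inclusive (low, high) window."""
    low, high = window
    return low <= value <= high


def threshold_mask(voxels, window):
    """Return one boolean per voxel: True where the voxel falls inside the window."""
    return [in_window(v, window) for v in voxels]


# Hypothetical voxel values standing in for a flattened CT volume.
demo_voxels = [0, 60, 1200, 1249, 1250, 2000, 2732, 3000]

bone_mask = threshold_mask(demo_voxels, BONE_WINDOW)
soft_mask = threshold_mask(demo_voxels, SOFT_TISSUE_WINDOW)

# The two windows do not overlap, so no voxel is assigned to both masks.
overlap = [b and s for b, s in zip(bone_mask, soft_mask)]
```

In practice, such a mask is only the starting point; the manual editing and region-growing functions listed above are still needed to separate the femur from the pelvis.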

Creation of tumoral model

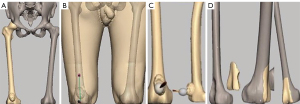

The abovementioned femoral model was imported to SFM. A bony tumor was emulated at the distal femur by the osteotomic toolkit and saved in *.stl as shown in Figure 2C.

Imitation of semantic model

The aforementioned femoral model was imported into SFM. A cortical window of the distal femur was excised with the osteotomic toolkit and saved in *.stl format to imitate the bone cement material, while the remainder was deleted, as Figure 3A demonstrates.

Maneuver of virtual operation

The model of the entire thigh with the bone tumor was imported into SFM, and the extent of the resection was determined preoperatively and expressed as a longitudinal distance from the malignant tumor to the resection planes according to the plan. A linear incision 20 cm in length was made along the center of the tumor with the virtual scalpel of the Phantom (Figure 3B) so that the tumor was exposed. The tumor was then removed along its contours by a sculpting technique called Carve (Figure 3C) after the expected resection margin had been measured with a ruler as per routine clinical practice; the bony defect was then virtually filled by an implant with bone cement and covered by the harvested vascularized pericranial flap (Figure 3D) (6).

Depending on their condition, bony defects can be repaired by the following approaches: (I) internal fixation with an interlocking nail plus allogeneic bone transplantation; (II) internal fixation with a steel plate and bone cement filling; (III) a prosthetic joint combined with allogeneic bone transplantation; (IV) autologous bone transplantation; or (V) other biological 3DP materials from tissue engineering (e.g., implants) (7). Previous studies have suggested implant frames as a template to recreate preoperative plans, whereby the total time for reference implant tracking, defect tracking, mounting, registration, and modification was approximately 15 minutes, of which 7.25 minutes were allotted to modifying the implant (7). This leaves room for unforeseen intraoperative modifications that would otherwise compromise an optimal fit between recipient and implant. The planned osteotomy was exported as a blueprint for implant design and fabrication and was further verified by importation into a VR model to check the match with the defect; a well-fitted custom implant requiring minimal resizing could then be delivered, and intraoperative guidance for tumor resection or implant placement could be derived from it.

Next, the exact size, shape, location, and outline of the desired osteotomy were obtained for designing the implant so that minimal resizing was required, representing a clear distinction from manual modification to remove excess material to fit the defects. The template should be intraoperatively placed and physically secured to the femur to allow legitimate duplication of the bony resection after excision of intraosseous masses. After all of these elements had been planned and verified preoperatively, we picked the PS model that showed the exact borders of the intraosseous tumor to plan a virtual osteotomy in VR preoperatively (8).

Demonstration of tutorial scenarios

We anticipate considerable room for preclinical and clinical studies of routine tutorials involving the removal of lesions, because the 100 learner participants included surgeons, senior residents, junior residents, and novices. Half of them were trained with VR models, whereas the remainder were trained conventionally; whether the outcome was satisfactory was evaluated by the degree of marginal precision upon excision of the lesions. By using Geomagic Freeform, standard manufacturing procedures were followed in designing the implants. Custom implants could be prefabricated and adjusted immediately after lesional resection to achieve the ideal size, contour, and appearance. Implant fabrication was initiated once surgeons approved the design involving the “resecting template”; this virtual template was then overlaid intraoperatively onto the patient’s real-time anatomy to guide lesional excision (9).

Case result

This study used Mimics to build a realistic and vivid tumor model of the thigh, the anatomic structure of which offers semitransparent visualization after processing; because the boundary between the tumor and other tissues is reasonably predictable, surgeons can intuitively make virtual contact with tumors, vessels, and organs. We created a stereoscopic 3D virtual workspace in which users can manipulate the PS multimodality data, with individual options for transparency and color, semiautomatic and manual segmentation, image fusion, and curved, linear, and volumetric measurements. The tumor resection was handled repeatably in SFM, whereby the surgical strategy was rehearsed to improve operative accuracy, and the learners experienced responsive force feedback, which is beneficial to surgical teaching programs (4). An osteosarcoma with a lesional size of 2.6 cm × 2.4 cm × 3.9 cm was “cut” virtually, and precise surgical margins were satisfactorily achieved by both proficient (e.g., surgeons and senior residents) and inexperienced (e.g., junior residents and novice interns) hands, for a total of 43 out of 50 learners. Even though most limb salvage surgeries can be carried out with conventional techniques, our VR-based navigation system deserves further attention.

This 3D thigh model was created through the processes of Comminuting and Regional Growth; its anatomic structure and gradation could be visualized, enlarged, shrunk, and rotated. According to the experience of the 50 participants, the virtual instruments supplied by Ghost SDK and Pro-E were indistinguishable from real ones and met the operative needs. Manipulated through Phantom Ghost, the operative devices and the human model interacted with each other in SFM, and the process of tumor incision was mimicked with high fidelity and distinct effect, as demonstrated in Figure 3B-3D (4). Cosman 2007, Hyltander 2002, and Prabhu 2010 reported that, by this protocol, the computerized plan could be consistently transferred to patients for orientation during surgery (10). This scenario defined the extent of the tumor repeatably and allowed more precise surgery; the operation was deemed a success, with advanced planning cited as a major factor, and we achieved satisfactory accuracy of complete tumor resection.

Another mechanism for giving students mock experience was the incorporation of emulations into the didactic curriculum. Trainees may “step” into the images, gain insights into anatomy, and explore various structures, with the feeling that they have become part of the VR environment. VR shows anatomy at higher magnification and in more detail than common radiologic images; trainees are thus fully immersed in the simulated environment and digital telemedicine, as if isolated from the physical world. Those trained with VR-based models achieved a success rate of 86%, higher than the 74% achieved by those trained conventionally (10,11).
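The reported rates can be checked with simple arithmetic. The sketch below assumes that each training arm contained 50 learners (half of the 100 participants); the 43 successes in the VR arm are taken from the case result, whereas the 37 successes in the conventional arm are not reported directly and are inferred here from the stated 74%. The function name is hypothetical.

```python
def success_rate(successes, total):
    """Return the success rate as a percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * successes / total


GROUP_SIZE = 50                 # half of the 100 learners in each arm

vr_successes = 43               # reported: 43 out of 50 learners
conventional_successes = 37     # inferred from the stated 74% of 50 (assumption)

vr_rate = success_rate(vr_successes, GROUP_SIZE)
conventional_rate = success_rate(conventional_successes, GROUP_SIZE)

# Absolute difference between the two arms, in percentage points.
difference = vr_rate - conventional_rate
```

Under these assumptions, the two arms differ by 12 percentage points, or 6 learners out of 50; no statistical test is implied by this calculation.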

Comments

Delivery modalities of innovation

In recent years, the VR technique has become important in medicine; it is so multidisciplinary that it can simulate anatomical features of bony structures as well as tumors, which facilitates the application of individualized VSP to anatomical research, medical education, and clinical practice. The current concept of VR is understood as “an artificial environment which is experienced through sensory stimuli, e.g., sights or sounds, and enables transition from a conventional 3D screen to interactive VR models” (12). VR is defined as any immersive simulated reality, and the terms “mixed reality” (MR) and AR essentially denote various degrees of VR (13,14). By nature, it provides a sense of embodiment and excels at presenting spatial relationships through stereoscopic displays, so that users can immerse themselves in dedicated planning environments and use self-location to view a model, interacting with it through direct manipulation (grabbing, moving, and turning objects) with the trigger button of the dominant controller. VR represents a promising tool for VSP in bony base tumors and can be used to guide the appropriate selection of approaches to reduce invasiveness.

VR has revolutionized the rendering of realistic scenes with definite control over visual and auditory sensations, in which the real world is fully replaced by virtual content. 3D reconstruction in VR format markedly enhances the visualization of tumors and the identification of their relation to cortical structures. VR allows the natural structures to be observed from all directions, potentially bringing key information, as well as superficial tissue landmarks and adjacent joints, into the surgeon’s view in real time and on demand, because adequate positioning and trajectory adjustment are critical factors for successful excision. It might even integrate computational navigation and 3DP surgery into the existing clinical workflow for orthopedic tumors of varying complexity (15). The VR-based visual platform enables lesions to be treated in 1 session while avoiding common workflow pitfalls such as implant tailoring; navigation is employed in limb salvage operations because it maximizes excision accuracy and preserves the adjacent joint. VR-based cinematic-rendered applications are also available for contacting remote experts online for advice in complicated operative situations. This indirectly brings expertise into the operating room to optimize workflow and shorten the training period for oncologic surgeons, because an integrated, unified platform is crucial to seamless collaboration among healthcare providers. Although remote collaboration in medicine is a relatively unexplored aspect of VR, it can reference images, 3D models, implants, or operative manuals to allow surgeons to make timely decisions while working heads-up or hands-free.

Recent advances in video card technology have included the development of headsets with high-quality visual displays that track 6 degrees of freedom (DOF), namely rotation (tilting sideways, tilting forwards and backwards, and rotating left to right) together with movement across all 3 planes, as required for “room-scale” use. Virtual simulation is a process that integrates computer-aided manufacturing (CAM) and CAD into surgical plans by visualizing and analyzing anatomy; it virtually performs manipulations to idealize operations and transfers the appropriate approaches to patients (16). VSP determines screw position and length to ensure bicortical placement away from the alveolar nerves and predetermines the distraction vector, after which cutting guides are fabricated to achieve precise cuts intraoperatively. A VR controller lets users feel the weight, movement, resistance, and pressure of an object as they try to grab it in VR, and can be used to generate templates or navigation for expedient recreation of the plan in the operating room. Such navigation-assisted surgery has been shown to improve implant alignment or survival in bone sarcoma.
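The 6 DOF tracked by a headset can be pictured as three translations plus three rotations. The following Python sketch is only an illustrative data structure, not the API of any headset or of SFM; the class name, field names, and units (metres and degrees) are assumptions.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Pose6DOF:
    """Hypothetical headset pose: three translations and three rotations."""
    # Translation along three axes (metres, assumed unit).
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    # Rotation about three axes (degrees, assumed unit).
    roll: float = 0.0    # tilting sideways
    pitch: float = 0.0   # tilting forwards and backwards
    yaw: float = 0.0     # rotating left to right

    def moved(self, dx=0.0, dy=0.0, dz=0.0):
        """Return a new pose translated by the given offsets."""
        return Pose6DOF(self.x + dx, self.y + dy, self.z + dz,
                        self.roll, self.pitch, self.yaw)

    def turned(self, droll=0.0, dpitch=0.0, dyaw=0.0):
        """Return a new pose rotated by the given angles, wrapped to [0, 360)."""
        return Pose6DOF(self.x, self.y, self.z,
                        (self.roll + droll) % 360.0,
                        (self.pitch + dpitch) % 360.0,
                        (self.yaw + dyaw) % 360.0)


def count_dof():
    """Count the independent quantities stored in a pose."""
    return len(fields(Pose6DOF))


# A user steps half a metre sideways and turns the head 90 degrees to one side.
start = Pose6DOF()
current = start.moved(dx=0.5).turned(dyaw=90.0)
```

A headset that tracks only the three rotation fields would be limited to 3 DOF; the translation fields are what make “room-scale” movement possible.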

The advent of VR through SFM supports alternative workflows for preoperative planning and reduces the need for intraoperative trial and error (3). These virtual platforms offer insight into location and metric components for decisions such as the drawing of pre-cutting lines for accurate operation. The ultimate goal of transferring surgical plans to patients is to position the displaced bony segments in anatomic alignment, which is accomplished by pre-drilling screw holes for fixation plates prior to osteotomy and then installing the pre-bent plates in these holes after completion of the osteotomies (17). The sculpting capability of SFM integrates a toolkit for complex processes such as drilling holes or specifying diameters. The undo function of SFM not only can reverse 20 processes but also allows the operative plan to be re-edited and the operative module to be fixed, which saves operative time because correct orientation reduces malposition. Advanced emulation platforms enable manipulation of a 3D model and observation of the osteotomy from various angles, whereas an *.stl model provides appropriately sized prosthetic components prior to surgery for the best fit, although limitations exist, such as in a previous case report of a single patient without quantitative measurement or statistical analysis (18,19).

Simulative teaching pattern

A multidisciplinary team may offer various perspectives on the risks and enhance preoperative preparation; VR presents promise not only in overcoming current obstacles but also in engaging learners. Our orthopedic oncologists have strived to deliver the best tutorial experiences by manipulating scenarios of tumorous resection, and this typical teaching drill experiment presented effective tutorials for surgical interns.

In recent years, other teaching modalities such as 3DP anatomical models and AR and VR simulation have been explored to augment the educational experience, leading to this explainable machine learning-powered tutorial platform on which trainees can practice and perfect surgical skills preoperatively. Emulation is at the forefront of modern education, and our study showed that novice learners were confident in completing an emulated task. Active learning in simulation is a student-centered approach that requires conscious effort in assigned activities. Haptic feedback is likely to be an integral part of the emulator, increasing trainee satisfaction, fidelity, and enthusiasm. These training programs focus on enhancing surgical skills in a patient-free environment. The advantages of box-trainer training (also called video-trainer training, which incorporates automated auditory and video-based instruction specific to the resection domain) over simulative training include (I) lower cost, as multiple trainees can be trained simultaneously in short courses, and (II) better realism. Nevertheless, emulative training appears to shorten operative time and improve the operating performance of surgical trainees with limited laparoscopic experience compared with box-trainer training. Those with intermediate experience and already proficient experts may not benefit significantly from simulator training, whereas the performance of novices in the operating theatre improved after they completed proficiency-based tutorial programs during resident education; such programs may also be useful for experienced surgeons, although the effect is not significant.

Many areas of medicine benefit from visualization and interaction through numerous training applications and pedagogic approaches (20-22). The greatest advantage of VR is its stereoscopic, immersive visualization, which supports intuition, exploration, and navigation during training and planning of image-guided therapy, and in which instructors can drive annotations and movements without explicit commands. Active-learning simulation involves active thinking, strengthens problem-solving skills, and even builds student empathy for patients prior to interaction. We demonstrated scenarios such as interventional training and personalized education for interns, in which the clinical scene could be manipulated and navigated. The posture of “guide on the side” is readily seen in active-learning pedagogies such as team-based learning (TBL) and problem-based learning (PBL), which have been popularized by universities, specifically in healthcare education. Both TBL and PBL have shown benefits in promoting communication, teamwork, and creativity; however, these pedagogies often disseminate theory that is not applied in real-world scenarios, a gap addressed by the approach called “practice substituting for teaching”.

Summary

This study provides evidence that VR can be used to plan and execute implant customization and placement efficiently, and to verify their safety, in a broad population undergoing single-stage orthopedic surgery. The user-friendliness of VR-based navigation enhances the accuracy of surgical margins in both inexperienced and experienced hands with minimal time and cost, engages students’ attention, facilitates higher learning, and enriches the active learning experience; thus, it will open a wide range of affordable applications in interventional navigation. VR is also a reliable method to facilitate interactive study with remote supervision, which creates a novel educational modality for educators to improve the delivery of tutorials and trainee engagement. The realistic feeling in virtual environments yields excellent teaching effects for clinicians; this style of “practice substituting for teaching” keeps pace with the times and is worthy of recommendation.

Acknowledgments

Funding: This work was supported by

Footnote

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-23-1151/coif). All authors report that this work was supported by the Doctoral Engine Project of Affiliated Jiangmen Hospital of TCM at Jinan University (2021), Medical Project in Healthy Field of Jiangmen Plan in Scientific Technology (No. 2022YL03005), and Guangdong-Hong Kong Technology Cooperation Funding Scheme (No. TCFS 2023), as well as study materials of CT/ProE-5.0/Mimics/FreeForm® Modeling Plus. The authors have no other conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. All procedures performed in this study were in accordance with the ethical standards of Jinan University Committee (No. 2022YL03005) and with the Helsinki Declaration (as revised in 2013). Written informed consent was provided by the patient for publication of this article. A copy of the written consent is available for review by the editorial office of this journal.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Kadam D. Limb salvage surgery. Indian J Plast Surg 2013;46:265-74.

- Singh GD, Singh M. Virtual Surgical Planning: Modeling from the Present to the Future. J Clin Med 2021;10:5655.

- Mofatteh M, Mashayekhi MS, Arfaie S, Chen Y, Mirza AB, Fares J, Bandyopadhyay S, Henich E, Liao X, Bernstein M. Augmented and virtual reality usage in awake craniotomy: a systematic review. Neurosurg Rev 2022;46:19.

- Lee MY, Chang CC, Ku YC. New layer-based imaging and rapid prototyping techniques for computer-aided design and manufacture of custom dental restoration. J Med Eng Technol 2008;32:83-90.

- Reinschluessel AV, Muender T, Salzmann D, Döring T, Malaka R, Weyhe D. Virtual Reality for Surgical Planning - Evaluation Based on Two Liver Tumor Resections. Front Surg 2022;9:821060.

- Grosch AS, Schröder T, Schröder T, Onken J, Picht T. Development and initial evaluation of a novel simulation model for comprehensive brain tumor surgery training. Acta Neurochir (Wien) 2020;162:1957-65.

- Migliorini F, La Padula G, Torsiello E, Spiezia F, Oliva F, Maffulli N. Strategies for large bone defect reconstruction after trauma, infections or tumour excision: a comprehensive review of the literature. Eur J Med Res 2021;26:118.

- Zawy Alsofy S, Nakamura M, Suleiman A, Sakellaropoulou I, Welzel Saravia H, Shalamberidze D, Salma A, Stroop R. Cerebral Anatomy Detection and Surgical Planning in Patients with Anterior Skull Base Meningiomas Using a Virtual Reality Technique. J Clin Med 2021;10:681.

- Mirchi N, Bissonnette V, Yilmaz R, Ledwos N, Winkler-Schwartz A, Del Maestro RF. The Virtual Operative Assistant: An explainable artificial intelligence tool for simulation-based training in surgery and medicine. PLoS One 2020;15:e0229596.

- Nagendran M, Gurusamy KS, Aggarwal R, et al. Virtual reality training for surgical trainees in laparoscopic surgery. Cochrane Database Syst Rev 2013;2013:CD006575.

- Pinter C, Lasso A, Choueib S, Asselin M, Fillion-Robin JC, Vimort JB, Martin K, Jolley MA, Fichtinger G. SlicerVR for Medical Intervention Training and Planning in Immersive Virtual Reality. IEEE Trans Med Robot Bionics 2020;2:108-17.

- Mofatteh M. Neurosurgery and artificial intelligence. AIMS Neurosci 2021;8:477-95.

- Heredia-Pérez SA, Harada K, Padilla-Castañeda MA, Marques-Marinho M, Márquez-Flores JA, Mitsuishi M. Virtual reality simulation of robotic transsphenoidal brain tumor resection: Evaluating dynamic motion scaling in a master-slave system. Int J Med Robot 2019;15:e1953.

- Sugahara K, Koyachi M, Koyama Y, Sugimoto M, Matsunaga S, Odaka K, Abe S, Katakura A. Mixed reality and three dimensional printed models for resection of maxillary tumor: a case report. Quant Imaging Med Surg 2021;11:2187-94.

- Peek JJ, Sadeghi AH, Maat APWM, Rothbarth J, Mureau MAM, Verhoef C, Bogers AJJC. Multidisciplinary Virtual Three-Dimensional Planning of a Forequarter Amputation With Chest Wall Resection. Ann Thorac Surg 2022;113:e13-6.

- Yilmaz R, Winkler-Schwartz A, Mirchi N, Reich A, Christie S, Tran DH, Ledwos N, Fazlollahi AM, Santaguida C, Sabbagh AJ, Bajunaid K, Del Maestro R. Continuous monitoring of surgical bimanual expertise using deep neural networks in virtual reality simulation. NPJ Digit Med 2022;5:54.

- Nagendran M, Gurusamy KS, Aggarwal R, Loizidou M, Davidson BR. Virtual reality training for surgical trainees in laparoscopic surgery. Cochrane Database Syst Rev 2013;2013:CD006575.

- Coyne L, Merritt TA, Parmentier BL, Sharpton RA, Takemoto JK. The Past, Present, and Future of Virtual Reality in Pharmacy Education. Am J Pharm Educ 2019;83:7456.

- Bui I, Bhattacharya A, Wong SH, Singh HR, Agarwal A. Role of Three-Dimensional Visualization Modalities in Medical Education. Front Pediatr 2021;9:760363.

- Rhu J, Lim S, Kang D, Cho J, Lee H, Choi GS, Kim JM, Joh JW. Virtual reality education program including three-dimensional individualized liver model and education videos: A pilot case report in a patient with hepatocellular carcinoma. Ann Hepatobiliary Pancreat Surg 2022;26:285-8.

- Bube SH, Kingo PS, Madsen MG, Vásquez JL, Norus T, Olsen RG, Dahl C, Hansen RB, Konge L, Azawi N. National Implementation of Simulator Training Improves Transurethral Resection of Bladder Tumours in Patients. Eur Urol Open Sci 2022;39:29-35.

- Liu T, Chen K, Xia RM, Li WG. Retroperitoneal teratoma resection assisted by 3-dimensional visualization and virtual reality: A case report. World J Clin Cases 2021;9:935-42.