Segmentation of the prostate, its zones, anterior fibromuscular stroma, and urethra on the MRIs and multimodality image fusion using U-Net model

Introduction

Prostate cancer, the second most common malignancy among men, is the fifth leading cause of cancer mortality worldwide (1). Detecting prostate cancer at an earlier stage greatly increases the probability of successful treatment. When there is reasonable suspicion of prostate cancer based on an abnormal digital rectal examination (DRE) or an elevated prostate-specific antigen (PSA) level, patients are likely to be referred for pre-biopsy MRI (2).

Although imaging procedures help diagnose prostate cancer, their primary use is in tumor staging, and more than one imaging procedure is usually needed to create an effective treatment plan. Owing to its wide availability, transrectal ultrasound (TRUS)-guided biopsy of the prostate is used for detecting and staging prostate cancer. However, 40% or more of prostate cancers are isoechoic, which limits the diagnostic accuracy of TRUS for detection and staging (3,4). Moreover, a hypoechoic mass in the peripheral zone (PZ) is not specific to prostate cancer and can also be present in benign prostatic hyperplasia (BPH) (3). For patients diagnosed with intermediate- and high-risk prostate cancer, guidelines suggest disease staging with computed tomography (CT) or MRI (5,6). Unfortunately, these procedures understage tumors in most cases of metastasis: because anatomical imaging procedures such as CT and MRI depend on morphological features, lesions smaller than 8–10 mm in diameter are often missed (7). Hence, even with contrast enhancement, CT may be of limited value for local staging of prostate cancer (8), and it lacks the soft-tissue resolution required to detect prostate cancer (9). MRI is rapidly developing as a non-invasive procedure that can provide comprehensive information on the structure of prostate cancer (10). It depicts the zones of the prostate gland with excellent soft-tissue resolution and is useful for detection, staging, and follow-up after treatment (11). T2-weighted (T2W) fast spin-echo imaging is widely used to evaluate and depict prostate anatomy (12). The bulk of the prostate gland has relatively uniform signal intensity on T1-weighted (T1W) images, so the prostate zones cannot be clearly identified on T1W images (13), whereas T2W images allow anatomical visualization of the prostate and its zones.
On T2W images, the normal PZ shows uniformly high signal intensity because of the water content of its glandular structures, and tumors are commonly hypointense relative to the glandular PZ. However, PZ hypointensity is not in itself specific to prostate cancer; its differential diagnosis includes hemorrhage, prostatitis, and BPH (14). Dynamic contrast-enhanced MRI (DCE-MRI) provides minimally invasive visualization of tumor angiogenesis. The sequence comprises T1-weighted fast gradient-echo images of the prostate acquired before, during, and after intravenous injection of a low-molecular-weight gadolinium chelate. A limitation of DCE-MRI is the difficulty of reproducing results across centers. The potential of MRI for prostate imaging is increased by combining anatomical (T2W) imaging with functional techniques such as diffusion-weighted imaging (DWI) and apparent diffusion coefficient (ADC) maps generated from DW images (15). Compared with the other techniques, DWI has the advantages of a short acquisition time and no need for intravenous contrast. DWI measures the restriction of water diffusion in biological tissues, which reflects cellular density, membrane permeability, and intercellular space (16). Because of their high cell density, cancerous tissues tend to show more restricted diffusion than normal tissues. Several studies have assessed the value of DWI-derived ADC in characterizing prostate cancer aggressiveness (17-19). In an ADC map, low ADC values indicate restricted diffusion, whereas higher ADC values arise from tissues with relatively free diffusion (20). Combining ADC and T2W images with fusion algorithms has been shown to significantly improve the detection of prostate cancer and the delineation of its boundary compared with T2W images alone (21). Image fusion merges relevant information from a set of images into a single image that is more informative and complete than any of the input images.
More precisely, a fused image integrates information from multiple images into a single image without introducing distortion. Therefore, fused images do not show artifacts or discrepancies (19). In summary, the purpose of image fusion is to obtain better contrast, fusion quality, and perceptual experience. The fused image should meet two conditions: it should fully contain the information of the source images, and undesirable effects such as misregistration and noise should be avoided.

Accurate and reliable segmentation of the prostate zones plays a fundamental role in image analysis tasks such as cancer detection, patient management, and radiotherapy treatment planning (22-25). Manual segmentation by radiologists is the principal procedure for generating ground truth and is performed almost entirely by visual inspection on a slice-by-slice basis. However, because of the large variability of the prostate gland across patient groups, this approach is time-consuming and subject to inter- and intra-reader variation (26). Becker et al. (27) systematically investigated inter-reader variability in prostate and seminal vesicle (SV) segmentation as a function of reader expertise; variability was highest in the apex, lower in the base, and lowest in the midgland. Chen et al. (28) assessed variation in prostate cancer segmentation among different users: four consultant radiologists, four consultant urologists, four urology trainees, and four non-clinician segmentation scientists were asked to segment prostate tumors on MRI. Variance in segmentation was high among the radiologists, and less experienced participants tended to under-segment and underestimate the size of prostate tumors. Hence, a more accurate, reliable, and robust segmentation technique is needed (27,29).
Machine learning (ML) models, particularly deep learning (DL) models (30), have the potential to overcome the limitations of manual segmentation by providing robust automatic segmentation (29,31,32). Early attempts at automatic prostate delineation relied on edge- or region-based models. These methods incorporate prior knowledge or use atlas-based segmentation (33,34), fitting selected regions of a target image to chosen reference images. However, their performance depends on the atlas selection process and on how well the regions are selected (35). DL procedures, notably recent convolutional neural networks (CNNs), have since been used to develop automated segmentation models, with significant improvements in performance (36,37). For pixel-wise segmentation, DL procedures can learn rich features and offer a computational advantage over atlas-based strategies (36,38). In this study, we implemented a U-Net network (36) and analyzed its performance for the automatic segmentation of the prostate and its zones, including the PZ, transitional zone (TZ), anterior fibromuscular stroma (AFMS), and urethra, on MRI (T2W, DWI, and ADC) and on multimodality fused images. While DL models have been applied separately to T2W, DWI, and ADC images in previous studies (25,39-41), our main contribution is a comparison of segmentation performance when the MRI sequences are used separately and when they are combined with fusion algorithms. To our knowledge, this is the first study evaluating segmentation of the entire prostate gland and its zones using multimodality image fusion with a U-Net-based network. We present this article in accordance with the TRIPOD reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-22-115/rc).

Methods

Dataset

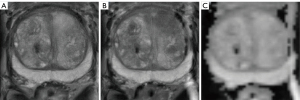

The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). Data were obtained from the publicly available PROSTATEx Zone Segmentations dataset (42-45). Of 98 cases, 91 eligible patients were retrospectively identified; seven cases were excluded because the number of slices differed among the three sequences used in this study (T2W, DWI, and ADC). The four-class segmentation encompasses the PZ, TZ, AFMS, and urethra, with transversal T2W scans as the underlying images. A medical student experienced in prostate segmentation performed the 3D segmentations, and an expert urologist supervised the student and double-checked the final segmentations. Fifty patients were used for training in a 10-fold cross-validation scheme and 41 for external testing; the test data were held out of the training process at all times. In each fold, 90% of the 50 patients (45 patients) were used to train the algorithms and 10% (5 patients) to validate the training process. Within each fold, all slices from a given patient were assigned to only one of the training, validation, and test sets. All MRI examinations, including T2W, DWI, and ADC, were acquired in the axial orientation. DW images were acquired at three b-values (50, 400, and 800 s/mm²), and the ADC map was calculated by the scanner software. The MRI parameters are summarized in Table 1. In our study, the image masks were labeled into five classes: PZ, TZ, AFMS, urethra, and whole prostate. All classes were annotated by hand in every slice, distinguishing between the prostate zones as shown in Figure 1.
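The patient-level split described above can be illustrated with a short sketch. It is not the authors' code: the function name `patient_level_folds`, the random seeds, and the integer patient IDs are hypothetical, and the 50/41 partition is drawn at random only for illustration. Assigning whole patients, rather than individual slices, to folds is what keeps the slices of one patient out of both the training and validation sets of the same fold.

```python
import numpy as np

def patient_level_folds(patient_ids, n_folds=10, seed=0):
    """Yield (train_ids, val_ids) pairs for k-fold cross-validation at patient level.

    Every slice inherits its patient's assignment, so no patient contributes
    slices to both the training and validation sets of the same fold.
    """
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(np.asarray(patient_ids))
    folds = np.array_split(shuffled, n_folds)
    for k in range(n_folds):
        val_ids = folds[k]
        train_ids = np.concatenate([f for i, f in enumerate(folds) if i != k])
        yield train_ids, val_ids

# Hypothetical IDs: 91 patients, 50 for cross-validation and 41 held out for testing.
all_ids = np.arange(91)
order = np.random.default_rng(1).permutation(all_ids)
cv_ids, test_ids = order[:50], order[50:]
```

With 50 cross-validation patients and ten folds, each fold uses 45 patients for training and 5 for validation, matching the proportions stated above.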

Table 1

| Sequence | TR/TE (ms) | Flip angle (°) | Slice thickness (mm) | Matrix size (pixels) | Pixel bandwidth (Hz/pixel) | Voxel size (mm) |
|---|---|---|---|---|---|---|
| T2W | 6,840/104 | 160 | 3 | 320×320 | 200 | 0.5×0.5×3 |
| DWI and ADC | 2,700/63 | 90 | 3 | 128×84 | 1,500 | 2×2×3 |

T2W, T2-weighted image; DWI, diffusion-weighted imaging; ADC, apparent diffusion coefficient; TR/TE, repetition time/echo time.
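The ADC maps were produced by the scanner software, whose exact fitting method is not stated. A common approach, assumed here, is a voxel-wise least-squares fit of the mono-exponential model S(b) = S0·exp(-b·ADC), that is, a straight-line fit of ln S(b) against b over the three b-values used in this dataset (50, 400, and 800 s/mm²). The function name `adc_map` is hypothetical.

```python
import numpy as np

def adc_map(dwi, b_values, eps=1e-6):
    """Voxel-wise ADC from a stack of DW images (first axis = b-value).

    Fits ln S(b) = ln S0 - b * ADC by ordinary least squares in each voxel.
    The result is in mm^2/s when b is given in s/mm^2.
    """
    b = np.asarray(b_values, dtype=float)
    # Clamp to a small positive value so the logarithm is defined in background voxels.
    log_s = np.log(np.maximum(np.asarray(dwi, dtype=float), eps))
    b_centred = b - b.mean()
    # Reshape the b-value weights so they broadcast over the spatial axes.
    weights = b_centred.reshape((-1,) + (1,) * (log_s.ndim - 1))
    slope = np.sum(weights * (log_s - log_s.mean(axis=0)), axis=0) / np.sum(b_centred ** 2)
    return -slope  # the fitted slope is -ADC
```

Low fitted values correspond to restricted diffusion, which is why tumors tend to appear dark on ADC maps.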

Preprocessing

Because the T2W, DWI, and ADC images used in this work were acquired under different conditions, acquisition and reconstruction parameters varied across modalities. Voxel size and acquisition matrix are two important MRI parameters that vary significantly between protocols and institutions. In our study, the acquisition matrix ranged from 320×320 to 128×84 and the in-plane voxel size from 0.5 to 2 mm across modalities. Hence, image registration, resampling, and other preprocessing steps were necessary to work with images of different dimensions. The preprocessing methods are detailed in the following subsections.

Registration

Because of differences in acquisition protocols, the images had different matrix sizes (ranging from 320×320 to 128×84), whereas DL architectures usually require inputs of the same size. Hence, in the first preprocessing step, the images were aligned by rigid registration using in-house code based on the SimpleITK Python package. Masi et al. (46) compared rigid and deformable registration between multiparametric magnetic resonance imaging (mpMRI) and CT images for prostate cancer and found rigid coregistration to be sufficiently accurate compared with deformable registration. The registration parameters were as follows: similarity metric, mutual information (MI); interpolation, linear; optimizer, stochastic gradient descent (SGD) with a learning rate of 0.01 and 100 iterations. Finally, all MRI volumes were resampled to a resolution of 0.5×0.5×3 mm³.

Cropping

A major challenge in prostate MRI segmentation is class imbalance: the prostate occupies a relatively small volume compared with the background, which leads to poor prostate segmentation. To tackle class imbalance and prevent background pixels from dominating prostate pixels, the images were cropped to a bounding box of the prostate. The bounding box was large enough to cover the largest prostate gland and small enough to fit in GPU memory for efficient processing. Cropping was performed with a 160×160×160 mm³ bounding box using the Python code available at https://github.com/voreille/hecktor. Figure 2 shows an example image and its corresponding mask before and after cropping.
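The cropping step can be sketched in NumPy as follows. This is not the code from the linked hecktor repository: the function name `crop_to_box` and the choice to center the box on the centroid of the prostate mask are assumptions made for illustration. A 160 mm box spans 320 voxels at 0.5 mm in-plane spacing; along the slice axis (3 mm) it would span about 53 slices, so shorter volumes are kept whole.

```python
import numpy as np

def crop_to_box(image, mask, spacing, box_mm=(160.0, 160.0, 160.0)):
    """Crop an image and its mask to a fixed physical box centered on the mask.

    image, mask: arrays indexed (z, y, x); spacing: voxel size (z, y, x) in mm.
    Axes shorter than the box are kept whole.
    """
    centre = np.argwhere(mask > 0).mean(axis=0).round().astype(int)
    slices = []
    for c, box, sp, dim in zip(centre, box_mm, spacing, image.shape):
        size = min(int(round(box / sp)), dim)                # box extent in voxels
        start = int(np.clip(c - size // 2, 0, dim - size))  # keep the box inside the volume
        slices.append(slice(start, start + size))
    slices = tuple(slices)
    return image[slices], mask[slices]
```

Centering on the ground-truth mask is only possible when a mask is available; at inference time, a fixed center or a coarse prostate localization step would be needed instead.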

Image fusion

For segmentation, fused images were used in addition to the T2W, DWI, and ADC images individually. Three combinations were considered: T2W + DWI, T2W + ADC, and DWI + ADC. Medical image fusion is widely used to capture complementary information from different imaging techniques and thereby support diagnosis. Owing to properties such as perfect reconstruction, decomposition of image data into high-frequency components, and fast algorithms, wavelet-based image fusion has produced favorable results in previous studies (47,48). Therefore, fusion was performed with the wavelet transform using in-house Python code. Figure 3 shows the three types of fused images.
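The in-house fusion code is not described in detail, so the sketch below shows one common wavelet fusion rule under stated assumptions: a single-level 2D Haar transform, averaging of the approximation (low-frequency) coefficients, and selection of the larger-magnitude detail (high-frequency) coefficient at each position. The Haar transform is written out in NumPy to keep the example self-contained; PyWavelets (`pywt.dwt2` and `pywt.idwt2`) would be the usual library route. The function names are hypothetical, and the two slices are assumed to be co-registered and intensity-normalized to comparable ranges.

```python
import numpy as np

def haar_dwt2(x):
    """Single-level 2D orthonormal Haar transform of an array with even sides."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    approx = (a + b + c + d) / 2
    details = ((a - b + c - d) / 2,   # differences across columns
               (a + b - c - d) / 2,   # differences across rows
               (a - b - c + d) / 2)   # diagonal differences
    return approx, details

def haar_idwt2(approx, details):
    """Inverse of haar_dwt2."""
    h, v, g = details
    x = np.empty((2 * approx.shape[0], 2 * approx.shape[1]))
    x[0::2, 0::2] = (approx + h + v + g) / 2
    x[0::2, 1::2] = (approx - h + v - g) / 2
    x[1::2, 0::2] = (approx + h - v - g) / 2
    x[1::2, 1::2] = (approx - h - v + g) / 2
    return x

def wavelet_fuse(img1, img2):
    """Fuse two co-registered 2D slices: average the approximation
    coefficients and keep the larger-magnitude detail coefficient."""
    rows, cols = img1.shape
    pad = ((0, rows % 2), (0, cols % 2))  # the Haar transform needs even sides
    a1, d1 = haar_dwt2(np.pad(np.asarray(img1, float), pad, mode="edge"))
    a2, d2 = haar_dwt2(np.pad(np.asarray(img2, float), pad, mode="edge"))
    fused_details = tuple(np.where(np.abs(p) >= np.abs(q), p, q)
                          for p, q in zip(d1, d2))
    fused = haar_idwt2((a1 + a2) / 2, fused_details)
    return fused[:rows, :cols]
```

Averaging the low-frequency band keeps the overall intensity balanced between the two inputs, while the max-magnitude rule preserves the sharper edges from whichever image contains them.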

Architecture of the proposed model and image augmentation

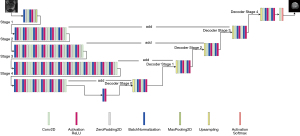

We applied the U-Net model to the T2W, DWI, ADC, and fused images for segmentation. The U-Net mainly comprises an encoder network and a decoder network. The encoder progressively reduces the spatial size of the input image, producing lower-resolution (thumbnail-like) representations from which deeper image features are extracted (49). Skip connections are added to the encoder-decoder network to concatenate low- and high-level features. In the encoder, convolutional filters extract features from the input image, learning low- and high-level features as training iterates; the segmentation mask is then obtained by upsampling in the decoder. Feature learning in the segmentation network depends on the learned filter weights, the downsampling/upsampling blocks, and the skip connections. The backbone is the main architectural component that determines how layers are arranged in the encoder and, correspondingly, built in the decoder (50). In this study, ResNet34 was used as the backbone of the U-Net (U-Net with ResNet34). The proposed network architecture is shown in Figure 4, and details of the model are given in Table S1. During training, data augmentation was used to reduce overfitting (51): vertical flips (flipud, up-down), horizontal flips (fliplr, left-right), and rotations were applied to the input training slices. The results of the augmentation applied to an image and its label are shown in Figure 5.
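Because each augmentation must be applied identically to a slice and its label mask, the step can be sketched as a joint transform. The flip probabilities of 0.5 and the restriction of rotations to multiples of 90° are our assumptions, since the rotation angles are not reported; arbitrary angles would typically use `scipy.ndimage.rotate` with nearest-neighbor interpolation (`order=0`) for the mask. The function name `augment` is hypothetical.

```python
import numpy as np

def augment(image, mask, rng):
    """Apply the same random flips and rotation to a 2D slice and its label mask."""
    if rng.random() < 0.5:
        image, mask = np.flipud(image), np.flipud(mask)   # flip up-down
    if rng.random() < 0.5:
        image, mask = np.fliplr(image), np.fliplr(mask)   # flip left-right
    k = int(rng.integers(0, 4))                           # rotate by 0, 90, 180 or 270 degrees
    image, mask = np.rot90(image, k), np.rot90(mask, k)
    return image, mask
```

Restricting rotations to multiples of 90° avoids interpolation entirely, so integer label values in the mask are preserved exactly.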

Training, loss function, and evaluation metric

The optimizer was Adam (52) with an initial learning rate α=0.0008, selected by trial and error. The learning rate was reduced with a step-decay schedule using the parameters initial alpha = 0.0008, factor = 0.9, and drop every = 30 epochs.
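With the parameters above, a step-decay schedule can be sketched as follows. The exact equation is not given in the text, so the common form alpha = init_alpha × factor^floor(epoch / drop_every), with 0-based epochs, is an assumption (some implementations use floor((1 + epoch) / drop_every) instead). The function name `step_decay` is hypothetical.

```python
import math

def step_decay(epoch, init_alpha=0.0008, factor=0.9, drop_every=30):
    """Learning rate for a 0-based epoch under step decay:
    alpha = init_alpha * factor ** floor(epoch / drop_every)."""
    return init_alpha * factor ** math.floor(epoch / drop_every)
```

In Keras, such a function could be passed as `tf.keras.callbacks.LearningRateScheduler(lambda epoch: step_decay(epoch))`; the lambda matters because Keras may otherwise pass the current learning rate as a second positional argument, which `step_decay` would interpret as `init_alpha`.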

Training ran for 100 epochs with a batch size of 8 and early stopping. The loss function was a mixed loss combining Dice loss and focal loss (31,53).

In this loss, TP, FP, and FN denote the numbers of true positives, false positives, and false negatives, respectively, computed from the predicted probabilities and the ground truth; C is the number of anatomies plus one (background); λ is the trade-off between Dice loss and focal loss; α and β are the trade-offs of the penalties for FNs and FPs; and N is the total number of voxels in the MRI. α, β, and λ were all set to 0.5.
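A NumPy sketch of one common Dice-plus-focal formulation consistent with the symbols defined above: the Dice term is C minus the sum over classes of TP / (TP + α·FN + β·FP), using soft counts from the predicted probabilities, and the focal term is averaged over the N voxels. The focusing exponent γ = 2 and the small constant `eps` are our assumptions, and the function name is hypothetical; for training, the same expression would be written with TensorFlow operations so that it can be differentiated.

```python
import numpy as np

def mixed_dice_focal_loss(probs, onehot, alpha=0.5, beta=0.5, lam=0.5,
                          gamma=2.0, eps=1e-7):
    """Soft Dice loss plus lam * focal loss.

    probs:  (N, C) predicted class probabilities for N voxels and C classes
            (anatomies plus background); each row sums to 1.
    onehot: (N, C) one-hot ground truth.
    """
    # Soft (probability-weighted) counts per class.
    tp = np.sum(probs * onehot, axis=0)
    fn = np.sum((1.0 - probs) * onehot, axis=0)
    fp = np.sum(probs * (1.0 - onehot), axis=0)
    n_voxels, n_classes = probs.shape
    # With alpha = beta = 0.5, each ratio equals the soft Dice coefficient of a class.
    dice_loss = n_classes - np.sum(tp / (tp + alpha * fn + beta * fp + eps))
    # Focal loss: cross-entropy down-weighted for voxels that are already well classified.
    focal_loss = -np.sum(onehot * (1.0 - probs) ** gamma * np.log(probs + eps)) / n_voxels
    return dice_loss + lam * focal_loss
```

With α = β = 0.5, each ratio reduces to 2TP / (2TP + FN + FP), so the Dice term approaches zero when every class is predicted perfectly.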

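For training, we performed ten-fold cross-validation: the data were split into ten parts, and in each fold nine parts were used for training and the remaining part for validation. All experiments were performed on the Google Cloud computing service “Google Colab” with a Tesla K80 GPU (16 GB memory) using TensorFlow version 2.4.1. The performance of the proposed model was evaluated with the dice score (DSC), intersection over union (IoU), precision, recall, and Hausdorff distance (HD), defined as follows:

$$\mathrm{DSC}=\frac{2\,TP}{2\,TP+FP+FN},\qquad \mathrm{IoU}=\frac{TP}{TP+FP+FN}$$

$$\mathrm{Precision}=\frac{TP}{TP+FP},\qquad \mathrm{Recall}=\frac{TP}{TP+FN}$$

$$HD(X,Y)=\max\left\{\sup_{x\in X}\inf_{y\in Y}d(x,y),\ \sup_{y\in Y}\inf_{x\in X}d(x,y)\right\}\qquad[9]$$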

In Eq. [9], X and Y are the sets of all points within the manual segmentation and the automatic U-Net segmentation, respectively, and d is the Euclidean distance. The HD is a non-negative real number; smaller values indicate better-matching segmentations, and it is zero only when the two point sets coincide.
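The metrics can be computed from binary masks as sketched below. Following the definition above, HD is taken over all foreground voxels of the two masks (not only their surfaces), using SciPy's `directed_hausdorff` in both directions. The function names are hypothetical, the masks are assumed to be non-empty, and passing a voxel spacing converts the distance to physical units.

```python
import numpy as np
from scipy.spatial.distance import directed_hausdorff

def overlap_metrics(pred, truth):
    """DSC, IoU, precision, and recall for two binary masks of the same shape."""
    pred, truth = np.asarray(pred, bool), np.asarray(truth, bool)
    tp = np.sum(pred & truth)
    fp = np.sum(pred & ~truth)
    fn = np.sum(~pred & truth)
    return {
        "DSC": 2 * tp / (2 * tp + fp + fn),
        "IoU": tp / (tp + fp + fn),
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
    }

def hausdorff(pred, truth, spacing=None):
    """Symmetric Hausdorff distance between the foreground voxels of two masks.
    With `spacing`, voxel indices are scaled to physical units (e.g. mm)."""
    x = np.argwhere(truth).astype(float)   # manual segmentation, X
    y = np.argwhere(pred).astype(float)    # automatic segmentation, Y
    if spacing is not None:
        x *= spacing
        y *= spacing
    return max(directed_hausdorff(x, y)[0], directed_hausdorff(y, x)[0])
```

For large 3D masks, restricting X and Y to boundary voxels reduces computation, but that variant can give a different value from the all-points definition used here.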

Data were analyzed using GraphPad Prism (GraphPad, USA). The D’Agostino test was used to assess the normality of the data, and one-way analysis of variance (ANOVA) was performed to compare signal intensity among the sequences and the fused images. P<0.05 was considered statistically significant.
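The analysis itself was performed in GraphPad Prism; an equivalent analysis in Python could look like the sketch below, which uses SciPy's D'Agostino-Pearson normality test (`scipy.stats.normaltest`) and one-way ANOVA (`scipy.stats.f_oneway`). Summarizing each image by its mean signal intensity and the function name `compare_intensities` are assumptions.

```python
import numpy as np
from scipy import stats

def compare_intensities(groups, alpha=0.05):
    """D'Agostino-Pearson normality test per group, then one-way ANOVA across groups.

    groups: dict mapping a sequence name (e.g. 'T2W') to a 1D array of
    per-image mean signal intensities.
    """
    normality = {name: stats.normaltest(np.asarray(values)).pvalue
                 for name, values in groups.items()}
    f_stat, p_value = stats.f_oneway(*groups.values())
    return normality, f_stat, p_value, p_value < alpha
```

Checking normality first matters because one-way ANOVA assumes approximately normal groups; if that assumption fails, a non-parametric alternative such as `scipy.stats.kruskal` would be the usual fallback.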

Results

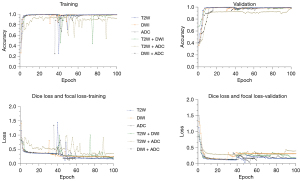

T2W, DWI, and ADC images

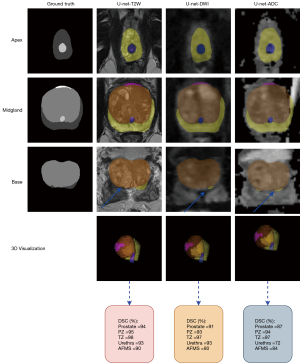

In total, axial T2W, DWI, and ADC images and the fused images (T2W + DWI, T2W + ADC, and DWI + ADC) of 91 patients were processed. Signal intensity differed significantly among the sequences (P<0.05). The accuracy and loss curves of the U-Net model for the three image types and their fusions are given in Figure 6. Training and validation were run for up to 100 epochs with early stopping: training was stopped if the validation accuracy reached 1. In the test set, the highest accuracy was obtained with the T2W + DWI combination and with T2W alone. Tables 2 and 3 show the calculated metrics (DSC, IoU, precision, recall, and HD) for the T2W, DWI, and ADC images for the prostate, PZ, TZ, urethra, and AFMS in the validation and test sets, respectively. Across the three image types, the DSC in the test set ranged from 88% to 95% for the prostate, 92.5% to 94% for the PZ, 96.6% to 98% for the TZ, 80% to 94% for the urethra, and 78% to 88% for the AFMS. On the external test images, T2W images alone achieved higher DSC, IoU, precision, and recall than DWI or ADC images: DSC of 95%, 94%, 98%, 94%, and 88%; IoU of 88%, 88.5%, 96%, 90%, and 79%; precision of 95.9%, 93.9%, 97.6%, 93.83%, and 87.82%; and recall of 94.2%, 94.2%, 98.3%, 94%, and 87.93% for the whole prostate, PZ, TZ, urethra, and AFMS, respectively (Table 3). In the test set, the minimum HD was 0.013 (T2W, TZ) and the maximum was 0.17 (ADC, PZ). Among the three individual image types, DWI had the lowest DSC, IoU, and recall for three zones, with DSC of 92.5%, 96.6%, and 78%; IoU of 86.5%, 94.3%, and 63.6%; and recall of 91.4%, 95.9%, and 77.9% for the PZ, TZ, and AFMS, respectively.
Figure 7 shows axial slices with the DSC obtained for the prostate and its zones in a randomly selected case for the T2W, DWI, and ADC images. From superior to inferior, the prostate is subdivided into the base, mid gland, and apex, and DSC is presented for each of these regions. As shown in Figure 7, segmentation failed more often at the prostate base than at the mid gland and apex.

Table 2

| Class | Modality | Precision (%) | Recall (%) | DSC (%) | IoU (%) | HD |
|---|---|---|---|---|---|---|
| PZ | T2W | 94.8±1.1 | 95±0.9 | 95±1.51 | 91±2.51 | 0.035±0.047 |
|  | DWI | 94.5±1.3 | 93.5±1.2 | 94±0.5 | 92.5±0.57 | 0.025±0.01 |
|  | ADC | 96.1±0.2 | 94.4±0.6 | 95±0.51 | 91.3±1.1 | 0.19±0.29 |
| TZ | T2W | 99.22±0.01 | 97.9±0.6 | 99.12±0.02 | 97±0.02 | 0.035±0.041 |
|  | DWI | 97.1±0.3 | 96.97±0.1 | 97±0.57 | 95±1.1 | 0.01±0.004 |
|  | ADC | 97.8±0.4 | 97.5±1.2 | 98±0.2 | 96±0.9 | 0.14±0.26 |
| Urethra | T2W | 94.2±1 | 95.1±1.5 | 95.21±1.45 | 93±1.8 | 0.016±0.04 |
|  | DWI | 93±1.1 | 92.9±3.9 | 93±2.6 | 90.3±5.2 | 0.015±0.01 |
|  | ADC | 82.2±6.3 | 79.4±5.56 | 81±7 | 72±9 | 0.05±0.1 |
| AFMS | T2W | 90.1±2.9 | 88.4±1.7 | 89±2.1 | 82±2.8 | 0.016±0.039 |
|  | DWI | 81.1±3 | 78.1±2.5 | 79±2.4 | 72.3±2.5 | 0.031±0.02 |
|  | ADC | 84.1±0.5 | 83.8±0.3 | 84±1.1 | 74±1 | 0.032±0.05 |
| Prostate | T2W | 96.12±0.5 | 95.5±0.9 | 96±0.9 | 92.5±0.7 | 0.010±0.022 |
|  | DWI | 91.2±1.2 | 90.6±1.54 | 91±1.7 | 86±1.2 | 0.011±0.021 |
|  | ADC | 90±1.3 | 90±1.5 | 90±1.2 | 84±0.7 | 0.11±0.15 |

PZ, peripheral zone; TZ, transitional zone; AFMS, anterior fibromuscular stroma; T2W, T2-weighted image; DWI, diffusion-weighted image; ADC, apparent diffusion coefficient; DSC, dice score; IoU, intersection over union; HD, Hausdorff Distance.

Table 3

| Class | Modality | Precision (%) | Recall (%) | DSC (%) | IoU (%) | HD |
|---|---|---|---|---|---|---|
| PZ | T2W | 93.9 | 94.2 | 94 | 88.5 | 0.030 |
|  | DWI | 93.2 | 91.4 | 92.5 | 86.5 | 0.022 |
|  | ADC | 93.1 | 93.4 | 93.5 | 88 | 0.17 |
| TZ | T2W | 97.6 | 98.3 | 98 | 96 | 0.013 |
|  | DWI | 97.2 | 95.9 | 96.6 | 94.3 | 0.030 |
|  | ADC | 97.2 | 96.7 | 97 | 95 | 0.13 |
| Urethra | T2W | 93.83 | 94 | 94 | 90 | 0.014 |
|  | DWI | 91.1 | 90.8 | 91 | 84.3 | 0.015 |
|  | ADC | 80.3 | 79.7 | 80 | 67 | 0.052 |
| AFMS | T2W | 87.82 | 87.93 | 88 | 79 | 0.015 |
|  | DWI | 78.1 | 77.9 | 78 | 63.6 | 0.031 |
|  | ADC | 82.7 | 83 | 83 | 71 | 0.052 |
| Prostate | T2W | 95.9 | 94.2 | 95 | 88 | 0.017 |
|  | DWI | 92.3 | 89.5 | 90 | 82 | 0.018 |
|  | ADC | 88.1 | 87.96 | 88 | 80 | 0.031 |

PZ, peripheral zone; TZ, transitional zone; AFMS, anterior fibromuscular stroma; T2W, T2-weighted image; DWI, diffusion-weighted image; ADC, apparent diffusion coefficient; DSC, dice score; IoU, intersection over union; HD, Hausdorff Distance.

Fusion images

Tables 4 and 5 show the calculated metrics for the three combinations in the validation and test sets, respectively. Across T2W + DWI, T2W + ADC, and DWI + ADC, the DSC in the test set ranged from 97% to 99.06% for the prostate, 98.05% to 99.05% for the PZ, 98% to 99.04% for the TZ, 97% to 99.09% for the urethra, and 96.1% to 98.08% for the AFMS. The best segmentation was obtained when the model was trained on T2W + DWI images, with DSC of 99.06%, 99.05%, 99.04%, 99.09%, and 98.08%; IoU of 97.09%, 97.02%, 98.12%, 98.13%, and 96%; precision of 99.24%, 98.22%, 98.91%, 99.23%, and 98.9%; and recall of 98.3%, 99.8%, 99.02%, 98.93%, and 97.51% for the whole prostate, PZ, TZ, urethra, and AFMS, respectively (Table 5). In the test set, the minimum HD across the three combinations was 0.29 (T2W + ADC, whole prostate) and the maximum was 1.32 (DWI + ADC, TZ). Overall, all fused images yielded accurate segmentation of the prostate gland and its zones; examples are shown in Figure 8. The predicted masks closely resemble the ground-truth masks in shape and size. Qualitatively, visual comparison of the three combinations shows more accurate segmentation at the mid-gland level.

Table 4

| Class | Combinations | Precision (%) | Recall (%) | DSC (%) | IoU (%) | HD |
|---|---|---|---|---|---|---|
| PZ | T2W + DWI | 99.15±0.5 | 99.0±0.3 | 99.2±0.7 | 97.3±0.12 | 1.25±0.09 |
|  | T2W + ADC | 98.5±0.3 | 98.4±0.6 | 98.3±0.4 | 97±0.42 | 0.96±0.57 |
|  | DWI + ADC | 97.4±0.25 | 98±0.4 | 97.6±0.3 | 96.9±0.38 | 1.36±0.08 |
| TZ | T2W + DWI | 99.4±1.3 | 98.5±1.9 | 99.1±1.5 | 98.4±0.8 | 1.35±0.09 |
|  | T2W + ADC | 99.16±0.9 | 97.5±1 | 98.5±1.1 | 97.32±0.5 | 1±0.59 |
|  | DWI + ADC | 98.14±0.8 | 97.1±0.2 | 97.6±0.5 | 97±0.1 | 1.48±0.07 |
| Urethra | T2W + DWI | 99.2±0.2 | 99.4±0.15 | 99.2±0.1 | 98.5±0.2 | 0.3±0.08 |
|  | T2W + ADC | 98±0.25 | 96.7±0.15 | 97.3±0.2 | 96.5±0.52 | 1.02±1.26 |
|  | DWI + ADC | 97.3±0.45 | 99.12±0.6 | 98.6±0.5 | 97±0.6 | 0.43±0.07 |
| AFMS | T2W + DWI | 98.22±0.2 | 98.6±0.3 | 98.7±0.1 | 97±0.4 | 0.34±0.03 |
|  | T2W + ADC | 98±0.6 | 97.9±0.5 | 98±0.42 | 97.23±0.12 | 0.26±0.15 |
|  | DWI + ADC | 96.9±0.53 | 97±0.7 | 97±0.21 | 95±0.32 | 0.4±0.035 |
| Prostate | T2W + DWI | 99.13±0.2 | 99.1±0.15 | 99.1±0.1 | 97±0.25 | 0.32±0.08 |
|  | T2W + ADC | 98.5±0.5 | 98.10±0.2 | 98.32±0.2 | 98±0.1 | 0.24±0.11 |
|  | DWI + ADC | 97.2±0.3 | 97.4±0.42 | 97.6±0.23 | 96±0.31 | 0.45±0.09 |

PZ, peripheral zone; TZ, transitional zone; AFMS, anterior fibromuscular stroma; T2W, T2-weighted image; DWI, diffusion-weighted image; ADC, apparent diffusion coefficient; DSC, dice score; IoU, intersection over union; HD, Hausdorff Distance.

Table 5

| Class | Combinations | Precision (%) | Recall (%) | DSC (%) | IoU (%) | HD |
|---|---|---|---|---|---|---|
| PZ | T2W + DWI | 98.22 | 99.8 | 99.05 | 97.02 | 1.2 |
|  | T2W + ADC | 97.64 | 99.09 | 98.1 | 96.1 | 0.98 |
|  | DWI + ADC | 97.6 | 99.1 | 98.05 | 97 | 1.22 |
| TZ | T2W + DWI | 98.91 | 99.02 | 99.04 | 98.12 | 1.1 |
|  | T2W + ADC | 98 | 97.95 | 98 | 96.06 | 0.99 |
|  | DWI + ADC | 98.5 | 97.8 | 98 | 98 | 1.32 |
| Urethra | T2W + DWI | 99.23 | 98.93 | 99.09 | 98.13 | 0.5 |
|  | T2W + ADC | 98 | 96.5 | 97 | 96 | 1.1 |
|  | DWI + ADC | 99.10 | 97.7 | 98.04 | 97.05 | 0.45 |
| AFMS | T2W + DWI | 98.9 | 97.51 | 98.08 | 96 | 0.30 |
|  | T2W + ADC | 98.4 | 96.2 | 97 | 96 | 0.36 |
|  | DWI + ADC | 97 | 95 | 96.1 | 93 | 0.45 |
| Prostate | T2W + DWI | 99.24 | 98.3 | 99.06 | 97.09 | 0.30 |
|  | T2W + ADC | 99.12 | 97.1 | 98.1 | 97 | 0.29 |
|  | DWI + ADC | 98 | 96.2 | 97 | 95 | 0.55 |

PZ, peripheral zone; TZ, transitional zone; AFMS, anterior fibromuscular stroma; T2W, T2-weighted image; DWI, diffusion-weighted image; ADC, apparent diffusion coefficient; DSC, dice score; IoU, intersection over union; HD, Hausdorff Distance.

Discussion

Accurate segmentation of the prostatic zones from surrounding tissues is helpful, and sometimes necessary, in various clinical settings, such as volume delineation and MRI-ultrasound-guided biopsy. In addition, the behavior of prostate cancer correlates strongly with its zonal location, which matters for intraprostatic dose-painting plans. In this study, we presented automatic segmentation of the prostate and its zonal anatomy on T2W, DWI, ADC, and fused images using a U-Net with a ResNet34 backbone. The images were fused using wavelet analysis to generate three combinations: T2W + DWI, T2W + ADC, and DWI + ADC. DSC, IoU, precision, recall, and HD were used to evaluate segmentation performance. We hypothesized that the fused images would yield a higher DSC than single images.

One possible approach to improving the anatomic localization of findings on DW images is to fuse them with T2W images. Indeed, fusion of T2W and DW images has shown higher accuracy than either image alone for detecting pelvic and abdominal malignancies (40,54). Rosenkrantz et al. (55) assessed the utility of T2W + DWI fusion images for prostate cancer detection and localization and found that fusion improved sensitivity and accuracy for sextant-based tumor detection, with similar specificity. In another study, Selnæs et al. (56) found that any combination of T2W, DWI, and DCE-MRI was significantly better than T2W alone in separating cancer from noncancer segments. In the present study, compared with manual segmentation, the U-Net accurately segmented the entire prostate and its zones using fused T2W + DWI images. This performance may be attributed to the architecture, which uses ResNet34 as the backbone, and to the preprocessing steps. Table 6 compares the DSC of our U-Net with values reported in previous studies. Our model achieved higher DSC values than these studies, suggesting that it can reliably segment these structures. Variability was found at the base of the gland, which is affected by changes in prostate morphology, but the differences in agreement among the base, midgland, and apex were fairly small. Chilali et al. (58) proposed an automatic technique for segmenting the prostate, PZ, and TZ on T2W images using fuzzy c-means clustering, with mean DSC values of 0.81, 0.62, and 0.7 for the prostate, PZ, and TZ, respectively. On T2W images, our DSC values were higher: 95% for the prostate, 94% for the PZ, and 98% for the TZ.
The TZ showed a higher DSC than the PZ, consistent with the generally lower PZ segmentation performance reported in previous studies. Singh et al. (63) proposed a semi-automated model that segments the prostate gland and its zones on DW-MRI; PZ and TZ segmentation was based on an in-house probabilistic atlas with a partial volume correction algorithm. Their method achieved DSC of 90.76%±3.68% for the prostate gland, 77.73%±2.76% for the PZ, and 86.05%±1.50% for the TZ. We obtained a similar prostate DSC of 90% on DW images; however, the present study also used T2W, ADC, and fused images, and with T2W + DWI fusion images our prostate DSC reached 99.06%, higher than that of Singh et al. (63). Zabihollahy et al. (25) developed a method to segment the prostate, central gland (CG), and PZ from T2W and ADC images. They designed two similar models, each consisting of two U-Nets, to segment the prostate, CG, and PZ from T2W and ADC images separately. Trained and tested on 225 patients, their models achieved DSC of 95.33%±7.77%, 93.75%±8.91%, and 86.78%±3.72% for the prostate, CG, and PZ, respectively. Compared with our study, they achieved a higher whole-prostate DSC on T2W and ADC images. However, we reported additional performance metrics, and our fusion model achieved a higher maximum DSC of 99.06%. We also observed that the prostate, urethra, and AFMS showed larger differences in performance across image types than the PZ and TZ. Birbiri et al. (64) evaluated conditional GAN (cGAN), CycleGAN, and U-Net models for the detection and segmentation of prostate tissue in mpMRI. For each MR modality (T2W, DWI, and ADC), original images were combined with augmented images generated by three approaches: Super-Pixel (SP), Gaussian Noise Addition (GNA), and Moving Mean (MM).
To better validate the experiments, 4-fold cross-validation was performed, and each trained model was evaluated only on the original test images. The best results for T2W and ADC images were obtained when the model was trained on the combination of original and GNA images, with DSC of 78.9%±12% for T2W images and 80.2%±3% for ADC images. The main advantage of our study is that we segmented the prostate and its zones and reported DSC values on multimodality and fused images. Although their work is similar to ours in some respects, our method segments the prostate and its zones on fused images with a higher DSC, which to our knowledge has not been reported previously. Overall, the results indicate that the U-Net-based segmentation method can produce reproducible and reliable intra-patient masks for T2W and T2W + DWI images of the prostate. Good reproducibility makes it possible to detect changes in the prostate, an essential step towards the clinical implementation of prostate computer-aided detection and diagnosis (CAD) systems based on multimodality and fused images.

Table 6

| Study | Type of image | Network | Segmented area | DSC (%) |
|---|---|---|---|---|
| Zavala-Romero (41) | T2W | A 3D multistream architecture | Prostate | 89.3±3.6 |
| | | | PZ | 81.1±7.9 |
| Zhu (53) | T2W | U-Net | Prostate | 92.7±4.2 |
| | | | PZ | 79.3±10.4 |
| Khan (31) | T2W | DeepLabV3+ | Prostate | 92.8 |
| Makni (57) | T2W | C-means | PZ | 80 |
| | | | CZ | 89 |
| Clark (45) | DWI | Fully convolutional neural network | Prostate | 93 |
| | | | TZ | 88 |
| Chilali (58) | T2W | C-means | Prostate | 81 |
| | | | PZ | 70 |
| | | | TZ | 62 |
| Ghavami (59) | T2W | UNet | Prostate | 84±7 |
| | | VNet | Prostate | 88±3 |
| | | HighRes3dNet | Prostate | 89±3 |
| | | HolisticNet | Prostate | 88±12 |
| | | Dense VNet | Prostate | 88±3 |
| | | Adapted UNet | Prostate | 87±3 |
| Zabihollahy (25) | T2W | U-Net | Prostate | 95.33±7.77 |
| | | | PZ | 86.78±3.72 |
| | | | CG | 93.75±8.91 |
| | ADC | U-Net | Prostate | 92.09±8.89 |
| | | | PZ | 86.1±9.56 |
| | | | CG | 89.89±10.69 |
| Aldoj (60) | T2W | Dense-2 U-net | Prostate | 92.1±0.8 |
| | | | PZ | 78.1±2.5 |
| | | | CZ | 89.5±2 |
| | | U-Net | Prostate | 90.7±2 |
| | | | PZ | 75±3 |
| | | | CZ | 89.1±2.2 |
| Tao (61) | T2W | 3D U-Net | Prostate | 91.6 |
| Bardis (62) | mpMRI | 3D U-Net | Prostate | 94 |
| | | | PZ | 77.4 |
| | | | TZ | 91 |
| Our work | T2W + DWI | U-Net with ResNet-34 backbone | Prostate | 99.1±0.1 |
| | | | PZ | 99.2±0.7 |
| | | | TZ | 99.1±1.5 |
| | | | AFMS | 98.7±0.1 |
| | | | Urethra | 99.2±0.1 |

DSC, Dice similarity coefficient; T2W, T2-weighted image; DWI, diffusion-weighted image; mpMRI, multiparametric MRI; ADC, apparent diffusion coefficient; PZ, peripheral zone; CZ, central zone; CG, central gland; TZ, transition zone; AFMS, anterior fibromuscular stroma.
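
The DSC values compared in Table 6 follow the standard Dice definition, DSC = 2|A ∩ B| / (|A| + |B|), for a predicted mask A and a reference mask B. The following minimal Python sketch computes it in percent for binary masks stored as NumPy arrays; the function name `dice_percent` and the convention of returning 100% when both masks are empty are assumptions for illustration, not taken from the authors' code.

```python
import numpy as np


def dice_percent(pred: np.ndarray, ref: np.ndarray) -> float:
    """Dice similarity coefficient between two binary masks, in percent."""
    pred = pred.astype(bool)
    ref = ref.astype(bool)
    total = int(pred.sum()) + int(ref.sum())
    if total == 0:
        # Both masks empty: report perfect agreement (a convention assumed here).
        return 100.0
    intersection = int(np.logical_and(pred, ref).sum())
    return 100.0 * 2.0 * intersection / total


# Toy 2D masks (hypothetical): 4 predicted voxels, 6 reference voxels, 4 shared.
pred = np.zeros((4, 4), dtype=np.uint8)
ref = np.zeros((4, 4), dtype=np.uint8)
pred[1:3, 1:3] = 1
ref[1:3, 1:4] = 1
print(dice_percent(pred, ref))  # 80.0
```

Per-case scores computed this way would then be summarized as mean ± SD, which is how most entries in Table 6 are reported.
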

In this study, a public dataset of patients with prostate cancer, acquired according to prevailing guidelines, was used; hence, the results reflect the reproducibility of the U-Net-based segmentation method on clinically acquired data. Deep-learning models typically require a large amount of annotated data, which biases researchers toward tasks for which annotation is easy rather than tasks of greater importance. In addition, many modern segmentation models require a substantial amount of memory, even during inference; similarly, in this study, limited memory constrained the training of a 3D U-Net. Although the sample size was relatively large, all images came from a retrospective cohort, which introduces potential selection bias. A future multi-center study could therefore provide additional insight into the reproducibility of DL-based segmentation across institutions, and future studies with larger sample sizes are suggested. Large inter- and intra-observer variation has been reported in the manual delineation of the prostate on CT because of differences in physicians' levels of expertise. Observer variability reflects uncertainty in determining boundaries and is most pronounced around tumor boundaries. Computer-aided segmentation procedures have been designed to improve the accuracy of prostate contouring. However, their accuracy is limited by the inherently low soft-tissue contrast of CT images, which usually leads to overestimation of prostate volume. By contrast, the superior soft-tissue contrast of MRI allows more accurate delineation of the prostate, albeit with some geometric uncertainty. Acquiring MRI alongside CT is therefore helpful for improved segmentation, especially in radiotherapy planning.
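
The tendency of CT-based contours to overestimate prostate volume can be quantified by comparing mask volumes. The sketch below is a hypothetical illustration rather than part of this study's pipeline: it converts binary masks to millilitres using the voxel spacing and reports the signed relative volume error. The toy masks and the (3.0, 0.5, 0.5) mm spacing are assumptions chosen to keep the arithmetic easy to follow.

```python
import numpy as np


def mask_volume_ml(mask, spacing_mm):
    """Volume of a binary mask in millilitres; spacing_mm is the voxel size (z, y, x) in mm."""
    voxel_mm3 = float(np.prod(spacing_mm))
    return int(mask.astype(bool).sum()) * voxel_mm3 / 1000.0  # 1 mL = 1000 mm^3


def relative_volume_error(test_mask, ref_mask, spacing_mm):
    """Signed volume difference in percent; positive values mean overestimation."""
    v_test = mask_volume_ml(test_mask, spacing_mm)
    v_ref = mask_volume_ml(ref_mask, spacing_mm)
    return 100.0 * (v_test - v_ref) / v_ref


spacing = (3.0, 0.5, 0.5)  # hypothetical slice thickness and in-plane spacing (mm)
ref = np.zeros((10, 40, 40), dtype=bool)
ref[:, 10:30, 10:30] = True  # 10 x 20 x 20 voxels
ct_like = np.zeros_like(ref)
ct_like[:, 9:31, 9:31] = True  # one voxel wider on every in-plane side

print(f"{mask_volume_ml(ref, spacing):.2f} mL")      # 3.00 mL
print(f"{mask_volume_ml(ct_like, spacing):.2f} mL")  # 3.63 mL
print(f"{relative_volume_error(ct_like, ref, spacing):.1f}%")  # 21.0%
```

In this toy case, a one-voxel margin on each side of a 20 × 20 voxel cross-section already inflates the volume by 21% (22²/20² = 1.21), which illustrates why small boundary errors on low-contrast CT matter.
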
For future work, we propose using synthetic image generation methods, such as a cycle-consistent generative adversarial network (CycleGAN), to produce MRI from the available CT scans of prostate cancer patients. We should also point out the lack of a multi-reader analysis, which, as discussed by Becker et al. (27), would have allowed us to evaluate inter-rater reliability (39,65-68) for four-zone prostate segmentation and to assess the influence of the radiologists' level of expertise. We also propose expanding the scope of the study further by (I) not providing bounding boxes, that is, using the entire MRI as input for fully automatic algorithms, and (II) addressing the challenge of different numbers of slices among images of the same modality.
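
Inter-rater reliability for zonal segmentation is often summarized by the agreement between readers' contours. One simple option, assumed here for illustration and not necessarily the statistic used in the cited studies (which may also report, for example, intraclass correlation or surface distances), is the mean and minimum Dice coefficient over all reader pairs. The three reader masks below are hypothetical.

```python
import itertools

import numpy as np


def dice(a, b):
    """Dice coefficient (0-1) between two binary masks; 1.0 if both are empty."""
    a, b = a.astype(bool), b.astype(bool)
    denom = int(a.sum()) + int(b.sum())
    return 1.0 if denom == 0 else 2.0 * int(np.logical_and(a, b).sum()) / denom


def pairwise_inter_rater_dice(masks):
    """Mean and minimum Dice over all unordered pairs of readers' masks."""
    scores = [dice(a, b) for a, b in itertools.combinations(masks, 2)]
    return float(np.mean(scores)), min(scores)


# Hypothetical masks of the same zone drawn by three readers on one slice.
reader_a = np.zeros((6, 6), dtype=bool)
reader_a[1:5, 1:5] = True  # 16 voxels
reader_b = np.zeros((6, 6), dtype=bool)
reader_b[1:5, 1:4] = True  # 12 voxels, all inside reader_a
reader_c = np.zeros((6, 6), dtype=bool)
reader_c[2:5, 1:5] = True  # 12 voxels, all inside reader_a

mean_dsc, min_dsc = pairwise_inter_rater_dice([reader_a, reader_b, reader_c])
print(round(mean_dsc, 3), round(min_dsc, 3))  # 0.821 0.75
```

Reporting the minimum alongside the mean highlights the least concordant reader pair, which is where differences in expertise would be expected to show up.
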

Conclusions

We presented a fully automated U-Net-based technique to segment the prostate, PZ, TZ, urethra, and AFMS using T2W, DWI, ADC, and fusion images (T2W + DWI, T2W + ADC, and DWI + ADC) as input. Better performance was achieved using T2W + DWI images than using T2W, DWI, or ADC images separately or the T2W + ADC and DWI + ADC combinations. We used only the U-Net model in this study; a comparison with other networks, such as Mask R-CNN and CycleGAN, is needed. For better generalization, deep adversarial domain adaptation using transfer learning with GANs is also suggested.
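
This section does not restate how the fusion images were generated; the reference list includes PCA- and wavelet-based fusion methods (47,48). As one hedged illustration of pixel-level fusion, the sketch below applies plain PCA-weighted fusion to two images that are assumed to be already co-registered and resampled to the same grid. The min-max normalization, the function names, and the toy arrays are assumptions, and this is not presented as the authors' fusion pipeline.

```python
import numpy as np


def min_max_normalize(img):
    """Scale intensities to [0, 1] so that modalities with different ranges are comparable."""
    img = img.astype(float)
    span = img.max() - img.min()
    return np.zeros_like(img) if span == 0 else (img - img.min()) / span


def pca_fusion(img_a, img_b):
    """Fuse two co-registered 2D images with weights from the leading principal component."""
    a, b = min_max_normalize(img_a), min_max_normalize(img_b)
    data = np.stack([a.ravel(), b.ravel()])  # 2 x N: one row per modality
    cov = np.cov(data)                       # 2 x 2 covariance matrix
    _, eigvecs = np.linalg.eigh(cov)         # eigenvalues returned in ascending order
    pc = np.abs(eigvecs[:, -1])              # leading eigenvector (sign made positive)
    weights = pc / pc.sum()                  # weights sum to 1
    return weights[0] * a + weights[1] * b, weights


rng = np.random.default_rng(0)
t2w = rng.random((64, 64))                      # hypothetical T2W slice
dwi = 0.5 * t2w + 0.5 * rng.random((64, 64))    # hypothetical DWI slice on the same grid
fused, weights = pca_fusion(t2w, dwi)
print(fused.shape, weights.round(2))
```

Stacking the co-registered modalities as separate input channels is another common way to give a U-Net multimodal input; the weighted sum shown here instead produces a single fused channel whose intensities stay in [0, 1].
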

Acknowledgments

Funding: This work was supported by the Ahvaz Jundishapur University of Medical Sciences (Grant No. U-01034).

Footnote

Reporting Checklist: The authors have completed the TRIPOD reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-22-115/rc

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-22-115/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013).

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Rawla P. Epidemiology of Prostate Cancer. World J Oncol 2019;10:63-89.

- Eskra JN, Rabizadeh D, Pavlovich CP, Catalona WJ, Luo J. Approaches to urinary detection of prostate cancer. Prostate Cancer Prostatic Dis 2019;22:362-81.

- Shinohara K, Scardino PT, Carter SS, Wheeler TM. Pathologic basis of the sonographic appearance of the normal and malignant prostate. Urol Clin North Am 1989;16:675-91.

- Shinohara K, Wheeler TM, Scardino PT. The appearance of prostate cancer on transrectal ultrasonography: correlation of imaging and pathological examinations. J Urol 1989;142:76-82.

- Mottet N, Bellmunt J, Bolla M, Briers E, Cumberbatch MG, De Santis M, et al. EAU-ESTRO-SIOG Guidelines on Prostate Cancer. Part 1: Screening, Diagnosis, and Local Treatment with Curative Intent. Eur Urol 2017;71:618-29.

- Thompson I, Thrasher JB, Aus G, Burnett AL, Canby-Hagino ED, Cookson MS, D'Amico AV, Dmochowski RR, Eton DT, Forman JD, Goldenberg SL, Hernandez J, Higano CS, Kraus SR, Moul JW, Tangen CM; AUA Prostate Cancer Clinical Guideline Update Panel. Guideline for the management of clinically localized prostate cancer: 2007 update. J Urol 2007;177:2106-31.

- Hövels AM, Heesakkers RA, Adang EM, Jager GJ, Strum S, Hoogeveen YL, Severens JL, Barentsz JO. The diagnostic accuracy of CT and MRI in the staging of pelvic lymph nodes in patients with prostate cancer: a meta-analysis. Clin Radiol 2008;63:387-95.

- Heydarheydari S, Farshchian N, Haghparast A. Influence of the contrast agents on treatment planning dose calculations of prostate and rectal cancers. Rep Pract Oncol Radiother 2016;21:441-6.

- Li R, Ravizzini GC, Gorin MA, Maurer T, Eiber M, Cooperberg MR, Alemozzaffar M, Tollefson MK, Delacroix SE, Chapin BF. The use of PET/CT in prostate cancer. Prostate Cancer Prostatic Dis 2018;21:4-21.

- Stabile A, Giganti F, Emberton M, Moore CM. MRI in prostate cancer diagnosis: do we need to add standard sampling? A review of the last 5 years. Prostate Cancer Prostatic Dis 2018;21:473-87.

- Yuan J, Poon DMC, Lo G, Wong OL, Cheung KY, Yu SK. A narrative review of MRI acquisition for MR-guided-radiotherapy in prostate cancer. Quant Imaging Med Surg 2022;12:1585-607.

- Lee CH, Taupitz M, Asbach P, Lenk J, Haas M. Clinical utility of combined T2-weighted imaging and T2-mapping in the detection of prostate cancer: a multi-observer study. Quant Imaging Med Surg 2020;10:1811-22.

- Kader A, Brangsch J, Kaufmann JO, Zhao J, Mangarova DB, Moeckel J, Adams LC, Sack I, Taupitz M, Hamm B, Makowski MR. Molecular MR Imaging of Prostate Cancer. Biomedicines 2020;9:1.

- Johnson LM, Turkbey B, Figg WD, Choyke PL. Multiparametric MRI in prostate cancer management. Nat Rev Clin Oncol 2014;11:346-53.

- Cuocolo R, Stanzione A, Ponsiglione A, Romeo V, Verde F, Creta M, La Rocca R, Longo N, Pace L, Imbriaco M. Clinically significant prostate cancer detection on MRI: A radiomic shape features study. Eur J Radiol 2019;116:144-9.

- Chilla GS, Tan CH, Xu C, Poh CL. Diffusion weighted magnetic resonance imaging and its recent trend-a survey. Quant Imaging Med Surg 2015;5:407-22.

- Vargas HA, Akin O, Franiel T, Mazaheri Y, Zheng J, Moskowitz C, Udo K, Eastham J, Hricak H. Diffusion-weighted endorectal MR imaging at 3 T for prostate cancer: tumor detection and assessment of aggressiveness. Radiology 2011;259:775-84.

- Tamada T, Prabhu V, Li J, Babb JS, Taneja SS, Rosenkrantz AB. Assessment of prostate cancer aggressiveness using apparent diffusion coefficient values: impact of patient race and age. Abdom Radiol (NY) 2017;42:1744-51.

- Kim TH, Kim CK, Park BK, Jeon HG, Jeong BC, Seo SI, Lee HM, Choi HY, Jeon SS. Relationship between Gleason score and apparent diffusion coefficients of diffusion-weighted magnetic resonance imaging in prostate cancer patients. Can Urol Assoc J 2016;10:E377-82.

- Toivonen J, Merisaari H, Pesola M, Taimen P, Boström PJ, Pahikkala T, Aronen HJ, Jambor I. Mathematical models for diffusion-weighted imaging of prostate cancer using b values up to 2000 s/mm(2): correlation with Gleason score and repeatability of region of interest analysis. Magn Reson Med 2015;74:1116-24.

- Xu J, Humphrey PA, Kibel AS, Snyder AZ, Narra VR, Ackerman JJ, Song SK. Magnetic resonance diffusion characteristics of histologically defined prostate cancer in humans. Magn Reson Med 2009;61:842-50.

- Ren H, Zhou L, Liu G, Peng X, Shi W, Xu H, Shan F, Liu L. An unsupervised semi-automated pulmonary nodule segmentation method based on enhanced region growing. Quant Imaging Med Surg 2020;10:233-42.

- Rundo L, Han C, Zhang J, Hataya R, Nagano Y, Militello C, Ferretti C, Nobile MS, Tangherloni A, Gilardi MC, Vitabile S, Nakayama H, Mauri G. CNN-based prostate zonal segmentation on T2-weighted MR images: A cross-dataset study. In: Esposito A, Faundez-Zanuy M, Morabito F, Pasero E. editors. Neural Approaches to Dynamics of Signal Exchanges. Smart Innovation, Systems and Technologies, vol 151. Springer; 2020:269-80.

- Jensen C, Sørensen KS, Jørgensen CK, Nielsen CW, Høy PC, Langkilde NC, Østergaard LR. Prostate zonal segmentation in 1.5T and 3T T2W MRI using a convolutional neural network. J Med Imaging (Bellingham) 2019;6:014501.

- Zabihollahy F, Schieda N, Krishna Jeyaraj S, Ukwatta E. Automated segmentation of prostate zonal anatomy on T2-weighted (T2W) and apparent diffusion coefficient (ADC) map MR images using U-Nets. Med Phys 2019;46:3078-90.

- Korsager AS, Fortunati V, van der Lijn F, Carl J, Niessen W, Østergaard LR, van Walsum T. The use of atlas registration and graph cuts for prostate segmentation in magnetic resonance images. Med Phys 2015;42:1614-24.

- Becker AS, Chaitanya K, Schawkat K, Muehlematter UJ, Hötker AM, Konukoglu E, Donati OF. Variability of manual segmentation of the prostate in axial T2-weighted MRI: A multi-reader study. Eur J Radiol 2019;121:108716.

- Chen MY, Woodruff MA, Dasgupta P, Rukin NJ. Variability in accuracy of prostate cancer segmentation among radiologists, urologists, and scientists. Cancer Med 2020;9:7172-82.

- Garg G, Juneja M. A survey of prostate segmentation techniques in different imaging modalities. Curr Med Imaging 2018;14:19-46.

- Wang Y, Zhang Y, Wen Z, Tian B, Kao E, Liu X, Xuan W, Ordovas K, Saloner D, Liu J. Deep learning based fully automatic segmentation of the left ventricular endocardium and epicardium from cardiac cine MRI. Quant Imaging Med Surg 2021;11:1600-12.

- Khan Z, Yahya N, Alsaih K, Al-Hiyali MI, Meriaudeau F. Recent Automatic Segmentation Algorithms of MRI Prostate Regions: A Review. IEEE Access 2021. doi: 10.1109/ACCESS.2021.3090825.

- Cardenas CE, Yang J, Anderson BM, Court LE, Brock KB. Advances in Auto-Segmentation. Semin Radiat Oncol 2019;29:185-97.

- Sharp G, Fritscher KD, Pekar V, Peroni M, Shusharina N, Veeraraghavan H, Yang J. Vision 20/20: perspectives on automated image segmentation for radiotherapy. Med Phys 2014;41:050902.

- Iglesias JE, Sabuncu MR. Multi-atlas segmentation of biomedical images: A survey. Med Image Anal 2015;24:205-19.

- Schipaanboord B, Boukerroui D, Peressutti D, van Soest J, Lustberg T, Dekker A, Elmpt WV, Gooding MJ. An Evaluation of Atlas Selection Methods for Atlas-Based Automatic Segmentation in Radiotherapy Treatment Planning. IEEE Trans Med Imaging 2019;38:2654-64.

- Ronneberger O, Fischer P, Brox T. U-net: Convolutional networks for biomedical image segmentation. In: Navab N, Hornegger J, Wells W, Frangi A. editors. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. MICCAI 2015. Springer; 2015:234-41.

- Shelhamer E, Long J, Darrell T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans Pattern Anal Mach Intell 2017;39:640-51.

- Guo Y, Gao Y, Shen D. Deformable MR Prostate Segmentation via Deep Feature Learning and Sparse Patch Matching. IEEE Trans Med Imaging 2016;35:1077-89.

- Hamzaoui D, Montagne S, Renard-Penna R, Ayache N, Delingette H. Automatic zonal segmentation of the prostate from 2D and 3D T2-weighted MRI and evaluation for clinical use. J Med Imaging (Bellingham) 2022;9:024001.

- Nishie A, Stolpen AH, Obuchi M, Kuehn DM, Dagit A, Andresen K. Evaluation of locally recurrent pelvic malignancy: performance of T2- and diffusion-weighted MRI with image fusion. J Magn Reson Imaging 2008;28:705-13.

- Zavala-Romero O, Breto AL, Xu IR, Chang YC, Gautney N, Dal Pra A, Abramowitz MC, Pollack A, Stoyanova R. Segmentation of prostate and prostate zones using deep learning: A multi-MRI vendor analysis. Strahlenther Onkol 2020;196:932-42.

- Litjens G, Debats O, Barentsz J, Karssemeijer N, Huisman H. Computer-aided detection of prostate cancer in MRI. IEEE Trans Med Imaging 2014;33:1083-92.

- Litjens G, Debats O, Barentsz J, Karssemeijer N, Huisman H. Prostatex challenge data. TCIA 2017.

- Meyer A, Rak M, Schindele D, Blaschke S, Schostak M, Fedorov A, Hansen C. Towards patient-individual PI-Rads v2 sector map: CNN for automatic segmentation of prostatic zones from T2-weighted MRI. 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019) 2019:696-700. doi: 10.1109/ISBI.2019.8759572.

- Clark K, Vendt B, Smith K, Freymann J, Kirby J, Koppel P, Moore S, Phillips S, Maffitt D, Pringle M, Tarbox L, Prior F. The Cancer Imaging Archive (TCIA): maintaining and operating a public information repository. J Digit Imaging 2013;26:1045-57.

- Masi M, Landoni V, Faiella A, Farneti A, Marzi S, Guerrisi M, Sanguineti G. Comparison of rigid and deformable coregistration between mpMRI and CT images in radiotherapy of prostate bed cancer recurrence. Phys Med 2021;92:32-9.

- Krishn A, Bhateja V, Himanshi, Sahu A. Medical image fusion using combination of PCA and wavelet analysis. 2014 International Conference on Advances in Computing, Communications and Informatics (ICACCI) 2014:986-91. doi: 10.1109/ICACCI.2014.6968636.

- Zhang H, Cao X. A Way of Image Fusion Based on Wavelet Transform. 2013 IEEE 9th International Conference on Mobile Ad-hoc and Sensor Networks, 2013:498-501. doi: 10.1109/MSN.2013.103.

- Du G, Cao X, Liang J, Chen X, Zhan Y. Medical image segmentation based on u-net: A review. J Imaging Sci Technol 2020;64:20508-1 - 20508-12.

- Zhang R, Du L, Xiao Q, Liu J. Comparison of Backbones for Semantic Segmentation Network. J Phys Conf Ser 2020;1544:12196.

- Shorten C, Khoshgoftaar TM, Furht B. Text Data Augmentation for Deep Learning. J Big Data 2021;8:101.

- Kingma DP, Ba J. Adam: A method for stochastic optimization. ArXiv 2014.

- Zhu W, Huang Y, Zeng L, Chen X, Liu Y, Qian Z, Du N, Fan W, Xie X. AnatomyNet: Deep learning for fast and fully automated whole-volume segmentation of head and neck anatomy. Med Phys 2019;46:576-89.

- Tsushima Y, Takano A, Taketomi-Takahashi A, Endo K. Body diffusion-weighted MR imaging using high b-value for malignant tumor screening: usefulness and necessity of referring to T2-weighted images and creating fusion images. Acad Radiol 2007;14:643-50.

- Rosenkrantz AB, Mannelli L, Kong X, Niver BE, Berkman DS, Babb JS, Melamed J, Taneja SS. Prostate cancer: utility of fusion of T2-weighted and high b-value diffusion-weighted images for peripheral zone tumor detection and localization. J Magn Reson Imaging 2011;34:95-100.

- Selnæs KM, Heerschap A, Jensen LR, Tessem MB, Schweder GJ, Goa PE, Viset T, Angelsen A, Gribbestad IS. Peripheral zone prostate cancer localization by multiparametric magnetic resonance at 3 T: unbiased cancer identification by matching to histopathology. Invest Radiol 2012;47:624-33.

- Makni N, Iancu A, Colot O, Puech P, Mordon S, Betrouni N. Zonal segmentation of prostate using multispectral magnetic resonance images. Med Phys 2011;38:6093-105.

- Chilali O, Puech P, Lakroum S, Diaf M, Mordon S, Betrouni N. Gland and Zonal Segmentation of Prostate on T2W MR Images. J Digit Imaging 2016;29:730-6.

- Ghavami N, Hu Y, Gibson E, Bonmati E, Emberton M, Moore CM, Barratt DC. Automatic segmentation of prostate MRI using convolutional neural networks: Investigating the impact of network architecture on the accuracy of volume measurement and MRI-ultrasound registration. Med Image Anal 2019;58:101558.

- Aldoj N, Biavati F, Michallek F, Stober S, Dewey M. Automatic prostate and prostate zones segmentation of magnetic resonance images using DenseNet-like U-net. Sci Rep 2020;10:14315.

- Tao L, Ma L, Xie M, Liu X, Tian Z, Fei B. Automatic Segmentation of the Prostate on MR Images based on Anatomy and Deep Learning. Proc SPIE Int Soc Opt Eng 2021;11598:115981N.

- Bardis M, Houshyar R, Chantaduly C, Tran-Harding K, Ushinsky A, Chahine C, Rupasinghe M, Chow D, Chang P. Segmentation of the Prostate Transition Zone and Peripheral Zone on MR Images with Deep Learning. Radiol Imaging Cancer 2021;3:e200024.

- Singh D, Kumar V, Das CJ, Singh A, Mehndiratta A. Segmentation of prostate zones using probabilistic atlas-based method with diffusion-weighted MR images. Comput Methods Programs Biomed 2020;196:105572.

- Cem Birbiri U, Hamidinekoo A, Grall A, Malcolm P, Zwiggelaar R. Investigating the Performance of Generative Adversarial Networks for Prostate Tissue Detection and Segmentation. J Imaging 2020;6:83.

- Rundo L, Han C, Nagano Y, Zhang J, Hataya R, Militello C, Tangherloni A, Nobile MS, Ferretti C, Besozzi D, Gilardi MC, Vitabile S, Mauri G, Nakayama H, Cazzaniga P. USE-Net: Incorporating Squeeze-and-Excitation blocks into U-Net for prostate zonal segmentation of multi-institutional MRI datasets. Neurocomputing 2019;365:31-43.

- Schelb P, Tavakoli AA, Tubtawee T, Hielscher T, Radtke JP, Görtz M, Schütz V, Kuder TA, Schimmöller L, Stenzinger A, Hohenfellner M, Schlemmer HP, Bonekamp D. Comparison of Prostate MRI Lesion Segmentation Agreement Between Multiple Radiologists and a Fully Automatic Deep Learning System. Rofo 2021;193:559-73.

- Liu Y, Miao Q, Surawech C, Zheng H, Nguyen D, Yang G, Raman SS, Sung K. Deep Learning Enables Prostate MRI Segmentation: A Large Cohort Evaluation With Inter-Rater Variability Analysis. Front Oncol 2021;11:801876.

- Meyer A, Chlebus G, Rak M, Schindele D, Schostak M, van Ginneken B, Schenk A, Meine H, Hahn HK, Schreiber A, Hansen C. Anisotropic 3D Multi-Stream CNN for Accurate Prostate Segmentation from Multi-Planar MRI. Comput Methods Programs Biomed 2021;200:105821.