Deep learning for automated grading of radiographic sacroiliitis

Introduction

Sacroiliitis is inflammation of the sacroiliac joint arising from a variety of etiologies that disrupt or damage the joint (1,2). It is a common initial manifestation of axial spondyloarthritis. Early clinical presentations and laboratory findings lack diagnostic specificity, and once spinal rigidity and joint deformities develop in later stages, the damage is irreversible and often leads to disability in young and middle-aged individuals (3,4). Imaging plays a crucial role in the early diagnosis of sacroiliitis: under the modified New York criteria, definite radiographic sacroiliitis is defined as at least grade 2 bilaterally or at least grade 3 unilaterally (5). X-ray examination is simple and easily repeatable, involves low radiation exposure, offers high spatial resolution, and effectively visualizes lesions of grade 2 and above. It also provides clear images of the hip joints, making it the preferred modality for both screening and diagnosis (6,7).

At present, diagnosing sacroiliitis on X-ray typically requires assessment by experienced radiologists, and screening accuracy depends on the expertise of the reader. Although X-ray imaging is widely used to diagnose sacroiliitis, its interpretation has well-recognized reliability limitations. Recent research, including that by van den Berg et al. (8), has highlighted the variability in radiographic interpretation of sacroiliitis, and even experienced radiologists and rheumatologists may struggle to achieve consistent readings. This inherent unreliability underscores the need for more objective and standardized diagnostic tools.

As computer-aided diagnosis in medical imaging continues to advance, an increasing number of artificial intelligence (AI) products are reaching the clinical validation phase (9-13); however, their use in bone and joint imaging lags behind more established applications such as oncology and neurology. This study investigated an AI model for analyzing sacroiliac joint X-ray images, with the aims of relieving senior physicians of repetitive diagnostic tasks, improving productivity, and helping junior radiologists and non-radiology medical professionals evaluate sacroiliitis accurately. We present this article in accordance with the TRIPOD+AI reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-2024-2742/rc).

Methods

The overall design of the proposed method

In our method, we used pelvic X-rays to discriminate radiographic sacroiliitis and to grade its severity. The method comprises three key steps (Figure 1).

Region of interest extraction and preprocessing

We automatically extracted the target regions of interest (ROIs) of the sacroiliac joint, as illustrated in Figure 1. The preprocessing operations of image standardization and image augmentation were then performed on the left and right ROIs of the sacroiliac joint.

Model construction

We used the ConvNeXt-T network (14) to build the sacroiliitis grading model. ConvNeXt-T is a recent convolutional neural network (CNN) that retains a purely convolutional design while adopting architectural ideas from transformer models, achieving state-of-the-art performance with good scalability and efficiency.

Model evaluation

Receiver operating characteristic (ROC) curves and confusion matrices were plotted to visualize model performance. The diagnostic performance of the AI model was compared with that of two radiologists, and the performance of each radiologist assisted by the AI model was compared with that of the same radiologist alone.

Study sample

The study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments. The study was approved by the Ethics Committee of Xi’an No. 3 Hospital (approval No. SYLL-2025-024). Xi’an No. 5 Hospital was also informed and agreed to the study. Informed consent was waived for this retrospective analysis.

For the training and internal validation sets, 690 patients with clinically suspected sacroiliitis who underwent pelvic X-ray at the Affiliated Hospital of Northwest University (Xi’an No. 3 Hospital) from January 2022 to December 2023 were selected (Figure 2). After exclusion of patients whose image quality precluded a definitive diagnosis (malpositioning, intestinal gas interference, or skeletal variations and deformities), 660 patients (1,320 single sacroiliac joints) and their X-ray images were included and randomly assigned to a training group of 465 patients (930 single sacroiliac joints) and a validation group of 195 patients (390 single sacroiliac joints).

For the external test set, we retrospectively included 223 patients (446 single sacroiliac joints) with radiographic images from another tertiary hospital (Xi’an No. 5 Hospital) between January 2024 and June 2024 who met the same inclusion criteria described above (Figure 2).

ROI extraction and preprocessing

The ROIs of the sacroiliac joints were extracted automatically from the delineation of adjacent bones. Specifically, a previously trained segmentation model was used to segment the left and right innominate bones and femurs. Based on these four delineated bones, the left and right sacroiliac joints were automatically extracted for model training.
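The source does not describe how the joint location is derived from the four bone masks, nor exactly how the 1,024×1,024 patch is positioned. The sketch below is therefore only an illustration under stated assumptions: a joint-centre point is taken as given (the hypothetical helper `mask_centroid` shows one possible way to obtain a point from a binary mask), and a fixed-size patch is cropped around it, padding wherever the window extends beyond the image border.

```python
import numpy as np


def mask_centroid(mask):
    """Hypothetical helper: (row, col) centroid of a non-empty binary mask."""
    rows, cols = np.nonzero(mask)
    return float(rows.mean()), float(cols.mean())


def crop_centered(image, center_rc, size=1024, pad_value=0):
    """Crop a size x size patch centred at center_rc, padding outside the image."""
    half = size // 2
    r0 = int(round(center_rc[0])) - half
    c0 = int(round(center_rc[1])) - half
    out = np.full((size, size), pad_value, dtype=image.dtype)
    # Overlap between the requested window and the image bounds
    rs, cs = max(r0, 0), max(c0, 0)
    re_, ce = min(r0 + size, image.shape[0]), min(c0 + size, image.shape[1])
    if rs < re_ and cs < ce:
        out[rs - r0:re_ - r0, cs - c0:ce - c0] = image[rs:re_, cs:ce]
    return out
```

Usage, with a hypothetical joint mask `joint_mask` and image `radiograph`: `patch = crop_centered(radiograph, mask_centroid(joint_mask))`.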

During training, sacroiliac joint patches of 1,024×1,024 pixels were cropped from the original image. Before training, the image intensity was standardized. First, adaptive normalization was applied: a per-image z-score was computed using the mean (µ) and standard deviation (σ) of the intensities lying between the 0.01 and 99.99 percentiles. The resulting values were then clipped to the range [−1, 1] as follows:

$$I' = \min\left(\max\left(\frac{I - \mu}{\sigma},\, -1\right),\, 1\right)$$

where I is the original image, and I' is the standardized image.
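The intensity standardization described above can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' code: the function name is hypothetical, and the small floor on σ is an added safeguard for constant images. Note that, taken literally, clipping the z-score to [−1, 1] saturates every pixel lying more than one standard deviation from the mean.

```python
import numpy as np


def standardize_intensity(image, lower_pct=0.01, upper_pct=99.99):
    """Percentile-restricted z-score followed by clipping to [-1, 1]."""
    image = image.astype(np.float64)
    lo, hi = np.percentile(image, [lower_pct, upper_pct])
    # Estimate mean and standard deviation only from intensities inside the percentile range
    inside = image[(image >= lo) & (image <= hi)]
    mu, sigma = inside.mean(), inside.std()
    z = (image - mu) / max(sigma, 1e-8)
    return np.clip(z, -1.0, 1.0)
```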

To improve the generalization performance of the model, data augmentation was employed in training, including random elastic deformation in the cropped image (0–5 mm), small random scaling (0.9–1.1), and small random rotation (−10° to +10°).
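A sketch of the three augmentations using `scipy.ndimage` follows. The source gives only the parameter ranges; everything else is an assumption: the 0–5 mm elastic range is converted to pixels with a hypothetical `pixel_spacing_mm` of 0.2 mm, the displacement field is Gaussian-smoothed noise with a hypothetical smoothing width `sigma_px`, and linear interpolation with edge replication is used throughout.

```python
import numpy as np
from scipy import ndimage


def augment(patch, rng, pixel_spacing_mm=0.2, max_elastic_mm=5.0, sigma_px=30.0):
    """Random rotation (+/-10 deg), scaling (0.9-1.1) and smooth elastic deformation."""
    h, w = patch.shape
    patch = patch.astype(np.float64)

    # Rotation + isotropic scaling about the patch centre.
    # affine_transform maps output coordinates to input coordinates; because the
    # angle range is symmetric, the sign convention does not matter here.
    theta = np.deg2rad(rng.uniform(-10.0, 10.0))
    scale = rng.uniform(0.9, 1.1)
    rot = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
    matrix = rot / scale
    center = np.array([(h - 1) / 2.0, (w - 1) / 2.0])
    offset = center - matrix @ center
    out = ndimage.affine_transform(patch, matrix, offset=offset, order=1, mode="nearest")

    # Elastic deformation: Gaussian-smoothed random displacement field whose
    # largest component is rescaled to a random amplitude in [0, max_elastic_mm].
    amp_px = rng.uniform(0.0, max_elastic_mm) / pixel_spacing_mm
    dy = ndimage.gaussian_filter(rng.standard_normal((h, w)), sigma_px)
    dx = ndimage.gaussian_filter(rng.standard_normal((h, w)), sigma_px)
    peak = max(np.abs(dy).max(), np.abs(dx).max(), 1e-12)
    dy, dx = dy / peak * amp_px, dx / peak * amp_px
    rows, cols = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    return ndimage.map_coordinates(out, [rows + dy, cols + dx], order=1, mode="nearest")
```

Linear interpolation keeps every output value within the input's intensity range, so the augmented patch stays compatible with the standardization step.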

Model construction

In the task of arthritis discrimination and grading, we adopted the ConvNeXt-T network as the backbone in our framework.

As illustrated in Figure 3, the network is built from a stack of generic ConvNeXt blocks. Each block begins with a depthwise convolution layer that is moved up to the start of the block (the "moving up depthwise convolution" design of ConvNeXt) and uses a large kernel (kernel size 7×7, stride 1×1, padding 3×3), followed by a layer normalization layer; the large kernel enlarges the receptive field, mimicking the large local attention windows of vision transformers. Each block also uses an inverted bottleneck: after the depthwise convolution, the feature channels are expanded (fourfold in ConvNeXt) by a 1×1 convolution and then projected back to the original dimension by a second 1×1 convolution. This design makes better use of intermediate features, helping the network capture more complex patterns and improving its representational capacity (15,16). In addition, a separate downsampling layer, consisting of one layer normalization layer and one convolution layer (kernel size 2×2, stride 2×2), is placed between adjacent stages of ConvNeXt blocks. In Figure 3, k1 denotes a 1×1 kernel and k2 a 2×2 kernel; the same notation applies to the other convolution layers. The details of the network architecture are illustrated in Figure 3.
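To make the block structure concrete, the following NumPy sketch implements the forward pass of one ConvNeXt block (7×7 depthwise convolution, layer normalization, 1×1 expansion to four times the channels, GELU, 1×1 projection, layer scale, and residual connection) and of the layer normalization plus 2×2 stride-2 downsampling layer, following the original ConvNeXt design (14). It is an illustrative single-image forward pass with random weights, not the trained model; the function names and initialization values are assumptions. For reference, ConvNeXt-T stacks 3, 3, 9, and 3 blocks in four stages with 96, 192, 384, and 768 channels.

```python
import numpy as np
from scipy.signal import correlate2d
from scipy.special import erf


def gelu(x):
    return 0.5 * x * (1.0 + erf(x / np.sqrt(2.0)))


def layer_norm_channels(x, weight, bias, eps=1e-6):
    """LayerNorm over the channel axis of a (C, H, W) feature map."""
    mu = x.mean(axis=0, keepdims=True)
    var = x.var(axis=0, keepdims=True)
    return weight[:, None, None] * (x - mu) / np.sqrt(var + eps) + bias[:, None, None]


def init_block(channels, rng, expansion=4, layer_scale=1e-6):
    hidden = expansion * channels
    return {
        "dw_w": rng.normal(0.0, 0.02, (channels, 7, 7)), "dw_b": np.zeros(channels),
        "ln_w": np.ones(channels), "ln_b": np.zeros(channels),
        "pw1_w": rng.normal(0.0, 0.02, (hidden, channels)), "pw1_b": np.zeros(hidden),
        "pw2_w": rng.normal(0.0, 0.02, (channels, hidden)), "pw2_b": np.zeros(channels),
        "gamma": np.full(channels, layer_scale),
    }


def convnext_block(x, p):
    """x: (C, H, W) -> (C, H, W)."""
    # 7x7 depthwise convolution, stride 1, zero padding 3 ("same" output size)
    y = np.stack([correlate2d(x[c], p["dw_w"][c], mode="same") + p["dw_b"][c]
                  for c in range(x.shape[0])])
    y = layer_norm_channels(y, p["ln_w"], p["ln_b"])
    # Inverted bottleneck: 1x1 conv expands to 4C, GELU, 1x1 conv projects back to C
    y = np.einsum("dc,chw->dhw", p["pw1_w"], y) + p["pw1_b"][:, None, None]
    y = gelu(y)
    y = np.einsum("cd,dhw->chw", p["pw2_w"], y) + p["pw2_b"][:, None, None]
    # Layer scale and residual connection
    return x + p["gamma"][:, None, None] * y


def downsample(x, ln_w, ln_b, weight, bias):
    """LayerNorm then 2x2 conv with stride 2: (C_in, H, W) -> (C_out, H/2, W/2).

    Assumes even H and W; weight has shape (C_out, C_in, 2, 2).
    """
    x = layer_norm_channels(x, ln_w, ln_b)
    c, h, w = x.shape
    patches = x.reshape(c, h // 2, 2, w // 2, 2)  # [c, row, di, col, dj]
    return np.einsum("ocij,chiwj->ohw", weight, patches) + bias[:, None, None]
```

In practice, a ConvNeXt-T with ImageNet-1K weights is available in libraries such as torchvision (`torchvision.models.convnext_tiny`), and replacing its final linear layer with a five-output head would be one way to set up a five-grade classifier.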

The model was optimized with a label-smoothing cross-entropy loss:

$$\mathcal{L} = -\sum_{i=1}^{K} q_i \log p_i$$

where q is the (label-smoothed) target distribution, p is the model's predicted probability distribution, i is the category index, and K is the number of categories (K=5 for grades 0–4).
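A NumPy sketch of label-smoothing cross-entropy follows, assuming the common convention (also used by the `label_smoothing` argument of PyTorch's `CrossEntropyLoss`) in which the smoothed target mixes the one-hot label with a uniform distribution: q_i = (1 − ε)·1[i = y] + ε/K. The smoothing factor ε is not reported in the source; 0.1 below is an assumed placeholder.

```python
import numpy as np


def label_smoothing_ce(logits, target, epsilon=0.1):
    """Mean label-smoothing cross-entropy. logits: (N, K); target: (N,) integer labels."""
    logits = np.asarray(logits, dtype=np.float64)
    n, k = logits.shape
    # Numerically stable log-softmax: log p_i
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_p = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Smoothed target q: (1 - epsilon) on the true class plus epsilon / K everywhere
    q = np.full((n, k), epsilon / k)
    q[np.arange(n), target] += 1.0 - epsilon
    return float(-(q * log_p).sum(axis=1).mean())
```

With ε = 0 the function reduces to ordinary cross-entropy; with ε > 0 it penalizes over-confident predictions, which can help when grade boundaries (for example, between grades 2 and 3) are inherently ambiguous.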

To fine-tune the weights of ConvNeXt-T, we used the AdamW optimizer (learning rate 3e−4 for the backbone and 1e−3 for the fully connected (FC) layer) with cosine learning-rate decay over 200 epochs. The detailed training hyperparameters are provided in Table 1.

Table 1

| Hyperparameter category | Settings |
|---|---|
| Optimizer | AdamW (β1=0.9, β2=0.999) |
| Weight decay in AdamW | 0.05 (FC layers), 0.01 (pretrained layers) |
| Initial learning rate | 3e−4 (backbone), 1e−3 (FC layer) |
| Learning rate schedule | Cosine annealing (200 epochs, 5-epoch warmup) |
| Regularization | Dropout = 0.2 |
| Batch size | 32 (training), 16 (validation) |
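The learning-rate schedule in Table 1 can be sketched as a warmup over the first 5 epochs followed by cosine annealing over the remaining epochs. The linear warmup shape and the final learning rate of 0 are assumptions, since the source states only "cosine annealing (200 epochs, 5-epoch warmup)"; the parameter-group names are hypothetical. In PyTorch, the two groups would typically be passed to `torch.optim.AdamW` as a list of parameter-group dictionaries, each with its own `lr` and `weight_decay`.

```python
import math


def lr_at_epoch(epoch, base_lr, total_epochs=200, warmup_epochs=5, min_lr=0.0):
    """Linear warmup for warmup_epochs, then cosine annealing to min_lr."""
    if epoch < warmup_epochs:
        return base_lr * (epoch + 1) / warmup_epochs
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    progress = min(progress, 1.0)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


# Two parameter groups mirroring Table 1 (group names are illustrative)
PARAM_GROUPS = {
    "backbone": {"lr": 3e-4, "weight_decay": 0.01},
    "fc_head": {"lr": 1e-3, "weight_decay": 0.05},
}

# Per-epoch learning rates for each group
SCHEDULES = {name: [lr_at_epoch(e, cfg["lr"]) for e in range(200)]
             for name, cfg in PARAM_GROUPS.items()}
```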

Model evaluation

To evaluate the model, we used the area under the ROC curve (AUC), sensitivity, specificity, and accuracy. We also compared the accuracy, sensitivity, and specificity of the model with those of two radiologists, and compared the performance of each radiologist assisted by the AI model with that of the same radiologist alone to assess the model's value as a diagnostic aid. ROC curves and confusion matrices were further used to evaluate how well the model's predictions agreed with the reference standard. All metrics and curves were computed separately on the test dataset.
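The patient-level metrics reduce to counts from a 2×2 confusion matrix, and each per-grade AUC can be computed one-vs-rest. The sketch below shows both in NumPy; it is illustrative rather than the authors' evaluation code. As a consistency check, counts of 109 true positives, 12 false negatives, 92 true negatives, and 10 false positives among the 121 positive and 102 negative external-test patients reproduce the model's reported external-test values (sensitivity 90.08%, specificity 90.20%, PPV 91.60%, NPV 88.46%, accuracy 90.13%); these counts are back-calculated from the percentages and are not reported in the source.

```python
import numpy as np


def binary_metrics(y_true, y_pred):
    """Sensitivity, specificity, PPV, NPV and accuracy from binary labels (1 = positive)."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = int(np.sum(y_true & y_pred))
    tn = int(np.sum(~y_true & ~y_pred))
    fp = int(np.sum(~y_true & y_pred))
    fn = int(np.sum(y_true & ~y_pred))
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "accuracy": (tp + tn) / len(y_true),
    }


def one_vs_rest_auc(scores, labels, positive_class):
    """AUC of one grade vs the rest via the Mann-Whitney statistic (ties count 1/2)."""
    scores = np.asarray(scores, dtype=float)
    is_pos = np.asarray(labels) == positive_class
    pos, neg = scores[is_pos], scores[~is_pos]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))


# Counts back-calculated from the reported external-test percentages (not reported directly)
y_true = np.array([1] * 121 + [0] * 102)
y_pred = np.array([1] * 109 + [0] * 12 + [0] * 92 + [1] * 10)
metrics = binary_metrics(y_true, y_pred)
```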

Following the modified New York criteria (5), images were graded from 0 to 4 by two senior radiologists, each with more than 10 years of diagnostic experience. Patients with at least grade 2 bilaterally or at least grade 3 unilaterally were considered to have definite radiographic sacroiliitis. Each radiologist interpreted the images independently, using the diagnostic criteria specified in Table 2 and Figure 4. Disagreements were resolved by a senior physician with extensive experience in orthopedic X-ray diagnosis, yielding a final consensus grade for each image.
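The patient-level positivity rule can be written as a small function that turns the left and right joint grades (from a reader or from the model) into a positive or negative call. The function name and the range check are illustrative additions.

```python
def is_radiographic_sacroiliitis(left_grade: int, right_grade: int) -> bool:
    """Modified New York rule: >= grade 2 bilaterally, or >= grade 3 unilaterally."""
    for g in (left_grade, right_grade):
        if g not in range(5):
            raise ValueError(f"grade must be 0-4, got {g}")
    bilateral = left_grade >= 2 and right_grade >= 2
    unilateral = max(left_grade, right_grade) >= 3
    return bilateral or unilateral
```

Because the rule depends only on the pair of joint grades, a grading error can leave the patient-level call unchanged (for example, grading a true 3 as 4), which is one reason patient-level accuracy can be much higher than five-grade accuracy.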

Table 2

| Grade | Radiographic assessment |
|---|---|
| Grade 0 | Normal |
| Grade 1 | Suspicious changes: slightly blurred and rough sacroiliac joint surface with no obvious bone abnormality |
| Grade 2 | Minimal abnormality: limited erosion and sclerosis of the joint surface, with a normal joint space |
| Grade 3 | Unequivocal abnormality: blurred and rough joint surface with narrowing of the joint space |
| Grade 4 | Severe abnormality: fusion and loss of the joint space, with dense osteitis |

In addition, two radiologists from our department independently graded the 446 single sacroiliac joint images of the 223 patients in the external test set, first without and then with access to the model's results, and blinded to the reference-standard grades. Each radiologist then classified each patient as positive or negative based on their own grades.

Statistical analyses

Statistical analysis was performed with SPSS version 21.0 (IBM Corp., Armonk, NY, USA). Demographic variables such as age and symptom duration were compared between groups using two-sample t-tests. Continuous variables are presented as the mean ± standard deviation (SD) if normally distributed and as the median and interquartile range (IQR) otherwise. With the consensus grades of the senior radiologists as the reference standard, we calculated the accuracy of the deep learning model, of each of the two junior physicians, and of each junior physician assisted by AI. Paired-samples t-tests were used to compare grading accuracy between the deep learning model and the junior physicians. ROC curves were used to evaluate the performance of the deep learning model. Intra- and interobserver agreement was assessed with the intraclass correlation coefficient. Categorical data were compared using the χ2 test.
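The Results report inter-reader agreement as kappa values. A minimal NumPy sketch of unweighted Cohen's kappa for two readers' 0–4 grades follows; whether the authors used weighted or unweighted kappa is not stated, so the unweighted form is an assumption. scikit-learn's `sklearn.metrics.cohen_kappa_score` computes the same statistic and offers `weights="linear"` or `"quadratic"` for ordinal grades.

```python
import numpy as np


def cohen_kappa(rater_a, rater_b, n_categories=5):
    """Unweighted Cohen's kappa for two raters' integer grades (0 .. n_categories-1)."""
    a = np.asarray(rater_a, dtype=int)
    b = np.asarray(rater_b, dtype=int)
    # Joint distribution of (grade by rater A, grade by rater B)
    confusion = np.zeros((n_categories, n_categories))
    np.add.at(confusion, (a, b), 1)
    confusion /= confusion.sum()
    p_observed = np.trace(confusion)
    # Agreement expected by chance from the two raters' marginal distributions
    p_expected = confusion.sum(axis=1) @ confusion.sum(axis=0)
    return (p_observed - p_expected) / (1.0 - p_expected)
```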

Results

Clinical characteristics of the study sample

The study included 883 patients, comprising 1,766 single sacroiliac joints. The two radiologists assigned identical grades to 1,404 joints (kappa = 0.715); the remaining 362 joints with discordant grades were reviewed and graded by a senior attending physician. The baseline characteristics of the patients are presented in Table 3; no statistically significant differences in baseline clinical data were observed between the model development cohort and the external test cohort.

Table 3

| Characteristic at baseline | Model development (N=660) | External test set (N=223) | P |
|---|---|---|---|
| Age (years) | 39.01±11.76 [25–73] | 39.34±12.08 [28–65] | 0.87 |
| Male sex | 302 (45.76) | 112 (50.22) | 0.07 |
| Duration of symptoms (days) | 1,975±325 | 1,232±221 | 0.08 |
| HLA-B27 positive | 231 (35.00) | 88 (39.46) | 0.15 |
| CRP (mg/L) | 14.32±21.63 | 12.31±16.32 | 0.43 |
| Peripheral monoarthritis | 193 (29.24) | 70 (31.39) | 0.60 |
| Family history of SpA | 81 (12.27) | 34 (15.25) | 0.28 |
| Treatment with DMARDs | 151 (22.88) | 48 (21.52) | 0.68 |
| Treatment with NSAIDs | 305 (46.21) | 116 (52.02) | 0.15 |
| Treatment with steroids | 54 (8.18) | 22 (9.87) | 0.51 |
| Treatment with a TNF inhibitor | 25 (3.79) | 14 (6.28) | 0.20 |

Data are presented as mean ± SD or n (%) or [range]. SD, standard deviation; HLA-B27, human leukocyte antigen B27; CRP, C-reactive protein; SpA, spondyloarthritis; DMARD, disease-modifying antirheumatic drug; NSAID, nonsteroidal anti-inflammatory drug; TNF, tumor necrosis factor.

Grading of radiographic sacroiliitis

Among the 1,320 single sacroiliac joints in the training and validation groups, 254 were graded as 0, 232 as 1, 279 as 2, 267 as 3, and 288 as 4; at the patient level, 413 patients were positive and 247 were negative.

Among the 446 single sacroiliac joints in the external test set, 150 were graded as 0, 102 as 1, 68 as 2, 66 as 3, and 60 as 4; at the patient level, 121 patients were positive and 102 were negative.

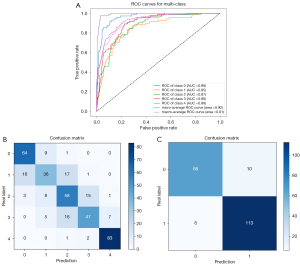

Model performance in the validation set

Figure 5 shows the grading performance of the deep learning classification model in the validation set, including the ROC curves for grades 0–4, with AUCs of 0.96, 0.85, 0.87, 0.90, and 0.99, respectively. The model's accuracy for five-grade classification of single joints was 73.85%. According to the modified New York criteria (5), patients with at least grade 2 bilaterally or at least grade 3 unilaterally are considered positive for radiographic sacroiliitis. For this patient-level definitive diagnosis, the model achieved an accuracy of 91.79%, with a sensitivity, specificity, positive predictive value, and negative predictive value of 94.96%, 86.84%, 91.87%, and 91.67%, respectively. These results indicate strong diagnostic performance in the validation set.

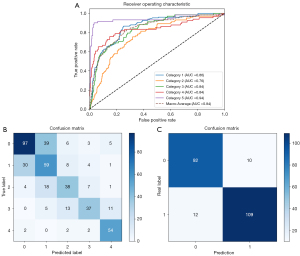

Model performance in the external test set

Figure 6 shows the grading performance of the model in the external test set, including the ROC curves for grades 0–4, with AUCs of 0.86, 0.76, 0.84, 0.84, and 0.94, respectively. The model's accuracy for five-grade classification of single joints was 63.90%. For the patient-level definitive diagnosis, the model achieved an accuracy of 90.13%, with a sensitivity, specificity, positive predictive value, and negative predictive value of 90.08%, 90.20%, 91.60%, and 88.46%, respectively.

Manual detection and grading assessment of radiographic sacroiliitis

Two radiologists (junior physicians) independently graded the 446 sacroiliac joints in the external test set, blinded to the reference-standard grades; their inter-reader agreement was kappa = 0.698. Agreement was highest for grades 0 and 4 (kappa 0.82 and 0.78, respectively) and lowest for grades 2 and 3 (kappa 0.45 and 0.52, respectively).

Across the 446 joints, the grading accuracy (grades 0–4) was 75.00% and 74.08% for radiologists 1 and 2, respectively, and their patient-level diagnostic accuracy was 92.45% and 91.10%, respectively. The sensitivity, specificity, positive predictive value, and negative predictive value were 94.03%, 89.74%, 93.03%, and 87.04% for radiologist 1 and 88.43%, 95.71%, 97.27%, and 82.72% for radiologist 2 (Table 4). There was no statistically significant difference in diagnostic accuracy between either radiologist and the deep learning model.

Table 4

| Assessment method | SEN (%) | SPE (%) | PPV (%) | NPV (%) | Accuracy (%) | P |
|---|---|---|---|---|---|---|
| DL algorithm | 90.08 | 90.20 | 91.60 | 88.46 | 90.13 | – |
| Radiologist 1 | 94.03 | 89.74 | 93.03 | 87.04 | 92.45 | 0.26 |
| Radiologist 2 | 88.43 | 95.71 | 97.27 | 82.72 | 91.10 | 0.24 |

DL, deep learning; NPV, negative predictive value; PPV, positive predictive value; SEN, sensitivity; SPE, specificity.

Manual detection and grading assessment of radiographic sacroiliitis assisted by the deep learning algorithm

With the AI model as a reference, both radiologists showed a notable improvement in diagnostic performance, and their results differed significantly from their unassisted assessments. Grading accuracy (grades 0–4) improved from 75.00% and 74.08% to 88.89% and 80.90%, respectively, and diagnostic accuracy increased from 92.45% and 91.10% to 97.17% and 95.29%, respectively. For the definitive diagnosis of radiographic sacroiliitis, the sensitivity, specificity, positive predictive value, and negative predictive value were 97.01%, 97.44%, 98.48%, and 95.00% for radiologist 1 and 94.21%, 97.14%, 98.28%, and 90.67% for radiologist 2 (Table 5).

Table 5

| Assessment method | SEN (%) | SPE (%) | PPV (%) | NPV (%) | Accuracy (%) | P |
|---|---|---|---|---|---|---|
| Radiologist 1 | 94.03 | 89.74 | 93.03 | 87.04 | 92.45 | 0.042 |
| Radiologist 1 with model | 97.01 | 97.44 | 98.48 | 95.00 | 97.17 | |
| Radiologist 2 | 88.43 | 95.71 | 97.27 | 82.72 | 91.10 | 0.038 |
| Radiologist 2 with model | 94.21 | 97.14 | 98.28 | 90.67 | 95.29 | |

NPV, negative predictive value; PPV, positive predictive value; SEN, sensitivity; SPE, specificity.

Discussion

This study developed and evaluated an AI model for detecting and grading sacroiliitis on conventional X-ray images. The model achieved high diagnostic accuracy and notably improved the diagnostic and grading accuracy of junior radiologists.

AI algorithms, particularly deep learning models, can analyze large datasets and recognize image features that may be imperceptible to human observers (17). This capacity may yield more precise diagnoses than those of trained medical professionals in some tasks. AI is also not subject to fatigue or reader-dependent subjectivity and returns the same output for the same image, so its results are more consistent. In addition, it can substantially shorten diagnosis time, improving diagnostic efficiency. Finally, AI can serve as a valuable adjunct that helps healthcare professionals reach more precise diagnoses grounded in objective data.

In recent years, neural networks have been applied to a variety of medical imaging tasks, showing great potential in image reconstruction (18), structural segmentation (19), lesion detection (20,21), and disease diagnosis (22,23), but their application to sacroiliitis remains relatively limited. One study reported that data augmentation and transfer learning yielded favorable results in the radiological assessment of ankylosing spondylitis across three CNN architectures (VGG-16, Inception-v3, and ResNet-101), with VGG-16 performing marginally better than the other two networks (24).

Bressem et al. (4) developed and evaluated an AI model for detecting radiographic ankylosing spondylitis on conventional X-rays and found that its diagnostic performance was comparable to that of experienced readers; however, they did not attempt AI-based grading of radiographic sacroiliitis. Lee et al. (25) used the DenseNet121 architecture for graded diagnosis of sacroiliitis, achieving strong performance and demonstrating the potential to improve diagnostic accuracy in clinical practice. However, to streamline training and avoid confusion, that study trained the AI model only on the right sacroiliac joint, a simplification that may overlook the diversity of sacroiliitis presentations. Our study employed ConvNeXt-T, a modern CNN architecture. Table S1 provides a detailed comparison of the diagnostic performance of various network models: ConvNeXt-T showed significantly better diagnostic performance in the validation set, with higher accuracy and AUC values than the other models, suggesting that it is the more reliable choice for automated grading of radiographic sacroiliitis. Using this network, we automated the grading of sacroiliitis in X-ray images that met the diagnostic criteria with good results, and the model's generalizability was supported by its performance on the external test set.

ConvNeXt-T is a pure CNN architecture that incorporates design principles from vision transformers, combining the strengths of traditional CNNs with the scalability and performance of transformer models. Its benefits for medical imaging include the following: (I) large 7×7 depthwise convolutions capture a wider spatial context, which is critical for anatomical interpretation; (II) a reduced parameter count (~40% fewer than conventional CNNs) helps mitigate data scarcity; and (III) open-source ImageNet-1K pretrained weights improve performance and speed up convergence. The model can therefore capture radiographic changes such as bone erosion, subchondral sclerosis, joint space narrowing, and ankylosis, and use these features for quantitative grading of sacroiliitis; in this study, it achieved high accuracy for the definitive diagnosis of sacroiliitis and good overall grading performance.

It is widely acknowledged that conventional radiographs have limited reliability for detecting sacroiliitis (24-28), especially early lesions. This study confirmed the poor inter-reader agreement driven by specific lesion types such as erosion: agreement between the two radiologists was low for grade 2 and grade 3 sacroiliitis. The deep learning model showed a similar pattern, with lower accuracy for grade 2 (55.88%) and grade 3 (56.06%) sacroiliitis than for grade 4 (90.00%). This discrepancy may be attributable to the definitions of these grades, which rely on features such as ill-defined joint spaces, surface roughness, and bony sclerosis that are difficult to quantify. With larger training datasets and iterative model refinement, we anticipate improvements in both sensitivity and accuracy.

Our study demonstrated that using an AI model as a reference can improve the diagnostic accuracy of junior radiologists: the grading accuracy of the two radiologists improved significantly from 75.00% and 74.08% to 88.89% and 80.90%, respectively. This benefit is particularly valuable for less experienced practitioners, who can use AI assistance to strengthen their diagnostic capabilities. Furthermore, our AI model processes a pelvic X-ray image in 0.28 seconds, whereas a radiologist requires an average of 10 seconds, making the model approximately 36 times faster (10/0.28 ≈ 35.7) and potentially improving diagnostic efficiency considerably.

The AI model developed in this study has certain limitations. First, the two hospitals that provided data were located in the same region, which may have introduced patient selection bias and limited the range of sacroiliitis presentations captured. Future research will address these limitations with larger samples and multicenter studies to further validate the model's effectiveness. Second, even radiologists with specialized training may miss subtle early changes of sacroiliitis on plain radiographs (8,29,30). The subjective nature of the gold standard can affect the training and validation of AI models and hinder their ability to detect such early changes. To mitigate this, three experienced readers (two senior radiologists and an adjudicating senior physician) annotated the images to minimize discrepancies and establish a standardized, consistent reference standard.

Conclusions

The findings of our study support the efficacy of deep learning models in detecting sacroiliitis through the analysis of conventional X-ray images. This approach has the potential to serve as a computer-assisted diagnostic tool, assisting healthcare professionals in overcoming the limitations associated with traditional X-rays for diagnostic purposes. The model’s high diagnostic accuracy and its ability to enhance the performance of less experienced practitioners underscore its potential as a valuable diagnostic resource.

Acknowledgments

None.

Footnote

Reporting Checklist: The authors have completed the TRIPOD+AI reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-2024-2742/rc

Funding: This work was funded by

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-2024-2742/coif). Q.Z., Y.X., and F.S. are employees of Shanghai United Imaging Intelligence Co., Ltd. Y.D. declares that the research was supported by the Research Project of Shaanxi Provincial Administration of Traditional Chinese Medicine (No. SZY-NLTL-2024-035), Medical Project of Innovation Ability Strong Foundation Program in Xi’an (No. 22YXYJ0069), Internal Fund of Xi’an No. 5 Hospital (No. 20231c04). Y.G. declares that the research was supported by the Key Research and Development Projects of Shaanxi Province (No. S2023-YBSF-338). The other authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments. The study was approved by the Ethics Committee of Xi’an No. 3 Hospital (approval No. SYLL-2025-024). Xi’an No. 5 Hospital was also informed and agreed to the study. Informed consent was waived for this retrospective analysis.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Taurog JD, Chhabra A, Colbert RA. Ankylosing Spondylitis and Axial Spondyloarthritis. N Engl J Med 2016;374:2563-74. [Crossref] [PubMed]

- Rudwaleit M, van der Heijde D, Landewé R, Akkoc N, Brandt J, Chou CT, Dougados M, Huang F, Gu J, Kirazli Y, Van den Bosch F, Olivieri I, Roussou E, Scarpato S, Sørensen IJ, Valle-Oñate R, Weber U, Wei J, Sieper J. The Assessment of SpondyloArthritis International Society classification criteria for peripheral spondyloarthritis and for spondyloarthritis in general. Ann Rheum Dis 2011;70:25-31. [Crossref] [PubMed]

- Danve A, Deodhar A. Treatment of axial spondyloarthritis: an update. Nat Rev Rheumatol 2022;18:205-16. [Crossref] [PubMed]

- Bressem KK, Vahldiek JL, Adams L, Niehues SM, Haibel H, Rodriguez VR, Torgutalp M, Protopopov M, Proft F, Rademacher J, Sieper J, Rudwaleit M, Hamm B, Makowski MR, Hermann KG, Poddubnyy D. Deep learning for detection of radiographic sacroiliitis: achieving expert-level performance. Arthritis Res Ther 2021;23:106. [Crossref] [PubMed]

- van der Linden S, Valkenburg HA, Cats A. Evaluation of diagnostic criteria for ankylosing spondylitis. A proposal for modification of the New York criteria. Arthritis Rheum 1984;27:361-8. [Crossref] [PubMed]

- Mandl P, Navarro-Compán V, Terslev L, Aegerter P, van der Heijde D, D'Agostino MA, et al. EULAR recommendations for the use of imaging in the diagnosis and management of spondyloarthritis in clinical practice. Ann Rheum Dis 2015;74:1327-39. [Crossref] [PubMed]

- Melchior J, Azraq Y, Chary-Valckenaere I, Rat AC, Reignac M, Texeira P, Blum A, Loeuille D. Radiography, abdominal CT and MRI compared with sacroiliac joint CT in diagnosis of structural sacroiliitis. Eur J Radiol 2017;95:169-76. [Crossref] [PubMed]

- van den Berg R, Lenczner G, Feydy A, van der Heijde D, Reijnierse M, Saraux A, Rahmouni A, Dougados M, Claudepierre P. Agreement between clinical practice and trained central reading in reading of sacroiliac joints on plain pelvic radiographs. Results from the DESIR cohort. Arthritis Rheumatol 2014;66:2403-11. [Crossref] [PubMed]

- Zhou Z, Gao Y, Zhang W, Zhang N, Wang H, Wang R, Gao Z, Huang X, Zhou S, Dai X, Yang G, Zhang H, Nieman K, Xu L. Deep Learning-based Prediction of Percutaneous Recanalization in Chronic Total Occlusion Using Coronary CT Angiography. Radiology 2023;309:e231149. [Crossref] [PubMed]

- Vieira S, Pinaya WH, Mechelli A. Using deep learning to investigate the neuroimaging correlates of psychiatric and neurological disorders: Methods and applications. Neurosci Biobehav Rev 2017;74:58-75. [Crossref] [PubMed]

- Li M, Ling R, Yu L, Yang W, Chen Z, Wu D, Zhang J. Deep Learning Segmentation and Reconstruction for CT of Chronic Total Coronary Occlusion. Radiology 2023;306:e221393. [Crossref] [PubMed]

- Chartrand G, Cheng PM, Vorontsov E, Drozdzal M, Turcotte S, Pal CJ, Kadoury S, Tang A. Deep Learning: A Primer for Radiologists. Radiographics 2017;37:2113-31. [Crossref] [PubMed]

- Sun H, Ren G, Teng X, Song L, Li K, Yang J, Hu X, Zhan Y, Wan SBN, Wong MFE, Chan KK, Tsang HCH, Xu L, Wu TC, Kong FS, Wang YXJ, Qin J, Chan WCL, Ying M, Cai J. Artificial intelligence-assisted multistrategy image enhancement of chest X-rays for COVID-19 classification. Quant Imaging Med Surg 2023;13:394-416. [Crossref] [PubMed]

- Liu Z, Mao H, Wu CY, Feichtenhofer C, Darrell T, Xie S. A ConvNet for the 2020s. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, LA, USA, 2022:11966-76. doi:10.1109/CVPR52688.2022.01167

- Sandler M, Howard A, Zhu M, Zhmoginov A, Chen LC. MobileNetV2: Inverted Residuals and Linear Bottlenecks. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Salt Lake City, UT, USA, 2018:4510-20. doi:10.1109/CVPR.2018.00474

- Howard A, Sandler M, Chen B, Wang W, Chen LC, Tan M, Chu G, Vasudevan V, Zhu Y, Pang R, Adam H, Le Q. Searching for MobileNetV3. 2019 IEEE/CVF International Conference on Computer Vision (ICCV). Seoul, Korea (South), 2019:1314-24. Available online: https://ieeexplore.ieee.org/document/9008835/

- McBee MP, Awan OA, Colucci AT, Ghobadi CW, Kadom N, Kansagra AP, Tridandapani S, Auffermann WF. Deep Learning in Radiology. Acad Radiol 2018;25:1472-80. doi:10.1016/j.acra.2018.02.018

- Koetzier LR, Mastrodicasa D, Szczykutowicz TP, van der Werf NR, Wang AS, Sandfort V, van der Molen AJ, Fleischmann D, Willemink MJ. Deep Learning Image Reconstruction for CT: Technical Principles and Clinical Prospects. Radiology 2023;306:e221257. [Crossref] [PubMed]

- Hallinan JTPD, Zhu L, Yang K, Makmur A, Algazwi DAR, Thian YL, Lau S, Choo YS, Eide SE, Yap QV, Chan YH, Tan JH, Kumar N, Ooi BC, Yoshioka H, Quek ST. Deep Learning Model for Automated Detection and Classification of Central Canal, Lateral Recess, and Neural Foraminal Stenosis at Lumbar Spine MRI. Radiology 2021;300:130-8. [Crossref] [PubMed]

- Becker AS, Marcon M, Ghafoor S, Wurnig MC, Frauenfelder T, Boss A. Deep Learning in Mammography: Diagnostic Accuracy of a Multipurpose Image Analysis Software in the Detection of Breast Cancer. Invest Radiol 2017;52:434-40. [Crossref] [PubMed]

- Yala A, Lehman C, Schuster T, Portnoi T, Barzilay R. A Deep Learning Mammography-based Model for Improved Breast Cancer Risk Prediction. Radiology 2019;292:60-6. [Crossref] [PubMed]

- Walsh SLF, Humphries SM, Wells AU, Brown KK. Imaging research in fibrotic lung disease; applying deep learning to unsolved problems. Lancet Respir Med 2020;8:1144-53. [Crossref] [PubMed]

- Brown JM, Campbell JP, Beers A, Chang K, Ostmo S, Chan RVP, Dy J, Erdogmus D, Ioannidis S, Kalpathy-Cramer J, Chiang MF; Imaging and Informatics in Retinopathy of Prematurity (i-ROP) Research Consortium. Automated Diagnosis of Plus Disease in Retinopathy of Prematurity Using Deep Convolutional Neural Networks. JAMA Ophthalmol 2018;136:803-10. [Crossref] [PubMed]

- Üreten K, Maraş Y, Duran S, Gök K. Deep learning methods in the diagnosis of sacroiliitis from plain pelvic radiographs. Mod Rheumatol 2023;33:202-6. [Crossref] [PubMed]

- Lee KH, Choi ST, Lee GY, Ha YJ, Choi SI. Method for Diagnosing the Bone Marrow Edema of Sacroiliac Joint in Patients with Axial Spondyloarthritis Using Magnetic Resonance Image Analysis Based on Deep Learning. Diagnostics (Basel) 2021.

- Christiansen AA, Hendricks O, Kuettel D, Hørslev-Petersen K, Jurik AG, Nielsen S, Rufibach K, Loft AG, Pedersen SJ, Hermansen LT, Østergaard M, Arnbak B, Manniche C, Weber U. Limited Reliability of Radiographic Assessment of Sacroiliac Joints in Patients with Suspected Early Spondyloarthritis. J Rheumatol 2017;44:70-7. [Crossref] [PubMed]

- van Tubergen A, Heuft-Dorenbosch L, Schulpen G, Landewé R, Wijers R, van der Heijde D, van Engelshoven J, van der Linden S. Radiographic assessment of sacroiliitis by radiologists and rheumatologists: does training improve quality? Ann Rheum Dis 2003;62:519-25. [Crossref] [PubMed]

- Spoorenberg A, de Vlam K, van der Linden S, Dougados M, Mielants H, van de Tempel H, van der Heijde D. Radiological scoring methods in ankylosing spondylitis. Reliability and change over 1 and 2 years. J Rheumatol 2004;31:125-32.

- Melchior J, Azraq Y, Chary-Valckenaere I, Rat AC, Texeira P, Blum A, Loeuille D. Radiography and abdominal CT compared with sacroiliac joint CT in the diagnosis of sacroiliitis. Acta Radiol 2017;58:1252-9. [Crossref] [PubMed]

- Yazici H, Turunç M, Ozdoğan H, Yurdakul S, Akinci A, Barnes CG. Observer variation in grading sacroiliac radiographs might be a cause of 'sacroiliitis' reported in certain disease states. Ann Rheum Dis 1987;46:139-45. [Crossref] [PubMed]