Fusing residual dense and attention in generative adversarial networks for super-resolution of medical images

Introduction

Medical images contain rich clinical feature information that provides an important basis for clinical diagnosis. However, low-resolution (LR) medical images may reduce diagnostic accuracy because lesion features are blurred. The super-resolution (SR) of medical images has therefore become a popular research area (1). The network structure of image SR methods may be based on convolutional operations (2,3), residual densely connected networks (4,5), multi-scale fusion (6,7), or generative adversarial networks (GANs) (8-10). These types of methods are also used in the field of medical imaging. For example, Qiu et al. (11) and Wang et al. (12) proposed methods that accomplish the SR of medical images using convolutional neural networks. Such methods first extract the features of the LR image with a convolutional layer, then map the features to the high-resolution (HR) image size via non-linear mapping, and finally map the feature map back to the HR image through a reconstruction layer. However, these methods depend heavily on the contextual content of the image region.

To address this issue, Zhu et al. (13), Wei et al. (14), Purohit et al. (15), and Lu et al. (16) proposed SR methods for medical images based on residual dense connectivity. These methods aim to enhance model performance through dense connections (17) and residual connections (18), which help to alleviate the over-smoothing problem that may occur as network depth increases, and improve the ability of the model to capture complex structures. However, they increase the number of network parameters and the consumption of computational resources.

To address these issues, Zhu et al. (19), Liu et al. (20), and Pang et al. (21) proposed medical image SR methods based on multi-scale fusion (22). These methods mainly extract features at different scales, using convolution kernels of different sizes or multi-scale pooling modules. This helps the model to better handle data with multi-scale features and to better capture the details and structures in images, but the methods are sensitive to image noise and require a large number of HR images.

To address these issues, Wang et al. (23), Du et al. (24), Jiang et al. (25), and Ahmad et al. (26) proposed SR methods for medical images based on GANs (27), which are a type of supervised learning. Through the two-player minimax game between the generator and the discriminator, the generative model can estimate the SR image from the distribution of the sample data. This approach effectively alleviates the problem of insufficient HR images, but it suffers from vanishing and exploding gradients. A number of other methods (28) have also been proposed for the SR of medical images, but each has its own limitations (29).

To address the above issues, we proposed the fusing residual dense and attention in generative adversarial network (FRDAGAN) for medical image SR. The study’s main contributions include that it:

- Combined residual densely connected networks with GANs to fully utilize all the hierarchical features of the original LR images, while preventing the gradient decay that occurs when the number of network layers increases;

- Incorporated the attention gate (AG) network structure into the GAN to suppress the noise information of the input image and improve the signal-to-noise ratio value of the image;

- Used a hybrid loss function, combining content loss, perceptual loss, and Wasserstein gradient penalty loss, as a new loss function to prevent vanishing and exploding gradients.

We present this article in accordance with the CLEAR reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-2025-3/rc).

Methods

GAN

The study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments.

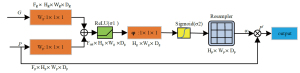

A GAN is a deep generative model based on adversarial learning (30) that consists of a generative network and a discriminative network. The generative network generates fake data from noisy input data, while the discriminative network judges the authenticity of the fake and real data it receives. The generative network tries to evade detection by the discriminator by continuously optimizing the generated data, while the discriminator increases the accuracy of its own judgement by optimizing its network; the two thus form an adversarial relationship. Figure 1 shows the network structure.

The LR images are input into the generator, which produces generated SR images; the generated SR images and the real HR images are then input into the discriminator. The discriminator compares the real and generated images. If the generated SR image satisfies the discrimination condition for a real image, the output is true; otherwise, the generator and discriminator continue to be updated until the condition is satisfied.

Residual dense network (RDN)

An RDN (5) is a deep-learning network based on residual and dense connectivity for image SR reconstruction. The RDN comprises a residual and dense network, which uses a dense block structure to allow information to be transmitted within each block, improving the utilization of information. At the same time, to avoid gradient decay, the RDN uses the strategy of residual connection, and the input and output of each dense block are added together to ensure the flow of information between each block. The residual network structure (Figure 2A) and the dense network structure (Figure 2B) are combined to form the RDN structure (Figure 2C).

According to the principle of residual networks, the gradient decay problem can occur when the input variable $x_{i-1}$ passes through the mapping $F$. Through an internal skip connection, the input variable $x_{i-1}$ and the mapped result $F(x_{i-1})$ are added to enhance the output. As the number of network layers increases, overfitting will not occur. Eq. [1] defines the residual network as follows:

$$x_i = x_{i-1} + F(x_{i-1}) \tag{1}$$

The residual network strengthens the connection between blocks, but cannot fuse local features within blocks. The dense block refers to the fusion of local feature information through dense connection. The dense connection block strengthens the fusion between internal features (i.e., it is the accumulation of all the variables before $x_i$ and the residual mapping outputs from $x_{i-1}$, where $x_i$ is a feature of layer $i$). Eq. [2] defines the dense block as follows:
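For orientation, the dense connection introduced by Huang et al. (17) is commonly written in the form below, where $[\cdot]$ denotes channel-wise concatenation of the earlier feature maps and $H_i$ is the composite mapping of layer $i$ (both symbols are borrowed from that work and are not defined in this article). This is the standard DenseNet form and is given only as a reference point; the expression used for the dense block in this study may differ in detail.

$$x_i = H_i\left([x_0, x_1, \ldots, x_{i-1}]\right)$$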

However, the dense block network structure only focuses on the internal feature fusion of blocks, and lacks connections between blocks, which leads to the problem of gradient decay between blocks. Therefore, we built the residual dense block, which is a kind of global residual connection. Eq. [3] defines the residual dense block as follows:

where $\beta$ is the residual scaling parameter, with $\beta = 0.2$. For the intermediate layers ($i < n$), the variable $x_{i-1}$ is connected to the mapping result of $x_{i-1}$; when $i = n$, the initial variable $x_0$ is skip-connected to the output of the residual dense block.
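The skip connections described here can be illustrated with a minimal sketch. It assumes one possible reading of the text: each intermediate step adds the scaled mapping β·F(x_{i−1}) to its input, and the final step adds the initial input x_0 to the scaled block output. The helper names and the toy list-based vectors are illustrative, dense concatenation inside the block is omitted for brevity, and the code is not the authors' implementation.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Layer = Callable[[Vector], Vector]


def add(a: Vector, b: Vector) -> Vector:
    """Element-wise sum of two equally sized vectors."""
    return [u + v for u, v in zip(a, b)]


def scale(a: Vector, factor: float) -> Vector:
    """Multiply every element by a scalar."""
    return [factor * u for u in a]


def residual_scaled_block(x0: Vector, layers: Sequence[Layer], beta: float = 0.2) -> Vector:
    """Toy block with local and global skip connections and residual scaling.

    Assumed reading: x_i = x_{i-1} + beta * F_i(x_{i-1}) for the inner
    layers, then output = x_0 + beta * x_n for the global skip.
    """
    x = x0
    for layer in layers:
        # Local skip: the layer input is added to its scaled mapping.
        x = add(x, scale(layer(x), beta))
    # Global skip: the block input is added to the scaled block output.
    return add(x0, scale(x, beta))


if __name__ == "__main__":
    double = lambda v: [2.0 * u for u in v]  # stand-in for a learned mapping
    print(residual_scaled_block([1.0, 2.0], [double, double]))
```

With β = 0.2 the residual branch contributes only a fraction of its output, which is the usual motivation for residual scaling in deep SR generators.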

AG

The RDN realizes the fusion between features, while the AG suppresses image noise. The AG (31) is an attention mechanism (32). As a feature weight allocation mechanism, it highlights important feature information and suppresses background feature information by assigning weights according to the importance of the features. In the AG (Figure 3), the input features G and P each pass through a 1×1×1 convolution, and the features at corresponding positions are added; negative values are then suppressed by the rectified linear unit (ReLU) function, and the result is normalized by the sigmoid function. The processed result is multiplied channel-wise with the initial feature P to obtain the new, re-weighted feature Pl. The operations on the input features G and P can be represented by Eqs. [4,5], which are expressed as follows:

where the first activation is the ReLU function and the second is the sigmoid activation function; the learnable quantities are the convolution parameters and the offset (bias) terms.
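The gating steps can be sketched for a single channel, where a 1×1×1 convolution reduces to a scalar multiplication at each position. The sketch follows the additive attention gate of Oktay et al. (31), which applies one further linear map before the sigmoid; the names `w_g`, `w_p`, `b_g`, `psi`, and `b_psi` are hypothetical placeholders, and the flattened list inputs stand in for feature maps.

```python
import math
from typing import List, Sequence


def relu(value: float) -> float:
    """Rectified linear unit: suppresses negative values."""
    return max(0.0, value)


def sigmoid(value: float) -> float:
    """Logistic function: maps any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-value))


def attention_gate(
    g: Sequence[float],
    p: Sequence[float],
    w_g: float = 1.0,
    w_p: float = 1.0,
    b_g: float = 0.0,
    psi: float = 1.0,
    b_psi: float = 0.0,
) -> List[float]:
    """Single-channel additive attention gate over flattened features.

    For each position: add the linearly mapped G and P features, apply
    ReLU, map again, squash with the sigmoid to get a weight in (0, 1),
    and multiply the original P feature by that weight.
    """
    gated = []
    for g_k, p_k in zip(g, p):
        q = psi * relu(w_g * g_k + w_p * p_k + b_g) + b_psi
        alpha = sigmoid(q)          # attention coefficient for this position
        gated.append(alpha * p_k)   # re-weighted feature
    return gated


if __name__ == "__main__":
    print(attention_gate(g=[0.0, 0.0], p=[1.0, -2.0]))
```

Because the coefficient lies strictly between 0 and 1, the gate can only attenuate a feature, never amplify it, which matches its role of suppressing background and noise responses.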

Hybrid loss function

In the RDN and AG subsections, the image features are processed, but issues of vanishing and exploding gradient arise in the GAN during training. In this study, we used a hybrid loss function to address the vanishing and exploding gradient issues. The hybrid loss function contains the content loss function, perceptual loss function, and adversarial loss function.

The content loss function is shown in Eq. [6], which is expressed as follows:

$$\mathrm{MSE} = \frac{1}{H \times W} \sum_{a=1}^{H} \sum_{b=1}^{W} \left( I_{hr}(a, b) - I_{sr}(a, b) \right)^2 \tag{6}$$

where MSE is the mean square error, which is calculated from the squared pixel difference at each corresponding position of the two images; $I_{hr}$ is the real HR image; and $I_{sr}$ is the generated SR image, with an image size of $H \times W$.
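As a small worked example of the content loss, the sketch below computes the mean square error between two images stored as nested lists. The function name and the list-of-rows representation are illustrative choices, not part of the original method.

```python
from typing import Sequence

Image = Sequence[Sequence[float]]


def mse(hr: Image, sr: Image) -> float:
    """Mean of the squared pixel differences between two H x W images."""
    height, width = len(hr), len(hr[0])
    if len(sr) != height or any(len(row) != width for row in sr):
        raise ValueError("images must have the same H x W size")
    total = sum(
        (a - b) ** 2
        for row_hr, row_sr in zip(hr, sr)
        for a, b in zip(row_hr, row_sr)
    )
    return total / (height * width)


if __name__ == "__main__":
    hr = [[0.0, 2.0], [4.0, 6.0]]
    sr = [[1.0, 2.0], [4.0, 4.0]]
    # Squared differences are 1, 0, 0, 4, so the mean is 5 / 4.
    print(mse(hr, sr))
```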

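The visual geometry group (VGG) based perceptual loss function is expressed in Eq. [7] as follows:

$$L_{VGG} = \frac{1}{W_{x,y} H_{x,y}} \sum_{a=1}^{W_{x,y}} \sum_{b=1}^{H_{x,y}} \left( V_{x,y}(I_{hr})_{a,b} - V_{x,y}(I_{sr})_{a,b} \right)^2 \tag{7}$$

where $V_{x,y}$ indicates the feature map obtained by the $y$th convolution (after activation) before the $x$th maximum-pooling layer in the VGG19 network; and $W_{x,y}$ and $H_{x,y}$ describe the dimensions of the respective feature maps in the VGG network.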

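An important feature of the Wasserstein generative adversarial network (WGAN) is that it clips all the weights of the discriminator to a constant range $[-c, c]$ to satisfy the conditions for deriving the Wasserstein distance, which better overcomes the vanishing gradient problem that occurs in GANs (33). However, with the clipping strategy, the weights of the discriminator tend toward either the minimum or the maximum, which causes the discriminator to behave like a binary network and weakens the non-linear modeling ability of the GAN. Therefore, a gradient penalty (34) has been proposed to replace the clipping operation. This technique restricts the gradient of the discriminator from changing rapidly by adding a new term to the adversarial loss. In this study, the loss function of the Wasserstein generative adversarial network with gradient penalty (WGANGP) was used as the adversarial loss function. The WGAN loss is defined in Eq. [8] and the WGANGP loss is defined in Eq. [9], which are expressed as follows:

$$L_{WGAN} = \mathbb{E}_{\tilde{x} \sim p_g}\left[ D(\tilde{x}) \right] - \mathbb{E}_{x \sim p_r}\left[ D(x) \right] \tag{8}$$

$$L_{WGANGP} = L_{WGAN} + \lambda \, \mathbb{E}_{\hat{x} \sim p_{\hat{x}}}\left[ \left( \lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1 \right)^2 \right] \tag{9}$$

where $p_r$ and $p_g$ are the distributions of the real and generated images, $\hat{x}$ is sampled along straight lines between pairs of real and generated images, and $\lambda$ is the penalty coefficient (33,34).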
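The contrast between weight clipping and the gradient penalty can be illustrated with a linear critic D(x) = w·x + b. For this special case the gradient with respect to the input equals w everywhere, so the penalty term reduces to λ(‖w‖₂ − 1)². The sketch below uses that simplification; λ = 10 and c = 0.01 are the defaults reported in the cited WGAN papers (33,34), not values stated for this study, and the function names are illustrative.

```python
import math
from typing import List, Sequence


def clip_weights(weights: Sequence[float], c: float = 0.01) -> List[float]:
    """WGAN-style clipping: force every weight into the range [-c, c]."""
    return [max(-c, min(c, w)) for w in weights]


def gradient_penalty_linear(weights: Sequence[float], lam: float = 10.0) -> float:
    """Gradient penalty for a linear critic D(x) = w . x + b.

    The input gradient of a linear critic is w at every point, so the
    penalty lam * (||grad||_2 - 1)^2 does not depend on the sample.
    """
    grad_norm = math.sqrt(sum(w * w for w in weights))
    return lam * (grad_norm - 1.0) ** 2


if __name__ == "__main__":
    w = [3.0, -4.0]
    print(clip_weights(w))             # both weights pushed to the bounds
    print(gradient_penalty_linear(w))  # large penalty: gradient norm is 5
```

Clipping drives both weights to the bounds of the allowed range, which is the saturation effect described in the text, whereas the penalty leaves the weights free and only charges a cost for a gradient norm that departs from 1.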

Therefore, in this study, a hybrid loss function was adopted, as defined in Eq. [10], which is expressed as follows:
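A weighted sum of the three terms can be sketched as follows. The sketch assumes that the content loss is unweighted and that the two hyperparameter values reported in the Configuration subsection (6×10−3 and 1×10−3) weight the perceptual and adversarial terms, in that order; the text does not state this assignment explicitly, and the function and argument names are illustrative.

```python
def hybrid_loss(
    content: float,
    perceptual: float,
    adversarial: float,
    w_perceptual: float = 6e-3,   # assumed weight of the perceptual term
    w_adversarial: float = 1e-3,  # assumed weight of the adversarial term
) -> float:
    """Weighted sum of content, perceptual, and adversarial loss values."""
    return content + w_perceptual * perceptual + w_adversarial * adversarial


if __name__ == "__main__":
    # Example values only; real losses come from the training batch.
    print(hybrid_loss(content=1.0, perceptual=100.0, adversarial=-50.0))
```

Small weights on the perceptual and adversarial terms keep the pixel-wise content term dominant, so the two auxiliary terms act as regularizers rather than as the main training signal.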

Network structure

Building on the components described above, the proposed method generates new SR output images through the adversarial training of the generator and discriminator using the LR and HR input images. Its calculation is represented by Eq. [11], which is expressed as follows:

$$\min_G \max_D V(D, G) = \mathbb{E}_{I_{hr} \sim p_{train}(I_{hr})}\left[ \log D(I_{hr}) \right] + \mathbb{E}_{I_{lr} \sim p_G(I_{lr})}\left[ \log\left( 1 - D(G(I_{lr})) \right) \right] \tag{11}$$

where $D$ is the discriminator, $G$ is the generator, $I_{hr}$ is the HR image, $I_{lr}$ is the LR image, $p_{train}(I_{hr})$ is the probability distribution of the training set $I_{hr}$, and $p_G(I_{lr})$ is the probability distribution of $I_{lr}$ after generator processing.

The generator network structure is shown in Figure 4. The input LR image with noisy information is processed by the convolution module and then by the N-layer residual dense block, which outputs the residual dense feature matrix. Next, the feature size is adjusted through a convolution layer and batch normalization. The processed matrix G and the initial matrix P are input into the AG module. Finally, the generated SR image and the HR image are fed into the discriminator, and the SR image results are output after discrimination with the $L_{SR}$ loss function and the back-propagation mechanism.
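Assuming Eq. [11] takes the standard minimax form of Goodfellow et al. (27), the value being optimized can be estimated from a batch of discriminator outputs. In the sketch below, `d_real` holds the discriminator's outputs for HR images and `d_fake` its outputs for generated SR images, both as probabilities in (0, 1); the names are illustrative.

```python
import math
from typing import Sequence


def gan_value(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Batch estimate of V(D, G) = E[log D(hr)] + E[log(1 - D(G(lr)))].

    The discriminator tries to increase this value, while the
    generator tries to decrease it.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


if __name__ == "__main__":
    # A discriminator that cannot tell real from generated outputs 0.5
    # for every image, giving V = 2 * log(0.5) = -log(4).
    print(gan_value([0.5, 0.5], [0.5, 0.5]))
```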

Results

During the experiment, smaller size images were obtained by cropping the input images. In this study, the input images were cropped to 88 pixels. These smaller size images were used as the LR images, and the original input images were used as the HR images to train the network parameters. When the training of the model parameters was completed, the original input image was up-sampled by the generator to obtain the larger size SR images.

Configuration

The experiment was run on a Linux server with the following configuration: Ubuntu 20.04, Python 3.10, Stable Diffusion v.1-5, Compute Unified Device Architecture (CUDA) 11.7, cuDNN 8, PyTorch 2, and JupyterLab. The computing resources were as follows: an NVIDIA Tesla T4 GPU (16 GB+, 8+ TFLOPS single precision); CPU: 8 cores; and memory: 32 GB. Since the sizes of the input images were not uniform, the images were cropped to 88 pixels before being input into the model. The size of the output image was scaled up according to the adopted coefficient, such as 2, 4, or 8. In the hybrid loss function, the hyperparameters were $6 \times 10^{-3}$ and $1 \times 10^{-3}$.
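The size arithmetic implied by the crop and the scale coefficients can be sketched as follows. The sketch assumes a square 88×88 centre crop, which the text does not specify (the crop could equally be random), and the function names are illustrative.

```python
from typing import Tuple

CROP_SIZE = 88  # crop size reported in the text; a square crop is assumed


def center_crop_box(height: int, width: int, crop: int = CROP_SIZE) -> Tuple[int, int, int, int]:
    """Return (top, left, bottom, right) of a centred crop x crop window."""
    if height < crop or width < crop:
        raise ValueError("image is smaller than the crop size")
    top = (height - crop) // 2
    left = (width - crop) // 2
    return top, left, top + crop, left + crop


def upscaled_size(size: int, scale: int) -> int:
    """Side length of the SR output for an integer scale coefficient."""
    return size * scale


if __name__ == "__main__":
    print(center_crop_box(360, 360))
    print([upscaled_size(CROP_SIZE, s) for s in (2, 4, 8)])
```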

Dataset

Dataset 1 (35) refers to the LUNA16 dataset, which contains two-dimensional medical images and is a subset of the largest common lung nodule dataset, the Lung Image Database Consortium and Image Database Resource Initiative (LIDC-IDRI). This dataset was collected by the National Cancer Institute and is widely used to detect and classify early lung cancer. It contains 1,186 items of medical imaging data from the low-dose lung computed tomography images of 888 patients in .mhd format. The minimum value of each dimension of the image is [300, 300], the median value of each dimension is [360, 360], and the maximum value of each dimension is [400, 400]. We randomly divided the LUNA16 dataset into training, testing, and validation sets at a ratio of 7:2:1.

Dataset 2 (36) refers to a brain tumor magnetic resonance imaging (MRI) segmentation dataset, which contains three-dimensional medical images, including brain MRI images and manual fluid-attenuated inversion recovery anomaly segmentation images. The image dataset was derived from the Cancer Imaging Archive, and contains the images of 110 patients from The Cancer Genome Atlas low-grade glioma collection. Each patient provided 40 to 150 brain MRI scans. After data preprocessing, 1,335 images in .tif format were retained with a uniform image dimension size of [256, 256], and the brain MRI dataset was randomly divided into training, testing, and validation sets at a ratio of 7:2:1.
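A random 7:2:1 split of this kind can be sketched with the standard library. The seed, the function name, and the rule of assigning any rounding remainder to the validation set are assumptions made for illustration; the text does not describe how the split was implemented.

```python
import random
from typing import List, Sequence, Tuple


def split_indices(
    n_items: int, ratios: Sequence[int] = (7, 2, 1), seed: int = 0
) -> Tuple[List[int], List[int], List[int]]:
    """Shuffle item indices and split them into train, test, validation."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)  # reproducible shuffle
    total = sum(ratios)
    n_train = n_items * ratios[0] // total
    n_test = n_items * ratios[1] // total
    train = indices[:n_train]
    test = indices[n_train:n_train + n_test]
    validation = indices[n_train + n_test:]  # takes any rounding remainder
    return train, test, validation


if __name__ == "__main__":
    for name, size in (("LUNA16", 1186), ("Brain MRI", 1335)):
        parts = split_indices(size)
        print(name, [len(part) for part in parts])
```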

Evaluation indicators

There are two commonly used evaluation indicators for SR: the peak signal-to-noise ratio (PSNR) and the structural similarity index measure (SSIM) (37). These two indexes are the most basic ones used to measure the quality of compressed or reconstructed SR images. The PSNR (dB) is defined in Eq. [12] as follows:

$$\mathrm{PSNR} = 10 \log_{10}\left( \frac{MAX_I^2}{\mathrm{MSE}} \right) \tag{12}$$

where MSE is the mean square error value (see Eq. [6]), and $MAX_I$ is the maximum pixel value in image $I$. In this study, the image was converted to YCbCr format, and the PSNR of the Y component was then calculated; a larger PSNR value indicated a better result.
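The PSNR calculation can be sketched as follows. The sketch assumes 8-bit images (a maximum pixel value of 255) and, for the luminance conversion, the ITU-R BT.601 full-range weights; neither convention is stated in the text, and the function names are illustrative.

```python
import math
from typing import Sequence

Image = Sequence[Sequence[float]]


def rgb_to_luma(r: float, g: float, b: float) -> float:
    """Y component under BT.601 full-range weights (assumed convention)."""
    return 0.299 * r + 0.587 * g + 0.114 * b


def mse(hr: Image, sr: Image) -> float:
    """Mean of the squared pixel differences between two H x W images."""
    count = len(hr) * len(hr[0])
    total = sum(
        (a - b) ** 2
        for row_hr, row_sr in zip(hr, sr)
        for a, b in zip(row_hr, row_sr)
    )
    return total / count


def psnr(hr: Image, sr: Image, max_i: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    error = mse(hr, sr)
    if error == 0.0:
        return math.inf
    return 10.0 * math.log10(max_i ** 2 / error)


if __name__ == "__main__":
    hr = [[0.0, 0.0], [0.0, 0.0]]
    sr = [[25.5, 25.5], [25.5, 25.5]]
    # A uniform error of one tenth of the peak value gives 20 dB.
    print(psnr(hr, sr))
```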

The SSIM assumes that the human visual system is highly adapted to extract image structures, and measures the structural similarity between images based on comparisons of luminance, contrast, and structure. It is expressed in Eq. [13] as follows:

$$\mathrm{SSIM}(x, y) = \frac{(2 \mu_x \mu_y + c_1)(2 \sigma_{xy} + c_2)}{(\mu_x^2 + \mu_y^2 + c_1)(\sigma_x^2 + \sigma_y^2 + c_2)} \tag{13}$$

where $x$ and $y$ denote two images; $\mu$ and $\sigma^2$ are the mean and variance, respectively; $\sigma_{xy}$ is the covariance between $x$ and $y$; $c_1$ and $c_2$ are constant relaxation terms; $c_1 = (k_1 \times L)^2$; $k_1$ is a constant with a default value of 0.01; $c_2 = (k_2 \times L)^2$; $k_2$ is a constant with a default value of 0.03; and $L$ is the range of pixel values.
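The SSIM formula can be sketched with global image statistics. This is a simplification: practical implementations usually average the index over local sliding windows, whereas the sketch treats the whole image as one window. It also assumes 8-bit images (L = 255) and population statistics (division by n); these choices and the function name are illustrative.

```python
from typing import Sequence


def ssim_global(
    x: Sequence[float],
    y: Sequence[float],
    pixel_range: float = 255.0,  # assumed value of L for 8-bit images
    k1: float = 0.01,
    k2: float = 0.03,
) -> float:
    """SSIM of two flattened images using a single global window."""
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    c1 = (k1 * pixel_range) ** 2
    c2 = (k2 * pixel_range) ** 2
    numerator = (2.0 * mu_x * mu_y + c1) * (2.0 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


if __name__ == "__main__":
    reference = [10.0, 50.0, 90.0, 130.0]
    degraded = [20.0, 40.0, 100.0, 120.0]
    print(ssim_global(reference, reference))  # identical images score 1
    print(ssim_global(reference, degraded))   # degraded image scores below 1
```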

Qualitative results

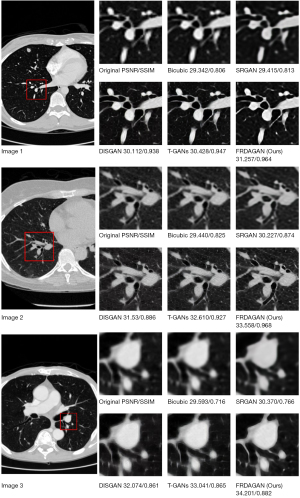

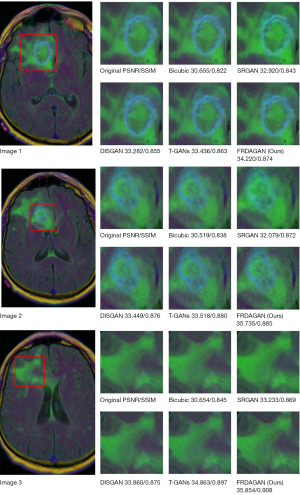

To validate the performance of the proposed method, we compared it with the super-resolution generative adversarial network (SRGAN) (8), wavelet-informed discriminator guides generative adversarial network (DISGAN) (23), and transformer and generative adversarial network (T-GAN) (24) methods on the Luna16 and brain MRI image datasets. Figures 5 and 6 show the results after 100 rounds of iteration (×4 up-sampling).

Figure 5 shows the SR results of the different methods on the Luna16 validation dataset. Because the feature area of lung nodules is small, zooming in on that area of the SR image can help clinicians to judge the type of lung nodule more clearly, which can aid later treatment. In the lesion area, the images generated by the proposed method were visually sharper and had better evaluation metrics than those of the other methods, indicating the advantage of the proposed method.

Figure 6 shows the SR images generated by the different methods on the brain MRI validation dataset; the red area indicates low-grade brain glioma. Clinicians determine the type of glioma based on its shape; thus, a clear image is very important for clinical diagnosis. Visually, our proposed method produced sharper SR images than the other methods. We further validated the effectiveness of our proposed method using the average evaluation index results of the different methods on the test dataset (Table 1).

Table 1

| Methods | Luna16 PSNR (dB)↑ | Luna16 SSIM↑ | Brain MRI PSNR (dB)↑ | Brain MRI SSIM↑ |
|---|---|---|---|---|
| SRGAN | 30.004±0.442 | 0.818±0.042 | 32.744±0.348 | 0.861±0.037 |
| DISGAN | 31.239±0.527 | 0.895±0.104 | 33.530±0.412 | 0.869±0.080 |
| T-GANs | 32.026±0.363 | 0.913±0.037 | 33.939±0.346 | 0.880±0.031 |
| FRDAGAN (ours) | 33.005±0.157 | 0.938±0.028 | 35.270±0.183 | 0.889±0.024 |

Data are presented as average ± standard deviation. We used cross-validation to run different comparison methods on the test dataset to get the average result values of the evaluation metrics. The table indicates the average results of different methods on the test dataset of the Luna16 and Brain MRI. “↑” indicates that the higher the value the better the effect. DISGAN, wavelet-informed discriminator guides generative adversarial network; FRDAGAN, fusing residual dense and attention in generative adversarial network; MRI, magnetic resonance imaging; PSNR, peak signal-to-noise ratio; SRGAN, super-resolution generative adversarial network; SSIM, structural similarity index measure; T-GANs, transformer and generative adversarial network-based super-resolution reconstruction network.

The average values in Table 1 were obtained by running each method on the test dataset with cross-validation. On both datasets, our proposed method achieved the highest PSNR and SSIM values and the smallest standard deviations among the compared methods, which indicates its advantage over the other methods.
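Entries in the "average ± standard deviation" format used in Table 1 can be produced from per-fold scores as sketched below. The sketch uses the sample standard deviation; the text does not say whether the sample or population form was used, and the fold scores in the example are made-up placeholders, not results from this study.

```python
import statistics
from typing import Sequence


def summarize(scores: Sequence[float], digits: int = 3) -> str:
    """Format per-fold scores as 'mean±standard deviation'."""
    mean = statistics.mean(scores)
    spread = statistics.stdev(scores)  # sample standard deviation (assumed)
    return f"{mean:.{digits}f}±{spread:.{digits}f}"


if __name__ == "__main__":
    placeholder_fold_scores = [1.0, 2.0, 3.0]  # illustrative values only
    print(summarize(placeholder_fold_scores))
```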

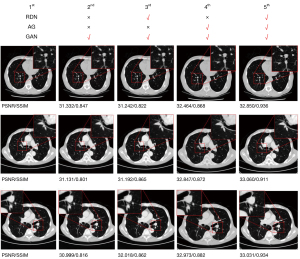

Ablation studies

To further verify the effectiveness of the proposed method, we conducted an ablation study to compare the contribution of each component. In Figure 7, the 1st image is the original image, the 2nd image is the SR image generated by the GAN, the 3rd image is the SR image generated using the residual dense block and GAN structure, the 4th image is the SR image generated using the AG and GAN structure, and the 5th image is the SR image generated using the method proposed in this study. The results showed that the 3rd image had a higher SSIM value than the 2nd image, supporting the validity of the RDN; the 4th image showed a significant improvement in the PSNR value compared to the 2nd image, supporting the validity of the AG; and the 5th image showed significant improvements in both the PSNR and SSIM compared to the 2nd, 3rd, and 4th images. These results show the effectiveness of the method proposed in this study.

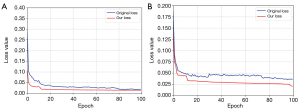

To verify the stability and convergence of the hybrid loss function in the adversarial network, we plotted the loss values on the Luna16 and brain MRI datasets during training. The experimental results are shown in Figure 8. As Figure 8A,8B show, the value of the hybrid loss function proposed in this study was smaller than that of the original loss function during the initial iterations. In addition, our loss function tended to converge at around epoch 20, while the original loss tended to converge only in later iterations. Therefore, our loss function converged faster and was more stable than the original GAN loss.

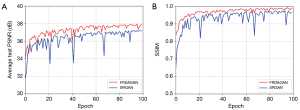

To verify the performance of the proposed method during training, we performed comparative experiments against the basic SRGAN method, using the Luna16 dataset as an example. As the curves in Figure 9 show, the PSNR and SSIM values of the proposed method were significantly better than those of the SRGAN throughout the iterative process, which shows that the proposed method had better performance. The proposed method also showed smaller oscillations and was more stable during the iteration process.

Discussion

The method proposed in this study achieved good results in terms of the SR of medical images; however, it still had some limitations. The validation image data should follow the same probability distribution as the training data, or a similar one. The more the probability distributions differ, the worse the SR result (38,39). Therefore, to improve this method, more data drawn from the same distribution are needed. In addition, the MRI data modality is an important factor that constrained this study (40). In the future, we will further investigate the SR of medical images with different probability distributions and different modalities.

Conclusions

This study proposed the FRDAGAN for the SR reconstruction of medical images. The method transmitted feature information across different layers through the residual dense connection structure, and avoided the problem of gradient decay caused by an increase in network depth. The PSNR of the SR images was improved by the AG, which suppressed the interference of noisy information. Finally, a hybrid loss function consisting of a content loss function, a perceptual loss function, and an adversarial loss function prevented vanishing and exploding gradients. Our experiments showed that the proposed method clearly improved the SR reconstruction of medical images, demonstrating its effectiveness and its advantage over the compared methods.

Acknowledgments

None.

Footnote

Reporting Checklist: The authors have completed the CLEAR reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-2025-3/rc

Funding: This work was supported by

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-2025-3/coif). The authors have no conflicts of interest to declare.

Ethical Statement:

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. He J, Ma H, Guo M, Wang J, Wang Z, Fan G. Research into super-resolution in medical imaging from 2000 to 2023: bibliometric analysis and visualization. Quant Imaging Med Surg 2024;14:5109-30.

2. Liu K, Yu H, Zhang M, Zhao L, Wang X, Liu S, Li H, Yang K. A Lightweight Low-dose PET Image Super-resolution Reconstruction Method based on Convolutional Neural Network. Curr Med Imaging 2023;19:1427-35.

3. Jia S, Zhu S, Wang Z, Xu M, Wang W, Guo Y. Diffused Convolutional Neural Network for Hyperspectral Image Super-Resolution. IEEE Transactions on Geoscience and Remote Sensing 2023;61:1-15.

4. Sui J, Ma X, Zhang X, Pun M. GCRDN: Global Context-Driven Residual Dense Network for Remote Sensing Image Superresolution. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 2023;16:4457-68.

5. Zhang Y, Tian Y, Kong Y, Zhong B, Fu Y. Residual dense network for image super-resolution. IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2018:2472-81.

6. Liu F, Yang X, De Baets B. A deep recursive multi-scale feature fusion network for image super-resolution. Journal of Visual Communication and Image Representation 2023;90:103730.

7. Ma T, Wang H, Liang J, Peng J, Ma Q, Kai Z. MSMA-Net: An Infrared Small Target Detection Network by Multiscale Super-Resolution Enhancement and Multilevel Attention Fusion. IEEE Transactions on Geoscience and Remote Sensing 2023;62:1-20.

8. Guerreiro J, Tomás P, Garcia N, Aidos H. Super-resolution of magnetic resonance images using Generative Adversarial Networks. Comput Med Imaging Graph 2023;108:102280.

9. Angarano S, Salvetti F, Martini M, Chiaberge M. Generative Adversarial Super-Resolution at the edge with knowledge distillation. Engineering Applications of Artificial Intelligence 2023;123:106407.

10. Cui J, Miao S, Wang J, Chen J, Dong C, Hao D, Li J. The super-resolution reconstruction in diffusion-weighted imaging of preoperative rectal MR using generative adversarial network (GAN): Image quality and T-stage assessment. Clin Radiol 2024;79:e1530-8.

11. Qiu D, Zheng L, Zhu J, Huang D. Multiple improved residual networks for medical image super-resolution. Future Generation Computer Systems 2021;116:200-8.

12. Wang C, Lv X, Shao M, Qian Y, Zhang Y. A novel fuzzy hierarchical fusion attention convolution neural network for medical image super-resolution reconstruction. Information Sciences 2023;622:424-36.

13. Zhu D, Qiu D. Residual dense network for medical magnetic resonance images super-resolution. Comput Methods Programs Biomed 2021;209:106330.

14. Wei S, Wu W, Jeon G, Ahmad A, Yang X. Improving resolution of medical images with deep dense convolutional neural network. Concurrency and Computation: Practice and Experience 2020;32:e5084.

15. Purohit K, Mandal S, Rajagopalan AN. Scale-recurrent multi-residual dense network for image super-resolution. European Conference on Computer Vision (ECCV) Workshops. 2018:1-17.

16. Lu W, Song Z, Chu J. A novel 3D medical image super-resolution method based on densely connected network. Biomedical Signal Processing and Control 2020;62:102120.

17. Huang G, Liu Z, Van der Maaten L, Weinberger KQ. Densely connected convolutional networks. IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017:2261-9.

18. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2016:770-8.

19. Zhu J, Tan C, Yang J, Yang G, Lio' P. Arbitrary Scale Super-Resolution for Medical Images. Int J Neural Syst 2021;31:2150037.

20. Liu C, Wu X, Yu X, Tang Y, Zhang J, Zhou J. Fusing multi-scale information in convolution network for MR image super-resolution reconstruction. Biomed Eng Online 2018;17:114.

21. Pang K, Zhao K, Hung ALY, Zheng H, Yan R, Sung K. NExpR: Neural Explicit Representation for fast arbitrary-scale medical image super-resolution. Comput Biol Med 2025;184:109354.

22. Lin TY, Dollár P, Girshick R, He K, Hariharan B, Belongie S. Feature pyramid networks for object detection. IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017:936-44.

23. Wang Q, Mahler L, Steiglechner J, Birk F, Scheffler K, Lohmann G. DISGAN: wavelet-informed discriminator guides GAN to MRI super-resolution with noise cleaning. IEEE/CVF International Conference on Computer Vision Workshops (ICCVW). 2023:2444-53.

24. Du W, Tian S. Transformer and GAN-based super-resolution reconstruction network for medical images. Tsinghua Science and Technology 2024;29:197-206.

25. Jiang M, Zhi M, Wei L, Yang X, Zhang J, Li Y, Wang P, Huang J, Yang G. FA-GAN: Fused attentive generative adversarial networks for MRI image super-resolution. Comput Med Imaging Graph 2021;92:101969.

26. Ahmad W, Ali H, Shah Z, Azmat S. A new generative adversarial network for medical images super resolution. Sci Rep 2022;12:9533.

27. Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y. Generative adversarial networks. Communications of the ACM 2020;63:139-44.

28. Zhao K, Pang K, Hung ALY, Zheng H, Yan R, Sung K. MRI Super-Resolution With Partial Diffusion Models. IEEE Trans Med Imaging 2025;44:1194-205.

29. Shin M, Seo M, Lee K, Yoon K. Super-resolution techniques for biomedical applications and challenges. Biomed Eng Lett 2024;14:465-96.

30. Qian Z, Huang K, Wang QF, Zhang XY. A survey of robust adversarial training in pattern recognition: Fundamental, theory, and methodologies. Pattern Recognition 2022;131:108889.

31. Oktay O, Schlemper J, Folgoc LL, Lee M, Heinrich M, Misawa K, Mori K, McDonagh S, Hammerla NY, Kainz B, Glocker B, Rueckert D. Attention U-Net: Learning Where to Look for the Pancreas. Medical Imaging with Deep Learning. 2022:1-10.

32. Niu Z, Zhong G, Yu H. A review on the attention mechanism of deep learning. Neurocomputing 2021;452:48-62.

33. Arjovsky M, Chintala S, Bottou L. Wasserstein generative adversarial networks. 34th International Conference on Machine Learning 2017;70:214-23.

34. Gulrajani I, Ahmed F, Arjovsky M, Dumoulin V, Courville AC. Improved training of wasserstein GANs. Advances in Neural Information Processing Systems 2017;30:1-11.

35. Setio AAA, Traverso A, de Bel T, Berens MSN, Bogaard CVD, Cerello P, et al. Validation, comparison, and combination of algorithms for automatic detection of pulmonary nodules in computed tomography images: The LUNA16 challenge. Med Image Anal 2017;42:1-13.

36. Buda M, Saha A, Mazurowski MA. Association of genomic subtypes of lower-grade gliomas with shape features automatically extracted by a deep learning algorithm. Comput Biol Med 2019;109:218-25.

37. Palubinskas G. Image similarity/distance measures: what is really behind MSE and SSIM? International Journal of Image and Data Fusion 2017;8:32-53.

38. Shan S, Gao Y, Waddington D, Chen H, Whelan B, Liu P, Wang Y, Liu C, Gan H, Gao M, Liu F. Image Reconstruction With B0 Inhomogeneity Using a Deep Unrolled Network on an Open-Bore MRI-Linac. IEEE Transactions on Instrumentation and Measurement 2024;73:1-9.

39. Kurz C, Buizza G, Landry G, Kamp F, Rabe M, Paganelli C, Baroni G, Reiner M, Keall PJ, van den Berg CAT, Riboldi M. Medical physics challenges in clinical MR-guided radiotherapy. Radiat Oncol 2020;15:93.

40. Azam MA, Khan KB, Salahuddin S, Rehman E, Khan SA, Khan MA, Kadry S, Gandomi AH. A review on multimodal medical image fusion: Compendious analysis of medical modalities, multimodal databases, fusion techniques and quality metrics. Comput Biol Med 2022;144:105253.