U-Net benign prostatic hyperplasia-trained deep learning model for prostate ultrasound image segmentation in prostate cancer

Introduction

Worldwide, prostate cancer (PCa) is one of the most common neoplasms in men. PCa and skin cancer are the most common cancers, and PCa is the third leading cause of cancer mortality, after lung and colorectal cancers (1). Transrectal ultrasound (TRUS) and magnetic resonance imaging (MRI) are the main imaging modalities for diagnosing, treating, and following up patients with PCa; however, the information provided by these two techniques differs significantly. New strategies for identifying PCa have also been proposed (2,3). Because TRUS is economical and portable and provides real-time imaging, it is mainly used to visualize the shape and position of the prostate and for cognitive registration in targeted prostate biopsy, and it is combined with MRI to improve the accuracy of PCa detection (4). Prostate segmentation on TRUS images enables direct determination of prostate volume and facilitates the diagnosis of benign prostatic hyperplasia (BPH); moreover, TRUS-based prostate segmentation plays a key role at different stages of clinical decision-making (5).

In addition, prostate segmentation enables multimodal image fusion for tumour localization in procedures such as biopsy, minimally invasive ablation, and radiotherapy. Registration in targeted PCa biopsy relies on precise segmentation of prostate ultrasound images (6). Accurate segmentation is therefore essential for TRUS-based assessment of the prostate. However, TRUS images are characterized by speckle, shadow artifacts, and low contrast, which lead to missing or blurred prostate boundaries and an uneven intensity distribution; together with the large variability in prostate shape, these factors reduce segmentation accuracy. Furthermore, manual segmentation of the prostate is extremely time-consuming and tedious and is prone to interobserver variation. Artificial intelligence (AI)-based deep learning algorithms can help address these problems.

In recent years, AI-based techniques have developed rapidly and have been applied in several medical fields for the risk prediction, diagnosis, and treatment of diseases. Radiomics uses high-throughput methods to extract and analyze a large number of features from medical images in order to quantify regions of interest. Many researchers have used radiomics methods to study prostate segmentation (7-10). Unlike neural networks, radiomics methods are not black boxes and may therefore be safer in medical applications. Deep learning has been applied to the classification, segmentation, and localization of clinical images to facilitate the evaluation, diagnosis, and treatment of some prostatic diseases, with remarkable results. Lei et al. developed a deep learning-based multidirectional automatic prostate segmentation method that segments TRUS prostate images through reliable contour refinement to enable ultrasound-guided radiotherapy (11). Using complementary information encoded in different layers of a convolutional neural network (CNN), Wang et al. developed a three-dimensional (3D) deep neural network with an attention module to improve prostate segmentation on TRUS (12). Han et al. used weakly supervised and semi-supervised deep learning frameworks to segment PCa on TRUS images, potentially reducing the time radiologists spend drawing the boundaries of PCa lesions (13). CNNs are among the most advanced deep learning technologies and are of great importance for the automatic segmentation of the prostate on ultrasound images in the diagnosis and treatment planning of prostate diseases (14).

Deep learning-based prostate segmentation on ultrasound images has great potential but relies on the availability of large amounts of annotated data. A 27-year follow-up study found a 2- to 3-fold higher incidence of PCa in patients with BPH (15). Worldwide, BPH is more common than PCa, and considerably more clinical data are available from patients with BPH than from patients with PCa (16). However, to our knowledge, no study has examined whether a prostate segmentation model constructed from ultrasound images of patients with BPH can be used to segment the prostate on images from patients with PCa.

If feasible, this strategy could reduce the amount of tumour data needed to build high-precision segmentation models. In this study, we therefore evaluated whether a prostate segmentation model constructed from ultrasound images of patients with BPH could be used to segment the prostate on ultrasound images of patients with PCa. We hope that this strategy can reduce the data requirements for training new high-precision PCa segmentation networks, which would facilitate the integration of AI technology into clinical practice. We present this article in accordance with the CLEAR reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-2024-2476/rc).

Methods

Study design

The study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments (17). The study was approved by the Clinical Research Ethics Committee of Renmin Hospital of Wuhan University (No. WDRY2023-K122) and individual consent for this retrospective analysis was waived. The study involved no direct contact with patients and posed minimal risk to them. The results were used only for scientific research, not for commercial purposes, and the data were kept secure and under control throughout the study.

Data collection

Between March 2019 and March 2021, the study database included 370 ultrasound images from 260 patients with BPH, with one to three images per patient acquired at different levels of the prostate, and 68 ultrasound images from 62 patients with PCa. All diagnoses (BPH in 260 patients and PCa in 62 patients) were confirmed by evaluation of prostate biopsy specimens.

Data pre-processing

The BPH ultrasound images were divided into a training set and a test set in an approximately 8:2 ratio, and all PCa images were assigned to the test set. A urology ultrasound specialist with more than 5 years of clinical experience labelled the prostate on all ultrasound images using Labelme (version 4.5.11) (18). All ultrasound images (grayscale) and label images (binary) were resized to 512×512. To examine whether image depth affected training, we also resized all images to 528×336, which preserves the aspect ratio of the original images, and repeated the training. The ultrasound images and corresponding labels in the training set were processed with the same data-augmentation strategy, yielding 2,000 prostate ultrasound images and corresponding label images for model training.
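The augmentation operations are not specified in the text. As a minimal sketch, assuming horizontal flips, small translations, and brightness/contrast jitter (all hypothetical choices), the NumPy code below shows the key constraint of paired augmentation: geometric transforms are applied identically to an image and its label, while intensity changes touch the image only, so the label stays binary. The function names `shift_with_zeros`, `augment_pair`, and `expand_training_set` are illustrative, not the authors' code.

```python
import numpy as np


def shift_with_zeros(a, dy, dx):
    """Translate a 2D array by (dy, dx) pixels, filling uncovered pixels with 0."""
    h, w = a.shape
    out = np.zeros_like(a)
    if abs(dy) >= h or abs(dx) >= w:
        return out  # shifted completely out of the frame
    out[max(0, dy):min(h, h + dy), max(0, dx):min(w, w + dx)] = \
        a[max(0, -dy):min(h, h - dy), max(0, -dx):min(w, w - dx)]
    return out


def augment_pair(image, mask, rng, max_shift=16):
    """Randomly augment an 8-bit grayscale image and its binary mask together."""
    if rng.random() < 0.5:  # hypothetical: horizontal flip with probability 0.5
        image, mask = image[:, ::-1], mask[:, ::-1]
    dy, dx = (int(v) for v in rng.integers(-max_shift, max_shift + 1, size=2))
    image = shift_with_zeros(image, dy, dx)  # same translation for image...
    mask = shift_with_zeros(mask, dy, dx)    # ...and label
    gain, bias = rng.uniform(0.9, 1.1), rng.uniform(-10.0, 10.0)
    # Intensity jitter on the image only, clipped back to the 8-bit range.
    image = np.clip(image.astype(np.float32) * gain + bias, 0, 255).astype(np.uint8)
    return image, mask


def expand_training_set(images, masks, n_total, seed=0):
    """Draw randomly augmented (image, mask) pairs until n_total pairs exist."""
    rng = np.random.default_rng(seed)
    out_images, out_masks = [], []
    for _ in range(n_total):
        i = int(rng.integers(len(images)))
        img, msk = augment_pair(images[i], masks[i], rng)
        out_images.append(img)
        out_masks.append(msk)
    return np.stack(out_images), np.stack(out_masks)
```

When resizing label images, for example with OpenCV's `cv2.resize`, nearest-neighbour interpolation (`cv2.INTER_NEAREST`) keeps masks binary; note that `cv2.resize` takes the target size as `(width, height)`.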

Comparison of features between PCa and BPH ultrasound images

We used radiomics to extract features from the collected PCa and BPH ultrasound images. A total of 924 radiomics features were extracted from each ultrasound image. The features were divided into the following nine groups: (I) first-order statistics (n=18); (II) two-dimensional (2D) shape (n=14); (III) texture [n=22, derived from the gray-level co-occurrence matrix (GLCM)]; (IV) texture [n=16, derived from the gray-level run-length matrix (GLRLM)]; (V) texture [n=16, derived from the gray-level size zone matrix (GLSZM)]; (VI) texture [n=14, derived from the gray-level dependence matrix (GLDM)]; (VII) texture [n=5, derived from the neighborhood gray-tone difference matrix (NGTDM)]; (VIII) features of the wavelet-filtered images (n=728); and (IX) features of the Laplacian of Gaussian (LoG)-filtered image with sigma = 1 mm (n=91). Features were calculated automatically using the PyRadiomics package (version 3.0.1) in Python 3.7.
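The group sizes listed above are internally consistent. Reading groups (I) to (VII) as the 105 features computed on the original image, and assuming that the filtered-image groups exclude the 14 shape features (PyRadiomics computes shape features from the mask, so they are not repeated for each filter), the counts combine as:

$$
\underbrace{18+14+22+16+16+14+5}_{105\ \text{original-image features}}
\;+\;\underbrace{728}_{8\times 91\ \text{wavelet}}
\;+\;\underbrace{91}_{\text{LoG},\ \sigma=1\ \text{mm}}
\;=\;924,
\qquad 91 = 105 - 14 .
$$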

Each feature was named by joining the image type, feature group, and feature name with underscores. For example, wavelet-LLL_ngtdm_Strength denotes the Strength feature of the NGTDM group computed on the low-low-low (LLL) wavelet-filtered image. All features were normalized to remove differences in scale between them before further processing. The least absolute shrinkage and selection operator (LASSO) was used for dimensionality reduction, and the Pearson correlation coefficient was used to assess the degree of linear correlation between the selected features.
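The selection pipeline described above (normalize, select with LASSO, then compute Pearson correlations among the survivors) can be sketched in NumPy. This is a minimal illustration under stated assumptions: z-score normalization, a linear LASSO fitted by cyclic coordinate descent to a 0/1 class label (1 = PCa, 0 = BPH), and a fixed penalty `alpha`. The text does not report the loss, the penalty, or how it was tuned, and the data below are synthetic, not study data.

```python
import numpy as np


def zscore(X):
    """Standardize each feature column to zero mean and unit variance."""
    mu, sd = X.mean(axis=0), X.std(axis=0)
    sd = np.where(sd == 0, 1.0, sd)  # constant features stay at 0 instead of dividing by 0
    return (X - mu) / sd


def lasso_cd(X, y, alpha, n_iter=1000, tol=1e-8):
    """Cyclic coordinate descent for (1/2n)||y - Xb||^2 + alpha * ||b||_1.

    Assumes X is standardized and y is centered, so no intercept is fitted.
    """
    n, p = X.shape
    beta = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n
    resid = y.astype(float).copy()  # resid = y - X @ beta (beta starts at 0)
    for _ in range(n_iter):
        max_change = 0.0
        for j in range(p):
            if col_sq[j] == 0:
                continue
            rho = X[:, j] @ resid / n + col_sq[j] * beta[j]
            new = np.sign(rho) * max(abs(rho) - alpha, 0.0) / col_sq[j]  # soft threshold
            delta = new - beta[j]
            if delta != 0.0:
                resid -= delta * X[:, j]  # keep the residual in sync with beta
                beta[j] = new
                max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    return beta


# Synthetic example: 300 images x 20 features; the label depends on features 0 and 1 only.
rng = np.random.default_rng(0)
X = zscore(rng.normal(size=(300, 20)))
labels = (X[:, 0] - X[:, 1] + 0.1 * rng.normal(size=300) > 0).astype(float)
beta = lasso_cd(X, labels - labels.mean(), alpha=0.15)
selected = np.flatnonzero(beta)
corr = np.corrcoef(X[:, selected], rowvar=False)  # Pearson matrix for a heatmap
```

Features whose coefficients are shrunk exactly to zero are discarded; `corr` is the matrix that a correlation heatmap such as Figure 1 would display.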

Model construction

Using the BPH training set, we built deep learning models based on the U-Net, LinkNet, and PSPNet architectures (19-21). U-Net adopts a symmetric encoder-decoder structure. The encoder consists of 5 downsampling blocks, each comprising two 3×3 convolutions with rectified linear unit (ReLU) activation followed by 2×2 max pooling, which halves the spatial size of the feature maps, while the number of channels doubles at each level. The decoder includes 5 upsampling blocks, in which 2×2 transposed convolutions are used for upsampling, followed by two 3×3 convolutions with ReLU activation. Skip connections concatenate feature maps from the corresponding encoder layers with the decoder feature maps to preserve spatial detail. Finally, a 1×1 convolution with sigmoid activation generates the binary segmentation mask. LinkNet also uses an encoder-decoder structure, whereas PSPNet uses a pyramid pooling module that pools features at several scales, including global average pooling, and fuses the results. All models were trained on the training set with the following parameters: learning rate, 0.001; momentum, 0.9; epochs, 100; batch size, 32; optimizer, Adam. Binary cross-entropy was used as the loss function.
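The padding mode and channel counts are not stated. The pure-Python sketch below, assuming 'same'-padded 3×3 convolutions, 64 channels at the first level (as in the original U-Net), and floor division at each pooling step, traces feature-map shapes through the encoder-decoder described above. It illustrates a practical constraint for the two input sizes used here: each 2×2 pooling halves the spatial size, so skip connections align without cropping or padding only when both dimensions are divisible by 2 to the power of the number of pooling steps. 512 is divisible by 32, whereas 528 and 336 are divisible by 16 but not by 32. Reading "5 downsampling blocks" as five encoder levels with four pooling steps (the deepest level acting as the bottleneck) is one interpretation; the sketch does not indicate which configuration the authors used. `trace_unet` is a hypothetical helper.

```python
def trace_unet(height, width, n_pool=4, base_channels=64):
    """Hypothetical helper: trace feature-map shapes through a U-Net-style network.

    Assumptions: 3x3 convolutions use 'same' padding (size unchanged), each
    2x2 max pooling halves height and width (floor division), channels double
    at each deeper level, and each 2x2 stride-2 transposed convolution doubles
    the size before concatenation with the matching encoder feature map.
    Returns (encoder_shapes, bottleneck_shape, skips_align).
    """
    h, w, c = height, width, base_channels
    encoder = []
    for _ in range(n_pool):
        encoder.append((h, w, c))        # output of the two 3x3 convs at this level
        h, w, c = h // 2, w // 2, c * 2  # pool, then the next level doubles channels
    bottleneck = (h, w, c)
    skips_align = True
    for skip_h, skip_w, skip_c in reversed(encoder):
        up_h, up_w = h * 2, w * 2        # transposed convolution doubles the size
        if (up_h, up_w) != (skip_h, skip_w):
            skips_align = False          # concatenation would need cropping or padding
        h, w, c = skip_h, skip_w, skip_c  # decoder continues at the skip resolution
    return encoder, bottleneck, skips_align


for size, n_pool in [((512, 512), 4), ((528, 336), 4), ((528, 336), 5)]:
    _, bottleneck, ok = trace_unet(*size, n_pool=n_pool)
    print(size, n_pool, bottleneck, ok)
```

With four pooling steps both input sizes align; with five, the 528×336 input produces a 16×10 bottleneck whose upsampled output (32×20) no longer matches the 33×21 encoder map.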

Test-set evaluation

We used the trained U-Net prostate segmentation model to segment the BPH and PCa images in the test set. Because prostate boundaries on ultrasound images are unclear, the intensity distribution is uneven, and prostate shape varies widely, we compared four post-processing methods applied to the segmentation output: method one, no post-processing; method two, hole filling; method three, convex hull filling; and method four, retention of the largest connected component. The corresponding groups were labelled Predict 1, Predict 2, Predict 3, and Predict 4, respectively. We then computed the Dice coefficient between each post-processed prediction and the corresponding label image.
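The four post-processing methods are named but their implementation is not described. The NumPy sketch below, assuming 4-connectivity and a convex hull taken over foreground pixel centres (both our choices), implements methods two to four on a binary prediction; method one returns the prediction unchanged. The dictionary keys mirror the Predict 1 to Predict 4 labels; the function names are illustrative.

```python
from collections import deque

import numpy as np

NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # 4-connectivity (an assumption)


def _flood(allowed, seeds):
    """Boolean map of pixels reachable from `seeds` through pixels where `allowed` is True."""
    h, w = allowed.shape
    seen = np.zeros((h, w), dtype=bool)
    queue = deque()
    for r, c in seeds:
        if allowed[r, c] and not seen[r, c]:
            seen[r, c] = True
            queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        for dr, dc in NEIGHBORS:
            rr, cc = r + dr, c + dc
            if 0 <= rr < h and 0 <= cc < w and allowed[rr, cc] and not seen[rr, cc]:
                seen[rr, cc] = True
                queue.append((rr, cc))
    return seen


def fill_holes(mask):
    """Method two: background pixels not connected to the image border are holes."""
    mask = mask.astype(bool)
    h, w = mask.shape
    border = [(r, c) for r in range(h) for c in (0, w - 1)]
    border += [(r, c) for r in (0, h - 1) for c in range(w)]
    return ~_flood(~mask, border)


def convex_hull_fill(mask):
    """Method three: fill the convex hull of foreground pixel centres (monotone chain)."""
    mask = mask.astype(bool)
    pts = sorted((int(r), int(c)) for r, c in zip(*np.nonzero(mask)))
    if len(pts) < 3:
        return mask.copy()

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    hull = lower[:-1] + upper[:-1]  # counter-clockwise vertices
    if len(hull) < 3:  # all pixels collinear
        return mask.copy()
    rows, cols = np.indices(mask.shape)
    inside = np.ones(mask.shape, dtype=bool)
    for (r0, c0), (r1, c1) in zip(hull, hull[1:] + hull[:1]):
        # keep pixels on the left of (or on) every counter-clockwise hull edge
        inside &= (r1 - r0) * (cols - c0) - (c1 - c0) * (rows - r0) >= 0
    return inside | mask


def largest_component(mask):
    """Method four: keep only the largest 4-connected foreground region."""
    remaining = mask.astype(bool).copy()
    best = np.zeros_like(remaining)
    while remaining.any():
        r, c = np.argwhere(remaining)[0]
        comp = _flood(remaining, [(r, c)])
        remaining &= ~comp
        if comp.sum() > best.sum():
            best = comp
    return best


POSTPROCESS = {
    "Predict 1": lambda m: m.astype(bool),  # method one: no post-processing
    "Predict 2": fill_holes,
    "Predict 3": convex_hull_fill,
    "Predict 4": largest_component,
}

# Toy prediction: a 5x5 square with an interior hole plus one stray pixel.
pred = np.zeros((9, 9), dtype=np.uint8)
pred[2:7, 2:7] = 1
pred[4, 4] = 0
pred[0, 8] = 1
results = {name: fn(pred) for name, fn in POSTPROCESS.items()}
```

For full-size 512×512 masks, library routines such as `scipy.ndimage.binary_fill_holes`, `scipy.ndimage.label`, or OpenCV's `cv2.connectedComponentsWithStats` and `cv2.convexHull` would replace these Python loops.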

Data processing

The deep learning models were built with TensorFlow and Keras; Keras is a high-level deep learning API that runs on a TensorFlow backend. We also used the Open Source Computer Vision Library (OpenCV), pandas, NumPy, Matplotlib, and other open-source Python packages. The Dice coefficient and Intersection over Union (IoU) were used as evaluation metrics to quantify the agreement between the predicted and annotated images, as defined in Eq. [1] and Eq. [2]:

$$\mathrm{Dice} = \frac{2\,TP}{2\,TP + FP + FN} \quad [1]$$

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN} \quad [2]$$

where TP is the true-positive area, TN the true-negative area, FP the false-positive area, and FN the false-negative area; TN does not enter either metric. Higher Dice and IoU values indicate greater agreement between the prediction and the annotation.
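The Dice coefficient, 2TP/(2TP + FP + FN), and IoU, TP/(TP + FP + FN), can be computed directly from a pair of binary masks. A minimal NumPy sketch follows; returning 1.0 when both masks are empty is our convention, not one stated in the text.

```python
import numpy as np


def confusion_areas(pred, label):
    """Pixel counts of TP, FP, FN, and TN between two binary masks."""
    pred, label = pred.astype(bool), label.astype(bool)
    tp = np.sum(pred & label)
    fp = np.sum(pred & ~label)
    fn = np.sum(~pred & label)
    tn = np.sum(~pred & ~label)
    return tp, fp, fn, tn


def dice(pred, label):
    tp, fp, fn, _ = confusion_areas(pred, label)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom  # both masks empty: perfect agreement


def iou(pred, label):
    tp, fp, fn, _ = confusion_areas(pred, label)
    denom = tp + fp + fn
    return 1.0 if denom == 0 else tp / denom


# Two 4x4 masks that each cover 8 pixels and overlap in one row (4 pixels).
label = np.zeros((4, 4), dtype=np.uint8)
label[0:2, :] = 1
pred = np.zeros((4, 4), dtype=np.uint8)
pred[1:3, :] = 1
print(dice(pred, label), iou(pred, label))  # 0.5 and 1/3
```

For a single image the two metrics are linked by IoU = Dice / (2 - Dice), so per image IoU never exceeds Dice.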

Statistical analysis

Independent two-sample t-tests were used to determine whether the Dice coefficients differed significantly between the BPH and PCa groups. Before the t-tests, the Shapiro-Wilk test was used to check whether the data were normally distributed. A two-tailed P value <0.05 was considered statistically significant.
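A minimal sketch of this procedure with SciPy, using `scipy.stats.shapiro` for the normality check and `scipy.stats.ttest_ind` with `equal_var=True` (Student's pooled-variance test). Running the normality check separately on each group is our assumption, and the Dice arrays below are synthetic placeholders, not the study data. The hand-written pooled t statistic is included only to make the formula explicit.

```python
import numpy as np
from scipy import stats


def pooled_t_statistic(a, b):
    """Student's two-sample t statistic with pooled variance."""
    n1, n2 = len(a), len(b)
    sp2 = ((n1 - 1) * np.var(a, ddof=1) + (n2 - 1) * np.var(b, ddof=1)) / (n1 + n2 - 2)
    return (np.mean(a) - np.mean(b)) / np.sqrt(sp2 * (1 / n1 + 1 / n2))


def compare_dice(dice_bph, dice_pca, alpha=0.05):
    """Shapiro-Wilk check per group, then a two-sided Student's t-test."""
    shapiro_p = [float(stats.shapiro(g)[1]) for g in (dice_bph, dice_pca)]
    t_stat, p_value = stats.ttest_ind(dice_bph, dice_pca, equal_var=True)
    return {
        "shapiro_p": shapiro_p,
        "normal": all(p > alpha for p in shapiro_p),
        "t": float(t_stat),
        "p": float(p_value),
        "significant": bool(p_value < alpha),
    }


# Synthetic Dice values for illustration only (group sizes match the test set).
rng = np.random.default_rng(1)
dice_bph = rng.normal(0.82, 0.06, size=70)
dice_pca = rng.normal(0.83, 0.06, size=68)
result = compare_dice(dice_bph, dice_pca)
```

If the group variances clearly differ, Welch's test (`equal_var=False`) is the usual alternative; a non-significant result means no difference was detected, not that the groups are equivalent.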

Results

Patient characteristics

Table 1 presents an overview of the study population of 322 patients (BPH, n=260; PCa, n=62).

Table 1

| Characteristics | Training set: BPH | Test set: BPH | Test set: PCa |
|---|---|---|---|
| No. of images | 300 | 70 | 68 |
| No. of patients | 192 | 68 | 62 |
| Age (years) | 65.64±6.34 | 67.24±6.48 | 73.31±7.69 |
| PSA (ng/mL) | 22.53±5.68 | 28.45±7.34 | 178±32.14 |
| fPSA (ng/mL) | 5.67±1.32 | 7.85±1.69 | 25.74±3.44 |
| f/t | 0.6±0.1 | 0.7±0.2 | 0.5±0.1 |
| Gland size (mL) | 68.49±12.36 | 79.63±26.64 | 74.01±21.49 |
| Gleason score | – | – | 8.1±1.4 |
| Gleason grade | – | – | 4.3±0.6 |

Data are presented as mean ± standard deviation. BPH, benign prostatic hyperplasia; f/t, fPSA/tPSA; fPSA, free PSA; PCa, prostate cancer; PSA, prostate-specific antigen; tPSA, total PSA.

Comparison of features between PCa and BPH ultrasound images

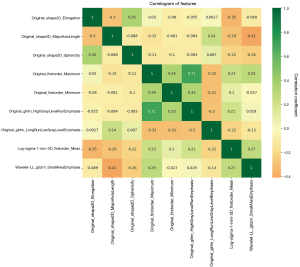

As shown in Figure 1, LASSO selected nine features: (I) original_shape2D_Elongation; (II) original_shape2D_MajorAxisLength; (III) original_shape2D_Sphericity; (IV) original_firstorder_Maximum; (V) original_firstorder_Minimum; (VI) original_glrlm_HighGrayLevelRunEmphasis; (VII) original_glrlm_LongRunLowGrayLevelEmphasis; (VIII) log-sigma-1-mm-3D_firstorder_Mean; and (IX) wavelet-LL_glszm_SmallAreaEmphasis. The pairwise correlations among these nine features are shown as a heatmap. These results suggest that PCa and BPH ultrasound images differ and that the selected features could serve as potential markers for distinguishing them. For example, original_shape2D_Elongation describes the relationship between the two largest principal components of the region-of-interest shape, suggesting that the elongation of the prostate outline may differ between BPH and PCa ultrasound images. The feature original_shape2D_Sphericity is the ratio of the perimeter of a circle with the same area as the region of interest to the perimeter of the region itself, and therefore measures how closely the shape approaches a circle; this may indicate that the prostate appears rounder on PCa ultrasound images than on BPH images.
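For reference, the PyRadiomics documentation defines the three selected 2D shape features as follows, where $\lambda_{\text{major}} \ge \lambda_{\text{minor}}$ are the eigenvalues of the covariance matrix of the region-of-interest pixel coordinates, $A$ is the region's area, $P$ its perimeter, and $R$ the radius of a circle with area $A$:

$$
\text{MajorAxisLength} = 4\sqrt{\lambda_{\text{major}}}, \qquad
\text{Elongation} = \sqrt{\frac{\lambda_{\text{minor}}}{\lambda_{\text{major}}}}, \qquad
\text{Sphericity} = \frac{2\pi R}{P} = \frac{2\sqrt{\pi A}}{P}.
$$

Both Elongation and Sphericity range from 0 to 1 and equal 1 for a circle; by the isoperimetric inequality, $P \ge 2\sqrt{\pi A}$ for any planar region, so Sphericity cannot exceed 1.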

Training results

The training results of the models are shown in Figure 2. For validation, 20% of the images in the training set were randomly selected. As shown in Figure 2, as the models converged, the IoU scores of the training and validation sets rose to high values and the losses fell to low values. The U-Net model achieved the highest IoU score (0.9602). As shown in Figure 3, when the images were resized to 528×336 to match the aspect ratio of the original images, training performance did not decrease significantly, suggesting that image depth had little impact on training. Given the superior segmentation performance of U-Net, we retained the U-Net model with the lowest validation loss and used it for prediction. Table 2 lists, for each group, the average Dice coefficient, which measures the overlap between the predicted and annotated regions. The highest average Dice coefficients were obtained with method three in the BPH group (84.2%) and with method two in the PCa group (83.8%).
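The two steps described above (randomly holding out 20% of the training images, then keeping the model with the lowest validation loss) can be sketched in NumPy. The function names are hypothetical, and splitting the 300 original training images before augmentation, so that augmented copies of one image cannot appear in both sets, is our assumption. Keras' `model.fit(..., validation_split=0.2)` takes the last 20% of the samples rather than a random subset, so a random hold-out has to be made explicitly; `tf.keras.callbacks.ModelCheckpoint` with `monitor="val_loss"` and `save_best_only=True` keeps the weights with the lowest validation loss.

```python
import numpy as np


def random_validation_split(n_images, val_fraction=0.2, seed=0):
    """Randomly hold out val_fraction of image indices for validation."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_images)
    n_val = int(round(val_fraction * n_images))
    return np.sort(order[n_val:]), np.sort(order[:n_val])  # (train, validation)


def best_epoch(val_losses):
    """Index of the epoch with the lowest validation loss (the checkpoint kept)."""
    return int(np.argmin(val_losses))


train_idx, val_idx = random_validation_split(300)
```

Because each patient contributed one to three images, grouping the split by patient identifier would additionally keep images of the same prostate out of both sets.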

Table 2

| Metric | Predict 1: BPH | Predict 1: PCa | Predict 2: BPH | Predict 2: PCa | Predict 3: BPH | Predict 3: PCa | Predict 4: BPH | Predict 4: PCa |
|---|---|---|---|---|---|---|---|---|
| Dice average (%) | 81.4 | 83.2 | 81.8 | 83.8 | 84.2 | 82.9 | 81.2 | 83.4 |
| Dice max (%) | 97.3 | 97.1 | 97.2 | 97.1 | 95.7 | 95.2 | 97.3 | 97.1 |
| Dice min (%) | 69.5 | 70.4 | 71.6 | 72.3 | 70.9 | 71.7 | 71.8 | 72.7 |
| IoU average | 0.8063 | 0.8146 | 0.8094 | 0.8173 | 0.8195 | 0.8116 | 0.8032 | 0.8154 |

BPH, benign prostatic hyperplasia; IoU, Intersection over Union; PCa, prostate cancer.

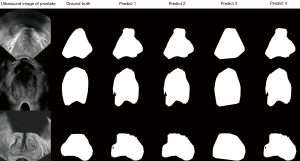

Example and analysis of prediction results

Figure 4 shows the predictions and the ground truth. The ground truth consisted of the prostate boundaries delineated on all ultrasound images by a urology ultrasound expert with more than 5 years of clinical experience and served as the reference standard for segmentation in this study. Predict 1, Predict 2, Predict 3, and Predict 4 show the segmentation results after each of the four post-processing methods.

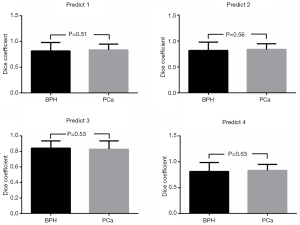

Student’s t-test results

The Shapiro-Wilk test showed that the Dice coefficients did not deviate significantly from a normal distribution (P>0.05). We then used Student’s t-test to compare the Dice coefficients of the BPH and PCa groups within each of the Predict 1, Predict 2, Predict 3, and Predict 4 groups; a two-tailed P value <0.05 was considered statistically significant. The P values were 0.51 (Predict 1), 0.56 (Predict 2), 0.53 (Predict 3), and 0.53 (Predict 4), all greater than 0.05 (Figure 5). Therefore, no significant difference in Dice coefficients was detected between the BPH and PCa groups.

Discussion

In this study, we used U-Net, LinkNet, and PSPNet segmentation models to segment ultrasound images of patients with BPH and successfully applied the trained U-Net model to ultrasound images of patients with PCa. The final models performed well, which may partly reduce the amount of tumour data required to build high-precision segmentation models, and show promise for clinical application. Ultrasound imaging has become indispensable in clinical diagnosis and treatment. Automatic segmentation of the prostate on TRUS images is of great importance for image-guided intervention and treatment planning in PCa, in which accurate delineation of the prostate is a critical step. AI-based deep learning has strong learning ability and has achieved remarkable results in medical image classification and segmentation (22). U-Net is a deep learning model that has received considerable attention in medical image segmentation; its U-shaped structure and skip connections make it particularly well suited to this task (23).

Many researchers have contributed to prostate image segmentation. Lei et al. developed a new V-Net segmentation model with an average prostate volume Dice similarity coefficient (DSC) of 0.92, which could become a useful tool for the diagnosis and treatment of PCa (24). Orlando et al. proposed a 3D segmentation method based on a modified U-Net that makes predictions on 2D slices sampled radially around the central axis of the prostate and then reconstructs these slices into a 3D surface (25). The DSC of this model reached 0.94, providing a generalizable intraoperative solution for PCa surgery. Ma et al. proposed a segmentation framework based on a context-classification-based random walk algorithm that combined patient characteristics and clinical information to improve segmentation performance, achieving a Dice coefficient of 0.91 (26). Bi et al. proposed an active shape model (ASM) with Rayleigh mixture model clustering (ASM-RMMC) that achieved a DSC of 0.95 (27).

To our knowledge, the present study is the first to explore whether a segmentation model trained on images from patients with BPH can be applied to PCa image segmentation. We adopted a data-augmentation strategy to prevent overfitting, and all three neural network models achieved high IoU scores and low losses. The IoU score of the U-Net model reached 0.9602 during training, indicating high segmentation performance on prostate images. Training at different ultrasound image resolutions suggested that resolution has little effect on network training. Radiomics analysis showed that BPH and PCa ultrasound images have different features, so the applicability of a BPH-trained model to PCa images could not be taken for granted. We applied four post-segmentation processing methods (methods one to four) and used Dice coefficients to evaluate segmentation performance. The highest Dice coefficients for BPH and PCa images (84.2% and 83.8%) were obtained with convex hull processing and hole filling, respectively. Although these methods may not be suitable for segmentation in a clinical setting, they can be regarded as heuristic strategies. Independent two-sample t-tests then showed that, for all four methods, the Dice coefficients of the BPH and PCa groups did not differ significantly (all P>0.05). These results indicate that the U-Net model we constructed can be applied to prostate segmentation in patients with PCa.

Although BPH and PCa images differ in their radiomics features, the segmentation performance (Dice coefficient) of the U-Net model did not differ significantly between the two (P>0.05). This suggests that a segmentation network trained on BPH data can generalize to PCa images and indirectly supports the model’s robustness to these feature differences.

In this study, we mainly used U-Net as a classic representative model to demonstrate this transferability; in principle, the same approach could be applied to other segmentation networks. The main advantages of U-Net are its U-shaped structure and skip connections: the U-shaped structure performs downsampling followed by upsampling, and skip connections link encoder and decoder layers at the same stage, which together allow U-Net to perform well in medical image segmentation. For example, Falk et al. used U-Net for quantification tasks in biomedical imaging data and applied it to single-cell segmentation (28).

This study has some limitations. First, we did not perform a subgroup analysis of PCa to compare segmentation performance across different stages of PCa. Second, we did not conduct multi-centre experiments or evaluate the model on an independent external test set. Third, the dataset was relatively small. These limitations may reduce the accuracy and generalizability of the trained segmentation model.

Conclusions

In this study, we showed that a prostate segmentation network trained on ultrasound images from patients with BPH could be applied to the segmentation of PCa ultrasound images. The trained model performed well, potentially reducing the amount of tumour data needed to build high-precision segmentation models. Further research is needed to address the limitations of the model and to improve its performance.

Acknowledgments

We acknowledge the support of our strategic partner, PaddlePaddle Platform.

Footnote

Reporting Checklist: The authors have completed the CLEAR reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-2024-2476/rc

Funding: This study was supported by

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-2024-2476/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments. The study was approved by the Clinical Research Ethics Committee of Renmin Hospital of Wuhan University (No. WDRY2023-K122) and individual consent for this retrospective analysis was waived.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. Schatten H. Brief Overview of Prostate Cancer Statistics, Grading, Diagnosis and Treatment Strategies. Adv Exp Med Biol 2018;1095:1-14. [Crossref] [PubMed]

2. Terracciano D, La Civita E, Athanasiou A, Liotti A, Fiorenza M, Cennamo M, Crocetto F, Tennstedt P, Schiess R, Haese A, Ferro M, Steuber T. New strategy for the identification of prostate cancer: The combination of Proclarix and the prostate health index. Prostate 2022;82:1469-76. [Crossref] [PubMed]

3. Gentile F, La Civita E, Della Ventura B, Ferro M, Cennamo M, Bruzzese D, Crocetto F, Velotta R, Terracciano D. A Combinatorial Neural Network Analysis Reveals a Synergistic Behaviour of Multiparametric Magnetic Resonance and Prostate Health Index in the Identification of Clinically Significant Prostate Cancer. Clin Genitourin Cancer 2022;20:e406-10. [Crossref] [PubMed]

4. Brock M, von Bodman C, Palisaar J, Becker W, Martin-Seidel P, Noldus J. Detecting Prostate Cancer. Dtsch Arztebl Int 2015;112:605-11. [PubMed]

5. Wu P, Liu Y, Li Y, Liu B. Robust Prostate Segmentation Using Intrinsic Properties of TRUS Images. IEEE Trans Med Imaging 2015;34:1321-35. [Crossref] [PubMed]

6. Anas EMA, Mousavi P, Abolmaesumi P. A deep learning approach for real time prostate segmentation in freehand ultrasound guided biopsy. Med Image Anal 2018;48:107-16. [Crossref] [PubMed]

7. Sunoqrot MRS, Selnæs KM, Sandsmark E, Nketiah GA, Zavala-Romero O, Stoyanova R, Bathen TF, Elschot M. A Quality Control System for Automated Prostate Segmentation on T2-Weighted MRI. Diagnostics (Basel) 2020.

8. Yang F, Ford JC, Dogan N, Padgett KR, Breto AL, Abramowitz MC, Dal Pra A, Pollack A, Stoyanova R. Magnetic resonance imaging (MRI)-based radiomics for prostate cancer radiotherapy. Transl Androl Urol 2018;7:445-58. [Crossref] [PubMed]

9. Zhang H, Li X, Zhang Y, Huang C, Wang Y, Yang P, Duan S, Mao N, Xie H. Diagnostic nomogram based on intralesional and perilesional radiomics features and clinical factors of clinically significant prostate cancer. J Magn Reson Imaging 2021;53:1550-8. [Crossref] [PubMed]

10. Liu M, Shao X, Jiang L, Wu K. 3D EAGAN: 3D edge-aware attention generative adversarial network for prostate segmentation in transrectal ultrasound images. Quant Imaging Med Surg 2024;14:4067-85. [Crossref] [PubMed]

11. Lei Y, Tian S, He X, Wang T, Wang B, Patel P, Jani AB, Mao H, Curran WJ, Liu T, Yang X. Ultrasound prostate segmentation based on multidirectional deeply supervised V-Net. Med Phys 2019;46:3194-206. [Crossref] [PubMed]

12. Wang Y, Dou H, Hu X, Zhu L, Yang X, Xu M, Qin J, Heng PA, Wang T, Ni D. Deep Attentive Features for Prostate Segmentation in 3D Transrectal Ultrasound. IEEE Trans Med Imaging 2019;38:2768-78. [Crossref] [PubMed]

13. Han S, Hwang SI, Lee HJ. A Weak and Semi-supervised Segmentation Method for Prostate Cancer in TRUS Images. J Digit Imaging 2020;33:838-45. [Crossref] [PubMed]

14. Meiburger KM, Acharya UR, Molinari F. Automated localization and segmentation techniques for B-mode ultrasound images: A review. Comput Biol Med 2018;92:210-35. [Crossref] [PubMed]

15. Ørsted DD, Bojesen SE, Nielsen SF, Nordestgaard BG. Association of clinical benign prostate hyperplasia with prostate cancer incidence and mortality revisited: a nationwide cohort study of 3,009,258 men. Eur Urol 2011;60:691-8. [Crossref] [PubMed]

16. Ørsted DD, Bojesen SE. The link between benign prostatic hyperplasia and prostate cancer. Nat Rev Urol 2013;10:49-54. [Crossref] [PubMed]

17. World Medical Association. World Medical Association Declaration of Helsinki. Ethical principles for medical research involving human subjects. Bull World Health Organ 2001;79:373-4. [PubMed]

18. Russell BC, Torralba A, Murphy KP, Freeman WT. LabelMe: A Database and Web-Based Tool for Image Annotation. Int J Comput Vis 2008;77:157-73. [Crossref]

19. Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In: Navab N, Hornegger J, Wells W, Frangi A. editors. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. Lecture Notes in Computer Science, vol 9351. Springer, Cham; 2015:234-41.

20. Chaurasia A, Culurciello E, editors. LinkNet: Exploiting encoder representations for efficient semantic segmentation. 2017 IEEE Visual Communications and Image Processing (VCIP); 10-13 December 2017; St. Petersburg, FL, USA. IEEE; 2017.

21. Zhao H, Shi J, Qi X, Wang X, Jia J. Pyramid Scene Parsing Network. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE Computer Society 2016:6230-9.

22. Hamet P, Tremblay J. Artificial intelligence in medicine. Metabolism 2017;69S:S36-40. [Crossref] [PubMed]

23. Shaziya H, Shyamala K, Zaheer R, editors. Automatic Lung Segmentation on Thoracic CT Scans Using U-Net Convolutional Network. 2018 International Conference on Communication and Signal Processing (ICCSP); 03-05 April 2018; Chennai, India. IEEE; 2018.

24. Lei Y, Wang T, Roper J, Jani AB, Patel SA, Curran WJ, Patel P, Liu T, Yang X. Male pelvic multi-organ segmentation on transrectal ultrasound using anchor-free mask CNN. Med Phys 2021;48:3055-64. [Crossref] [PubMed]

25. Orlando N, Gillies DJ, Gyacskov I, Romagnoli C, D'Souza D, Fenster A. Automatic prostate segmentation using deep learning on clinically diverse 3D transrectal ultrasound images. Med Phys 2020;47:2413-26. [Crossref] [PubMed]

26. Ma L, Guo R, Tian Z, Fei B. A random walk-based segmentation framework for 3D ultrasound images of the prostate. Med Phys 2017;44:5128-42. [Crossref] [PubMed]

27. Bi H, Jiang Y, Tang H, Yang G, Shu H, Dillenseger JL. Fast and accurate segmentation method of active shape model with Rayleigh mixture model clustering for prostate ultrasound images. Comput Methods Programs Biomed 2020;184:105097. [Crossref] [PubMed]

28. Falk T, Mai D, Bensch R, Çiçek Ö, Abdulkadir A, Marrakchi Y, et al. U-Net: deep learning for cell counting, detection, and morphometry. Nat Methods 2019;16:67-70. [Crossref] [PubMed]