Diabetic retinal vessel segmentation algorithm based on MA-DUNet

Introduction

Diabetic retinopathy (DR) is an important cause of visual impairment and blindness worldwide (1,2). According to the World Health Organization, as of 2019, there were 370 million people living with diabetes worldwide, approximately 30–40% of whom were at risk of developing DR (3). Therefore, the early detection and appropriate treatment of DR are crucial to effectively prevent the condition from worsening, prevent vision loss (more than 90% of vision loss caused by diabetes can be prevented by early detection and appropriate treatment), and minimize the risk of other complications (4).

For patients with diabetes, the degree of microvascular lesions on the retina often reflects the severity of diabetes. DR typically progresses through six stages (5), starting with mild microaneurysms and small hemorrhages, and gradually evolving into more complex and severe proliferative stages. In stage I (the non-proliferative stage), only minor lesions, such as microaneurysms and small hemorrhages, may appear on the retina. Although the microvasculature is already damaged, no neovascularization has yet formed. As the disease progresses into stages II and III, the retina gradually develops yellow-white hard exudates, cotton-wool spots, and more microaneurysms, accompanied by retinal edema and increased vascular permeability. These changes exacerbate the severity of the lesion. By stage IV (the pre-proliferative/early proliferative stage), the retina begins to form neovascularizations, which have fragile walls prone to rupture and bleeding. Finally, in stages V and VI (the proliferative/advanced proliferative stages), neovascularizations increase and form fibrovascular proliferative membranes, potentially leading to retinal detachment and severely impacting vision. By carefully observing and thoroughly analyzing the retinal vasculature, doctors can not only effectively capture lesion information caused by diabetes but also identify the characteristics of lesions at different stages, providing a basis for developing personalized treatment plans (6).

Optical coherence tomography angiography (OCTA) (7) can provide high-resolution images of retinal structures, clearly showing structural and morphological changes in the blood vessels. With these structural vascular details, doctors can observe microscopic lesions in the retinal blood vessels and assess the severity of the lesions, such as the degree of vascular stenosis and the size of aneurysms, thereby providing crucial evidence for the early diagnosis of DR. Garg et al. (8) built a semi-automatic algorithm based on OCTA to precisely measure non-perfusion areas on widefield swept-source OCTA. This method significantly improves and simplifies the calculation of the signal void area ratio, and it is faster and more accurate than manual characterization of non-perfused regions and subsequent estimation of the signal void area ratio. The future application of this broad field of view (FOV) combination has tremendous prognostic and diagnostic clinical implications for DR and other types of ischemic retinopathy. Researchers can use computer-aided retinal vessel segmentation technology to further enhance the accuracy and efficiency of diagnosis. This technology not only significantly improves analysis efficiency but also effectively reduces the risk of errors caused by subjective factors, providing strong support for the early detection and treatment of DR.

Currently, numerous computer-aided algorithms have been developed for the automatic segmentation of retinal blood vessels. Xu et al. (9) proposed a novel deep neural network called the Shared decoder and Pyramid-like loss Network (SPNet) that is based on a shared decoder and a pyramid-like loss function. By incorporating decoder modules with a sharing mechanism and a series of weight-shared components to decode feature maps at different scales, the SPNet captures multi-scale semantic information. To better represent capillaries and vessel edges, the SPNet employs a residual pyramid structure in the decoding stage to decompose spatial information, and designs a pyramid-like loss function to gradually correct potential segmentation errors. Wang et al. (10) proposed a supervised image segmentation method that integrates a convolutional neural network (CNN) and random forest (RF). The CNN is used as a feature extractor to mine key features from training data, while RF serves as a classifier to precisely segment images using these features. Nergiz et al. (11) developed a fully automatic system for retinal image vessel segmentation that efficiently completes the vessel segmentation task through a series of processing steps; the Frangi filter is first applied to enhance vessel features, structure tensors are then used to obtain four-dimensional tensor information, and finally, the Otsu algorithm and tensor coloring technique are employed to achieve accurate vessel segmentation. Adeyinka et al. (12) designed a deep-learning system comprising two major modules: an encoder and a decoder. In the encoder, convolutional layers are responsible for feature extraction, and pooling layers are used to reduce the resolution of feature maps. The decoder unit processes these features to restore resolution and fuse features, ultimately generating high-resolution vessel feature maps. This structure enables the system to effectively extract and process image features. 
The U-shaped Network (U-Net) proposed by Ronneberger et al. (13) is the most widely used network in medical image analysis. Its unique encoder-decoder structure and effective information flow facilitated by skip connections allow the U-Net to perform well even with relatively limited data.

Researchers have proposed many improvements to the U-Net. For example, Wu et al. (14) designed an adaptive module that captures multi-scale features by adjusting the receptive field, and fuses these features to extract richer semantic information. Sun et al. (15) proposed the Series Deformable convolution and Attention mechanism based on the U-Net that incorporates deformable convolutional structures and attention mechanisms. Jin et al. (16) integrated deformable convolution blocks into the U-Net for retinal vessel segmentation, capturing different vessel morphologies by adjusting the receptive field, and proposed the Deformable U-shaped Network (DUNet), which outperformed the traditional U-Net and networks that only use deformable convolution. However, due to issues such as noise interference, insufficient contrast, and non-uniform changes in tiny blood vessels in retinal images, the accurate segmentation of retinal blood vessels remains a significant challenge in current research (17). Therefore, continuous exploration and innovation are necessary to find more effective and precise segmentation methods.

To address these issues, this study proposed optimizations based on the DUNet to further enhance retinal blood vessel segmentation performance. Specifically, the atrous multi-scale (AMS) convolution was incorporated in the encoding stage to enhance the perception of information at different scales, thereby improving the extraction and representation of image features. This improvement enables the network to better capture multi-scale features of retinal blood vessels, enhancing segmentation accuracy. Second, a gated channel transformation (GCT) attention mechanism was introduced between the encoder and decoder to improve feature transmission and extract key information. This mechanism dynamically adjusts the relationships between feature channels, emphasizing important task-related features while suppressing irrelevant ones, thus improving the effectiveness and efficiency of feature utilization. Finally, in the decoding process, a Multi-Modal Attention Fusion Block (MAFB) that combines a Multi-Modal Fusion Block (MFB) and GCT attention mechanism was used to optimize information usage and improve segmentation performance. MAFB not only fuses feature information from different levels, but also further optimizes and selects the fused features through the GCT attention mechanism, making the decoding process more precise and efficient. We present this article in accordance with the CLEAR reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-24-2267/rc).

Methods

Datasets

The study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments. This study used the following three public datasets of color retinal images that are widely used in blood vessel segmentation: digital retinal images for vessel extraction (DRIVE); structured analysis of the retina (STARE); and Child Heart and Health Study in England Database 1 (CHASE-DB1). The DRIVE dataset (18) contains 40 images (33 normal and healthy fundus images, and seven DR images) that have a resolution of 565×584 pixels, and a FOV angle of 45°. The images were divided into a training set and test set at a ratio of 1:1. The STARE dataset (19) contains 20 images (10 normal and healthy images, and 10 DR images) that have a resolution of 700×605 pixels, and a FOV angle of 35°. The images were divided into a training set and test set at a ratio of 3:1. The CHASE-DB1 dataset (20) contains 28 images that have a resolution of 999×960 pixels, and a FOV angle of 45°. The CHASE-DB1 dataset includes 14 normal and healthy retinal images as well as 14 DR images. The images were divided into a training set and test set at a ratio of 5:2. Through collaboration with the Guangxi Zhuang Autonomous Region Occupational Disease Prevention and Treatment Hospital, we collected fundus image data from personnel working in continuous radioactive environments. The images have a resolution of 953×650 pixels and a FOV angle of 45°. This dataset, which contains 46 images, was used to validate the performance of the model in segmenting blood vessels in real-world scenarios. The details of each dataset are provided in Table 1.

Table 1

| Dataset | Resolution (pixels) | FOV angle (°) | Images | Training:test ratio |
|---|---|---|---|---|
| DRIVE | 565×584 | 45 | 40 | 1:1 |
| STARE | 700×605 | 35 | 20 | 3:1 |
| CHASE-DB1 | 999×960 | 45 | 28 | 5:2 |
| Hospital data | 953×650 | 45 | 46 | 3:1 |

CHASE-DB1, Child Heart and Health Study in England Database 1; DRIVE, Digital Retinal Images for Vessel Extraction; STARE, Structured Analysis of the Retina.

Dataset preprocessing

In this study, the image data were enhanced using grayscale conversion, normalization, contrast limited adaptive histogram equalization (CLAHE) (21), and gamma correction (22). Single-channel images show better background contrast, as more background details are retained, and the background is clearer and more natural. Conversely, red, green, and blue (RGB) images may lose background detail and contrast. Therefore, the RGB images were converted into single-channel images to optimize the background performance. Examples of preprocessed intermediate images are shown in Figure 1. These steps improved the image quality and laid a solid foundation for the subsequent image analysis and retinal blood vessel identification.
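The preprocessing chain above can be sketched in a few lines. The following is a minimal illustration only, not the study's implementation: the luma weights (0.299, 0.587, 0.114), the gamma value of 1.2, and all function names are assumptions, and the CLAHE step is only marked by a comment because it normally comes from an image-processing library.

```python
def to_gray(rgb_image):
    """Convert an H x W image of (R, G, B) tuples to one channel.

    The 0.299/0.587/0.114 luma weights are an assumption; the paper
    does not state which conversion was used.
    """
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_image]


def min_max_normalize(image):
    """Rescale pixel values to the range [0, 1]."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero on a constant image
    return [[(v - lo) / span for v in row] for row in image]


def gamma_correct(image, gamma=1.2):
    """Apply gamma correction to a [0, 1] image (gamma is illustrative)."""
    return [[v ** (1.0 / gamma) for v in row] for row in image]


def preprocess(rgb_image, gamma=1.2):
    gray = to_gray(rgb_image)
    norm = min_max_normalize(gray)
    # CLAHE would be applied here in the order given in the text;
    # it is omitted from this stdlib-only sketch.
    return gamma_correct(norm, gamma)
```

With a gamma above 1, the exponent is below 1, so mid-range intensities are brightened while 0 and 1 stay fixed.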

MA-DUNet model framework

By combining the characteristics of the U-Net and deformable convolutional networks (23), the DUNet (16) adopts a U-shaped structure with bilateral encoders and decoders, using deformable convolutional blocks to replace some of the original convolutional layers. This approach learns local, dense, and adaptive receptive fields, thereby modeling retinal blood vessels of different shapes and sizes. In deformable convolutions, the grid sampling positions are no longer fixed but are adjusted based on offsets. These offsets are not arbitrarily set; rather, they are learned and specifically generated by additional convolutional layers in the network. As a result, deformable convolutions exhibit excellent adaptability, capable of handling variations in scale, shape, and orientation. Each deformable convolutional block integrates a convolutional offset layer, a convolutional layer, a batch normalization layer, and an activation layer, forming a complete processing unit. By incorporating such convolutional layers, the DUNet can more accurately capture features of various shapes and sizes, enabling the adaptive processing of different input features. The use of deformable convolutional layers allows the network to dynamically adjust its receptive field, thereby more effectively processing features of different shapes and sizes. This flexibility enables the DUNet to better adapt to complex input data, enhancing its representation capability for diverse scenarios. Meanwhile, to address the issue of internal covariate shift and improve training speed, the DUNet inserts batch normalization layers and rectified linear unit (ReLU) activation function layers after each convolutional unit. The introduction of batch normalization layers stabilizes the input distribution of each layer, facilitating network convergence and learning. Simultaneously, the ReLU activation function layer introduces non-linearity, enhancing the network’s expressive power and ability to capture features. 
This combination in the DUNet helps improve model performance and training efficiency.
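To make the offset-based sampling concrete, the following sketch implements a single-channel 3×3 deformable convolution in plain Python. It is an illustration under stated assumptions rather than the DUNet code: the offsets are passed in as an argument (in the network they are produced by an additional convolutional layer), zero padding is assumed at the borders, and the function names are hypothetical.

```python
import math


def bilinear_sample(image, y, x):
    """Sample a 2-D list at a fractional (y, x); outside reads as 0."""
    h, w = len(image), len(image[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yi in (y0, y0 + 1):
        for xi in (x0, x0 + 1):
            if 0 <= yi < h and 0 <= xi < w:
                weight = (1 - abs(y - yi)) * (1 - abs(x - xi))
                value += weight * image[yi][xi]
    return value


def deformable_conv3x3(image, kernel, offsets):
    """Single-channel 3x3 deformable convolution with zero padding.

    offsets[y][x] holds nine (dy, dx) pairs, one per kernel tap, that
    shift the regular sampling grid at output position (y, x).
    """
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for k in range(9):
                ky, kx = divmod(k, 3)
                dy, dx = offsets[y][x][k]
                acc += kernel[ky][kx] * bilinear_sample(
                    image, y + ky - 1 + dy, x + kx - 1 + dx)
            out[y][x] = acc
    return out
```

When every offset is zero, the sampling grid is the regular one and the result equals an ordinary 3×3 convolution; non-zero offsets move individual taps so that the receptive field can follow the shape of a vessel.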

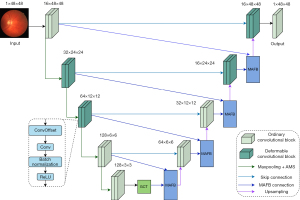

Based on the DUNet, this study further introduced multi-scale dilated convolutions, a GCT attention mechanism, and a multimodal attention fusion block to construct a network architecture named the Multi-modal Attention Deformable U-shaped Network (MA-DUNet). The overall structure of the MA-DUNet is shown in Figure 2. These improvements aimed to enhance the network’s perception of image information at different scales, strengthen feature transmission, and optimize the fusion of multimodal information, thereby improving the accuracy of image segmentation. In the encoding stage, the AMS convolution expands the network’s receptive field, enabling the network to capture information at different scales in the image, from microscopic details to macroscopic structures, and to extract it more precisely. This enhanced perception capability is crucial for improving the accuracy of segmentation results. To further strengthen feature transmission and the extraction of important information, the MA-DUNet incorporates a GCT attention mechanism between the bottom encoder and decoder. The GCT attention mechanism allows the model to dynamically adjust the relationships between feature channels, reinforcing important features closely related to the segmentation task while weakening the interference of irrelevant features. This enables the model to focus more on information beneficial to the segmentation task during the decoding process, thereby improving overall performance. The MAFB plays a key role in the decoding process. This module combines the advantages of the MFB and the GCT attention mechanism, efficiently fusing the output features of the encoding and decoding layers. Through the MAFB connection, the fused features become the new output features of the decoding layer, which are then upsampled and decoded together with the original output features of the encoding layer. This design enables the model to fully use information from both the encoding and decoding processes, achieving more precise segmentation.

AMS

The AMS convolution aggregates feature information by combining atrous convolution and multi-scale convolution (24), and its structure is shown in Figure 3. The AMS convolution consists of three parts. The first part is a dilated (atrous) depth-wise separable convolution that expands the receptive field and reduces information loss by increasing the dilation rates (1, 1, 3, 5). The second part is a multi-branch depth-wise strip convolution, whereby two depth-wise strip convolutions are used to replace the multi-branch depth-wise separable convolution. This simplifies the model while still capturing multi-scale features. The paired strip convolutions correspond to 5×5, 7×7, and 11×11 convolution kernels. The third part is a convolutional fusion step that uses a 1×1 convolution to integrate multi-scale information and enhance the model’s ability to perceive features of different scales. By integrating these three components, the AMS convolution performs well in complex scenarios, particularly in target segmentation tasks, where it can handle targets of different scales and complexities. We introduced the AMS convolutional structure into the down-sampling part of the network and combined it with max pooling operations. The specific formula for the output of the AMS convolution is shown in Eq. [1], which is expressed as follows:

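$$
\mathrm{Out} = \mathrm{Conv}_{1\times 1}\left(\sum_{i} \mathrm{Scale}_i\big(\mathrm{DDWConv}(F)\big)\right) \otimes F \tag{1}
$$

where $F$ and $\mathrm{Out}$ represent the input and output features, respectively; $\mathrm{Conv}_{1\times 1}$, $\mathrm{DDWConv}$, and $\mathrm{Scale}_i$ denote the regular convolution, the dilated depth-wise separable convolution, and the multi-branch depth-wise strip convolution, respectively; $\mathrm{Scale}_i$ represents the $i$-th branch; and the symbol $\otimes$ denotes the element-wise matrix multiplication operation.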
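The effect of the dilation rates and of replacing square kernels with strip pairs can be checked with simple arithmetic. The sketch below is illustrative only: the 3×3 base kernel for the dilated convolution and the 1×k plus k×1 form of each strip pair are assumptions not stated in the text, and the function names are hypothetical.

```python
def effective_kernel_size(kernel_size, dilation):
    """Spatial extent covered by a dilated convolution kernel."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def full_kernel_weights(k):
    """Weights per channel of a k x k depth-wise kernel."""
    return k * k


def strip_pair_weights(k):
    """Weights per channel of a 1 x k plus k x 1 strip pair (assumed form)."""
    return 2 * k


# Dilation rates from the text; the 3 x 3 base kernel is an assumption.
dilation_rates = (1, 1, 3, 5)
extents = [effective_kernel_size(3, d) for d in dilation_rates]

# Kernel sizes from the text: (full k x k weights, strip-pair weights).
savings = {k: (full_kernel_weights(k), strip_pair_weights(k))
           for k in (5, 7, 11)}
```

Under these assumptions the dilated kernels cover extents of 3, 3, 7, and 11 pixels, and an 11×11 depth-wise kernel with 121 weights per channel is replaced by a strip pair with 22.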

GCT

The GCT attention mechanism (25) is a highly lightweight and efficient architecture based on the normalization operation of channel relationship modeling. Through parameter-free normalization, global context embedding, and adaptive gating operators, GCT attention mechanism can capture competition and cooperative relationships between channel features. Parameter-free normalization can simplify the model, global context embedding integrates global information, and gated adaptive operators intelligently adjust input channels to allow GCT attention mechanism to adapt to diverse data distribution and task requirements. Its trainable channel-level parameters are lightweight and efficient, with flexible weight adjustment and easy deployment. Compared to the Squeeze and Excitation Networks (SE-Net), GCT attention mechanism enhances the ability to model complex features by replacing fully connected layers and introducing a gating mechanism to model channel feature relationships. Overall, GCT attention mechanism demonstrates strong potential in channel feature processing and is suitable for various complex tasks and application scenarios, providing powerful support for improving the performance of deep neural networks. The detailed structure of GCT attention mechanism is shown in Figure 4.

When the GCT attention mechanism module receives an input tensor $x \in \mathbb{R}^{C \times H \times W}$, it processes the tensor through three sequential parts to generate the final refined tensor $\hat{x}$ (also of shape $C \times H \times W$). In the first part, the global context embedding module aggregates global context information in each channel of the input tensor $x$, producing an output tensor $s$. This part uses the l2-norm instead of global average pooling and introduces trainable parameters $\alpha$ to control the weight of each channel; when $\alpha_c$ is close to 0, channel $c$ will not participate in channel normalization. In other words, the weight $\alpha$ allows the GCT attention mechanism to learn the relationship from one channel to others. In the second part, the output tensor $s$ from the first part is taken as input, and the l2-norm is used for cross-channel normalization to create a competitive relationship among channels, resulting in the output tensor $\hat{s}$. In the third part, the output tensor $\hat{s}$ from the second part is taken as input, and a gating mechanism is introduced to adjust the scale of each original channel according to its corresponding gate. Finally, the processed tensor $\hat{x}$ is output. When the gating weight $\gamma_c$ of a channel is positively activated, the GCT attention mechanism promotes competition between that channel and others; when $\gamma_c$ is negatively activated, the GCT attention mechanism encourages cooperation between that channel and others. This dynamic gating mechanism allows the GCT attention mechanism to adjust the relationships between channels based on task and data characteristics, thereby enhancing the model’s expressive power and adaptability.

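In summary, for an input tensor $x \in \mathbb{R}^{C \times H \times W}$, the process of obtaining the refined tensor $\hat{x}$ after passing through the three components of the GCT attention mechanism is described by Eq. [2], which is expressed as follows:

$$
\begin{aligned}
s_c &= \alpha_c \lVert x_c \rVert_2 = \alpha_c \left\{ \left[ \sum_{i=1}^{H} \sum_{j=1}^{W} \left( x_c^{i,j} \right)^2 \right] + \epsilon \right\}^{1/2} \\
\hat{s}_c &= \frac{\sqrt{C}\, s_c}{\lVert s \rVert_2} = \frac{\sqrt{C}\, s_c}{\left[ \left( \sum_{k=1}^{C} s_k^2 \right) + \epsilon \right]^{1/2}} \\
\hat{x}_c &= x_c \left[ 1 + \tanh\left( \gamma_c \hat{s}_c + \beta_c \right) \right]
\end{aligned} \tag{2}
$$

where $\alpha = [\alpha_1, \ldots, \alpha_C]$ are the trainable embedding weights; $\epsilon$ is a small constant that prevents problems with the derivative at zero; $\sqrt{C}$ is a scalar used to normalize $\hat{s}_c$, ensuring that the scale of $\hat{s}_c$ does not become too small when $C$ is large; and $\gamma$ and $\beta$ are trainable weights and biases, with $\gamma = [\gamma_1, \ldots, \gamma_C]$ and $\beta = [\beta_1, \ldots, \beta_C]$.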
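The three parts described above (global context embedding, channel normalization, and gating) can be sketched for a small tensor as follows. This is a minimal illustration, not the network code: the tanh gate and the √C scaling follow the published GCT formulation and are treated here as assumptions, each channel is stored as a flat list of its H×W values, and the function name `gct` is hypothetical.

```python
import math


def gct(x, alpha, gamma, beta, eps=1e-5):
    """Gated channel transformation on x, a list of C channels.

    Each channel is a flat list of its H*W values. alpha, gamma, and
    beta are per-channel parameters (trainable in a real network).
    """
    c = len(x)
    # Part 1: global context embedding via the l2-norm of each channel.
    s = [alpha[i] * math.sqrt(sum(v * v for v in x[i]) + eps)
         for i in range(c)]
    # Part 2: cross-channel l2 normalization, scaled by sqrt(C).
    norm = math.sqrt(sum(v * v for v in s) + eps)
    s_hat = [math.sqrt(c) * v / norm for v in s]
    # Part 3: gating; a gate of 1 leaves the channel unchanged.
    gates = [1.0 + math.tanh(gamma[i] * s_hat[i] + beta[i])
             for i in range(c)]
    return [[v * gates[i] for v in x[i]] for i in range(c)]
```

With `gamma` and `beta` set to zero every gate equals 1 and the module acts as an identity mapping, which is why it can be inserted into an existing network without disturbing it at initialization. Setting `alpha[c]` to zero removes channel `c` from the normalization, as described in the text.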

MAFB

The MFB (26) makes full use of multi-modal information by adaptively weighting inputs from different modalities. When dealing with multi-modal fusion tasks, the MFB uses three strategies (i.e., addition, multiplication, and the maximum value) to fuse the feature maps of different modalities, and then concatenates the results and sends them to a convolution layer to enhance model flexibility and multi-modal fusion performance. This study introduced the GCT attention mechanism in addition to the three MFB fusion strategies, forming the MAFB to enhance the adaptability and performance of the model. Since the skip connection structure used in the U-Net already connects the feature maps of the corresponding layers in the encoding and decoding stages, the MFB was adjusted by removing the connection between the two convolutional layers and the modal output of the upper layer, which better suits the model requirements and reduces redundancy. The MAFB module is illustrated in Figure 5, where +, ×, Max, and C represent element-wise summation, element-wise product, element-wise maximization, and tensor concatenation operations, respectively. The computation of the four output tensors is described by Eq. [3].

Eq. [3] is expressed as follows:

In Eq. [3], the output tensor of the i-th layer in the encoding stage serves as the input for modality 1; the output tensor of the corresponding i-th layer in the decoding stage serves as the input for modality 2; and the attention function denotes the GCT attention mechanism.

Because the skip connection structure employed in the U-Net inherently connects the feature maps of corresponding layers in the encoding and decoding stages, adjustments were made to the original MFB module. Specifically, the connections between the two convolutional layers and the upper modal outputs were removed. This modification not only met the model’s requirements but also reduced network redundancy. The adjusted MAFB module processes the two input tensors to generate a fused output tensor. Subsequently, two 3×3 convolutional operations are performed on this output, and the result is used as the output of the next layer in the decoder. Afterwards, an up-sampling operation is carried out with the decoding layer to preserve more contextual semantic features. The MFB module itself already achieves the weighted integration of multimodal information. With the addition of the GCT attention mechanism, the model better captures the correlations between different modalities and dynamically adjusts the weights of the modalities based on the characteristics of the input data. This improvement further enhances the model’s ability to comprehensively use multimodal information.
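The three fusion strategies and the concatenation step can be illustrated on small tensors. The sketch below covers only the element-wise addition, product, maximum, and channel concatenation named in the text; where exactly the GCT attention is applied inside the MAFB, and the subsequent 3×3 convolutions, are not reproduced here. The function name `fuse_modalities` and the flat-list channel layout are assumptions for illustration.

```python
def fuse_modalities(enc, dec):
    """Fuse encoder and decoder features with three strategies.

    enc and dec are lists of C channels, each a flat list of values
    with matching sizes. Returns 3*C channels: the element-wise sum,
    product, and maximum, concatenated along the channel axis.
    """
    added = [[a + b for a, b in zip(ca, cb)] for ca, cb in zip(enc, dec)]
    multiplied = [[a * b for a, b in zip(ca, cb)] for ca, cb in zip(enc, dec)]
    maximum = [[max(a, b) for a, b in zip(ca, cb)] for ca, cb in zip(enc, dec)]
    # Channel-wise concatenation of the three fused feature maps.
    return added + multiplied + maximum
```

Because the concatenated tensor has three times as many channels as each input, a following convolution is needed to bring the channel count back to what the decoder expects.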

Results

Model training

The MA-DUNet model, which combines the AMS convolution, the GCT attention mechanism, and the MAFB, was trained as follows. The number of iterations was set to 100, and the batch size was 32. The Adam optimizer was used for parameter tuning, with the initial learning rate set to 0.001 and the patience parameter set to 15. If the loss value remained stable for 15 epochs, the learning rate was reduced by a factor of 10 to 0.0001.
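The learning-rate rule described above can be sketched as a small plateau scheduler. This is an illustrative sketch only: the class name `PlateauScheduler`, the `min_delta` threshold, and the choice to reduce the rate on exactly the 15th epoch without improvement are assumptions, and deep-learning frameworks provide their own implementations whose counting conventions may differ.

```python
class PlateauScheduler:
    """Reduce the learning rate when the loss stops improving.

    Hypothetical helper mirroring the settings in the text: initial
    learning rate 0.001, patience 15, reduction factor 0.1.
    """

    def __init__(self, lr=1e-3, factor=0.1, patience=15, min_delta=0.0):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.stale_epochs = 0

    def step(self, loss):
        """Record one epoch's loss and return the learning rate to use."""
        if loss < self.best - self.min_delta:
            self.best = loss
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
            if self.stale_epochs >= self.patience:
                self.lr *= self.factor
                self.stale_epochs = 0
        return self.lr
```

A training loop would call `step` once per epoch with the monitored loss and pass the returned value to the optimizer.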

Evaluation index

To quantify the experimental results, this study used accuracy (ACC), precision (Pre), sensitivity (Sen), specificity (Spe), the area under the curve (AUC) of the receiver operating characteristic (ROC) curve, and the F1 score as evaluation indicators. ACC represents the proportion of correct predictions in all samples. Pre represents the proportion of samples that are actually blood vessels among all the samples predicted as blood vessels. Sen represents the proportion of samples that are correctly predicted as blood vessels to all the samples that are actually blood vessels. Spe describes the proportion of samples that are correctly predicted as background to all the samples that are actually background. AUC is the area under the ROC curve and summarizes how well the model separates vessel samples from background samples across decision thresholds. The F1 score is the harmonic mean of Pre and Sen (recall), and is used to comprehensively measure both. A high F1 score indicates that the model has achieved a good balance between Pre and Sen. The specific calculation formulas for these indicators are set out in Table 2.

Table 2

| Evaluation index | Formula |
|---|---|
| ACC | ACC = (TP + TN)/(TP + TN + FP + FN) |
| Pre | Pre = TP/(TP + FP) |
| Sen | Sen = TP/(TP + FN) |
| Spe | Spe = TN/(TN + FP) |
| F1 score | F1 = 2(Pre × Sen)/(Pre + Sen) |

ACC, accuracy; FP, false positive; FN, false negative; Pre, precision; Sen, sensitivity; Spe, specificity; TP, true positive; TN, true negative.

In Table 2, true positive (TP) represents pixels that are predicted to be blood vessels and are actually blood vessels; true negative (TN) represents pixels that are predicted to be background and are actually background; false positive (FP) represents pixels that are predicted to be blood vessels but are actually background; and false negative (FN) represents pixels that are predicted to be background but are actually blood vessels.
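The formulas in Table 2 translate directly into code. The following sketch counts TP, TN, FP, and FN from binary pixel labels (1 for vessel, 0 for background) and evaluates the five threshold-based indicators; the function names are illustrative, and AUC is omitted because it requires the continuous prediction scores rather than binary labels.

```python
def confusion_counts(pred, truth):
    """Count TP, TN, FP, FN for flat lists of 0/1 pixel labels."""
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    return tp, tn, fp, fn


def segmentation_metrics(tp, tn, fp, fn):
    """Evaluate the Table 2 formulas.

    Assumes at least one predicted and one actual vessel pixel and at
    least one background pixel; otherwise a denominator is zero.
    """
    pre = tp / (tp + fp)
    sen = tp / (tp + fn)
    return {
        "ACC": (tp + tn) / (tp + tn + fp + fn),
        "Pre": pre,
        "Sen": sen,
        "Spe": tn / (tn + fp),
        "F1": 2 * pre * sen / (pre + sen),
    }
```

For example, a prediction with 2 TP, 4 TN, 1 FP, and 1 FN gives an ACC of 0.75, a Spe of 0.8, and Pre, Sen, and F1 all equal to 2/3.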

Ablation study on modules

To verify the effectiveness of each module of the MA-DUNet, ablation experiments were conducted on the DRIVE, STARE, and CHASE-DB1 datasets to confirm the role of each module in network performance. By comparing the performance differences between the network models with different modules and their variant models lacking the corresponding modules one by one, the effect of each module on overall performance could be clearly assessed. The experimental design included the following six parts: (I) DUNet; (II) DUNet + AMS; (III) DUNet + GCT; (IV) DUNet + MFB; (V) DUNet + MAFB; and (VI) MA-DUNet. In these experiments, DUNet + AMS indicates the addition of AMS convolution between the encoding layers of the DUNet; DUNet + GCT represents the incorporation of GCT attention mechanism between the bottom layers of the encoder and decoder in the DUNet; DUNet + MFB signifies the introduction of a MFB in the decoder part of the DUNet; DUNet + MAFB denotes the integration of a MAFB, improved through the use of both GCT attention mechanism and MFB, into the decoding structure of the network; and MA-DUNet represents the simultaneous addition of the multi-scale AMS convolution, GCT attention mechanism, and MAFB to the DUNet model. The experimental results are presented in Tables 3-5.

Table 3

| Method | Pre (%) | Sen (%) | Spe (%) | ACC (%) | F1 score (%) | AUC (%) |
|---|---|---|---|---|---|---|
| DUNet | 85.31 | 79.39 | 97.98 | 95.62 | 82.26 | 97.97 |
| DUNet + AMS | 85.26 | 79.78 | 97.98 | 95.65 | 82.40 | 97.99 |
| DUNet + GCT | 85.45 | 79.42 | 98.03 | 95.66 | 82.35 | 98.04 |
| DUNet + MFB | 85.52 | 79.68 | 97.99 | 95.64 | 82.36 | 97.99 |
| DUNet + MAFB | 85.68 | 79.83 | 98.05 | 95.69 | 82.43 | 98.06 |
| MA-DUNet | 85.76† | 79.93† | 98.11† | 95.72† | 82.50† | 98.10† |

†, highest value. ACC, accuracy; AMS, atrous multi-scale; AUC, area under the curve; DRIVE, Digital Retinal Images for Vessel Extraction; DUNet, Deformable U-shaped Network; GCT, gated channel transformation; MA-DUNet, Multi-modal Attention Deformable U-shaped Network; MAFB, Multi-modal Attention Fusion Block; MFB, Multi-modal Fusion Block; Pre, precision; Sen, sensitivity; Spe, specificity.

Table 4

| Method | Pre (%) | Sen (%) | Spe (%) | ACC (%) | F1 score (%) | AUC (%) |
|---|---|---|---|---|---|---|
| DUNet | 88.03 | 80.49 | 98.51 | 96.52 | 84.20 | 98.71 |
| DUNet + AMS | 88.31 | 81.14 | 98.63 | 96.66 | 84.65† | 98.83 |
| DUNet + GCT | 88.43 | 80.64 | 98.65 | 96.63 | 84.42 | 98.80 |
| DUNet + MFB | 88.21 | 80.76 | 98.62 | 96.60 | 84.23 | 98.77 |
| DUNet + MAFB | 88.45 | 81.28 | 98.68 | 96.64 | 84.31 | 98.83 |
| MA-DUNet | 88.75† | 81.58† | 98.74† | 96.68† | 84.53 | 98.89† |

†, highest value. ACC, accuracy; AMS, atrous multi-scale; AUC, area under the curve; DUNet, Deformable U-shaped Network; GCT, gated channel transformation; MA-DUNet, Multi-modal Attention Deformable U-shaped Network; MAFB, Multi-modal Attention Fusion Block; MFB, Multi-modal Fusion Block; Pre, precision; Sen, sensitivity; Spe, specificity; STARE, Structured Analysis of the Retina.

Table 5

| Method | Pre (%) | Sen (%) | Spe (%) | ACC (%) | F1 score (%) | AUC (%) |
|---|---|---|---|---|---|---|
| DUNet | 79.74 | 79.35 | 97.88 | 96.24 | 79.54 | 98.23 |
| DUNet + AMS | 80.27† | 78.99 | 98.06 | 96.33 | 79.62 | 98.27 |
| DUNet + GCT | 79.99 | 79.42 | 98.01 | 96.32 | 79.67 | 98.26 |
| DUNet + MFB | 80.03 | 79.23 | 98.03 | 96.32 | 79.63 | 98.25 |
| DUNet + MAFB | 80.09 | 79.56 | 98.03 | 96.35 | 79.82 | 98.30 |
| MA-DUNet | 80.15 | 79.98† | 98.09† | 96.38† | 80.04† | 98.35† |

†, highest value. ACC, accuracy; AMS, atrous multi-scale; AUC, area under the curve; CHASE-DB1, Child Heart and Health Study in England Database 1; DUNet, Deformable U-shaped Network; GCT, gated channel transformation; MA-DUNet, Multi-modal Attention Deformable U-shaped Network; MAFB, Multi-modal Attention Fusion Block; MFB, Multi-modal Fusion Block; Pre, precision; Sen, sensitivity; Spe, specificity.

Based on the ablation experiment results shown in Tables 3-5 across the three datasets, we observed that the addition of different modules to the original DUNet generally improved model performance. Although there were occasional slight decreases in individual metrics when a certain module was introduced, the overall trend still indicated that these additional modules had a positive effect on model performance. Further, the improved network model compensated for some of these performance deficiencies. Ultimately, our proposed MA-DUNet integrates the advantages of the GCT attention mechanism and multi-modal fusion, and combines contextual information to guide the operations in the decoding stage. Through structural optimization and information guidance, the model achieves improvements over the DUNet in multiple evaluation metrics, including Pre, Sen, Spe, ACC, F1 score, and AUC. Specifically, in terms of Pre, Sen, Spe, ACC, F1 score, and AUC, respectively, there were improvements of 0.45, 0.54, 0.13, 0.10, 0.24, and 0.13 percentage points on the DRIVE dataset; 0.72, 1.09, 0.23, 0.16, 0.33, and 0.18 percentage points on the STARE dataset; and 0.41, 0.63, 0.21, 0.14, 0.50, and 0.12 percentage points on the CHASE-DB1 dataset. Among these metrics, Pre and Sen generally showed the largest improvements, indicating that the model was better able to identify TPs than the DUNet.
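The reported gains are the differences between the MA-DUNet and DUNet rows of the ablation tables. As a quick check of that arithmetic, the sketch below recomputes the gains for the DRIVE dataset from the Table 3 values; the helper name `gains` and the dictionary layout are illustrative.

```python
# Pre, Sen, Spe, ACC, F1 score, AUC (%), copied from Table 3 (DRIVE).
drive = {
    "DUNet": (85.31, 79.39, 97.98, 95.62, 82.26, 97.97),
    "MA-DUNet": (85.76, 79.93, 98.11, 95.72, 82.50, 98.10),
}


def gains(baseline, improved):
    """Per-metric difference in percentage points, rounded to 2 places."""
    return [round(new - old, 2) for old, new in zip(baseline, improved)]


drive_gains = gains(drive["DUNet"], drive["MA-DUNet"])
```

The same helper applied to the DUNet and MA-DUNet rows of Tables 4 and 5 reproduces the STARE and CHASE-DB1 figures quoted in the text.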

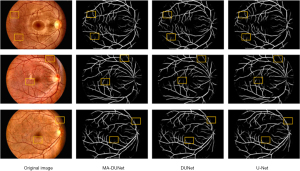

Comparison with other algorithms

During the training phase of the segmentation model, after adding the AMS convolution, GCT attention mechanism, and MAFB to the DUNet, the model’s Sen, Spe, ACC, and AUC all improved on the DRIVE, STARE, and CHASE-DB1 datasets. For the DRIVE, STARE, and CHASE-DB1 datasets, the model’s ACC values reached 95.72%, 96.68%, and 96.38%, respectively, and the model’s AUC values reached 98.10%, 98.89%, and 98.35%, respectively. The quantitative results comparing our proposed MA-DUNet method with other methods are shown in Tables 6-8. The qualitative segmentation results of two representative examples from each of the three datasets using different network models (U-Net, DUNet, and MA-DUNet) are shown in Figure 6. The first two rows of images are the results for the DRIVE dataset, the middle two rows are the results for the STARE dataset, and the last two rows are the results for the CHASE-DB1 dataset. Our proposed MA-DUNet model excelled at detail processing, accurately capturing the tiny peripheral branches of blood vessels and reducing blurred boundaries and breaks in the segmentation results.

Table 6

| Method | Sen (%) | Spe (%) | ACC (%) | AUC (%) | Year |
|---|---|---|---|---|---|
| Ronneberger et al. (13) | 78.69 | 97.99 | 95.52 | 97.77 | 2015 |
| Liskowski et al. (27) | 77.63 | 97.68 | 94.95 | 97.20 | 2016 |
| Yan et al. (28) | 76.53 | 98.18 | 95.42 | 97.52 | 2018 |
| Cheng et al. (29) | 76.72 | 98.14 | 95.59 | 97.93 | 2020 |
| Khan et al. (30) | 79.49 | 97.38 | 95.79 | 97.20 | 2020 |
| Li et al. (31) | 79.21 | 98.10 | 95.68 | 98.06 | 2020 |
| Muzammil et al. (32) | 72.71 | 97.98 | 95.71 | 97.90 | 2022 |
| Liang et al. (33) | 80.54† | 97.89 | 95.68 | 98.07 | 2023 |
| Liu et al. (34) | 79.85 | 97.91 | 95.65 | 97.98 | 2023 |
| Tan et al. (35) | 77.26 | 98.26 | 95.59 | 97.92 | 2024 |
| Ding et al. (36) | 74.84 | 98.36† | 95.37 | 97.59 | 2024 |
| MA-DUNet (ours) | 79.93 | 98.11 | 95.72† | 98.10† | 2025† |

†, highest value. ACC, accuracy; AUC, area under the curve; DRIVE, Digital Retinal Images for Vessel Extraction; MA-DUNet, Multi-modal Attention Deformable U-shaped Network; Sen, sensitivity; Spe, specificity.

Table 7

| Method | Sen (%) | Spe (%) | ACC (%) | AUC (%) | Year |
|---|---|---|---|---|---|
| Ronneberger et al. (13) | 76.46 | 98.60 | 96.40 | 97.91 | 2015 |
| Liskowski et al. (27) | 78.66 | 97.54 | 95.63 | 97.88 | 2016 |
| Jiang et al. (37) | 78.20 | 97.98 | 96.53 | 98.70 | 2018 |
| Yan et al. (28) | 75.81 | 98.46 | 96.12 | 98.01 | 2018 |
| Li et al. (38) | 75.36 | 98.08 | 95.81 | 97.21 | 2020 |
| Khan et al. (30) | 81.18 | 97.38 | 95.43 | 97.28 | 2020 |
| Muzammil et al. (32) | 71.64 | 97.60 | 95.60 | 98.65 | 2022 |
| Tan et al. (35) | 71.83 | 99.15† | 96.48 | 98.62 | 2024 |
| Ding et al. (36) | 70.10 | 98.98 | 95.94 | 98.01 | 2024 |
| MA-DUNet (ours) | 81.58† | 98.74 | 96.68† | 98.89† | 2025† |

†, highest value. ACC, accuracy; AUC, area under the curve; MA-DUNet, Multi-modal Attention Deformable U-shaped Network; Sen, sensitivity; Spe, specificity; STARE, Structured Analysis of the Retina.

Table 8

| Method | Sen (%) | Spe (%) | ACC (%) | AUC (%) | Year |
|---|---|---|---|---|---|
| Ronneberger et al. (13) | 79.38 | 97.57 | 96.09 | 97.91 | 2015 |
| Yan et al. (28) | 76.33 | 98.09 | 96.10 | 97.81 | 2018 |
| Alom et al. (39) | 77.26 | 98.20† | 95.53 | 97.79 | 2019 |
| Khan et al. (30) | 81.90 | 97.35 | 96.37 | 97.80 | 2020 |
| Du et al. (40) | 81.95 | 97.27 | 95.90 | 97.84 | 2021 |
| Deng et al. (41) | 85.43† | 96.93 | 95.87 | 98.06 | 2022 |
| Liang et al. (33) | 82.40 | 97.75 | 96.35 | 98.34 | 2023 |
| Tan et al. (35) | 79.83 | 98.04 | 96.37 | 98.28 | 2024 |
| Ding et al. (36) | 74.75 | 97.78 | 95.66 | 96.95 | 2024 |
| MA-DUNet (ours) | 79.98 | 98.09 | 96.38† | 98.35† | 2025† |

†, highest value. ACC, accuracy; AUC, area under the curve; MA-DUNet, Multi-modal Attention Deformable U-shaped Network; Sen, sensitivity; Spe, specificity; CHASE-DB1, Child Heart and Health Study in England Database 1.

On the DRIVE dataset, the MA-DUNet achieved a Sen of 79.93%, a Spe of 98.11%, an ACC of 95.72%, and an AUC of 98.10%. Its Sen was competitive, exceeding that of several earlier models, including those of Ronneberger et al. [2015], Liskowski et al. [2016], and Yan et al. [2018] (13,27,28). Its Spe also exceeded that of most of the compared models, including those of Khan et al. [2020] and Liang et al. [2023] (30,33), although Ding et al. [2024] reported the highest Spe (36). With an ACC of 95.72% and an AUC of 98.10%, the MA-DUNet remained highly competitive with the recent methods of Li et al. [2020], Liang et al. [2023], and Tan et al. [2024] (31,33,35). These results support the effectiveness of the MA-DUNet in enhancing segmentation performance on the DRIVE dataset and point to its potential for clinical application in retinal vessel segmentation.

On the STARE dataset, the MA-DUNet achieved a Sen of 81.58%, a Spe of 98.74%, an ACC of 96.68%, and an AUC of 98.89%. Its Sen was the highest among the compared methods, exceeding that of existing models such as those of Ronneberger et al. [2015], Liskowski et al. [2016], and Jiang et al. [2018] (13,27,37). Its Spe was higher than that of most of the compared methods and was exceeded only by those of Tan et al. [2024] and Ding et al. [2024] (35,36). Its ACC and AUC, at 96.68% and 98.89%, respectively, were also the highest in Table 7, exceeding those of methods such as Khan et al. [2020] and Yan et al. [2018] (28,30). These results indicate that the MA-DUNet improved segmentation performance on the STARE dataset, further supporting its clinical potential in retinal vessel segmentation applications.
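The STARE comparison can be cross-checked directly against Table 7. The sketch below transcribes the table rows and ranks each method per metric. The helper names `rank_of` and `best_method` are hypothetical and serve only this illustration.

```python
# Rows transcribed from Table 7 (STARE): method -> (Sen, Spe, ACC, AUC) in %.
TABLE_7 = {
    "Ronneberger et al.": (76.46, 98.60, 96.40, 97.91),
    "Liskowski et al.": (78.66, 97.54, 95.63, 97.88),
    "Jiang et al.": (78.20, 97.98, 96.53, 98.70),
    "Yan et al.": (75.81, 98.46, 96.12, 98.01),
    "Li et al.": (75.36, 98.08, 95.81, 97.21),
    "Khan et al.": (81.18, 97.38, 95.43, 97.28),
    "Muzammil et al.": (71.64, 97.60, 95.60, 98.65),
    "Tan et al.": (71.83, 99.15, 96.48, 98.62),
    "Ding et al.": (70.10, 98.98, 95.94, 98.01),
    "MA-DUNet": (81.58, 98.74, 96.68, 98.89),
}
METRICS = ("Sen", "Spe", "ACC", "AUC")


def best_method(metric: str, table: dict = TABLE_7) -> str:
    """Name of the method with the highest value for `metric`."""
    col = METRICS.index(metric)
    return max(table, key=lambda name: table[name][col])


def rank_of(method: str, metric: str, table: dict = TABLE_7) -> int:
    """1-based rank of `method` on `metric` (1 = highest value)."""
    col = METRICS.index(metric)
    ordered = sorted(table, key=lambda name: table[name][col], reverse=True)
    return ordered.index(method) + 1


if __name__ == "__main__":
    for metric in METRICS:
        print(metric, "| best:", best_method(metric),
              "| MA-DUNet rank:", rank_of("MA-DUNet", metric))
```

On these rows, MA-DUNet ranks first for Sen, ACC, and AUC and third for Spe, behind Tan et al. and Ding et al.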

On the CHASE-DB1 dataset, the MA-DUNet achieved a Sen of 79.98%, a Spe of 98.09%, an ACC of 96.38%, and an AUC of 98.35%. Its Sen approached that of Khan et al. [2020] and Du et al. [2021] (30,40), and its Spe matched that of Yan et al. [2018] and exceeded that of most other models, including that of Tan et al. [2024] (28,35). Its ACC and AUC, at 96.38% and 98.35%, respectively, were the highest in Table 8 and compared favorably with the methods of Deng et al. [2022] and Liang et al. [2023] (33,41). These results highlight the MA-DUNet’s segmentation performance on the CHASE-DB1 dataset and further support its potential clinical value in retinal vessel segmentation. Overall, the MA-DUNet exhibited stable performance on this dataset, which supports its use in the early detection of retinal diseases.
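Unlike Sen, Spe, and ACC, the AUC does not depend on a single binarization threshold. As a minimal sketch, assuming that AUC here denotes the area under the receiver operating characteristic curve, it can be computed from per-pixel vessel probabilities with the rank-sum (Mann-Whitney) formulation. The function name and the toy inputs are hypothetical.

```python
def roc_auc(labels, scores):
    """ROC AUC via the rank-sum formulation.

    labels: iterable of 0/1 ground-truth values (1 = vessel pixel).
    scores: iterable of predicted vessel probabilities, same length.
    Tied scores receive the average of their ranks.
    """
    pairs = sorted(zip(scores, labels))
    n = len(pairs)
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j to the end of the block of tied scores.
        while j + 1 < n and pairs[j + 1][0] == pairs[i][0]:
            j += 1
        average_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1

    n_pos = sum(1 for _, label in pairs if label == 1)
    n_neg = n - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("AUC needs at least one positive and one negative sample")

    rank_sum_pos = sum(r for r, (_, label) in zip(ranks, pairs) if label == 1)
    return (rank_sum_pos - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


if __name__ == "__main__":
    # Toy example: four pixels, two of them vessel pixels.
    print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

The returned value is the probability that a randomly chosen vessel pixel receives a higher score than a randomly chosen background pixel, with ties counted as one half.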

Model comparison experiments in real-world scenarios

To validate the segmentation performance of the models in real-world scenarios, we conducted tests using clinical data collected in collaboration with hospitals. This dataset includes tiny vessels and low-contrast areas, which are difficult to segment in practice, and thus allows the models’ performance to be assessed under complex conditions. The segmentation results of the DUNet model and the MA-DUNet model are shown in Figure 7.

According to the results, the MA-DUNet model accurately segmented the fine structures of tiny vessels, whereas the DUNet model tended to miss or inaccurately segment these areas. In low-contrast regions, the MA-DUNet model also performed well, distinguishing vessels from the background and maintaining high segmentation accuracy even in areas with uneven lighting or where the vessels and surrounding tissues have similar colors. Conversely, the segmentation results of the DUNet model in these areas were often blurred, which affected the overall segmentation quality. Thus, our model outperformed the DUNet model in these real-world scenarios, suggesting that it is applicable to clinical practice. With more accurate vascular segmentation results, doctors could conduct faster and more accurate disease assessments, which could also aid in the formulation of more personalized treatment plans.

Discussion

This study sought to improve the blood vessel segmentation of diabetic retinal images, promote the advancement of computer-aided DR diagnosis, and provide doctors with convenient and efficient auxiliary tools, thus facilitating the early detection of the disease and effectively reducing the risk of blindness caused by DR. Research on vessel segmentation in diabetic retinal images is crucial for diagnosis and treatment. By accurately segmenting the blood vessel structure in fundus images, various eye diseases can be detected and diagnosed early, and the severity of the disease and treatment effect can be evaluated. The accurate segmentation of blood vessel structures in retinal images also provides an important data basis for scientific research in ophthalmic medicine and promotes progress in the field.

To address the difficulty of segmenting blood vessel breaks and tiny terminal branches in retinal blood vessel images, this study introduced the AMS convolution into the encoding stage of the DUNet framework to expand the network’s receptive field, aggregate local information, and capture multi-scale feature information. The GCT attention mechanism was then introduced between the encoder and decoder to enhance feature transfer and the extraction of important information. The GCT attention mechanism can dynamically adjust the relationships between feature channels, highlight task-related features, and improve the quality and accuracy of the segmentation results. Finally, in the decoding process, the MAFB was used to fuse the encoding-layer and decoding-layer features, and the GCT attention mechanism was introduced to improve model adaptability.
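As an illustration of how a GCT-style gate adjusts channel relationships, the following minimal sketch follows the published gated channel transformation formulation (25): a per-channel L2-norm embedding, normalization across channels, and a `1 + tanh` gate. It is not the implementation used in the MA-DUNet. The function name, the nested-list layout, and the `eps` value are assumptions chosen to keep the example self-contained, and a real model would use a tensor library with learnable parameters.

```python
import math


def gct(x, alpha, gamma, beta, eps=1e-5):
    """Gated channel transformation on a feature map x[c][h][w] (nested lists).

    alpha, gamma, beta: per-channel parameters, each a list of length C.
    eps: small constant for numerical stability (assumed value).
    """
    num_channels = len(x)

    # 1) Global context embedding: scaled L2 norm of each channel.
    s = [
        alpha[c] * math.sqrt(sum(v * v for row in x[c] for v in row) + eps)
        for c in range(num_channels)
    ]

    # 2) Channel normalization: channels compete through a shared denominator.
    denom = math.sqrt(sum(sc * sc for sc in s) + eps)
    s_hat = [math.sqrt(num_channels) * sc / denom for sc in s]

    # 3) Gating: each channel is rescaled by 1 + tanh(gamma * s_hat + beta).
    gates = [
        1.0 + math.tanh(gamma[c] * s_hat[c] + beta[c])
        for c in range(num_channels)
    ]
    return [[[v * gates[c] for v in row] for row in x[c]]
            for c in range(num_channels)]


if __name__ == "__main__":
    # Toy feature map: 2 channels, each 1 x 2 (values are illustrative only).
    features = [[[3.0, 4.0]], [[0.0, 1.0]]]
    # With gamma = beta = 0 the gate equals 1, so the features pass unchanged.
    print(gct(features, alpha=[1.0, 1.0], gamma=[0.0, 0.0], beta=[0.0, 0.0]))
    # With positive gamma, the channel with the larger norm is amplified more.
    print(gct(features, alpha=[1.0, 1.0], gamma=[1.0, 1.0], beta=[0.0, 0.0]))
```

With `gamma` and `beta` at zero the block is an identity mapping, so it can be inserted into an existing network without changing its initial behavior.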

Based on the blood vessel segmentation results, the MA-DUNet model proposed in this study segmented blood vessels in retinal images more effectively than the compared models. When faced with distinct vascular structures, all three models (UNet, DUNet, and MA-DUNet) demonstrated excellent segmentation capabilities and were able to accurately segment vascular regions. This is mainly because these blood vessels have sharp contrast and edge features, allowing the models to easily identify and segment them. However, when faced with small and complex vascular branch endings, most models struggled to accurately capture them due to weak vascular signals and the similarity of the surrounding tissues. Conversely, the proposed MA-DUNet model handles detail better: it can accurately capture the tiny terminal branches of blood vessels and effectively eliminate blurred boundaries and breaks in the segmentation results, thus presenting a clearer and more complete vascular structure. In addition, the MA-DUNet model better maintains the continuity and consistency of the blood vessel segmentation results.

Due to the high noise in images and the low contrast between blood vessels and surrounding tissues, false detections and missed detections continue to occur in blood vessel segmentation, limiting model performance. Therefore, in our future research, we will continue to explore and improve the algorithm. We will start by increasing the diversity of the training data, introducing more complex feature extractors, optimizing the network structure, and introducing more sophisticated post-processing technology to improve the model’s ability to handle complex tasks. These steps are intended to improve the method’s adaptability and reduce over-segmentation, making it more practical and reliable.

The current study primarily focused on OCTA results, but future research will consider integrating three-dimensional imaging technology and functional parameters. Three-dimensional imaging technology (42,43) can provide more structural information about blood vessels, helping us to understand the distribution and course of blood vessels more comprehensively, whereas functional blood flow parameters can reflect the physiological status of blood vessels, providing more precise evidence for the diagnosis and treatment of vascular diseases (44,45). Therefore, we intend to gradually incorporate these elements in subsequent research. By integrating three-dimensional imaging technology and functional blood flow parameters, we expect to achieve a more comprehensive assessment of vascular health status and to further enhance our understanding of vascular pathological changes, which may in turn benefit the research and treatment of vascular diseases.

In this study, due to dataset limitations, we did not include key demographic characteristics, such as age, gender, and body mass index, or related clinical data. Therefore, we were unable to directly analyze the association between these factors and vascular parameters. These data are crucial for comprehensively revealing the interaction between vascular health and patient characteristics (46). Consequently, in future research, we intend to actively collect these data and to examine the relationship between vascular parameters and demographic and clinical data in greater depth. Through these analyses, we expect to gain deeper insights into vascular health and identify the potential factors influencing it, which would provide a scientific basis for the development of prevention and treatment strategies for vascular diseases. We intend to carry out a series of experimental studies or larger-scale longitudinal studies to explore these relationships more comprehensively. We look forward to further advancing the field of vascular diseases through these studies and contributing to the health of patients.

Conclusions

This study established a novel retinal blood vessel segmentation network, the MA-DUNet, which significantly improves segmentation accuracy by incorporating the AMS convolution, GCT attention mechanism, and MAFB. The network effectively addresses the complexity and diversity of fundus images, accurately identifying vascular structures, and providing a reliable basis for the early diagnosis of ocular diseases. The experimental results showed that the MA-DUNet exhibits excellent performance in retinal blood vessel segmentation tasks, and thus has promising application prospects and substantial clinical value. We believe this innovative approach will make a positive contribution to the advancement of ophthalmology and medical imaging technology.

Acknowledgments

None.

Footnote

Reporting Checklist: The authors have completed the CLEAR reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-24-2267/rc

Funding: This work was supported by

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-24-2267/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Khan Z, Gaidhane AM, Singh M, Ganesan S, Kaur M, Sharma GC, et al. Diagnostic Accuracy of IDX-DR for Detecting Diabetic Retinopathy: A Systematic Review and Meta-Analysis. Am J Ophthalmol 2025;273:192-204.

- Irodi A, Zhu Z, Grzybowski A, Wu Y, Cheung CY, Li H, Tan G, Wong TY. The evolution of diabetic retinopathy screening. Eye (Lond) 2025;39:1040-6.

- Saeedi P, Petersohn I, Salpea P, Malanda B, Karuranga S, Unwin N, Colagiuri S, Guariguata L, Motala AA, Ogurtsova K, Shaw JE, Bright D, Williams R; IDF Diabetes Atlas Committee. Global and regional diabetes prevalence estimates for 2019 and projections for 2030 and 2045: Results from the International Diabetes Federation Diabetes Atlas, 9th edition. Diabetes Res Clin Pract 2019;157:107843.

- Farooq MS, Arooj A, Alroobaea R, Baqasah AM, Jabarulla MY, Singh D, Sardar R. Untangling Computer-Aided Diagnostic System for Screening Diabetic Retinopathy Based on Deep Learning Techniques. Sensors (Basel) 2022;22:1803.

- Yang Z, Tan TE, Shao Y, Wong TY, Li X. Classification of diabetic retinopathy: Past, present and future. Front Endocrinol (Lausanne) 2022;13:1079217.

- Guo H, Wu W, Huang Y, Huang Y, Jin N, Ma H, Li Q. Correlation between Systemic Inflammation and Morphological Changes of Retinal Neurovascular Unit in Patients with Early Signs of Diabetic Retinopathy: An OCT and OCT-Angiography Study. Ophthalmic Res 2025;68:263-74.

- Tang VTS, Symons RCA, Fourlanos S, Guest D, McKendrick AM. The relationship between ON-OFF function and OCT structural and angiographic parameters in early diabetic retinal disease. Ophthalmic Physiol Opt 2025;45:77-88.

- Garg I, Miller JB. Semi-automated algorithm using directional filter for the precise quantification of non-perfusion area on widefield swept-source optical coherence tomography angiograms. Quant Imaging Med Surg 2023;13:3688-702.

- Xu GX, Ren CX. SPNet: A novel deep neural network for retinal vessel segmentation based on shared decoder and pyramid-like loss. Neurocomputing 2023;523:199-212.

- Wang S, Yin Y, Cao G, Wei B, Zheng Y, Yang G. Hierarchical retinal blood vessel segmentation based on feature and ensemble learning. Neurocomputing 2015;149:708-17.

- Nergiz M, Akın M. Retinal vessel segmentation via structure tensor coloring and anisotropy enhancement. Symmetry 2017;9:276.

- Adeyinka AA, Adebiyi MO, Akande NO, Ogundokun RO, Kayode AA, Oladele TO. A deep convolutional encoder-decoder architecture for retinal blood vessels segmentation. Computational Science and Its Applications-ICCSA 2019: 19th International Conference, Saint Petersburg, Russia, July 1–4, 2019, Proceedings, Part V 19. Springer International Publishing; 2019:180-9.

- Ronneberger O, Fischer P, Brox T. U-net: Convolutional networks for biomedical image segmentation. In: Navab N, Hornegger J, Wells WM, Frangi AF, editors. Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III 18. Springer International Publishing; 2015:234-41.

- Wu H, Wang W, Zhong J, Lei B, Wen Z, Qin J. SCS-Net: A Scale and Context Sensitive Network for Retinal Vessel Segmentation. Med Image Anal 2021;70:102025.

- Sun K, Chen Y, Chao Y, Geng J, Chen Y. A retinal vessel segmentation method based improved U-Net model. Biomed Signal Process Control 2023;82:104574.

- Jin Q, Meng Z, Pham TD, Chen Q, Wei L, Su R. DUNet: A deformable network for retinal vessel segmentation. Knowledge-Based Systems 2019;178:149-62.

- Jordan KC, Menolotto M, Bolster NM, Livingstone IA, Giardini ME. A review of feature-based retinal image analysis. Expert Rev Ophthalmol 2017;12:207-20.

- Staal J, Abràmoff MD, Niemeijer M, Viergever MA, van Ginneken B. Ridge-based vessel segmentation in color images of the retina. IEEE Trans Med Imaging 2004;23:501-9.

- Hoover A, Kouznetsova V, Goldbaum M. Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response. IEEE Trans Med Imaging 2000;19:203-10.

- Owen CG, Rudnicka AR, Mullen R, Barman SA, Monekosso D, Whincup PH, Ng J, Paterson C. Measuring retinal vessel tortuosity in 10-year-old children: validation of the Computer-Assisted Image Analysis of the Retina (CAIAR) program. Invest Ophthalmol Vis Sci 2009;50:2004-10.

- Kumari PLS. A Qualitative Approach for Enhancing Fundus Images with Novel CLAHE Methods. Eng Technol Appl Sci Res 2025;15:20102-7.

- Wu Z, Kessler LJ, Chen X, Pan Y, Yang X, Zhao L, Zhao J, Auffarth GU. Retinal Fundus Image Enhancement With Detail Highlighting and Brightness Equalizing Based on Image Decomposition. IET Image Proc 2025;19:e70041.

- Dai J, Qi H, Xiong Y, Li Y, Zhang G, Hu H, Wei Y. Deformable convolutional networks. Proceedings of the IEEE International Conference on Computer Vision 2017:764-73.

- Yin Y, Han Z, Jian M, Wang GG, Chen L, Wang R. AMSUnet: A neural network using atrous multi-scale convolution for medical image segmentation. Comput Biol Med 2023;162:107120.

- Yang Z, Zhu L, Wu Y, Yang Y. Gated channel transformation for visual recognition. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2020:11794-803.

- Zhou T, Fu H, Chen G, Shen J, Shao L. Hi-Net: Hybrid-Fusion Network for Multi-Modal MR Image Synthesis. IEEE Trans Med Imaging 2020;39:2772-81.

- Liskowski P, Krawiec K. Segmenting Retinal Blood Vessels With Deep Neural Networks. IEEE Trans Med Imaging 2016;35:2369-80.

- Yan Z, Yang X, Cheng KT. Joint Segment-Level and Pixel-Wise Losses for Deep Learning Based Retinal Vessel Segmentation. IEEE Trans Biomed Eng 2018;65:1912-23.

- Cheng YL, Ma MN, Zhang LJ, Jin CJ, Ma L, Zhou Y. Retinal blood vessel segmentation based on Densely Connected U-Net. Math Biosci Eng 2020;17:3088-108.

- Khan TM, Alhussein M, Aurangzeb K, Arsalan M, Naqvi SS, Nawaz SJ. Residual connection-based encoder decoder network (RCED-Net) for retinal vessel segmentation. IEEE Access 2020;8:131257-72.

- Li X, Jiang Y, Li M, Yin S. Lightweight attention convolutional neural network for retinal vessel image segmentation. IEEE Trans Industr Inform 2020;17:1958-67.

- Muzammil N, Shah SAA, Shahzad A, Khan MA, Ghoniem RM. Multifilters-based unsupervised method for retinal blood vessel segmentation. Appl Sci (Basel) 2022;12:6393.

- Liang L, Feng J, Peng R, Zeng S. U-shaped retinal vessel segmentation combining multi-label loss and dual attention. J Comput Aided Des Comput Graph 2023;35:75-86.

- Liu Y, Shen J, Yang L, Bian G, Yu H. ResDO-UNet: A deep residual network for accurate retinal vessel segmentation from fundus images. Biomed Signal Process Control 2023;79:104087.

- Tan Y, Yang KF, Zhao SX, Wang J, Liu L, Li YJ. Deep matched filtering for retinal vessel segmentation. Knowledge-Based Syst 2024;283:111185.

- Ding W, Sun Y, Huang J, Ju H, Zhang C, Yang G, Lin CT. RCAR-UNet: Retinal vessel segmentation network algorithm via novel rough attention mechanism. Inf Sci 2024;657:120007.

- Jiang Z, Zhang H, Wang Y, Ko SB. Retinal blood vessel segmentation using fully convolutional network with transfer learning. Comput Med Imaging Graph 2018;68:1-15.

- Li H, Wang Y, Wan C, Shen J, Chen Z, Ye H, Yu Q. MAU-Net: A Retinal Vessels Segmentation Method. Annu Int Conf IEEE Eng Med Biol Soc 2020;2020:1958-61.

- Alom MZ, Yakopcic C, Hasan M, Taha TM, Asari VK. Recurrent residual U-Net for medical image segmentation. J Med Imaging (Bellingham) 2019;6:014006.

- Du XF, Wang JS, Sun WZ. UNet retinal blood vessel segmentation algorithm based on improved pyramid pooling method and attention mechanism. Phys Med Biol 2021;

- Deng X, Ye J. A retinal blood vessel segmentation based on improved D-MNet and pulse-coupled neural network. Biomed Signal Process Control 2022;73:103467.

- Li X, Bala R, Monga V. Robust Deep 3D Blood Vessel Segmentation Using Structural Priors. IEEE Trans Image Process 2022;31:1271-84.

- Li M, Huang K, Zeng C, Chen Q, Zhang W. Visualization and quantization of 3D retinal vessels in OCTA images. Opt Express 2024;32:471-81.

- Böhm EW, Pfeiffer N, Wagner FM, Gericke A. Methods to measure blood flow and vascular reactivity in the retina. Front Med (Lausanne) 2022;9:1069449.

- Kumar KS, Singh NP. Analysis of retinal blood vessel segmentation techniques: a systematic survey. Multimedia Tools Appl 2023;82:7679-733.

- Trovato Battagliola E, Pacella F, Malvasi M, Scalinci SZ, Turchetti P, Pacella E, La Torre G, Arrico L. Risk factors in central retinal vein occlusion: A multi-center case-control study conducted on the Italian population: Demographic, environmental, systemic, and ocular factors that increase the risk for major thrombotic events in the retinal venous system. Eur J Ophthalmol 2022;32:2801-9.