Dual-stage artificial intelligence-powered screening for accurate classification of thyroid nodules: enhancing fine needle aspiration biopsy precision

Introduction

Thyroid cancer is a broad term that encompasses several malignancies, which vary in prevalence. Malignant tumors can invade and destroy nearby tissue and spread to other parts of the body. The main types are papillary thyroid carcinoma (PTC), medullary thyroid carcinoma (MTC) (1), follicular thyroid cancer (FTC), and anaplastic thyroid cancer (ATC). Fine needle aspiration biopsy (FNAB) plays a crucial role in the diagnosis and management of malignant thyroid cancer. As a minimally invasive diagnostic technique, FNAB can clarify the nature of thyroid nodules before surgery, providing a basis for personalized and precise treatment of thyroid diseases (2). Early detection through FNAB is crucial, as it profoundly influences prognosis and treatment strategies. Malignant thyroid cancer detected at an early stage is generally more treatable and associated with higher success rates than cancer identified later, when it may have metastasized beyond the thyroid gland. When diagnosed early, malignant thyroid cancer is often confined to the thyroid, which increases the likelihood of curative surgical intervention and of complete removal of cancer cells before dissemination occurs. In summary, for malignant nodules, FNAB provides critical information that aids in determining the necessary surgical extent (3-5).

Before FNAB, an ultrasound examination is usually performed to determine whether a nodule requires biopsy. Ultrasound examination is crucial in diagnosing and managing malignant thyroid cancer, serving as a non-invasive, readily accessible, and efficient method to assess the necessity of FNAB. Ultrasound is highly sensitive for detecting thyroid nodules, including those that are not palpable during a physical examination. By evaluating the thyroid gland and the surrounding structures (6), it provides guidance for making informed decisions regarding FNAB, enhancing the precision of clinical interventions in thyroid malignancies. This is crucial for early detection of potential malignancies, including malignant thyroid cancer (7). Ultrasound is widely available, relatively low cost, and does not involve ionizing radiation, making it an excellent first-line imaging tool for initial evaluation and ongoing surveillance. It allows for real-time imaging, which is especially useful during ultrasound-guided procedures such as FNAB (8,9). Several established thyroid classification systems have been developed to enhance the accuracy of thyroid nodule diagnosis, including the 2017 American College of Radiology (ACR) Thyroid Imaging Reporting and Data System (TIRADS) (10), the 2015 American Thyroid Association (ATA) management guidelines (11), the European Thyroid Association TIRADS (12), the Kwak-TIRADS (13), and the 2020 Chinese TIRADS (C-TIRADS) (14). These systems have demonstrated reliable diagnostic accuracy, significantly reducing the number of unnecessary biopsies and minimizing the overtreatment of benign thyroid nodules (15-17). While the various thyroid classification systems, such as ACR-TIRADS, the ATA guidelines, EU-TIRADS, Kwak-TIRADS, and C-TIRADS, have significantly improved the diagnostic process for thyroid nodules, they are not without their disadvantages.
The challenges presented by these classification systems stem from their inherent subjectivity and variability, the complexity and steep learning curve required for their effective application, and limitations in identifying aggressive cancers. The variability in standards across these systems leads to inconsistent diagnostic effectiveness, complicating their integration into clinical practice, particularly for clinicians with less experience. This inconsistency not only hinders the universal adoption of these systems but also impacts the accuracy and reliability of diagnosing potentially aggressive cancers, thereby affecting patient management strategies (11). Given these challenges, the reliance on human interpretation and application of these classification systems sometimes leads to inaccurate needle biopsies—either through misclassification of the nodule’s risk level or through technical errors during ultrasound-guided FNAB procedures. This misclassification can result in false negatives, where malignant nodules are not biopsied (missed biopsies), or false positives, leading to unnecessary biopsies of benign nodules (misguided biopsies) (18-20).

To address these limitations, deep learning methods have emerged as a powerful tool in the field of thyroid nodule diagnostics (21,22). Deep learning, a subset of machine learning, involves the use of neural networks with many layers to analyze complex patterns in data. In the context of thyroid nodule assessment, deep learning offers greater efficiency and more objective analysis. Therefore, while traditional thyroid classification systems have significantly advanced the diagnosis and management of thyroid nodules, the integration of deep learning methods holds the promise of overcoming their limitations (21,23,24). By providing a consistent, accurate, and efficient tool for analysis, deep learning has the potential to enhance diagnostic accuracy, reduce subjectivity, and ultimately lead to more effective diagnosis (25,26). This strategy reduces misdiagnosis rates and improves patient outcomes, particularly in the assessment of FNAB in patients with thyroid nodules. Our contributions are summarized as follows: (I) developed a new ultrasound image dataset tailored for four-class classification of thyroid nodules; (II) proposed a dual-stage framework combining segmentation and classification tasks; (III) conducted a comprehensive evaluation of deep learning architectures.

To contextualize our work, we present a summary of recent studies employing deep learning for thyroid nodule diagnosis. As illustrated in Table 1, various deep learning techniques have been applied to classification and segmentation tasks in the management of thyroid nodules and carcinoma, each demonstrating varied performance across different datasets. Because these methods were tested under varying conditions and datasets, it is also crucial to identify the most effective models for the two-stage task. To achieve this, we implement transfer learning using models pretrained on the ImageNet-1K dataset. Although ImageNet-1K lacks direct relevance to medical imaging tasks, its diverse range of images allows the model to learn robust and generalized features that can be beneficial for various applications. Additionally, we enhance model performance by applying data augmentation techniques, such as random rotation and random cropping, during training.

Table 1

| References | Year | Task | Method | No. of images | Acc or mIoU (%) |
|---|---|---|---|---|---|
| Peng et al. (27) | 2021 | Classification | ResNet, ResNeXt, and DenseNet | 18,049 | 92.2 |
| Göreke et al. (28) | 2023 | Classification | Multi-layer RNN | 99 | 99.9 |
| Liu et al. (29) | 2023 | Classification | ResNet | 2,096 | 73.0 |
| Liu et al. (30) | 2023 | Segmentation | DeepLabV3+ | 2,602 | 85.3 |
| Yang et al. (31) | 2023 | Classification | CNN | 1,278 | 61.8 |
| Chen et al. (32) | 2024 | Segmentation | CNN, MCTS and CLGCN | 5,834 | 86.6 |
| Wang et al. (33) | 2022 | Classification | ResNet50 and XGBoost | 2,992 | 76.8 |
| Sun et al. (34) | 2022 | Segmentation | DeepLabV3+ | 3,786 | 95.8 |
| Zhao et al. (35) | 2022 | Classification | LoGo-Net | 21,597 | 89.6 |
| Deng et al. (36) | 2022 | Classification | MTL network | 3,907 | 93.6 |
| Wang et al. (37) | 2024 | Classification | Multi-view SSL | 9,669 | 86.3 |
| Gökmen Inan et al. (38) | 2024 | Classification | Hybrid ResNet | 880 | 96.6 |

Acc measures the percentage of correctly classified samples, while mIoU evaluates the overlap between predicted and ground truth regions in segmentation tasks. Acc, accuracy; CLGCN, Cross-Layer Graph Convolutional Network; CNN, Convolutional Neural Network; MCTS, Multi-Channel Transformer System; mIoU, mean intersection over union; MTL, multitask learning; RNN, Recurrent Neural Networks; SSL, self-supervised learning.

While many studies focus on binary classification of thyroid nodules (e.g., benign vs. malignant), recent advancements have explored multitask classification to provide more detailed diagnostic information. For example, Deng et al. proposed a multitask learning (MTL) network that outputs multiple results for a single ultrasound image, including ACR TIRADS descriptors, scores, levels, and benignity/malignancy predictions (36). Kang et al. highlighted the challenge of task inconsistency in MTL for thyroid nodule segmentation and classification, proposing intra- and inter-task consistent learning to improve performance across tasks (39). Their work demonstrates the potential of well-designed multitask frameworks to enhance diagnostic accuracy. Additionally, Vadhiraj et al. integrated TIRADS features into a computer-aided diagnosis system using multiple-instance learning (MIL), achieving high accuracy with Support Vector Machine (SVM) for benign-malignant classification (40). Their study underscores the value of combining TIRADS features with computational models to support radiologists’ decision-making.

However, their approach does not directly address the multiclass classification of specific pathological types such as PTC, MTC, nodular goiter with adenomatous hyperplasia (NGAH), and chronic lymphocytic thyroiditis (CLT). These studies collectively illustrate the evolution of thyroid nodule diagnosis, from binary to multiclass classification, and the importance of addressing task-specific challenges in computational frameworks. Our work builds on these advancements by focusing on multiclass classification of specific pathological types, aiming to provide more precise diagnostic tools.

Our approach offers novel features that distinguish it from traditional thyroid nodule screening methods. First, it employs a two-stage framework. In the first stage, a segmentation model is used to precisely delineate the nodule region. This segmentation model is versatile and can be adapted to other anatomical regions, allowing for potential transfer learning to different segmentation tasks. Second, in the classification stage, our model addresses a four-class classification problem, distinguishing between different types of thyroid nodules. This represents an advancement over most existing studies, which typically focus on binary classification (benign vs. malignant). The four-class approach provides a more refined diagnosis, leading to improved treatment planning and patient outcomes. Additionally, we compared the performance of traditional neural networks with transformer-based models. Our results show that transformer models significantly outperform traditional models in this new classification task, particularly in handling the complexity and variability of thyroid nodules. After evaluating the performance of seven different segmentation models to identify the most effective one for automated segmentation, we then assessed the performance of classification models on the segmented dataset. Our study addresses these limitations by proposing a dual-stage framework that not only performs segmentation but also extends classification to four distinct pathological types (PTC, MTC, NGAH, CLT). This approach provides more detailed diagnostic information, which can guide clinicians in making more informed decisions regarding FNAB and treatment planning. We present this article in accordance with the CLEAR reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-24-2336/rc).

Methods

This study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments. The study was approved by the Medical Ethics Committee of Peking University Shenzhen Hospital (No. [2024] 084), and the requirement for individual consent was waived for this retrospective analysis.

Patients

From February 2018 to January 2024, 392 patients with thyroid disease confirmed by surgery and biopsy at Peking University Shenzhen Hospital were enrolled in this study.

The inclusion criteria were:

- Presence of suspicious nodules on ultrasound imaging;

- Thyroid pathology results from fine-needle aspiration;

- Age between 20 and 60 years.

The exclusion criteria were:

- Had not undergone a preoperative thyroid sonographic examination;

- Had US images of thyroid nodules that did not meet the requirements for analysis, specifically nodules classified as TIRADS 1 or 2, which could be clearly identified as benign by a doctor without the need for AI assistance;

- Had incomplete clinical information; and/or

- Had pathological results that could not provide a definitive benign or malignant diagnosis.

The final dataset comprised a total of 392 patients, categorized as follows: 67 MTC patients with 627 ultrasound lesion images, 127 PTC patients with 655 images, 133 NGAH patients with 882 images, and 65 CLT patients with 338 images. The dataset was partitioned into training, validation, and test sets in a 70:15:15 ratio, as detailed in Table 2. The training and validation sets were derived from the primary dataset, while the test set was compiled from cases provided by different doctors to enhance the model’s generalizability.

Table 2

| Class | Cases | Train cases | Validation cases | Test cases | Train images | Validation images | Test images |
|---|---|---|---|---|---|---|---|
| MTC | 67 | 47 | 10 | 10 | 440 | 93 | 94 |
| PTC | 127 | 89 | 19 | 19 | 460 | 97 | 98 |
| NGAH | 133 | 93 | 20 | 20 | 618 | 136 | 128 |
| CLT | 65 | 46 | 10 | 9 | 236 | 52 | 50 |
| Total | 392 | 275 | 59 | 58 | 1,754 | 378 | 370 |

CLT, chronic lymphocytic thyroiditis; MTC, medullary thyroid carcinoma; NGAH, nodular goiter with adenomatous hyperplasia; PTC, papillary thyroid carcinoma.
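A 70:15:15 partition at the patient level can be sketched as follows. This is only an illustration under stated assumptions: the patient IDs are hypothetical placeholders generated from the per-class case counts in Table 2, and the shuffle is random, whereas the test set in this study was compiled from cases provided by different doctors. The point of the sketch is that splitting by patient, within each class, keeps all images of one patient in a single subset.

```python
import random
from collections import defaultdict


def split_patients(patient_labels, ratios=(0.70, 0.15, 0.15), seed=0):
    """Split patient IDs into train/val/test within each class.

    Splitting by patient (not by image) keeps every image of one
    patient in a single subset.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for pid, label in patient_labels.items():
        by_class[label].append(pid)

    splits = {"train": [], "val": [], "test": []}
    for label in sorted(by_class):
        pids = sorted(by_class[label])
        rng.shuffle(pids)
        n_train = round(ratios[0] * len(pids))
        n_val = round(ratios[1] * len(pids))
        splits["train"] += pids[:n_train]
        splits["val"] += pids[n_train:n_train + n_val]
        splits["test"] += pids[n_train + n_val:]  # remainder goes to test
    return splits


# Hypothetical patient IDs built from the case counts reported in Table 2.
counts = {"MTC": 67, "PTC": 127, "NGAH": 133, "CLT": 65}
patients = {f"{c}_{i:03d}": c for c, n in counts.items() for i in range(n)}
splits = split_patients(patients)
print({name: len(ids) for name, ids in splits.items()})
```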

As shown in Table 2, for MTC and PTC, biopsies were accurately targeted and confirmed malignancy. In contrast, biopsies for NGAH and CLT proved unnecessary (misguided biopsies), as these conditions are benign. The ultrasound images from these misdiagnosed cases are difficult even for experienced physicians to identify accurately, underscoring the complexity and subtlety of the features that distinguish various thyroid conditions. Conversely, the cases in which biopsies were correctly performed were intentionally selected to include instances that would be challenging for intern doctors to diagnose. The dataset therefore provides a rigorous learning resource for medical deep learning models. By training on this dataset, models can improve their ability to discern between benign and malignant cases, supporting more accurate diagnosis and appropriate treatment planning.

Manually annotated dataset

The dataset preprocessing is divided into two parts. For the segmentation model, annotations were performed using Label Studio. One doctor labeled the images, while another reviewed the annotations to ensure accuracy. In the first stage, segmentation dataset preparation, 996 images were divided into training, validation, and test sets in an approximately 3:1:1 ratio. Specifically, 613 ultrasound images were allocated to the training set, 213 images to the validation set, and 170 images to the test set.

The second part involves the creation of the classification model dataset. This dataset was generated through an automated segmentation process using the trained segmentation models. The resulting segmentations were then reviewed and corrected by human experts to ensure quality. This refined, automatically segmented dataset was then partitioned into training, validation, and test sets in a 70:15:15 ratio. The training set comprises 1,754 images from 275 patients, while the validation set includes 378 images from 59 patients. The test set, an independent dataset provided by different doctors to ensure generalizability and robustness, consists of 370 images from 58 patients. Therefore, the classification dataset is derived from automatically segmented images, with manual refinement, and is distinct from the manually annotated dataset used for segmentation model training.

Overall design

Several challenges motivated our dual-stage pipeline. Chief among these is the considerable size of our ultrasound images, which measure 1,260 by 910 pixels, relative to the small tumor regions they contain. This discrepancy is further complicated by the inherent variability in tumor shape, density, and the lighting conditions under which the ultrasound images are captured. Applying deep learning networks directly to such large, entire images for classification is computationally demanding and makes high accuracy difficult to achieve.

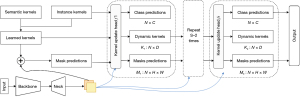

As shown in Figure 1, in response to these challenges, we designed and implemented a dual-stage diagnostic pipeline specifically tailored for ultrasound image segmentation and classification. This approach divides the task into two distinct processes. First, the image is segmented to precisely identify the region of interest (ROI), which reduces irrelevant data input to the classifier and enhances focus on the area of concern. Following segmentation, classification algorithms are applied to these regions to determine the presence and type of tumors. While object detection could also isolate the ROI, segmentation was chosen for its superior ability to capture fine-grained anatomical details, which is crucial in medical imaging tasks like tumor detection. This two-stage strategy not only reduces computational burden but also improves the precision and accuracy of the classification task, ensuring that detailed boundaries and intricate features are preserved for better diagnostic outcomes.
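The hand-off between the two stages can be sketched as a crop of the image around the predicted mask. The snippet below is a minimal illustration, not the pipeline's actual code: the function name `crop_roi`, the 16-pixel margin, and the assumption that 1,260 by 910 means width by height are all hypothetical choices made for the example.

```python
import numpy as np


def crop_roi(image, mask, margin=16):
    """Crop the bounding box of a binary mask, padded by `margin` pixels."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return image  # no nodule predicted: fall back to the full frame
    h, w = mask.shape
    y0 = max(int(ys.min()) - margin, 0)
    y1 = min(int(ys.max()) + 1 + margin, h)
    x0 = max(int(xs.min()) - margin, 0)
    x1 = min(int(xs.max()) + 1 + margin, w)
    return image[y0:y1, x0:x1]


# Synthetic stand-ins for an ultrasound frame (H x W x C) and a predicted mask.
image = np.zeros((910, 1260, 3), dtype=np.uint8)
mask = np.zeros((910, 1260), dtype=bool)
mask[400:500, 600:760] = True  # a 100 x 160 pixel "nodule"

roi = crop_roi(image, mask)
print(roi.shape)  # (132, 192, 3): the nodule box plus a 16-pixel margin per side
```

The cropped region would then be resized to the classifier's input size before the second stage.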

Segmentation models development

In this phase, we evaluated the performance of seven distinct semantic segmentation models, incorporating techniques such as transfer learning and test-time augmentation (TTA). TTA involves applying data augmentation techniques during inference to improve model robustness. For example, we use TTA strategies like random flipping and random cropping, where the input images are augmented in various ways, and predictions from the augmented versions are averaged or combined to enhance the overall accuracy and reliability of the model. These techniques not only enrich our dataset through the introduction of variability but also mimic real-world scenarios where images may not be perfectly aligned or uniformly cropped. By leveraging transfer learning (23), we utilize pre-trained models that have been developed on large, diverse datasets, allowing us to fine-tune these models with our specific dataset.
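As a rough illustration of the TTA idea, the sketch below averages predictions over the original image and its two flipped versions, undoing each flip before averaging so that the probability maps stay aligned. It assumes a hypothetical `predict_fn` that maps an H x W image to an H x W probability map; it covers deterministic flips only and leaves out the cropping-based augmentation mentioned above.

```python
import numpy as np


def predict_with_flip_tta(predict_fn, image):
    """Average per-pixel probabilities over the original and flipped inputs."""
    probs = predict_fn(image)
    # Flip the input, predict, then flip the prediction back into place.
    probs_h = predict_fn(image[:, ::-1])[:, ::-1]  # horizontal flip
    probs_v = predict_fn(image[::-1, :])[::-1, :]  # vertical flip
    return (probs + probs_h + probs_v) / 3.0


def toy_model(image):
    """Hypothetical stand-in for a segmentation network (pointwise scaling)."""
    return image / 255.0


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(8, 8)).astype(float)
tta_probs = predict_with_flip_tta(toy_model, image)
```

For a model that is already flip-equivariant, such as the pointwise toy model here, the TTA output equals the plain prediction; a real network is not exactly equivariant, which is why averaging can help.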

To identify the most suitable segmentation model for our study, we evaluated seven state-of-the-art models representing different architectural paradigms, including Convolutional Neural Network (CNN)-based, transformer-based, and hybrid architectures. These models were selected to cover a broad spectrum of advancements in semantic segmentation:

- UNet (41): a classic CNN-based architecture known for its U-shaped encoder-decoder structure, widely used for biomedical image segmentation due to its ability to capture fine-grained details.

- DeepLabV3+ (42): an advanced CNN-based model that employs atrous convolutions and a spatial pyramid pooling module to capture multi-scale contextual information.

- PSPNet (43): a CNN-based model that utilizes pyramid pooling to aggregate global context information, enhancing segmentation accuracy.

- FASTSCNN (44): a lightweight and efficient CNN-based model designed for real-time segmentation tasks, balancing speed and performance.

- Segformer (45): a transformer-based model that leverages a hierarchical transformer encoder and a lightweight decoder, excelling in capturing long-range dependencies.

- Mask2Former (46): a versatile transformer-based model capable of handling both semantic and instance segmentation tasks through a unified architecture.

- K-Net (47): a hybrid architecture that combines convolutional kernels with dynamic updates based on pixel group content, adopting a bipartite matching strategy for instance kernel training.

Our selection criteria included segmentation accuracy and adaptability to medical imaging data. After extensive evaluation, K-Net demonstrated superior performance in our task, particularly in handling the complex boundaries and fine details of thyroid nodule images. The specific architecture of K-Net is shown in Figure 2. K-Net employs a series of convolutional kernels to determine whether each pixel belongs to an instance category or a semantic category. To enhance the discriminability of the convolutional kernels, the fixed kernels are updated through the content within segmented pixel groups. A bipartite matching strategy is adopted to train the instance kernels.

The general workflow of the model is as follows: a set of learnable kernels first convolve with feature maps to predict masks. Then, the kernel update head takes the mask predictions, learned kernels, and feature maps as inputs, and produces class predictions, group-aware (dynamic) kernels, and mask predictions. The generated mask predictions, dynamic kernels, and feature maps are sent to the next kernel update head. This process is carried out iteratively to gradually refine the kernels and mask predictions. Mathematically, the workflow is given by the following equations (47):

$$F^{K} = \sum_{u}^{H} \sum_{v}^{W} M_{i-1}(u,v) \cdot F(u,v), \quad F^{K} \in \mathbb{R}^{B \times N \times C}$$

This formula aggregates the features $F$ over the height ($H$) and width ($W$) dimensions of an image, where $M_{i-1}(u,v)$ is the mask at pixel location $(u,v)$. The subscript $i-1$ indicates that the mask comes from the previous stage of the model, and $F(u,v)$ is the feature at spatial location $(u,v)$. The result $F^{K}$ represents the new feature for each group and lies in the space $\mathbb{R}^{B \times N \times C}$, where $B$ is the batch size, $N$ is the number of kernels (groups), and $C$ is the number of channels.

$$F^{G} = \phi_1(F^{K}) \otimes \phi_2(K_{i-1}), \quad F^{G} \in \mathbb{R}^{B \times N \times C}$$

Here, the updated feature $F^{G}$ is computed by element-wise multiplication of a transformed feature map $\phi_1(F^{K})$ with a transformed kernel $\phi_2(K_{i-1})$. The transformation functions $\phi_1$ and $\phi_2$ could involve operations such as convolutions or other non-linear mappings to enhance feature representation.

$$G^{K} = \sigma(\psi_1(F^{G})), \quad G^{F} = \sigma(\psi_2(F^{G}))$$

$$\tilde{K} = G^{F} \otimes \psi_3(F^{K}) + G^{K} \otimes \psi_4(K_{i-1})$$

These formulas present the process of updating the kernels $K_{i-1}$. $\sigma$ is typically a non-linear activation function such as a sigmoid or softmax, which is applied to the transformed features [$\psi_1(F^{G})$ and $\psi_2(F^{G})$]. The final updated kernel $\tilde{K}$ is calculated by the element-wise addition of two products: $G^{F}$ (the class-agnostic feature gate) combined with $\psi_3(F^{K})$ (a transformation of the aggregated features), and $G^{K}$ (the class-specific feature gate) combined with $\psi_4(K_{i-1})$ (a transformation of the previous kernels).
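The three steps of the kernel update (group feature assembling, adaptive feature update, and gated combination) can be sketched in NumPy as below. This is a simplified illustration under several assumptions rather than the K-Net implementation: the batch dimension is dropped, the learned transformations are replaced by random matrices stored under hypothetical names (`phi1`, `phi2`, `psi1` to `psi4`), and the kernel interaction and normalization layers of the real update head are omitted.

```python
import numpy as np


def sigmoid(x):
    # Clipping keeps exp() in a safe numeric range for this toy example.
    return 1.0 / (1.0 + np.exp(-np.clip(x, -50.0, 50.0)))


def kernel_update_step(feats, masks_prev, kernels_prev, weights):
    """One simplified kernel-update step.

    feats:        (C, H, W) feature map
    masks_prev:   (N, H, W) soft masks from the previous stage
    kernels_prev: (N, C) kernels from the previous stage
    weights:      dict of (C, C) matrices standing in for learned transforms
    """
    # 1) Group feature assembling: mask-weighted sum of features per kernel.
    f_k = np.einsum("nhw,chw->nc", masks_prev, feats)
    # 2) Adaptive feature update: element-wise product of transformed inputs.
    f_g = (f_k @ weights["phi1"]) * (kernels_prev @ weights["phi2"])
    # 3) Gates, then gated combination of group features and old kernels.
    g_k = sigmoid(f_g @ weights["psi1"])
    g_f = sigmoid(f_g @ weights["psi2"])
    kernels_new = g_f * (f_k @ weights["psi3"]) + g_k * (kernels_prev @ weights["psi4"])
    # Updated kernels act as 1x1 convolutions on the feature map to give new masks.
    masks_new = sigmoid(np.einsum("nc,chw->nhw", kernels_new, feats))
    return kernels_new, masks_new


rng = np.random.default_rng(0)
C, H, W, N = 8, 16, 16, 2  # toy sizes, chosen only for the example
feats = rng.normal(size=(C, H, W))
kernels = rng.normal(size=(N, C))
masks = sigmoid(np.einsum("nc,chw->nhw", kernels, feats))
names = ("phi1", "phi2", "psi1", "psi2", "psi3", "psi4")
weights = {name: rng.normal(scale=0.1, size=(C, C)) for name in names}

for _ in range(3):  # iterative refinement over three update heads
    kernels, masks = kernel_update_step(feats, masks, kernels, weights)
```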

We used a batch size of 32 for training. This size was chosen as it strikes a balance between computational efficiency and model stability. Given the small size of the dataset, using a larger batch size could risk underfitting, as the model might fail to capture enough gradient variance across batches. Conversely, a smaller batch size may introduce too much noise in gradient estimation, leading to unstable training. To mitigate the risk of overfitting due to the limited dataset size, we employed several strategies.

First, in our training pipeline, we leveraged data augmentation strategies to enhance dataset variability and improve model robustness. Specifically, the pipeline begins by loading images and their corresponding segmentation masks. We then applied random flips with a probability of 0.5 to introduce horizontal and vertical variability. To further diversify the training data, images and labels were randomly resized within a scale range of (0.5, 2.0) and cropped to a fixed size (e.g., 512×512), ensuring consistent input dimensions while increasing spatial diversity. The images were normalized using predefined mean and standard deviation values, and padded to the desired size to maintain uniformity. Finally, the data was converted into PyTorch tensors and prepared for model input. These augmentation techniques effectively expanded the dataset and improved the model’s generalization capabilities during training. Secondly, we used early stopping to halt training when the validation loss stopped improving, preventing the model from fitting to noise in the training data. Lastly, dropout layers were incorporated to further reduce overfitting by randomly dropping neurons during training, which forces the model to generalize better. The implementation of the model is based on MMSegmentation version 1.2.2.
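In MMSegmentation 1.x, an augmentation pipeline of this kind is usually written as a list of transform dictionaries in the config file. The fragment below is a sketch of what such a config might look like, not the configuration used in this study: the base `scale`, the `cat_max_ratio` value, and the ImageNet mean and standard deviation are assumptions, and `RandomFlip` is shown with its default horizontal direction only. In MMSegmentation 1.x, normalization and padding are handled by the data preprocessor rather than by pipeline transforms.

```python
# Sketch of an MMSegmentation 1.x training pipeline (values are assumptions).
crop_size = (512, 512)

train_pipeline = [
    dict(type="LoadImageFromFile"),   # load the ultrasound image
    dict(type="LoadAnnotations"),     # load the segmentation mask
    dict(type="RandomFlip", prob=0.5),
    dict(
        type="RandomResize",
        scale=(2048, 512),            # assumed base scale
        ratio_range=(0.5, 2.0),       # random rescaling range from the text
        keep_ratio=True,
    ),
    dict(type="RandomCrop", crop_size=crop_size, cat_max_ratio=0.75),
    dict(type="PackSegInputs"),       # pack image and mask into model inputs
]

data_preprocessor = dict(
    type="SegDataPreProcessor",
    mean=[123.675, 116.28, 103.53],   # assumed ImageNet statistics
    std=[58.395, 57.12, 57.375],
    bgr_to_rgb=True,
    pad_val=0,
    seg_pad_val=255,
    size=crop_size,                   # pad each sample to the crop size
)
```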

Development of classification models

Building upon the foundations laid by our previously developed segmentation models, we automatically created a classification dataset that specifically isolates pictures of the ROI, effectively omitting extraneous regions. This targeted approach significantly enhances the accuracy and efficiency of subsequent classification tasks, ensuring that our models focus solely on the most pertinent information.

Upon assembling this refined dataset, we fine-tuned and evaluated six advanced deep learning architectures for classification:

- VGG-19: a classic deep CNN known for its simplicity and effectiveness in extracting hierarchical features, serving as a strong baseline for comparison.

- MobileNet: a lightweight CNN designed for efficiency, utilizing depth-wise separable convolutions to reduce computational cost without significant performance loss.

- ResNet: a deep residual network that addresses vanishing gradients through skip connections, enabling the training of very deep architectures for improved feature learning.

- Vision Transformer (ViT): a pure transformer-based model that processes images as sequences of patches, excelling in capturing long-range dependencies.

- Swin Transformer: a hierarchical ViT that leverages shifted windows for efficient global-local feature extraction, suitable for capturing fine-grained details in thyroid nodules.

- MobileViT: a lightweight hybrid architecture combining the strengths of CNNs and transformers, optimized for mobile and edge devices while maintaining high accuracy.

To improve our models’ performance from the outset, we leverage pretrained weights from the ImageNet-1k dataset, a strategy that provides a solid foundation of visual knowledge and accelerates the learning process. This multifaceted approach aims to ascertain the most effective architecture for our classification objectives, prioritizing both precision and computational efficiency.

Evaluation metrics

To objectively evaluate the segmentation performance, we employ the following metrics:

- Average accuracy (aAcc): measures the proportion of correctly classified pixels over all pixels, regardless of class;

- Mean intersection over union (mIoU): computes the average ratio of the intersection to the union of predicted and ground truth regions across all classes;

- Mean accuracy (mAcc): computes the pixel-level accuracy for each class separately and averages the result across classes;

- Mean Dice coefficient (mDice): represents the average overlap between predicted and ground truth regions;

- Mean F1-score (mFscore): the harmonic mean of precision and recall, evaluated at the pixel level;

- Mean precision (mPrecision): measures the proportion of true positives among all predicted positives at the pixel level;

- Mean recall (mRecall): measures the proportion of true positives among all actual positives at the pixel level;

- Hausdorff Distance 95 (HD95): computes the 95th percentile of the distances between predicted and ground truth boundaries, a variant of the maximum Hausdorff Distance that is less sensitive to outliers.

These metrics collectively provide a comprehensive assessment of segmentation performance, balancing region overlap, boundary alignment, and classification accuracy.
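The region-overlap metrics listed above can all be derived from per-class pixel counts of true positives, false positives, and false negatives. The following sketch computes them for a pair of label maps; the function name `seg_metrics` and the toy 4 x 4 masks are illustrative assumptions, and HD95 is left out because it requires boundary distance computations.

```python
import numpy as np


def seg_metrics(pred, gt, num_classes=2):
    """Pixel-level metrics from integer label maps (0 = background, 1 = nodule)."""
    pred = np.asarray(pred)
    gt = np.asarray(gt)
    iou, dice, precision, recall = [], [], [], []
    for c in range(num_classes):
        tp = int(np.sum((pred == c) & (gt == c)))
        fp = int(np.sum((pred == c) & (gt != c)))
        fn = int(np.sum((pred != c) & (gt == c)))
        iou.append(tp / max(tp + fp + fn, 1))
        dice.append(2 * tp / max(2 * tp + fp + fn, 1))
        precision.append(tp / max(tp + fp, 1))
        recall.append(tp / max(tp + fn, 1))  # per-class pixel accuracy
    return {
        "aAcc": float(np.mean(pred == gt)),  # accuracy over all pixels
        "mAcc": float(np.mean(recall)),      # per-class accuracy, averaged
        "mIoU": float(np.mean(iou)),
        "mDice": float(np.mean(dice)),
        "mPrecision": float(np.mean(precision)),
        "mRecall": float(np.mean(recall)),
        "per_class_IoU": iou,
        "per_class_Dice": dice,
    }


# Toy example: a 2 x 2 nodule, predicted one pixel too far to the right.
gt = np.zeros((4, 4), dtype=int)
gt[1:3, 1:3] = 1
pred = np.zeros((4, 4), dtype=int)
pred[1:3, 2:4] = 1

metrics = seg_metrics(pred, gt)
print(metrics["aAcc"], metrics["per_class_IoU"][1], metrics["per_class_Dice"][1])
```

In this toy case 12 of 16 pixels agree (aAcc of 0.75), while the nodule class has an IoU of 1/3 and a Dice of 0.5, which shows how overall pixel accuracy can look high even when the overlap on a small region is poor.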

To evaluate the classification performance, we compare different models on the validation dataset using the following metrics:

- mAcc: measures the average proportion of correctly classified samples across all classes, specifically for sample-level classification tasks;

- mPrecision: computes the average proportion of true positives among all predicted positives at the sample level;

- mRecall: calculates the average proportion of true positives among all actual positives at the sample level;

- mFscore: represents the harmonic mean of precision and recall, evaluated at the sample level.

These metrics collectively provide a comprehensive assessment of classification performance, focusing on sample-level accuracy and prediction quality.
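For the four-class task, these sample-level metrics can be computed per class and then averaged (macro averaging). The sketch below uses plain Python and a small set of made-up labels; the function name `macro_metrics` and the toy predictions are assumptions for illustration and are not results from this study.

```python
def macro_metrics(y_true, y_pred, classes):
    """Macro-averaged precision, recall, and F-score over the given classes."""
    precisions, recalls, fscores = [], [], []
    pairs = list(zip(y_true, y_pred))
    for c in classes:
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        fscores.append(f1)
    n = len(classes)
    return {
        "accuracy": sum(1 for t, p in pairs if t == p) / len(pairs),
        "mPrecision": sum(precisions) / n,
        "mRecall": sum(recalls) / n,
        "mFscore": sum(fscores) / n,
    }


classes = ["PTC", "MTC", "NGAH", "CLT"]
# Made-up labels, two samples per class, used only to exercise the function.
y_true = ["PTC", "PTC", "MTC", "MTC", "NGAH", "NGAH", "CLT", "CLT"]
y_pred = ["PTC", "MTC", "MTC", "MTC", "NGAH", "NGAH", "CLT", "NGAH"]

scores = macro_metrics(y_true, y_pred, classes)
print(scores)
```

Macro averaging weights each class equally, which matters here because the classes are imbalanced (for example, CLT has far fewer images than NGAH).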

Training details

The hyper-parameters used for training both segmentation and classification models are summarized in Table 3. For segmentation models, the input image shape was set to 768×768×3 to preserve fine-grained details, while classification models used a smaller input size of 224×224×3 to optimize computational efficiency. Both tasks employed a batch size of 64 and the Adam optimizer with a learning rate of 0.001, ensuring consistent training dynamics. The ReLU activation function was used across all models due to its effectiveness in avoiding vanishing gradients. Detailed model parameters and configurations are available in the project’s GitHub repository: https://github.com/ychy7001/thyroid_dual-stage.git.

Table 3

| Name | Segmentation models | Classification models |
|---|---|---|
| Image shape | 768×768×3 | 224×224×3 |
| Batch size | 64 | 64 |
| Iterations/epochs | 20,000 or 40,000 iterations | 100 epochs |
| Activation function | ReLU | ReLU |
| Learning rate | 0.001 | 0.001 |
| Optimizer | Adam | Adam |
| CPU threads | 8 | 8 |
| Loss function | Dice Loss | Cross-Entropy Loss |
| Data augmentation | Yes | Yes |

CPU, central processing unit.

Segmentation models were trained for 20,000 or 40,000 iterations with Dice Loss as the objective function, which is well-suited for pixel-wise tasks. Classification models, on the other hand, were trained for 100 epochs using Cross-Entropy Loss, tailored for multi-class classification. Data augmentation techniques, such as flipping and rotation, were applied to both tasks to enhance model robustness and generalization. All experiments were conducted using 8 central processing unit (CPU) threads to ensure efficient resource utilization.
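To make the two objective functions concrete, the sketch below gives minimal NumPy versions of a soft Dice loss and a multi-class cross-entropy loss. These are textbook formulations written for illustration; the loss classes in the training framework may differ in details such as smoothing terms, per-class averaging, or class weighting.

```python
import numpy as np


def soft_dice_loss(probs, target, eps=1e-6):
    """1 - Dice overlap between predicted probabilities and a binary mask."""
    probs = np.asarray(probs, dtype=float)
    target = np.asarray(target, dtype=float)
    intersection = np.sum(probs * target)
    denominator = np.sum(probs) + np.sum(target)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)


def cross_entropy_loss(logits, label):
    """Negative log-probability of the true class for one sample."""
    logits = np.asarray(logits, dtype=float)
    shifted = logits - logits.max()  # subtract max for numerical stability
    log_probs = shifted - np.log(np.sum(np.exp(shifted)))
    return float(-log_probs[label])


mask = np.zeros((4, 4))
mask[1:3, 1:3] = 1.0

perfect = soft_dice_loss(mask, mask)         # close to 0: full overlap
disjoint = soft_dice_loss(1.0 - mask, mask)  # close to 1: no overlap
uniform = cross_entropy_loss([0.0, 0.0, 0.0, 0.0], label=2)  # ln(4) for 4 classes
```

Dice loss depends on the overlap relative to the size of the region, so it is less dominated by the large background area than a per-pixel loss would be, which is one common reason it is used when the target occupies a small part of the image.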

Results

Performance of segmentation models

In this section, we present a comprehensive evaluation of the seven segmentation models (UNet, DeepLabV3+, PSPNet, FASTSCNN, Segformer, Mask2Former, and K-Net) using both qualitative and quantitative approaches.

Figure 3 qualitatively compares the segmentation results of the seven models. K-Net, Mask2Former, and Segformer, all based on transformer or hybrid architectures, demonstrate superior performance, consistently producing accurate and smooth segmentations that closely match the ground truth, even in cases with irregular shapes or challenging artifacts. The CNN-based models (PSPNet, FASTSCNN, DeepLabV3+, and UNet) perform comparably to one another but are more sensitive to nodule boundaries, occasionally producing deviations or incomplete segmentations. These visual comparisons highlight the strengths of transformer-based architectures in medical image segmentation tasks.

To objectively evaluate the segmentation performance, we compare the seven models on the test dataset using multiple metrics. The results are summarized in Table 4. K-Net achieves the best overall performance, with the highest aAcc (99.47%), mIoU (87.06%), mDice (92.76%), mFscore (92.76%), and mRecall (92.56%), and the lowest HD95 (11.01), indicating superior boundary alignment. Its mPrecision (92.97%) is second only to that of Segformer (93.67%), and its mAcc (92.56%) is marginally below that of Mask2Former (92.81%). Mask2Former and Segformer also exhibit strong performance, with mIoU scores of 85.53% and 84.32%, respectively, and mDice scores exceeding 90%. These results align with the qualitative observations in Figure 3, confirming the robustness of transformer-based and hybrid architectures in handling thyroid nodule segmentation.

Table 4

| Architecture | aAcc (%) | mIoU (%) | mAcc (%) | mDice (%) | mFscore (%) | mPrecision (%) | mRecall (%) | HD95 |
|---|---|---|---|---|---|---|---|---|
| K-Net | 99.47 | 87.06 | 92.56 | 92.76 | 92.76 | 92.97 | 92.56 | 11.01 |
| DeepLabV3+ | 98.95 | 64.94 | 72.51 | 74.63 | 74.63 | 79.18 | 72.61 | 18.93 |
| FASTSCNN | 98.61 | 75.57 | 82.44 | 84.41 | 84.41 | 90.91 | 82.44 | 16.06 |
| Mask2Former | 99.38 | 85.53 | 92.81 | 90.93 | 90.93 | 90.56 | 92.20 | 13.02 |
| PSPNet | 98.75 | 79.37 | 84.22 | 83.21 | 83.21 | 85.68 | 84.53 | 14.05 |
| Segformer | 98.30 | 84.32 | 89.18 | 91.18 | 91.18 | 93.67 | 89.18 | 13.21 |
| UNet | 98.78 | 74.18 | 78.74 | 80.71 | 80.71 | 84.21 | 80.74 | 16.58 |

aAcc, average accuracy; HD95, Hausdorff Distance 95; mAcc, mean accuracy; mDice, mean Dice coefficient; mFscore, mean F-score; mIoU, mean intersection over union; mPrecision, mean precision; mRecall, mean recall.
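To make the overlap metrics in Table 4 concrete, the sketch below computes per-class IoU and Dice from flat label maps and averages them over classes. It assumes a two-class setting (0 = background, 1 = nodule) and that the "mean" is an unweighted average over classes; the function names are hypothetical and the evaluation code used in this study may differ.

```python
def iou_and_dice(pred, target, cls):
    """IoU and Dice for one class, given flat lists of integer labels."""
    tp = sum(1 for p, t in zip(pred, target) if p == cls and t == cls)
    fp = sum(1 for p, t in zip(pred, target) if p == cls and t != cls)
    fn = sum(1 for p, t in zip(pred, target) if p != cls and t == cls)
    union = tp + fp + fn
    if union == 0:
        # Class absent from both prediction and ground truth: treat as a perfect match.
        return 1.0, 1.0
    iou = tp / union
    dice = 2 * tp / (2 * tp + fp + fn)
    return iou, dice

def mean_iou_and_dice(pred, target, classes=(0, 1)):
    """Unweighted mean of per-class IoU and Dice (assumed definition of mIoU and mDice)."""
    scores = [iou_and_dice(pred, target, c) for c in classes]
    miou = sum(s[0] for s in scores) / len(scores)
    mdice = sum(s[1] for s in scores) / len(scores)
    return miou, mdice

# Toy 6-pixel example (invented values, not study data).
pred = [0, 0, 1, 1, 1, 0]
target = [0, 1, 1, 1, 0, 0]
miou, mdice = mean_iou_and_dice(pred, target)
```

For a single class, the Dice coefficient is identical to the F1 score (2TP / (2TP + FP + FN)), which is consistent with the mDice and mFscore columns of Table 4 reporting the same values.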

Among the CNN-based models, PSPNet achieves the highest mIoU (79.37%) with an mDice of 83.21%, FASTSCNN attains a slightly higher mDice (84.41%) with an mIoU of 75.57%, and UNet shows moderate performance (mIoU of 74.18% and mDice of 80.71%). DeepLabV3+ underperforms compared to the other models, particularly in mIoU (64.94%) and HD95 (18.93), highlighting its limitations in capturing fine details and complex boundaries.
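The HD95 values discussed above measure boundary disagreement. As a hedged illustration, the sketch below computes a 95th-percentile Hausdorff distance between two sets of boundary points, taking the larger of the two directed 95th percentiles. This is one common variant; the exact definition, the percentile interpolation, and the distance unit (assumed here to be pixels) used for Table 4 are not stated in the text, so these are assumptions.

```python
import math

def percentile(values, q):
    """Linear-interpolation percentile (q in [0, 100]) of a non-empty list."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)

def directed_distances(a, b):
    """For each point in a, the Euclidean distance to its nearest point in b."""
    return [min(math.dist(p, q) for q in b) for p in a]

def hd95(a, b):
    """Symmetric 95th-percentile Hausdorff distance between point sets a and b."""
    return max(percentile(directed_distances(a, b), 95),
               percentile(directed_distances(b, a), 95))

# Toy boundaries (invented coordinates): b is a shifted 3 pixels to the right.
a = [(0, 0), (0, 1), (0, 2)]
b = [(3, 0), (3, 1), (3, 2)]
shift = hd95(a, b)
```

Using the 95th percentile instead of the maximum makes the metric less sensitive to a few outlier boundary pixels, so a lower HD95 indicates that most of the predicted contour lies close to the annotated one.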

These quantitative results further validate the selection of K-Net as the optimal model for our task, while also demonstrating the competitive performance of Mask2Former and Segformer in medical image segmentation.

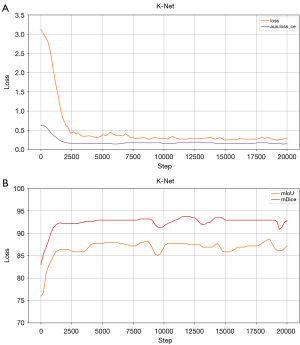

To further understand the training dynamics of the segmentation models, we analyze the loss curves and validation metrics (mIoU and mDice) during training. Figure 4 illustrates the training process of K-Net, the best-performing model. The loss curve (left) shows a rapid decrease in the early iterations, indicating effective learning and optimization, and stabilizes after approximately 2,500 iterations, demonstrating the model’s convergence. The validation metrics (right) exhibit a consistent improvement, with mIoU and mDice reaching high values, reflecting the model’s strong generalization capability.

For comparison, the training processes of the other models are provided in Figures S1,S2. While most models show stable convergence, K-Net achieves the highest validation metrics, further supporting its selection as the optimal model for our task.

In summary, the qualitative and quantitative analyses, along with the training process evaluation, demonstrate that K-Net outperforms the other segmentation models in thyroid nodule segmentation. Its superior performance is evident in both visual results (Figure 3) and quantitative metrics (Table 4), while the training process (Figure 4) highlights its stable convergence and strong generalization capability. Although Mask2Former and Segformer also show competitive performance, K-Net’s robustness in handling complex boundaries and artifacts makes it the optimal choice for our task. The comparative analysis of the training processes (Figures S1,S2) further supports this conclusion, as K-Net consistently achieves higher validation metrics than the CNN-based and other Transformer-based models. These findings underscore the effectiveness of hybrid architectures like K-Net in medical image segmentation tasks.

Performance of classification models

In this paper, we explored various deep learning architectures for the classification of FNAB-required thyroid ultrasound images. Six models were evaluated: Swin Transformer (48), MobileViT, VGG-19, ViT, MobileNet, and ResNet (49).

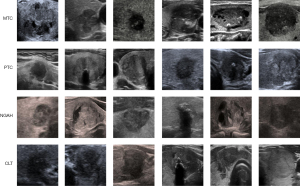

To clarify the dataset and the key characteristics of each class, Figure 5 presents representative examples of thyroid nodules for MTC, PTC, NGAH, and CLT, highlighting their distinct sonographic features:

- MTC: smooth margins, hypoechoic or markedly hypoechoic, and usually with a wider-than-tall shape (aspect ratio typically less than 1);

- PTC: often hypoechoic with microcalcifications, irregular margins, and a taller-than-wide shape;

- NGAH: usually isoechoic or hypoechoic with well-defined margins and a spongiform appearance;

- CLT: diffusely hypoechoic with heterogeneous texture and possible pseudonodules.

These features, such as echogenicity, margins, and internal composition, are critical for accurate classification.

As shown in Tables 5,6, our experimental results demonstrate that MobileViT achieves the highest mAcc and mFscore among the compared models on both datasets. In the validation dataset (Table 5), MobileViT achieved an mAcc of 92.33%, an mPrecision of 91.30%, an mRecall of 89.76%, and an mFscore of 90.42%, leading on every metric except mRecall, where Swin Transformer was marginally higher (89.92%). Similarly, in the test dataset (Table 6), MobileViT achieved an mAcc of 90.27%, an mPrecision of 89.51%, an mRecall of 91.02%, and an mFscore of 90.10%, leading on every metric except mPrecision, where Swin Transformer was marginally higher (89.87%).

Table 5

| Architecture | mAcc (%) | mPrecision (%) | mRecall (%) | mFscore (%) |
|---|---|---|---|---|
| Swin Transformer | 90.21 | 89.37 | 89.92 | 89.52 |
| MobileViT | 92.33 | 91.30 | 89.76 | 90.42 |
| VGG-19 | 86.51 | 85.55 | 82.70 | 83.63 |
| Vision Transformer | 87.04 | 84.53 | 81.61 | 82.13 |
| MobileNet | 85.45 | 84.15 | 81.62 | 82.28 |
| ResNet | 88.36 | 87.61 | 86.51 | 86.89 |

mAcc, mean accuracy; mFscore, mean F-score; mPrecision, mean precision; mRecall, mean recall.

Table 6

| Architecture | mAcc (%) | mPrecision (%) | mRecall (%) | mFscore (%) |
|---|---|---|---|---|
| Swin Transformer | 89.46 | 89.87 | 88.13 | 88.88 |
| MobileViT | 90.27 | 89.51 | 91.02 | 90.10 |
| VGG-19 | 83.51 | 81.86 | 81.43 | 81.52 |
| Vision Transformer | 82.97 | 81.73 | 83.18 | 81.00 |
| MobileNet | 81.89 | 79.48 | 78.12 | 78.52 |
| ResNet | 83.78 | 82.55 | 79.04 | 79.55 |

mAcc, mean accuracy; mFscore, mean F-score; mPrecision, mean precision; mRecall, mean recall.

The Swin Transformer also performed competitively, achieving an mAcc of 90.21% in the validation dataset and 89.46% in the test dataset, indicating its robustness. However, VGG-19, ViT, MobileNet, and ResNet showed relatively lower performance, with mAcc values ranging from 85.45% to 88.36% in the validation dataset and from 81.89% to 83.78% in the test dataset.

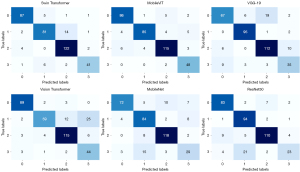

To further analyze the classification performance, Figure 6 presents the confusion matrices for the models, where the classes 0, 1, 2, and 3 correspond to MTC, PTC, NGAH, and CLT, respectively. These matrices provide a detailed breakdown of the model’s ability to distinguish between the four distinct categories. MobileViT’s confusion matrix demonstrates high diagonal values, indicating accurate classification across all categories, particularly for PTC and CLT, which are critical for clinical decision-making. This superior performance underscores the potential of MobileViT in accurately classifying thyroid ultrasound images, offering a promising tool for enhancing diagnostic accuracy and minimizing invasive diagnostic procedures like FNAB.
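The relationship between a confusion matrix and the averaged metrics reported in Tables 5,6 can be sketched as follows. The code assumes that rows are true classes and columns are predicted classes, that mPrecision, mRecall, and mFscore are unweighted means of the per-class values, and that accuracy is the overall fraction of correct predictions; the paper does not spell out these definitions, and the 4×4 counts below are invented for illustration only.

```python
def macro_metrics(cm):
    """Macro-averaged metrics from a square confusion matrix.

    cm[i][j] = number of samples whose true class is i and predicted class is j.
    """
    n = len(cm)
    precisions, recalls, fscores = [], [], []
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum
        actual_k = sum(cm[k])                          # row sum
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        fscores.append(f)
    total = sum(sum(row) for row in cm)
    return {
        "accuracy": sum(cm[k][k] for k in range(n)) / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "fscore": sum(fscores) / n,
    }

# Hypothetical counts for classes 0-3 (MTC, PTC, NGAH, CLT); not study data.
toy_cm = [
    [8, 1, 1, 0],
    [1, 9, 0, 0],
    [0, 0, 10, 0],
    [1, 0, 0, 9],
]
toy_scores = macro_metrics(toy_cm)
```

Because the F-score is averaged per class here, it generally differs from the harmonic mean of the averaged precision and recall, which matches the pattern of values in Tables 5,6.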

To further analyze the training dynamics of the evaluated models, Figures 7,8 present the training loss and accuracy curves, respectively. In Figure 7, the training loss curves illustrate the convergence behavior of each model over epochs. MobileViT and Swin Transformer exhibit the fastest and most stable convergence, with training loss decreasing rapidly and reaching a lower plateau compared to other models. This indicates their efficient learning capability and robustness in handling the classification task.

Similarly, Figure 8 shows the training accuracy curves, where MobileViT achieves the highest accuracy values across epochs, followed closely by Swin Transformer. Both models demonstrate consistent improvement in accuracy, reflecting their ability to effectively learn from the training data. In contrast, VGG-19, ViT, MobileNet, and ResNet show slower convergence and lower final accuracy, suggesting challenges in optimizing these architectures for the specific classification task.

These training dynamics further support the superior performance of MobileViT and Swin Transformer, as evidenced by their validation and test results, and highlight their potential as reliable tools for thyroid ultrasound image classification.

Discussion

While FNAB is the gold standard for thyroid nodule diagnoses, artificial intelligence (AI)-based ultrasound is a complementary, non-invasive tool. AI can serve as an initial screening method to identify patients who may need FNAB or subsequent surgery. By distinguishing between low-risk and high-risk nodules, AI can help prioritize FNAB for cases where it is truly necessary, optimizing patient management and minimizing unnecessary procedures.

Ultrasound technology plays a pivotal role in the evaluation of thyroid nodules, offering a non-invasive, accessible, and cost-effective method to assess characteristics that may indicate malignancy or benign conditions. By accurately characterizing thyroid nodules, ultrasound decreases the number of unnecessary biopsies performed on benign nodules (50). This not only reduces the burden on healthcare systems but also minimizes patient anxiety, potential complications, and costs associated with biopsy procedures. Ultrasound features can be used to stratify patients into different risk categories based on the appearance of nodules (51). Systems such as the TIRADS leverage ultrasound findings to recommend biopsy or follow-up based on risk levels. This stratification supports a tailored approach to patient care, ensuring that biopsies are recommended for nodules with a higher likelihood of cancer, while patients with low-risk nodules can avoid unnecessary procedures.

In addition to traditional methods (52,53), by providing an automated and accurate assessment of nodule malignancy risk, deep learning can reduce the number of unnecessary biopsies. This not only decreases patient discomfort and anxiety but also significantly cuts down healthcare costs and resource use, making the management of thyroid nodules more efficient. Our findings revealed that among several evaluated semantic segmentation models, K-Net emerged as the top performer, demonstrating exceptional ability in accurately delineating the ROI with an mIoU of 87.06% and a mean accuracy (mAcc) of 92.56%. For the classification task, the MobileViT model demonstrated superior performance, achieving the highest mAcc of 92.33% on the validation dataset and 90.27% on the test dataset. This indicates its effectiveness in accurately classifying thyroid nodules into papillary carcinoma, medullary carcinoma, NGAH, and CLT.

The dual deep learning architecture presented in this study significantly advances the field of thyroid ultrasound diagnostics by providing a robust, accurate, and non-invasive tool for early detection of malignant thyroid cancer. By automating the segmentation and classification process, our approach minimizes subjectivity and variability inherent in human interpretation, thus potentially reducing unnecessary biopsies and improving patient outcomes. Furthermore, our study underscores the importance of integrating advanced deep learning techniques in medical imaging, showcasing their potential to enhance diagnostic accuracy beyond the capabilities of traditional methods and existing thyroid classification systems. The promising results from our dual architecture pave the way for future research in this area, encouraging further development and validation of deep learning models in larger, multi-centric studies.
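One way to picture how the two stages could be chained is sketched below: a segmentation mask is reduced to a bounding box, the image is cropped to that ROI, and the crop is passed to a classifier. This is an illustrative assumption about the hand-off between stages, not a description of the implementation used in this study; `segment` and `classify` are hypothetical callables standing in for the trained K-Net and MobileViT models, and the `margin` parameter is likewise invented.

```python
def mask_bounding_box(mask, margin=0):
    """Return (top, left, bottom, right) around the foreground of a 2D 0/1 mask.

    bottom and right are exclusive; returns None when the mask has no foreground.
    """
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    height, width = len(mask), len(mask[0])
    cols = [c for c in range(width) if any(row[c] for row in mask)]
    top = max(rows[0] - margin, 0)
    bottom = min(rows[-1] + 1 + margin, height)
    left = max(cols[0] - margin, 0)
    right = min(cols[-1] + 1 + margin, width)
    return top, left, bottom, right

def crop(image, box):
    """Crop a 2D image (list of rows) to the given bounding box."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]

def two_stage_predict(image, segment, classify, margin=0):
    """Stage 1: segment the ROI. Stage 2: classify the cropped ROI.

    Returns None when stage 1 finds no nodule, so no classification is attempted.
    """
    mask = segment(image)
    box = mask_bounding_box(mask, margin)
    if box is None:
        return None
    return classify(crop(image, box))
```

Returning `None` for an empty mask makes the dependence of stage 2 on stage 1 explicit: any error in the segmentation output propagates directly to the classifier input.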

While the dual deep learning architecture presented in this study demonstrates significant potential, clinical validation remains a critical next step to ensure its practical applicability and reliability in real-world medical settings. Future work should focus on multi-centric clinical trials to validate the model’s performance across diverse patient populations and imaging equipment. Such trials would not only assess the generalizability of the model but also evaluate its impact on clinical decision-making, diagnostic accuracy, and patient outcomes.

Additionally, collaboration with clinicians and radiologists is essential to refine the model based on clinical feedback and to integrate it seamlessly into existing diagnostic workflows. Longitudinal studies could further investigate the model’s ability to track disease progression and its utility in guiding treatment plans. By addressing these aspects, our approach can evolve from a promising research tool to a clinically validated solution, ultimately enhancing the standard of care for patients with thyroid disorders.

Future studies should aim to quantify the reduction in unnecessary FNABs enabled by AI-based ultrasound systems, as this could significantly reduce iatrogenic risks and healthcare costs. Advances in medical imaging and AI have the potential to refine diagnostic workflows, ensuring that invasive procedures are reserved for cases with clear clinical indications.

A significant proportion of FNABs are performed on benign or low-risk nodules, which do not ultimately require intervention. AI-based ultrasound systems have the potential to significantly reduce this number by improving the precision of nodule classification. For example, by reliably identifying low-risk nodules (e.g., those with benign ultrasound features or low malignancy risk scores), AI could reduce the need for FNABs in these cases. Future research should aim to quantify this reduction through prospective studies, comparing the FNAB referral rates and outcomes between traditional diagnostic workflows and AI-assisted approaches. Reducing unnecessary FNABs not only minimizes iatrogenic risks (e.g., pain, bleeding, or infection) but also alleviates patient anxiety and healthcare costs. FNAB is a resource-intensive procedure, requiring specialized equipment, trained personnel, and follow-up care. By optimizing the selection of patients for FNAB, AI could lead to substantial cost savings for healthcare systems. Additionally, it could improve patient satisfaction by avoiding invasive procedures when they are not clinically justified.

In this study, the adoption of a four-class classification model provides additional clinical value by offering more detailed diagnostic information. Unlike traditional binary classification models, our approach distinguishes between different types of thyroid conditions, such as papillary carcinoma, medullary carcinoma, NGAH, and CLT. This refined classification aids in more tailored treatment planning and better-informed surgical decision-making. Furthermore, the four-class model increases the overall applicability and robustness of the AI tool, allowing it to address the diverse characteristics found in real-world clinical cases.

However, we must acknowledge that this study, despite demonstrating the application of deep learning to enhance the diagnostic precision of thyroid nodule identification and biopsy recommendation, has several limitations that warrant consideration. One of the primary constraints is the reliance on a limited dataset. The breadth and diversity of data available for training and validating our deep learning models, such as K-Net, are essential for the development of robust and generalizable AI tools. A limited dataset may not comprehensively represent the variability in thyroid nodules, including differences in size, echogenicity, and patient demographics, which could influence the model’s ability to learn nuanced patterns indicative of malignancy. Consequently, the findings and the model’s performance must be interpreted with caution, acknowledging the potential for improved accuracy and reliability with access to larger and more varied datasets. Moreover, although the automated labeling model, K-Net, employed for identifying ROIs within ultrasound images, demonstrated notable capability in streamlining the data preparation process, its ROI delineation was not perfectly accurate. This affects the overall performance and reliability of the predictive model. The challenge with automated ROI detection underscores the complexity of medical imaging, where variations in image quality, nodule characteristics, and overlapping tissue structures can confound even sophisticated AI algorithms. This limitation highlights the need for ongoing refinement of automated labeling tools, potentially incorporating more advanced AI techniques or hybrid approaches that combine automated processes with expert radiologist validation.

Addressing these limitations opens several avenues for future research. Expanding the dataset, both in size and diversity, is paramount to enhancing the model’s learning capacity and its applicability across different patient populations. Additionally, while the automated labeling model was developed for accurate and reliable ROI detection, it did not achieve 100% accuracy. This shortfall is critical, as the precise delineation of ROIs is fundamental for training deep learning models to recognize malignant features accurately. Inaccuracies in ROI labeling could lead to missed malignancies or false positives. Refining the automated labeling models, perhaps through the incorporation of newer AI methodologies or improved training strategies, could significantly bolster the accuracy of ROI detection. Collaboration with radiologists in refining and validating these models can also provide a critical bridge between AI potential and clinical applicability. Meanwhile, we plan to prioritize optimizing inference speed, developing confidence accumulation for video frames, and creating a sequence prediction model for real-time assessment on edge devices.

In summary, while the limitations related to the dataset size and automated labeling accuracy present challenges, they also outline the path forward for enriching deep learning research in medical imaging. By addressing these constraints, future studies can build upon our findings to develop more sophisticated, accurate, and clinically relevant AI tools for thyroid nodule diagnosis and management.

Conclusions

For the segmentation task, we chose K-Net as our model due to its robust performance in medical imaging, particularly for segmenting thyroid nodules. Compared to state-of-the-art approaches like DeepLabV3+, FASTSCNN, and UNet, K-Net excels in preserving spatial information and enhancing feature extraction without sacrificing efficiency. For the classification task, MobileViT demonstrated superior performance, achieving high accuracy and efficiency in distinguishing among MTC, PTC, NGAH, and CLT, making it an ideal choice for real-time clinical applications. Together, the combination of K-Net and MobileViT provides a comprehensive solution for thyroid nodule analysis, significantly enhancing diagnostic precision and clinical utility.

In conclusion, the dual deep learning approach presented in this study represents a significant step forward in the non-invasive prediction of FNAB-required thyroid cancer. It holds the promise of improving the accuracy and efficiency of thyroid nodule assessment, ultimately aiding in the early detection and treatment of malignant conditions, and contributing to better patient care and outcomes in thyroid cancer management.

Acknowledgments

We would like to express our sincere gratitude to the Breast and Thyroid Ultrasound Team at Peking University Shenzhen Hospital for providing additional datasets that significantly contributed to this study.

Footnote

Reporting Checklist: The authors have completed the CLEAR reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-24-2336/rc

Funding: This work was supported by

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-24-2336/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. This study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments. The study was approved by Medical Ethics Committee of Peking University Shenzhen Hospital (No. [2024] 084) and individual consent for this retrospective analysis was waived.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Cabanillas ME, McFadden DG, Durante C. Thyroid cancer. Lancet 2016;388:2783-95. [Crossref] [PubMed]

- Fugazzola L. Medullary thyroid cancer - An update. Best Pract Res Clin Endocrinol Metab 2023;37:101655. [Crossref] [PubMed]

- Filetti S, Durante C, Hartl D, Leboulleux S, Locati LD, Newbold K, Papotti MG, Berruti A; ESMO Guidelines Committee. Thyroid cancer: ESMO Clinical Practice Guidelines for diagnosis, treatment and follow-up†. Ann Oncol 2019;30:1856-83. [Crossref] [PubMed]

- Varadarajulu S, Fockens P, Hawes RH. Best practices in endoscopic ultrasound-guided fine-needle aspiration. Clin Gastroenterol Hepatol 2012;10:697-703. [Crossref] [PubMed]

- Namsena P, Songsaeng D, Keatmanee C, Klabwong S, Kunapinun A, Soodchuen S, Tarathipayakul T, Tanasoontrarat W, Ekpanyapong M, Dailey MN. Diagnostic performance of artificial intelligence in interpreting thyroid nodules on ultrasound images: a multicenter retrospective study. Quant Imaging Med Surg 2024;14:3676-94. [Crossref] [PubMed]

- Freilinger A, Kaserer K, Zettinig G, Pruidze P, Reissig LF, Rossmann T, Weninger WJ, Meng S. Ultrasound for the detection of the pyramidal lobe of the thyroid gland. J Endocrinol Invest 2022;45:1201-8. [Crossref] [PubMed]

- Kitahara CM, Sosa JA. The changing incidence of thyroid cancer. Nat Rev Endocrinol 2016;12:646-53. [Crossref] [PubMed]

- Larcher de Almeida AM, Delfim RLC, Vidal APA, Chaves MCDCM, Santiago ACL, Gianotti MF, Gonçalves MDDC, Vaisman M, de Carvalho DP, Teixeira PFDS. Combining the American Thyroid Association's Ultrasound Classification with Cytological Subcategorization Improves the Assessment of Malignancy Risk in Indeterminate Thyroid Nodules. Thyroid 2021;31:922-32. [Crossref] [PubMed]

- Yang WT, Ma BY, Chen Y. A narrative review of deep learning in thyroid imaging: current progress and future prospects. Quant Imaging Med Surg 2024;14:2069-88. [Crossref] [PubMed]

- Tessler FN, Middleton WD, Grant EG, Hoang JK, Berland LL, Teefey SA, Cronan JJ, Beland MD, Desser TS, Frates MC, Hammers LW, Hamper UM, Langer JE, Reading CC, Scoutt LM, Stavros AT. ACR Thyroid Imaging, Reporting and Data System (TI-RADS): White Paper of the ACR TI-RADS Committee. J Am Coll Radiol 2017;14:587-95. [Crossref] [PubMed]

- Haugen BR, Alexander EK, Bible KC, Doherty GM, Mandel SJ, Nikiforov YE, Pacini F, Randolph GW, Sawka AM, Schlumberger M, Schuff KG, Sherman SI, Sosa JA, Steward DL, Tuttle RM, Wartofsky L. 2015 American Thyroid Association Management Guidelines for Adult Patients with Thyroid Nodules and Differentiated Thyroid Cancer: The American Thyroid Association Guidelines Task Force on Thyroid Nodules and Differentiated Thyroid Cancer. Thyroid 2016;26:1-133. [Crossref] [PubMed]

- Russ G, Bonnema SJ, Erdogan MF, Durante C, Ngu R, Leenhardt L. European Thyroid Association Guidelines for Ultrasound Malignancy Risk Stratification of Thyroid Nodules in Adults: The EU-TIRADS. Eur Thyroid J 2017;6:225-37. [Crossref] [PubMed]

- Kwak JY, Han KH, Yoon JH, Moon HJ, Son EJ, Park SH, Jung HK, Choi JS, Kim BM, Kim EK. Thyroid imaging reporting and data system for US features of nodules: a step in establishing better stratification of cancer risk. Radiology 2011;260:892-9. [Crossref] [PubMed]

- Zhou J, Song Y, Zhan W, Wei X, Zhang S, Zhang R, et al. Thyroid imaging reporting and data system (TIRADS) for ultrasound features of nodules: multicentric retrospective study in China. Endocrine 2021;72:157-70. [Crossref] [PubMed]

- Kim DH, Kim SW, Basurrah MA, Lee J, Hwang SH. Diagnostic Performance of Six Ultrasound Risk Stratification Systems for Thyroid Nodules: A Systematic Review and Network Meta-Analysis. AJR Am J Roentgenol 2023;220:791-803. [Crossref] [PubMed]

- Yang L, Li C, Chen Z, He S, Wang Z, Liu J. Diagnostic efficiency among Eu-/C-/ACR-TIRADS and S-Detect for thyroid nodules: a systematic review and network meta-analysis. Front Endocrinol (Lausanne) 2023;14:1227339. [Crossref] [PubMed]

- Kim PH, Yoon HM, Hwang J, Lee JS, Jung AY, Cho YA, Baek JH. Diagnostic performance of adult-based ATA and ACR-TIRADS ultrasound risk stratification systems in pediatric thyroid nodules: a systematic review and meta-analysis. Eur Radiol 2021;31:7450-63. [Crossref] [PubMed]

- Wolinski K, Stangierski A, Ruchala M. Comparison of diagnostic yield of core-needle and fine-needle aspiration biopsies of thyroid lesions: Systematic review and meta-analysis. Eur Radiol 2017;27:431-6. [Crossref] [PubMed]

- Choi SH, Baek JH, Lee JH, Choi YJ, Hong MJ, Song DE, Kim JK, Yoon JH, Kim WB. Thyroid nodules with initially non-diagnostic, fine-needle aspiration results: comparison of core-needle biopsy and repeated fine-needle aspiration. Eur Radiol 2014;24:2819-26. [Crossref] [PubMed]

- Alexander EK, Cibas ES. Diagnosis of thyroid nodules. Lancet Diabetes Endocrinol 2022;10:533-9. [Crossref] [PubMed]

- Akkus Z, Cai J, Boonrod A, Zeinoddini A, Weston AD, Philbrick KA, Erickson BJ. A Survey of Deep-Learning Applications in Ultrasound: Artificial Intelligence-Powered Ultrasound for Improving Clinical Workflow. J Am Coll Radiol 2019;16:1318-28. [Crossref] [PubMed]

- Wildman-Tobriner B, Taghi-Zadeh E, Mazurowski MA. Artificial Intelligence (AI) Tools for Thyroid Nodules on Ultrasound, From the AJR Special Series on AI Applications. AJR Am J Roentgenol 2022;219:1-8. [Crossref] [PubMed]

- Shen YT, Chen L, Yue WW, Xu HX. Artificial intelligence in ultrasound. Eur J Radiol 2021;139:109717. [Crossref] [PubMed]

- Gao Y, Wang W, Yang Y, Xu Z, Lin Y, Lang T, Lei S, Xiao Y, Yang W, Huang W, Li Y. An integrated model incorporating deep learning, hand-crafted radiomics and clinical and US features to diagnose central lymph node metastasis in patients with papillary thyroid cancer. BMC Cancer 2024;24:69. [Crossref] [PubMed]

- Chi J, Li Z, Sun Z, Yu X, Wang H. Hybrid transformer UNet for thyroid segmentation from ultrasound scans. Comput Biol Med 2023;153:106453. [Crossref] [PubMed]

- Bi H, Cai C, Sun J, Jiang Y, Lu G, Shu H, Ni X. BPAT-UNet: Boundary preserving assembled transformer UNet for ultrasound thyroid nodule segmentation. Comput Methods Programs Biomed 2023;238:107614. [Crossref] [PubMed]

- Peng S, Liu Y, Lv W, Liu L, Zhou Q, Yang H, et al. Deep learning-based artificial intelligence model to assist thyroid nodule diagnosis and management: a multicentre diagnostic study. Lancet Digit Health 2021;3:e250-9. [Crossref] [PubMed]

- Göreke V. A Novel Deep-Learning-Based CADx Architecture for Classification of Thyroid Nodules Using Ultrasound Images. Interdiscip Sci 2023;15:360-73. [Crossref] [PubMed]

- Liu Y, Feng Y, Qian L, Wang Z, Hu X. Deep learning diagnostic performance and visual insights in differentiating benign and malignant thyroid nodules on ultrasound images. Exp Biol Med (Maywood) 2023;248:2538-46. [Crossref] [PubMed]

- Liu Y, Chen C, Wang K, Zhang M, Yan Y, Sui L, Yao J, Zhu X, Wang H, Pan Q, Wang Y, Liang P, Xu D. The auxiliary diagnosis of thyroid echogenic foci based on a deep learning segmentation model: A two-center study. Eur J Radiol 2023;167:111033. [Crossref] [PubMed]

- Yang J, Page LC, Wagner L, Wildman-Tobriner B, Bisset L, Frush D, Mazurowski MA. Thyroid Nodules on Ultrasound in Children and Young Adults: Comparison of Diagnostic Performance of Radiologists' Impressions, ACR TI-RADS, and a Deep Learning Algorithm. AJR Am J Roentgenol 2023;220:408-17. [Crossref] [PubMed]

- Chen G, Tan G, Duan M, Pu B, Luo H, Li S, Li K. MLMSeg: A multi-view learning model for ultrasound thyroid nodule segmentation. Comput Biol Med 2024;169:107898. [Crossref] [PubMed]

- Wang J, Jiang J, Zhang D, Zhang YZ, Guo L, Jiang Y, Du S, Zhou Q. An integrated AI model to improve diagnostic accuracy of ultrasound and output known risk features in suspicious thyroid nodules. Eur Radiol 2022;32:2120-9. [Crossref] [PubMed]

- Sun J, Li C, Lu Z, He M, Zhao T, Li X, Gao L, Xie K, Lin T, Sui J, Xi Q, Zhang F, Ni X. TNSNet: Thyroid nodule segmentation in ultrasound imaging using soft shape supervision. Comput Methods Programs Biomed 2022;215:106600. [Crossref] [PubMed]

- Zhao SX, Chen Y, Yang KF, Luo Y, Ma BY, Li YJ. A Local and Global Feature Disentangled Network: Toward Classification of Benign-Malignant Thyroid Nodules From Ultrasound Image. IEEE Trans Med Imaging 2022;41:1497-509. [Crossref] [PubMed]

- Deng P, Han X, Wei X, Chang L. Automatic classification of thyroid nodules in ultrasound images using a multi-task attention network guided by clinical knowledge. Comput Biol Med 2022;150:106172. [Crossref] [PubMed]

- Wang J, Yang X, Jia X, Xue W, Chen R, Chen Y, Zhu X, Liu L, Cao Y, Zhou J, Ni D, Gu N. Thyroid ultrasound diagnosis improvement via multi-view self-supervised learning and two-stage pre-training. Comput Biol Med 2024;171:108087. [Crossref] [PubMed]

- Gökmen Inan N, Kocadağlı O, Yıldırım D, Meşe İ, Kovan Ö. Multi-class classification of thyroid nodules from automatic segmented ultrasound images: Hybrid ResNet based UNet convolutional neural network approach. Comput Methods Programs Biomed 2024;243:107921. [Crossref] [PubMed]

- Kang Q, Lao Q, Li Y, Jiang Z, Qiu Y, Zhang S, Li K. Thyroid nodule segmentation and classification in ultrasound images through intra- and inter-task consistent learning. Med Image Anal 2022;79:102443. [Crossref] [PubMed]

- Vadhiraj VV, Simpkin A, O'Connell J, Singh Ospina N, Maraka S, O'Keeffe DT. Ultrasound Image Classification of Thyroid Nodules Using Machine Learning Techniques. Medicina (Kaunas) 2021;57:527. [Crossref] [PubMed]

- Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In: Navab N, Hornegger J, Wells W, Frangi A, editors. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. Lecture Notes in Computer Science, vol 9351. Springer, Cham; 2015:234-41.

- Chen LC, Zhu Y, Papandreou G, Schroff F, Adam H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In: Ferrari V, Hebert M, Sminchisescu C, Weiss Y, editors. Computer Vision – ECCV 2018. Lecture Notes in Computer Science, vol 11211. Springer, Cham; 2018:833-51.

- Zhao H, Shi J, Qi X, Wang X, Jia J. Pyramid scene parsing network. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2017:6230-9.

- Liu J, Zhang H, Xiao D. Research on anti-clogging of ore conveyor belt with static image based on improved Fast-SCNN and U-Net. Sci Rep 2023;13:17880. [Crossref] [PubMed]

- Xie E, Wang W, Yu Z, Anandkumar A, Alvarez JM, Luo P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Advances in Neural Information Processing Systems 2021;34:12077-90.

- Cheng B, Misra I, Schwing AG, Kirillov A, Girdhar R. Masked-attention mask transformer for universal image segmentation. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 18-24 June 2022; New Orleans, LA, USA. IEEE; 2022.

- Zhang W, Pang J, Chen K, Loy CC. K-net: Towards unified image segmentation. Advances in Neural Information Processing Systems 2021;34:10326-38.

- Liu Z, Lin Y, Cao Y, Hu H, Wei Y, Zhang Z, Lin S, Guo B. Swin transformer: Hierarchical vision transformer using shifted windows. 2021 IEEE/CVF International Conference on Computer Vision (ICCV); 10-17 October 2021; Montreal, QC, Canada. IEEE; 2021.

- He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 27-30 June 2016; Las Vegas, NV, USA. IEEE; 2016.

- Kobaly K, Kim CS, Mandel SJ. Contemporary Management of Thyroid Nodules. Annu Rev Med 2022;73:517-28. [Crossref] [PubMed]

- Durante C, Grani G, Lamartina L, Filetti S, Mandel SJ, Cooper DS. The Diagnosis and Management of Thyroid Nodules: A Review. JAMA 2018;319:914-24. [Crossref] [PubMed]

- Ruan J, Xu X, Cai Y, Zeng H, Luo M, Zhang W, Liu R, Lin P, Xu Y, Ye Q, Ou B, Luo B. A Practical CEUS Thyroid Reporting System for Thyroid Nodules. Radiology 2022;305:149-59. [Crossref] [PubMed]

- Holt EH. Current Evaluation of Thyroid Nodules. Med Clin North Am 2021;105:1017-31. [Crossref] [PubMed]