Utilizing shallow features and spatial context for weakly supervised intracerebral hemorrhage segmentation

Introduction

Intracerebral hemorrhage (ICH) refers to non-traumatic bleeding within the brain parenchyma. It is the second most common type of stroke, with an acute phase (within 24 hours of onset) mortality rate of approximately 30–40% (1). In many Asian countries, ICH is the second leading cause of death and permanent disability (2), severely impacting human physical and mental health and imposing a substantial economic burden on patients’ families and society. Early intervention during the acute phase can significantly improve functional prognosis, enhancing survival rates and quality of life (3). Thus, the rapid and accurate diagnosis of hemorrhage sites is crucial for early treatment.

With the advancement of deep learning, modern deep neural networks such as convolutional neural networks (CNNs) (4-6) have become increasingly popular for assisting doctors in diagnosing diseases (7,8). An ICH classification model indicates whether hemorrhage is present, but it does not specify the precise location of the hemorrhage. Semantic segmentation (7,9) trains a model to classify every pixel and typically requires abundant pixel-level labeled data. Unlike classification models, segmentation models can identify the specific location and contour of the hemorrhage, thus offering better diagnostic assistance to doctors. In ICH segmentation, despite numerous efforts using fully supervised semantic segmentation with pixel-level annotations (10-13), publicly available pixel-level annotated ICH datasets remain very limited because labeling each pixel is costly and time-consuming. For instance, the publicly available Brain Hemorrhage Segmentation Dataset (BHSD) (14) includes only 200 scan volumes with pixel-level annotations. Therefore, weakly supervised semantic segmentation (WSSS) methods using weak annotations, such as image-level annotations (15), point annotations (16), scribbles (17), and bounding boxes (18), have emerged. Image-level annotations are easy to obtain but provide no information about the location of the target object, which makes designing a WSSS model that uses only image-level annotations challenging.

The existing WSSS paradigm trains a classification model and then generates a class activation map (CAM) to obtain the rough location of the target object. In essence, these methods compute a weighted sum of feature maps to highlight the image regions on which the classification model focuses most. These regions are often only the most discriminative parts of an object (19), a phenomenon known as under-activation. Several approaches have been explored to address it. Region-erasure methods (20,21) hide the most discriminative parts identified by CAM, forcing the model to attend to other parts of the target object and thus expanding the activation region. However, this approach assumes that the target object is larger than the activation region. Pixel-pixel methods (15,22) use the under-activated areas of the target object in CAM as “seeds” and expand the activation region by computing pixel-to-pixel relationships until it covers the entire target object. Ahn et al. (22) proposed AffinityNet, which learns the semantic relationships between pixels within an image to further refine the initial CAM. Although effective on natural images, these methods do not account for inter-image relationships. Cross-image methods (23,24) explore inter-image relationships. Wu et al. (25) introduced the embedded discriminative attention mechanism (EDAM), which pairs images containing similar objects and combines their CAMs so that they complement each other. However, EDAM focuses on the semantics of similar objects rather than on spatial context. Salient object-guided methods (26,27) use saliency maps that highlight the main objects in an image (28) to distinguish background from foreground. For instance, Chen et al. (27) proposed I2CRC, which applies inter- and intra-class constraints guided by saliency maps. However, these methods are poorly suited to medical images, whose boundaries are inherently ambiguous.
Unsupervised-enhanced methods (29,30) integrate unsupervised methods into weakly supervised learning to obtain more accurate object contour information. For example, Jo et al. (30) used semantic consistency features from unsupervised techniques to eliminate biased objects in pseudo segmentation masks. However, these methods require large datasets, whereas medical imaging datasets, particularly for ICH, are often small, which makes model convergence difficult. Recently, Abidin et al. (31) comprehensively reviewed research on medical image segmentation, especially the segmentation of brain abnormalities. Brain tumor segmentation and ICH segmentation both involve detecting and delineating abnormal regions in brain images, so this body of work provides an important reference for our ICH segmentation strategy.

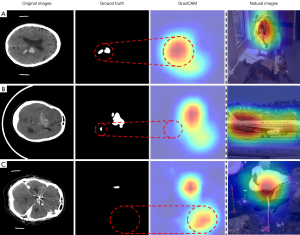

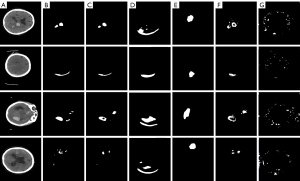

Compared with other segmentation tasks, ICH segmentation presents three unique challenges, as shown in Figure 1. (I) Over-activation: hemorrhage sites are often small, and their locations and shapes vary. In weakly supervised ICH segmentation, commonly used CAM methods such as Grad-CAM (32), which operate on deep feature maps, often produce over-activated maps whose activation regions cover not only the hemorrhage but also large surrounding areas, leading to significant segmentation errors. (II) Incomplete-activation: two or more hemorrhage sites are commonly observed in scans of the same patient. The model often focuses only on the prominent ones and neglects the others, potentially missing critical information. (III) Misleading-activation: the model correctly predicts that a slice contains hemorrhage, but the activation region does not correspond to the actual hemorrhage site. These issues make accurate segmentation of hemorrhage sites difficult with only image-level annotations. The “natural images” column in Figure 1 contrasts the activation patterns of CAMs on natural images with those on computed tomography (CT) images; in natural images, CAM activation typically concentrates on the most prominent parts of objects. Current WSSS methods do not address these ICH-specific issues. Because of the limited supervisory information, WSSS networks often struggle to achieve satisfactory results, and on a new dataset they can easily learn a result that looks plausible but is substantially biased.

Based on the above observations, this paper proposes a method to resolve the issues of over-activation, incomplete-activation, and misleading-activation in CAMs generated from existing methods. This method utilizes fine-grained information from shallow feature maps to accurately locate hemorrhage sites in images and achieve precise object contours to address over-activation and misleading-activation. Additionally, it fully utilizes the spatial contextual information of CT to address incomplete-activation in CAM, thereby identifying other potential hemorrhage sites. By integrating CAMs from two models, the segmentation masks are further refined and expanded, effectively mitigating the inaccuracies and incompleteness caused by over-activation and incomplete-activation. Currently, research and application of WSSS in medical imaging are limited. Our study fills this gap by utilizing image-level annotations for WSSS in ICH.

In summary, our main contributions are as follows:

- We propose a novel WSSS method utilizing shallow features and spatial context for weakly-supervised ICH segmentation. The segmentation results are significantly improved compared to other WSSS methods, reaching state-of-the-art performance.

- The Shallow-Feature CAM module addresses issues of over-activation and misleading-activation by utilizing fine-grained information from shallow feature maps to capture more precise locations and contours of hemorrhage sites. It effectively selects important features from the perspectives of gradients and pixel variances of feature maps.

- The Spatial Context-Aware (SCA) module addresses the issue of incomplete-activation by leveraging the distinct focus areas of different models and adjusting weights according to the distance between adjacent slices. It integrates spatial contextual information to optimize the CAM of the current slice, fills in regions that were not activated, and redirects the CAM’s attention to inactivated hemorrhage areas.

We present this article in accordance with the TRIPOD+AI reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-24-1462/rc).

Methods

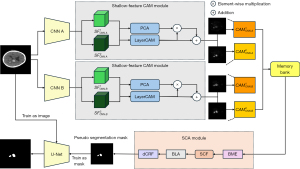

Figure 2 presents an overview of our proposed method. First, we train two CNNs for classification. The feature maps generated by the trained classification models contain information about the target locations. We then use the Shallow-Feature CAM module to generate CAMs from the shallow feature maps extracted from the two models and store them in the memory bank. In the memory bank, consecutive slices predicted as hemorrhage are stored as a sequence for spatial context fusion (SCF) in SCA. Once the CAMs for all images have been generated, SCA integrates the CAMs produced by the two models and then uses the CAM information from adjacent slices for SCF. Next, it aggregates and binarizes the CAMs from different layers and, following existing work (22), refines the resulting pseudo segmentation masks with a dense conditional random field (dCRF) (33). Finally, the generated pseudo segmentation masks are used to train a standard U-Net. During inference, only the trained U-Net is required. This study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments.
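The memory-bank step that keeps consecutive hemorrhage-positive slices together as one sequence can be sketched in plain Python. This is a minimal illustration, not the authors' code: the function name `hemorrhage_runs` is hypothetical, and it assumes the per-slice classifier decisions for one patient are available as booleans in the original (unshuffled) slice order.

```python
from typing import List, Sequence


def hemorrhage_runs(preds: Sequence[bool]) -> List[List[int]]:
    """Group indices of consecutive slices predicted as hemorrhagic.

    Hypothetical helper: `preds` holds one patient's per-slice classifier
    decisions in slice order; each returned run is one SCF sequence.
    """
    runs: List[List[int]] = []
    current: List[int] = []
    for idx, is_hemorrhage in enumerate(preds):
        if is_hemorrhage:
            current.append(idx)      # extend the ongoing run
        elif current:
            runs.append(current)     # a negative slice closes the run
            current = []
    if current:
        runs.append(current)         # run reaching the last slice
    return runs


print(hemorrhage_runs([False, True, True, False, True, False]))
```

Each run would then be fused independently, so context never crosses a slice that the classifier judged hemorrhage-free.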

Motivation

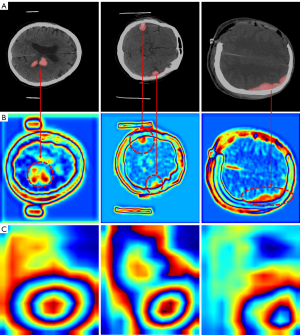

As shown in Figure 3, common CAM-generation methods, for example, Grad-CAM (32), SmoothGradCAM++ (34), and ScoreCAM (35), generate CAMs from the deepest feature maps of the backbone, that is, the output of the final convolutional layer, neglecting the fine-grained information in shallow feature maps. The deepest feature maps have very low resolution, and each of their pixels has a large receptive field, so each CAM pixel corresponds to a large region of the input image. When the CAM is interpolated back to the original size, a few activated pixels therefore become a large activated region. Because hemorrhage sites in this task are often small, this leads to over-activation and makes accurate localization of hemorrhage sites very difficult.
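A small calculation makes the resolution argument concrete. The sketch below assumes the 384×384 input used in this study and the standard ResNet output strides (4 for Layer1, 8 for Layer2, 32 for the final stage), and upsamples a CAM containing a single activated pixel with nearest-neighbour interpolation via `np.kron`. Real CAM pipelines usually use bilinear interpolation, which spreads the activation even further.

```python
import numpy as np

INPUT = 384  # input resolution used in this study


def upsampled_area(stride: int) -> int:
    """Input pixels covered by ONE activated CAM pixel after
    nearest-neighbour upsampling of an (INPUT // stride)^2 map."""
    size = INPUT // stride
    cam = np.zeros((size, size))
    cam[size // 2, size // 2] = 1.0                  # a single activated pixel
    up = np.kron(cam, np.ones((stride, stride)))      # nearest-neighbour upsampling
    assert up.shape == (INPUT, INPUT)
    return int(up.sum())


# Assumed standard ResNet strides for the three stages compared here.
for name, stride in [("Layer1", 4), ("Layer2", 8), ("final stage", 32)]:
    print(name, INPUT // stride, upsampled_area(stride))
```

One activated pixel of the 12×12 final-stage map covers 1,024 input pixels, compared with 16 for Layer1, which is why small hemorrhages tend to be over-activated when CAMs come from the deepest layer.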

Jiang et al. (36) attributed the poor performance of these methods on shallow feature maps to applying a single weight to the entire feature map of a channel, without considering the highly dispersed and fine-grained nature of shallow feature maps. They proposed LayerCAM, which multiplies the gradient and activation value of each pixel within the feature maps and then linearly sums all weighted feature maps along the channel dimension. Negative gradients are set to zero with the rectified linear unit (ReLU) activation function. This approach retains the importance of different channels and spatial positions, producing higher-resolution and finer CAMs. LayerCAM computes the CAM for class $c$ as follows:

$$
w_{xy}^{kc} = \mathrm{ReLU}\left(g_{xy}^{kc}\right), \qquad \hat{A}_{xy}^{k} = w_{xy}^{kc} \cdot A_{xy}^{k}, \qquad M^{c} = \mathrm{ReLU}\left(\sum_{k} \hat{A}^{k}\right)
$$

where $g_{xy}^{kc}$ denotes the gradient of the score for class $c$ with respect to the activation at spatial position $(x, y)$ of the $k$-th feature map, and $A_{xy}^{k}$ denotes the activation value at spatial position $(x, y)$ of the $k$-th feature map.
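The LayerCAM computation described above reduces to a few array operations once the activations and gradients of a layer have been captured (for example, with framework hooks, which are not shown). The sketch below assumes both are given as `(K, H, W)` arrays for one image and one class; the final max-normalization to [0, 1] is a common convention assumed here rather than part of the formula.

```python
import numpy as np


def layercam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """LayerCAM for one image and one target class.

    activations, gradients: (K, H, W) arrays holding A^k and dy^c/dA^k
    for the K channels of the chosen layer.
    Returns an (H, W) map; the [0, 1] scaling is an added convention.
    """
    weights = np.maximum(gradients, 0.0)          # ReLU on each position's gradient
    weighted = weights * activations              # per-position weighting
    cam = np.maximum(weighted.sum(axis=0), 0.0)   # sum over channels, then ReLU
    peak = cam.max()
    return cam / peak if peak > 0 else cam


rng = np.random.default_rng(0)
A = rng.random((8, 4, 4))            # toy non-negative activations
G = rng.standard_normal((8, 4, 4))   # toy gradients with mixed signs
M = layercam(A, G)
print(M.shape, M.min() >= 0.0)
```

Unlike Grad-CAM, the weight is not averaged over the whole channel, so a channel can contribute at one position and be ignored at another.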

Yasuki et al. (37) showed that, for modern CNNs with large convolution kernels and large receptive fields, the first principal component (PC1) obtained by applying principal component analysis (PCA) to the deepest feature maps of natural images extracts object edges well. Inspired by this work, we found (Figure 4) that the PC1 obtained from the shallow feature maps of a CNN with small convolution kernels contains relatively complete contour information for suspected hemorrhage areas. This indicates that, after shallow-layer extraction by the CNN, the variance of suspected hemorrhage areas in the shallow feature maps is relatively large and differs markedly from that of the surrounding normal areas. This property of shallow features provides a sufficient basis for obtaining CAMs that accurately localize hemorrhage sites from shallow feature maps. In contrast, the PC1 obtained from the deepest feature maps is very coarse and cannot be used effectively. PC1 from shallow feature maps can therefore also be used to refine edges. Meanwhile, because the activation values in CAMs generated from shallow feature maps are often relatively small (36), the difference between foreground and background is also small. Combining PC1 with the CAM amplifies the activation values of all suspected hemorrhagic regions, allowing hemorrhagic areas to be distinguished more precisely from extensive background noise. Additionally, following Yasuki et al. (37), we evaluated a new-generation CNN with large receptive fields on the ICH dataset. The CAMs in the fourth column of Figure 3, generated with the Shallow-Feature CAM method from the stage 2 feature maps of ConvNeXt V2 (38), contain too many activated areas to be useful.
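Computing PC1 from a feature map amounts to treating every spatial position as one sample whose features are its channel values. The sketch below does this with `np.linalg.svd` on the centred `(H*W, C)` matrix. Two details are assumptions, not taken from the source: the sign of the component is flipped so that the largest-magnitude scores are positive, and the scores are min-max scaled to [0, 1].

```python
import numpy as np


def pc1_map(features: np.ndarray) -> np.ndarray:
    """First principal component of a (C, H, W) feature map as an (H, W) map."""
    c, h, w = features.shape
    x = features.reshape(c, h * w).T            # (H*W, C): one sample per position
    x = x - x.mean(axis=0, keepdims=True)       # centre each channel
    # The first right singular vector of the centred data is the PC1 direction.
    _, _, vt = np.linalg.svd(x, full_matrices=False)
    proj = x @ vt[0]                            # PC1 score of every position
    # PCA signs are arbitrary; orient so the largest-magnitude scores are
    # positive (an assumption made for this sketch).
    if abs(proj.min()) > abs(proj.max()):
        proj = -proj
    proj = (proj - proj.min()) / (proj.max() - proj.min() + 1e-8)
    return proj.reshape(h, w)


feats = np.zeros((4, 6, 6))
feats[:, 2:4, 2:4] = 5.0        # a small high-variance "lesion" in every channel
print(np.round(pc1_map(feats), 2))
```

In the toy map, the small region that differs strongly from its surroundings receives scores near 1 and the rest near 0, which mirrors the variance-based contour behaviour described above.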

Notably, we also considered replacing CNNs with more modern architectures such as the Vision Transformer (ViT) (39) for feature extraction. However, on the one hand, ViT-based models usually require a large amount of data for effective training and optimization, and our dataset is relatively small, so they performed poorly on the classification task. In preliminary experiments, a ViT-based model achieved significantly lower ICH classification accuracy than a CNN-based model, which directly reduced the subsequent segmentation accuracy. On the other hand, the attention mechanism of ViT-based models is global and lacks the local feature extraction ability and locality inductive bias of convolution. In this paper, we need to extract fine-grained features from the shallow layers to precisely identify the boundaries and locations of hemorrhage sites. The global attention mechanism of ViTs makes it difficult to focus on subtle local features, and in medical images such as ICH scans, where local feature differences are relatively small, ViTs cannot extract key information as effectively as CNNs. In our ICH segmentation task, the CNN-based method shows clear advantages for precise segmentation using shallow features. Therefore, we chose CNNs as the feature extraction architecture for this study.

Additionally, we observed two points. First, the spatial context information in the two-dimensional (2D) slices obtained from CT scans is lost when input into the 2D classification model and is not utilized. However, the consecutive CAMs for hemorrhage localization generated from CT slices of the same patient can complement each other. Second, for the task addressed in this paper, different models focus on different regions of the target, making it possible for the CAMs from different models to complement each other.

Therefore, we use the Shallow-Feature CAM module, which combines the target localization information extracted from shallow feature maps by LayerCAM with the edge information extracted by PC1 to obtain CAMs. We then design a method to aggregate the information from consecutive contextual CAMs and a strategy to aggregate the CAMs generated by two models.

Generating CAMs from shallow features

In this section, we introduce the Shallow-Feature CAM module. Directly applying methods such as Grad-CAM to shallow feature maps yields suboptimal CAMs, because these methods assign a global weight to the entire channel without accounting for the substantial background noise in shallow feature maps. We therefore employ LayerCAM, which is specifically designed to capture fine-grained information from shallow feature maps. For the task in this paper, as illustrated in the Shallow-Feature CAM module of Figure 2, we use ResNet34 and ResNet50 as classifiers. First, we obtain the feature maps $F_{i}^{j}$ from Layer1 and Layer2 of the two classifiers and apply LayerCAM to obtain the corresponding CAMs $M_{i}^{j}$:

$$
M_{i}^{j} = \mathrm{LayerCAM}\left(F_{i}^{j}\right), \qquad i \in \{1, 2\}, \quad j \in \{\text{ResNet34}, \text{ResNet50}\}
$$

where $i$ represents the layer from which the feature map is derived, and $j$ represents the specific model.

Next, we apply PCA to the feature maps $F_{i}^{j}$ to obtain their first principal component $P_{i}^{j}$ (PC1). Multiplying $P_{i}^{j}$ element-wise by the CAM $M_{i}^{j}$ suppresses much of the background noise activation present in $M_{i}^{j}$. Finally, we add this product to $M_{i}^{j}$ to obtain the PC1 edge-optimized CAM $\hat{M}_{i}^{j}$:

$$
\hat{M}_{i}^{j} = M_{i}^{j} + P_{i}^{j} \odot M_{i}^{j}
$$

where $i$ represents the layer from which the feature map is derived, $j$ represents the specific model, and $\odot$ denotes element-wise multiplication. This increases the relative magnitude of the activation values in the most suspected hemorrhage areas of the CAM.

Finally, we store these CAMs in the memory bank.
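Reading the combination step as $\hat{M} = M + P \odot M$ (our interpretation of the prose, assuming the CAM and the PC1 map have already been resized to the same resolution and scaled to [0, 1]), it is a one-line operation. The toy numbers show how it widens the gap between a suspected-hemorrhage pixel and a background pixel. The function name is hypothetical.

```python
import numpy as np


def pc1_edge_optimised_cam(cam: np.ndarray, pc1: np.ndarray) -> np.ndarray:
    """Return M + P * M for a CAM M and a PC1 map P of the same shape.

    Where P is near 0 (low variance, background) the CAM is nearly unchanged;
    where P is near 1 (high variance, suspected hemorrhage) it is doubled.
    """
    if cam.shape != pc1.shape:
        raise ValueError("CAM and PC1 map must have the same shape")
    return cam + pc1 * cam


cam = np.array([[0.1, 0.4]])   # background pixel, suspected-hemorrhage pixel
pc1 = np.array([[0.0, 1.0]])
out = pc1_edge_optimised_cam(cam, pc1)
print(out)  # the gap between the two pixels grows from 0.3 to 0.7
```

Because the PC1 map only scales the existing CAM, positions with zero CAM activation stay at zero regardless of their PC1 value.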

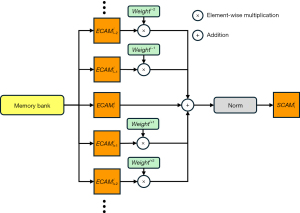

Spatial Context-Aware module

The Spatial Context-Aware module consists of four steps: bi-model CAM ensemble (BME), SCF, bi-layer aggregation (BLA), and dCRF. After the required CAMs have been generated for all target images, the next step is BME, as shown in the SCA part of Figure 2. First, following LayerCAM, because the activation values of CAMs generated from shallow feature maps are relatively small, we amplify the CAM from the shallowest layer, Layer 1; otherwise, the information from the shallowest CAMs would carry too little weight. The amplified CAM is calculated as follows:

$$
\bar{M}_{1}^{j} = \tanh\left(\frac{\lambda \cdot \hat{M}_{1}^{j}}{\max\left(\hat{M}_{1}^{j}\right)}\right)
$$

where λ is the scaling factor; following Jiang et al. (36), we set λ to 2.
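LayerCAM scales shallow-layer CAMs by applying a hyperbolic tangent to the max-normalized map. Assuming the amplification here takes that same form with λ = 2 (the exact formula used in this study is an assumption), it can be sketched as follows. The output stays within [0, tanh(λ)], so weak shallow-layer activations are lifted while the map remains bounded.

```python
import numpy as np


def amplify(cam: np.ndarray, lam: float = 2.0) -> np.ndarray:
    """LayerCAM-style scaling tanh(lam * M / max(M)); assumed form."""
    peak = cam.max()
    if peak <= 0:
        return np.zeros_like(cam, dtype=float)   # nothing activated
    return np.tanh(lam * cam / peak)


weak = np.array([0.01, 0.02, 0.05])   # typical small Layer 1 activations
print(np.round(amplify(weak), 3))
```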

Then, the CAMs generated from the same layer of the two models are integrated to obtain the ensemble CAM $E_{i}$, which is calculated as follows:

where $i$ represents the layer from which the feature map is derived, and $j$ and $m$ represent the two models. The resulting $E_{i}$ is stored in the memory bank for further use.

Next, we perform SCF. As shown in Figure 5, when the current slice is predicted to be hemorrhagic, its ensemble CAM $E_{i}$ is combined with those of its adjacent slices, and the weight of each adjacent slice is adjusted according to its distance from the current slice. The information from the adjacent slices is integrated using these adjusted weights and added to the current slice to obtain the CAM $S_{i}$, which is calculated as follows:

where $i$ represents the layer from which the feature map is derived, $c$ is the maximum number of preceding and following slices used for integration, $l$ is the index of the current slice, NF is the normalization factor, $w$ is the weight coefficient of the adjacent slices, and $n$ is the length of the sequence of consecutive slices predicted to be hemorrhagic. Based on the meaning of these parameters, we set $c$ to 2 and $w$ to 0.5. We observed that the values of $c$ and $w$ affect the choice of binarization threshold only slightly.
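The fusion equation itself is not reproduced here, so the following is only one plausible reading of the description, written as a hypothetical sketch. It assumes that a neighbour at distance d receives weight w**d, that NF is the sum of the weights of the neighbours that actually exist inside the run of n hemorrhage-positive slices, and that the normalized context is added to the current slice's CAM (so values can exceed 1 before thresholding). Names and the exact weighting are illustrative assumptions.

```python
import numpy as np
from typing import List


def spatial_context_fusion(cams: List[np.ndarray], c: int = 2,
                           w: float = 0.5) -> List[np.ndarray]:
    """Hypothetical SCF over one run of n = len(cams) consecutive
    hemorrhage-positive slices (assumed weighting, see lead-in)."""
    n = len(cams)
    fused = []
    for idx in range(n):                        # idx plays the role of l
        context = np.zeros_like(cams[idx], dtype=float)
        nf = 0.0                                # normalization factor NF
        for d in range(1, c + 1):
            for k in (idx - d, idx + d):
                if 0 <= k < n:                  # stay inside the run
                    context += (w ** d) * cams[k]
                    nf += w ** d
        if nf > 0:
            fused.append(cams[idx] + context / nf)
        else:
            fused.append(cams[idx].astype(float))   # isolated slice: unchanged
    return fused


run = [np.array([1.0]), np.array([0.0]), np.array([1.0])]
print([float(x[0]) for x in spatial_context_fusion(run)])
```

In the toy run, the middle slice had no activation of its own and inherits activation from both neighbours, which is the incomplete-activation repair the module aims for.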

Finally, in the BLA step, the processed CAMs from the two layers are each binarized with the threshold and merged with a logical ‘OR’, resulting in the final pseudo segmentation mask $Y$:

$$
Y(x, y) =
\begin{cases}
1, & S_{1}(x, y) > TH \ \lor \ S_{2}(x, y) > TH \\
0, & \text{otherwise}
\end{cases}
$$

where $TH$ is the selected threshold, 1 represents the foreground, and 0 represents the background. The effects of different threshold values are analyzed in the Results section.
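The OR-based binarization is straightforward with NumPy boolean operations. In this minimal sketch, the default threshold of 0.2 follows the Results section's observation that a value near 0.2 is close to optimal on both datasets; the function name is hypothetical.

```python
import numpy as np


def pseudo_mask(cam_layer1: np.ndarray, cam_layer2: np.ndarray,
                th: float = 0.2) -> np.ndarray:
    """Threshold each layer's processed CAM and merge them with logical OR.

    Returns a uint8 mask: 1 = foreground (hemorrhage), 0 = background.
    """
    fg = np.logical_or(cam_layer1 > th, cam_layer2 > th)
    return fg.astype(np.uint8)


l1 = np.array([[0.10, 0.30], [0.00, 0.05]])
l2 = np.array([[0.25, 0.10], [0.00, 0.19]])
print(pseudo_mask(l1, l2))
```

A pixel only needs to clear the threshold in one layer to be kept, so hemorrhage found by either Layer 1 or Layer 2 survives.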

In medical images, especially CT images of ICH, the spatial context information between slices is crucial for accurate segmentation. The proposed BME module integrates the CAMs generated by two models, exploiting the differences between their focus regions to expand the activation range of hemorrhage areas. The SCF module adjusts the weights according to the distance between adjacent slices to fuse spatial context information and fill in the inactivated regions in the CAM of the current slice, which is particularly effective when the same patient has multiple hemorrhage sites. The BLA module further optimizes the aggregation of CAMs from different layers. These modules are tightly integrated and designed specifically for the challenges of ICH segmentation, which distinguishes them from similar ideas used for other applications and purposes.

Post-processing segmentation masks with dCRF is a common step. Following the pipeline of previous work, we refine the pseudo segmentation masks with dCRF and use the refined masks to train a U-Net.

Results

In our experiments, we used two publicly available medical image datasets for ICH segmentation, employing image-level weak supervision for each dataset.

Datasets

Computed Tomography Images for Intracranial Hemorrhage Detection and Segmentation Dataset (BCIHM)

The BCIHM (40) includes brain CT scans of 82 patients, 36 of whom were diagnosed with ICH. Each patient’s CT scan includes about 30 slices with a slice thickness of 5 mm. The patients’ mean age was 27.8 years, with a standard deviation of 19.5 years. Of the 82 patients, 46 were male and 36 were female. The annotations in this dataset include only foreground and background. We followed the original authors’ method to extract 2D slices and generate image-level labels, using a window level of 50 and a window width of 130.

BHSD (14)

This dataset contains 200 scan volumes with pixel-level annotations for five types of brain hemorrhage. The images in BHSD were collected from the Radiological Society of North America (RSNA) dataset (41), a public, multi-institutional, and multi-national collection of 874,035 head CT images from a mixed patient cohort with and without ICH, which includes image-level expert annotations from neuroradiologists on the presence and type of bleeding. In this study, we set the window level to 50 and the window width to 130, merged all hemorrhage types, converted the original three-dimensional (3D) volumes and annotations into 2D slices, and generated image-level annotations.
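The preprocessing shared by both datasets, CT windowing followed by slice-wise image-level labels, can be sketched as below. Assumptions not stated in the source: the volume is already in Hounsfield units, slices lie along the first axis, label 0 is background and every non-zero label is some hemorrhage type, and windowed intensities are rescaled linearly to [0, 1]. Function names are hypothetical.

```python
import numpy as np


def apply_window(hu: np.ndarray, level: float = 50.0,
                 width: float = 130.0) -> np.ndarray:
    """Clip Hounsfield units to [level - width/2, level + width/2]
    (here [-15, 115]) and rescale linearly to [0, 1]."""
    lo, hi = level - width / 2, level + width / 2
    return (np.clip(hu, lo, hi) - lo) / (hi - lo)


def slice_labels(mask_volume: np.ndarray) -> np.ndarray:
    """Image-level labels from a (D, H, W) label volume: merge all
    hemorrhage types (any non-zero value) and mark a slice 1 if it
    contains at least one hemorrhage pixel, else 0."""
    merged = mask_volume > 0
    return merged.reshape(merged.shape[0], -1).any(axis=1).astype(np.uint8)


print(apply_window(np.array([-100.0, -15.0, 50.0, 115.0, 300.0])))
vol = np.zeros((3, 2, 2), dtype=int)
vol[1, 0, 0] = 3                      # one pixel of hemorrhage type 3 in slice 1
print(slice_labels(vol))
```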

Evaluation metrics

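Following the typical evaluation metrics in existing WSSS studies (22,25), we used the mean Intersection over Union (mIoU) to evaluate our method:

$$
\mathrm{mIoU} = \frac{1}{K} \sum_{k=1}^{K} \frac{\left|P_{k} \cap G_{k}\right|}{\left|P_{k} \cup G_{k}\right|}
$$

Here, $K$ is the number of categories, $P_{k}$ represents the predicted area for the $k$-th category, and $G_{k}$ represents the ground truth area for the $k$-th category.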
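The mIoU formula translates directly into NumPy. This is a minimal sketch for label maps with two categories (background 0 and hemorrhage 1). Skipping a category that is absent from both prediction and ground truth, and computing over whatever pixels are passed in (one slice or a stacked dataset), are assumptions; the source does not specify how empty categories or aggregation are handled.

```python
import numpy as np


def mean_iou(pred: np.ndarray, gt: np.ndarray, num_classes: int = 2) -> float:
    """mIoU over categories 0..num_classes-1 for integer label maps."""
    ious = []
    for k in range(num_classes):
        p, g = pred == k, gt == k
        union = np.logical_or(p, g).sum()
        if union == 0:
            continue                 # category absent from both: skipped (assumption)
        ious.append(np.logical_and(p, g).sum() / union)
    return float(np.mean(ious))


pred = np.array([[1, 1], [0, 0]])
gt = np.array([[1, 0], [0, 0]])
print(mean_iou(pred, gt))   # background IoU 2/3, hemorrhage IoU 1/2
```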

Additionally, we analyzed the segmentation results of the different methods by counting the correct localizations (CL), missed localizations (ML), and false-positive localizations (FPL) of hemorrhage sites. A hemorrhage site counts as correctly localized as long as the predicted result overlaps it. CL, ML, and FPL are calculated as follows:

$$
\mathrm{CL} = \frac{N_{\text{Correct}}}{N_{\text{GT}}}, \qquad \mathrm{ML} = \frac{N_{\text{GT}} - N_{\text{Correct}}}{N_{\text{GT}}}, \qquad \mathrm{FPL} = \frac{N_{\text{FP}}}{N_{\text{Pred}}}
$$

Here, $N_{\text{Correct}}$ represents the number of correctly localized hemorrhage sites, $N_{\text{GT}}$ represents the total number of hemorrhage sites in the ground truth masks, $N_{\text{FP}}$ represents the number of falsely localized hemorrhage sites, and $N_{\text{Pred}}$ represents the total number of hemorrhage sites in the predicted results.
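Counting hemorrhage sites requires splitting masks into connected regions. The sketch below assumes each 4-connected component of a 2D mask is one site, uses the "any overlap" rule for a match, and pools counts over slices before forming the ratios. The connectivity, the per-slice (rather than 3D) treatment, and the pooling are assumptions, and all names are hypothetical.

```python
import numpy as np
from collections import deque
from typing import List, Tuple


def label_components(mask: np.ndarray) -> Tuple[np.ndarray, int]:
    """4-connected component labelling of a 2D boolean mask.
    Returns (labels, count): labels 1..count, 0 = background."""
    labels = np.zeros(mask.shape, dtype=int)
    count = 0
    h, w = mask.shape
    for y in range(h):
        for x in range(w):
            if mask[y, x] and labels[y, x] == 0:
                count += 1
                labels[y, x] = count
                queue = deque([(y, x)])
                while queue:  # breadth-first flood fill of one component
                    cy, cx = queue.popleft()
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and labels[ny, nx] == 0:
                            labels[ny, nx] = count
                            queue.append((ny, nx))
    return labels, count


def localization_counts(pred: np.ndarray, gt: np.ndarray) -> Tuple[int, int, int, int]:
    """(N_correct, N_gt, N_fp, N_pred) for one slice; any overlap is a match."""
    gt_lab, n_gt = label_components(gt.astype(bool))
    pr_lab, n_pred = label_components(pred.astype(bool))
    n_correct = sum(1 for i in range(1, n_gt + 1) if np.any(pr_lab[gt_lab == i] > 0))
    n_fp = sum(1 for j in range(1, n_pred + 1) if not np.any(gt_lab[pr_lab == j] > 0))
    return n_correct, n_gt, n_fp, n_pred


def localization_rates(counts: List[Tuple[int, int, int, int]]) -> dict:
    """Pool per-slice counts and return CL, ML and FPL as fractions."""
    n_correct, n_gt, n_fp, n_pred = (sum(c[k] for c in counts) for k in range(4))
    return {"CL": n_correct / n_gt,
            "ML": (n_gt - n_correct) / n_gt,
            "FPL": n_fp / n_pred}


gt = np.zeros((5, 5), dtype=int)
gt[0:2, 0:2] = 1          # hemorrhage site 1
gt[3:5, 3:5] = 1          # hemorrhage site 2 (missed below)
pred = np.zeros((5, 5), dtype=int)
pred[1, 1] = 1            # overlaps site 1: correct localization
pred[0, 4] = 1            # overlaps nothing: false positive
print(localization_rates([localization_counts(pred, gt)]))
```

Because every ground-truth site is either matched or missed, CL and ML always sum to 100%, consistent with the values reported in Table 2.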

During the classifier training phase, the data are divided into a training set (60% of the slices), a validation set (20%), and a test set (20%). The class proportions are balanced across the three sets, and all slices of a single patient are kept in the same set. In the pseudo segmentation mask generation phase, pseudo segmentation masks are generated for all data, and the slices are not shuffled so that spatial context information is preserved. The pseudo segmentation masks generated from the training set are used to train the U-Net. The trained U-Net then produces predictions for the validation and test sets, and the metrics are calculated against the ground truth annotations.
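One way to keep every patient's slices in a single set while roughly balancing classes is to split at the patient level, stratified by whether a patient has any ICH. The sketch below is a hypothetical illustration of that idea: the source does not say how balance was achieved, and a 60/20/20 split of patients only approximates a 60/20/20 split of slices.

```python
import random
from typing import Dict, List, Tuple


def split_by_patient(patient_ids: List[str], has_ich: Dict[str, bool],
                     seed: int = 0) -> Tuple[List[str], List[str], List[str]]:
    """Hypothetical 60/20/20 patient-level split, stratified by ICH status.
    All slices of a patient then follow that patient into one subset."""
    rng = random.Random(seed)
    train: List[str] = []
    val: List[str] = []
    test: List[str] = []
    for positive in (True, False):          # split ICH and non-ICH patients separately
        group = sorted(p for p in patient_ids if has_ich[p] == positive)
        rng.shuffle(group)
        n_train = round(0.6 * len(group))
        n_val = round(0.2 * len(group))
        train += group[:n_train]
        val += group[n_train:n_train + n_val]
        test += group[n_train + n_val:]
    return train, val, test


ids = [f"p{i}" for i in range(10)]
labels = {p: int(p[1:]) < 5 for p in ids}   # toy cohort: p0..p4 have ICH
print(split_by_patient(ids, labels))
```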

Implementation details

Our work was implemented in the PyTorch framework (PyTorch Foundation, San Francisco, CA, USA). All experiments were conducted on a single NVIDIA RTX 3090 GPU (NVIDIA, Santa Clara, CA, USA). Both classifiers were optimized with the AdamW optimizer using an initial learning rate of 1e−3, a batch size of 64, and the cross-entropy loss function. The U-Net segmentation model was trained on the pseudo segmentation masks and optimized with stochastic gradient descent (SGD) using an initial learning rate of 0.01, a batch size of 32, and a loss function that linearly combines the Dice loss and the cross-entropy loss. All models were trained for up to 200 epochs with early stopping. The input resolution for all images was 384×384.

For training a classification model, we apply a series of data augmentation strategies to the input images, including horizontal flipping, translation, scaling, rotation, color transformations, blurring, and distortion. For training a segmentation model, we apply horizontal and vertical flipping to the input images sequentially.
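The U-Net loss is described only as a linear combination of Dice loss and cross-entropy. A binary NumPy sketch of such a loss follows. The mixing weight `alpha = 0.5` and the smoothing constant are placeholders, since the actual weights are not reported.

```python
import numpy as np


def dice_ce_loss(prob: np.ndarray, target: np.ndarray,
                 alpha: float = 0.5, eps: float = 1e-6) -> float:
    """alpha * Dice loss + (1 - alpha) * binary cross-entropy.

    prob: predicted foreground probabilities; target: {0, 1} mask.
    alpha = 0.5 is a placeholder; the paper does not report the weights.
    """
    p = np.clip(prob, eps, 1 - eps)                      # avoid log(0)
    inter = (p * target).sum()
    dice = 1 - (2 * inter + eps) / (p.sum() + target.sum() + eps)
    bce = -np.mean(target * np.log(p) + (1 - target) * np.log(1 - p))
    return float(alpha * dice + (1 - alpha) * bce)


target = np.array([1.0, 0.0])
print(dice_ce_loss(np.array([1.0, 0.0]), target))   # near-perfect prediction
print(dice_ce_loss(np.array([0.2, 0.8]), target))   # poor prediction
```

The Dice term directly rewards overlap on the small hemorrhage foreground, while the cross-entropy term supplies a stable per-pixel gradient.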

Comparison with other WSSS methods using image-level labels

To further assess the effectiveness of our proposed method, we compared it with other WSSS methods that use image-level annotations. We first trained a fully supervised standard U-Net on the ground truth annotations as the baseline. Because automated ensemble (AE) (42) is designed for segmenting human organs and the other methods are designed for natural images, we applied all of them to the ICH domain using the BHSD and BCIHM datasets for a fair comparison. We followed the procedures of these methods to generate pseudo segmentation masks, adjusting their thresholds for optimal results. For AffinityNet (22), the parameter alpha, which controls the background threshold, was set to 8. For EDAM (25), the background threshold was set to 0.95, and the saliency map that highlights main objects was not used to refine the pseudo segmentation masks because it is inaccurate for medical images with ambiguous boundaries. For regional semantic contrast and aggregation (RCA) (23), the background threshold was set to 0.9, and for AE it was set to 0.15. Because AE’s pipeline does not include training a semantic segmentation network, all methods except AE used their generated pseudo segmentation masks to train a U-Net with the same hyperparameters as the fully supervised U-Net.

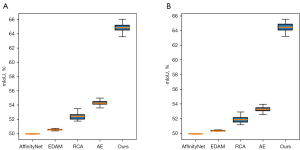

Table 1 shows the comparison results, with each method using its respective threshold. With ResNet34 and ResNet50 as classifiers, our proposed method achieved the highest mIoU on both datasets, 69.8% on BHSD and 68.9% on BCIHM, outperforming previous methods and reaching about 88.5% and 86.6%, respectively, of the fully supervised U-Net performance (78.9% and 79.6%).

Table 1

| Methods | Label level | mIoU on BHSD (%) | mIoU on BCIHM (%) |
|---|---|---|---|
| U-Net (7) | Pixel-level (FS) | 78.9 | 79.6 |
| AffinityNet (22) | Image-level (WS) | 49.3 | 49.2 |
| EDAM (25) | Image-level (WS) | 51.6 | 51.4 |
| RCA (23) | Image-level (WS) | 53.7 | 52.2 |
| AE (42) | Image-level (WS) | 54.7 | 53.5 |
| Ours (ResNet34 + ResNet50) | Image-level (WS) | 69.8* | 68.9* |
| Ours-DenseNet | Image-level (WS) | 69.4 | 68.1 |
| Ours-VGG | Image-level (WS) | 68.6 | 67.3 |

* denotes superior values. AE, automated ensemble; BCIHM, Computed Tomography Images for Intracranial Hemorrhage Detection and Segmentation Dataset; BHSD, Brain Hemorrhage Segmentation Dataset; EDAM, Embedded Discriminative Attention Mechanism; FS, fully supervised; mIoU, mean Intersection over Union; RCA, regional semantic contrast and aggregation; VGG, Visual Geometry Group; WS, weakly supervised; WSSS, weakly supervised semantic segmentation.

We also evaluated our method with different CNN architectures. For DenseNet (43), we trained DenseNet121 and DenseNet169 as classifiers; DenseNet consists of four main blocks, and we integrated the CAMs generated from the feature maps of DenseBlock1 and DenseBlock2. For the Visual Geometry Group (VGG) (6) architecture, we trained VGG16 and VGG19 as classifiers; VGG consists of five stages, and we integrated the CAMs generated from the feature maps of stage 2 and stage 3. The DenseNet variant achieved mIoUs of 69.4% and 68.1% on the BHSD and BCIHM datasets, respectively, and the VGG variant achieved 68.6% and 67.3%. Both variants outperformed the other compared methods, demonstrating that our method generalizes across CNN architectures.

Table 2 reports the CL, ML, and FPL of hemorrhage areas in the segmentation results of different methods. AffinityNet, EDAM, and RCA correctly localize fewer than 20% of all actual hemorrhage sites. AE performs best among the compared methods, with CL of 42.2% and 41.8% on the BHSD and BCIHM datasets, respectively, but remains below our method (48.1% and 48.9%). For ML, AE again performs best among the compared methods (57.8% and 58.2%), but its ML is still higher than that of our method (51.9% and 51.1%), whereas the other methods all exceed 80%. For FPL, AffinityNet performs worst, reaching about 99% on both datasets because its segmentation results are overly cluttered. EDAM and AE also perform poorly, at around 90%. RCA performs best among the compared methods, with FPL of 71.7% and 69.1% on the BHSD and BCIHM datasets, respectively, which is still higher than our method’s 49.8% and 51.2%.

Table 2

| Methods | CL on BHSD (%) ↑ | CL on BCIHM (%) ↑ | ML on BHSD (%) ↓ | ML on BCIHM (%) ↓ | FPL on BHSD (%) ↓ | FPL on BCIHM (%) ↓ |
|---|---|---|---|---|---|---|
| AffinityNet (22) | 15.4 | 15.9 | 84.6 | 84.1 | 99.0 | 98.9 |
| EDAM (25) | 13.8 | 14.5 | 86.2 | 85.5 | 90.2 | 89.6 |
| RCA (23) | 19.9 | 19.4 | 80.1 | 80.6 | 71.7 | 69.1 |
| AE (42) | 42.2 | 41.8 | 57.8 | 58.2 | 91.5 | 90.4 |
| Ours | 48.1* | 48.9* | 51.9* | 51.1* | 49.8* | 51.2* |

* denotes superior values; ↑ denotes the higher the better; ↓ denotes the lower the better. AE, automated ensemble; BCIHM, Computed Tomography Images for Intracranial Hemorrhage Detection and Segmentation Dataset; BHSD, Brain Hemorrhage Segmentation Dataset; CL, correct localization; EDAM, Embedded Discriminative Attention Mechanism; FPL, false positive localization; ML, missed localization; RCA, regional semantic contrast and aggregation.

Figure 6 compares the results of our method with those of other methods. As discussed above, because image-level annotations provide no supervision of target location and medical images have ambiguous boundaries, many models learn results that appear correct but deviate substantially. AffinityNet produced the worst segmentation results, possibly because the boundaries between background and foreground are too ambiguous for it to correctly compute the relationships between foreground and background pixels. EDAM encountered a problem similar to mode collapse in generative adversarial networks (GANs) (44): its pseudo segmentation masks always include a region below the brain. The results of RCA often deviate from the actual locations of the hemorrhage sites and have rough contours. AE achieved better segmentation results than these methods. However, although it alleviated misleading-activation, the logical ‘AND’ used by AE also caused many hemorrhage pixels to be classified as background. These analyses indicate that current WSSS methods for natural images and human organs are unsuited to the unique challenges of ICH segmentation, whereas our method addresses these challenges to a certain extent.

Furthermore, Figure 7 presents box plots of the mIoU distributions of the pseudo segmentation masks produced by the different methods on the BHSD and BCIHM datasets. The pseudo segmentation masks generated by our method achieve the highest mIoU values and show notable stability.

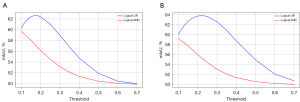
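A box plot summarizes a distribution of mIoU values (for example, one value per image). As a reference point, the sketch below computes mIoU for a single binary pseudo segmentation mask. It assumes, since this section does not say so explicitly, that mIoU is averaged over the background and hemorrhage classes with equal weight and that a class absent from both masks is skipped; the function name `binary_miou` is hypothetical.

```python
import numpy as np


def binary_miou(pred: np.ndarray, gt: np.ndarray) -> float:
    """Mean IoU of a binary mask over the background (0) and hemorrhage (1) classes.

    Assumptions (illustrative only): both classes are averaged with equal
    weight, and a class absent from both masks is skipped.
    """
    pred = np.asarray(pred).astype(bool)
    gt = np.asarray(gt).astype(bool)
    ious = []
    for p, g in ((~pred, ~gt), (pred, gt)):  # background first, then hemorrhage
        union = np.logical_or(p, g).sum()
        if union == 0:
            continue  # class absent from both prediction and ground truth
        ious.append(np.logical_and(p, g).sum() / union)
    return float(np.mean(ious))


# Toy 4x4 example: a 2x2 lesion predicted one column too far to the right.
gt = np.zeros((4, 4), dtype=np.uint8)
gt[0:2, 0:2] = 1
pred = np.zeros((4, 4), dtype=np.uint8)
pred[0:2, 1:3] = 1
# Hemorrhage IoU = 2/6, background IoU = 10/14, mean = 11/21.
print(round(binary_miou(pred, gt), 4))  # 0.5238
```

Collecting `binary_miou` over every image of a dataset would give the kind of per-image distribution that a box plot displays.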

Threshold sensitivity and aggregation logic selection

Since CAM values range between 0 and 1, with values closer to 1 indicating higher confidence, an appropriate threshold must be selected to generate pseudo segmentation masks from the processed CAMs. We also evaluated two aggregation logics, ‘OR’ and ‘AND’, for combining the results. For both datasets and both aggregation logics, we evaluated threshold values ranging from 0.1 to 0.7. Because this section focuses on threshold sensitivity and aggregation logic selection, the results reported here were not post-processed with dCRF.
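To make the two aggregation logics concrete, the following sketch binarizes two CAMs with a shared threshold and combines them pixel-wise. It assumes both CAMs are already scaled to [0, 1] and resized to a common resolution (the Layer 1 and Layer 2 CAMs differ in native resolution); `aggregate_cams` is a hypothetical helper, not the authors' code. Applying ‘OR’ to the binarized masks is equivalent to thresholding the pixel-wise maximum, and ‘AND’ to thresholding the pixel-wise minimum.

```python
import numpy as np


def aggregate_cams(cam_a: np.ndarray, cam_b: np.ndarray,
                   threshold: float = 0.2, logic: str = "or") -> np.ndarray:
    """Binarize two CAMs (values assumed in [0, 1], same shape) and combine them.

    'or'  keeps a pixel if either CAM exceeds the threshold
          (same as thresholding np.maximum(cam_a, cam_b));
    'and' keeps a pixel only if both CAMs exceed it
          (same as thresholding np.minimum(cam_a, cam_b)).
    """
    if cam_a.shape != cam_b.shape:
        raise ValueError("resize the CAMs to a common resolution first")
    mask_a = cam_a > threshold
    mask_b = cam_b > threshold
    if logic == "or":
        return np.logical_or(mask_a, mask_b)
    if logic == "and":
        return np.logical_and(mask_a, mask_b)
    raise ValueError(f"unknown logic: {logic!r}")


# Toy 2x2 CAMs: each CAM activates one pixel that the other misses.
cam_layer1 = np.array([[0.9, 0.1], [0.05, 0.3]])
cam_layer2 = np.array([[0.8, 0.6], [0.0, 0.1]])
print(aggregate_cams(cam_layer1, cam_layer2, logic="or").astype(int).tolist())   # [[1, 1], [0, 1]]
print(aggregate_cams(cam_layer1, cam_layer2, logic="and").astype(int).tolist())  # [[1, 0], [0, 0]]
```

In this toy case ‘OR’ keeps both singly activated pixels while ‘AND’ keeps only the pixel that both CAMs agree on, which illustrates why ‘AND’ tends to shrink the foreground.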

As shown in Figure 8, on both datasets the pseudo segmentation masks generated with the ‘OR’ logic are consistently of higher quality than those generated with the ‘AND’ logic, and the best ‘OR’ result exceeds the best ‘AND’ result; our method therefore uses the ‘OR’ logic to aggregate different CAMs. The ‘OR’ logic performs better because the CAMs generated from Layers 1 and 2, especially the shallowest Layer 1, contain considerable noise in non-hemorrhagic regions, but this noise has low activation values: once a suitable threshold suppresses it, ‘OR’ can retain hemorrhage pixels activated in only one layer without admitting much background, whereas ‘AND’ discards them. The choice of threshold also significantly affects the results. If the threshold is too low, a large amount of background is falsely identified as foreground, whereas if it is too high, some correct foreground is lost.

Choosing an appropriate threshold is therefore crucial. Our analysis of the generated CAMs shows that the activation values of background noise range between 0 and 0.15. Because the strategy combining PC1 with the CAM further amplifies the difference in activation between foreground and background, pixels with values greater than 0.2 can be regarded as foreground. On the BHSD dataset, the pseudo segmentation masks perform best at a threshold of 0.17, with an mIoU of 62.6%; on the BCIHM dataset, they perform best at a threshold of 0.22, with an mIoU of 63.7%. On both datasets, a binarization threshold of approximately 0.2 thus achieves close to the best performance.
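The threshold search described above amounts to a grid search that keeps the threshold with the highest mean mIoU. The sketch below is illustrative only: it assumes a step of 0.01 over [0.10, 0.70] (the range is reported, the step is not), scores masks with a compact binary mIoU averaged over background and hemorrhage, and uses a synthetic CAM in which background noise sits at 0.125 and the hemorrhage at 0.65. The name `sweep_thresholds` is hypothetical.

```python
import numpy as np


def binary_miou(pred: np.ndarray, gt: np.ndarray) -> float:
    """Mean IoU over background and hemorrhage; classes absent from both masks are skipped."""
    ious = []
    for p, g in ((~pred, ~gt), (pred, gt)):
        union = np.logical_or(p, g).sum()
        if union:
            ious.append(np.logical_and(p, g).sum() / union)
    return float(np.mean(ious))


def sweep_thresholds(cams, gts, thresholds):
    """Return (best_threshold, best_mean_miou) over lists of CAMs and boolean ground truths."""
    scores = [np.mean([binary_miou(cam > t, gt) for cam, gt in zip(cams, gts)])
              for t in thresholds]
    best = int(np.argmax(scores))  # first threshold that reaches the maximum
    return float(thresholds[best]), float(scores[best])


# Synthetic slice: low-activation background noise plus one hemorrhage region.
gt = np.zeros((8, 8), dtype=bool)
gt[2:5, 3:6] = True
cam = np.where(gt, 0.65, 0.125)
thresholds = np.round(np.arange(0.10, 0.705, 0.01), 2)  # 0.10, 0.11, ..., 0.70
best_t, best_score = sweep_thresholds([cam], [gt], thresholds)
print(best_t, best_score)  # 0.13 1.0
```

Thresholds of 0.12 and below let the noise through, thresholds of 0.65 and above drop the hemorrhage, and everything in between separates the two; on real CAMs the two populations overlap, which is why the reported optimum shifts between datasets.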

Effect of image resolution

Our proposed Shallow-Feature CAM module exploits the fine-grained information in shallow feature maps, enabling more accurate localization of small hemorrhage sites and effectively mitigating over-activation and misleading-activation. Shallow feature maps have a higher resolution than deep feature maps: in ResNet, for instance, the feature map produced by Layer 1 is eight times larger than that of Layer 4 along each spatial dimension, and therefore carries richer fine-grained spatial detail. By exploiting this detail, the Shallow-Feature CAM module achieves significantly improved performance. Higher-resolution inputs further increase the resolution of the feature maps output by every layer, so these maps contain even more spatial detail.
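The resolutions quoted here and in Table 3 follow from standard convolution arithmetic. The sketch below assumes the torchvision-style ResNet layout (a stride-2 7×7 stem convolution, a stride-2 3×3 max pooling, and stride-2 downsampling at the start of Layers 2 to 4), which applies to both ResNet34 and ResNet50; the helper names are hypothetical.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output side length of a convolution or pooling layer (floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet_stage_sizes(input_size: int) -> dict:
    """Feature-map side length after each ResNet stage, assuming a torchvision-style layout."""
    s = conv_out(input_size, kernel=7, stride=2, padding=3)  # stem convolution
    s = conv_out(s, kernel=3, stride=2, padding=1)           # max pooling
    sizes = {"layer1": s}                                    # Layer 1 keeps this resolution
    for name in ("layer2", "layer3", "layer4"):
        s = conv_out(s, kernel=3, stride=2, padding=1)       # each later stage halves it
        sizes[name] = s
    return sizes


for side in (512, 384, 256):
    sizes = resnet_stage_sizes(side)
    ratio = sizes["layer1"] // sizes["layer4"]
    print(side, sizes, "Layer 1 / Layer 4 side ratio:", ratio)
```

With a 384×384 input this gives 96×96 for Layer 1 and 48×48 for Layer 2, matching Table 3, and a Layer 1 map that is 8 times wider and taller (64 times more pixels) than Layer 4.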

We evaluated three commonly used input resolutions (512×512, 384×384, and 256×256) on the BHSD and BCIHM datasets, training ResNet34 and ResNet50 classifiers at each resolution and then generating pseudo segmentation masks with our method. These pseudo segmentation masks were not post-processed with dCRF. For clearer comparison, Table 3 lists the resolutions of the Layer 1 and Layer 2 feature maps used in our method.

Table 3

| Input | Layer 1 | Layer 2 | mIoU, BHSD (%) | mIoU, BCIHM (%) |
|---|---|---|---|---|
| 512×512 | 128×128 | 64×64 | 62.9 | 63.3 |
| 384×384 | 96×96 | 48×48 | 62.6 | 63.7 |
| 256×256 | 64×64 | 32×32 | 60.2 | 61.1 |

BCIHM, Computed Tomography Images for Intracranial Hemorrhage Detection and Segmentation Dataset; BHSD, Brain Hemorrhage Segmentation Dataset; mIoU, mean Intersection over Union.

As shown in Table 3, the lowest-resolution input (256×256) performed worst, with mIoU scores of 60.2% and 61.1% on BHSD and BCIHM, respectively: 2.4% and 2.6% lower than with the 384×384 input, and 2.7% and 2.2% lower than with the 512×512 input. Increasing the input resolution from this low level therefore substantially improves performance. However, increasing the resolution from 384×384 to 512×512 yielded only a marginal improvement of 0.3% on BHSD and a decrease of 0.4% on BCIHM, suggesting that once the input resolution is already high, increasing it further does not benefit the generation of pseudo segmentation masks. As the resolution of the Layer 1 and Layer 2 feature maps increased, so did the background noise, which reduced the quality of the final pseudo segmentation masks. Because moving from 384×384 to 512×512 did not significantly improve performance but increased the computational complexity by a factor of about 1.78 (45), we chose an input resolution of 384×384.
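The differences quoted above can be checked directly from Table 3. The sketch also shows that the 1.78-fold complexity increase equals the ratio of input pixel counts, 512²/384², which is what one would expect if the cost of a convolutional backbone scales with the number of input pixels (our interpretation, not a statement from the cited source). `TABLE3` and `miou_gain` are illustrative names.

```python
# mIoU (%) from Table 3, keyed by input side length: (BHSD, BCIHM).
TABLE3 = {
    512: (62.9, 63.3),
    384: (62.6, 63.7),
    256: (60.2, 61.1),
}


def miou_gain(higher: int, lower: int) -> tuple:
    """mIoU difference (percentage points) between two input resolutions, per dataset."""
    return tuple(round(a - b, 1) for a, b in zip(TABLE3[higher], TABLE3[lower]))


print(miou_gain(384, 256))  # (2.4, 2.6)
print(miou_gain(512, 256))  # (2.7, 2.2)
print(miou_gain(512, 384))  # (0.3, -0.4)

# Convolutional cost grows with H x W, so compare pixel counts.
print(round(512 ** 2 / 384 ** 2, 2))  # 1.78
```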

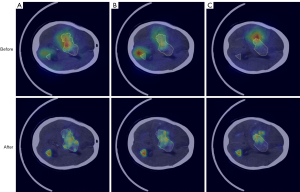

Ablation study

To validate the effectiveness of the proposed method, we conducted ablation experiments on the BHSD and BCIHM datasets. Figure 9 compares the results before and after processing with our proposed method, and Table 4 presents the ablation results for each module. Compared with the CAMs generated by Grad-CAM from the deepest feature maps of ResNet34 and ResNet50, the BME, the Shallow-Feature CAM module, and the SCF all improved the quality of the generated pseudo segmentation masks. All results in Table 4 were obtained with the optimal threshold values.

Table 4

| 34 | 50 | SF-CAM | SCF | BLA | dCRF | U-Net | mIoU, BHSD (%) | mIoU, BCIHM (%) |
|---|---|---|---|---|---|---|---|---|
| √ | √ | | | | | | 52.5 | 50.1 |
| √ | √ | | √ | | | | 53.1 | 50.3 |
| √ | | √ | | | | | 59.1 | 58.3 |
| | √ | √ | | | | | 60.7 | 60.3 |
| √ | √ | √ | | | | | 61.8 | 61.9 |
| √ | √ | √ | √ | | | | 62.6 | 63.7 |
| √ | √ | √ | √ | √ | | | 63.1 | 63.9 |
| √ | √ | √ | √ | √ | √ | | 65.1 | 64.4 |
| √ | √ | √ | √ | √ | √ | √ | 69.8* | 68.9* |

√ indicates that the module is applied; * denotes superior values. 34, ResNet34; 50, ResNet50; BCIHM, Computed Tomography Images for Intracranial Hemorrhage Detection and Segmentation Dataset; BHSD, Brain Hemorrhage Segmentation Dataset; BLA, bi-layer aggregation; CAM, class activation map; dCRF, dense conditional random field; mIoU, mean Intersection over Union; SCF, spatial context fusion; SF-CAM, Shallow-Feature CAM.

Among these modules, the Shallow-Feature CAM module contributes the most. Compared with the results obtained without it, the mIoU improved by 9.3% on the BHSD dataset and by 11.8% on the BCIHM dataset, greatly enhancing the quality of the generated pseudo segmentation masks. This demonstrates that the Shallow-Feature CAM module, by using the fine-grained information in shallow feature maps, effectively addresses the over-activation and misleading-activation that arise when previous CAM-generation methods are applied to ICH datasets.

The SCA module consists of four steps (BME, SCF, BLA, and dCRF), which we analyze individually. Compared with using the CAMs output by a single model, the BME module, which combines the CAMs from two models, improved the mIoU of the generated pseudo segmentation masks on the BHSD dataset by 2.7% relative to ResNet34 alone and by 1.1% relative to ResNet50 alone, and on the BCIHM dataset by 3.6% and 1.6%, respectively. This indicates that integrating CAMs from different models exploits the different regions each model focuses on in the same image, thereby correctly expanding the activated hemorrhage regions in CT images of ICH.

For the SCF, relative to using the BME alone, the mIoU of the generated pseudo segmentation masks improved by 0.6% on the BHSD dataset and by 0.2% on the BCIHM dataset. When the SCF was added on top of both the BME and the Shallow-Feature CAM module, the improvement was larger: 0.8% on the BHSD dataset and 1.8% on the BCIHM dataset. This indicates that the SCF effectively recovers the spatial context that is lost when 3D medical images are processed as individual slices, thereby improving the quality of the pseudo segmentation masks.

Adding BLA improved the mIoU on BHSD and BCIHM by 0.5% and 0.2%, respectively. Finally, we applied dCRF as post-processing: compared with the results using all the other proposed modules, it increased the mIoU of the pseudo segmentation masks by 2.0% on the BHSD dataset and by 0.5% on the BCIHM dataset. Each of the four SCA steps thus contributed to the final results, confirming the effectiveness of the SCA module.

Training U-Net on the pseudo segmentation masks generated by our method further improved the mIoU by 4.7% and 4.5% on BHSD and BCIHM, respectively, reaching 69.8% and 68.9%.
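The gains discussed in this ablation can be recomputed from Table 4. In the sketch below, the dictionary keys are descriptive labels for the module combinations in Table 4, with ResNet34 and ResNet50 both checked taken to correspond to the BME; they are illustrative names, not identifiers from the paper.

```python
# mIoU (%) from Table 4 as (BHSD, BCIHM); keys name the modules applied.
TABLE4 = {
    "bme": (52.5, 50.1),                     # ResNet34 + ResNet50, deepest-layer Grad-CAM
    "bme+scf": (53.1, 50.3),
    "r34+sfcam": (59.1, 58.3),
    "r50+sfcam": (60.7, 60.3),
    "bme+sfcam": (61.8, 61.9),
    "bme+sfcam+scf": (62.6, 63.7),
    "bme+sfcam+scf+bla": (63.1, 63.9),
    "bme+sfcam+scf+bla+dcrf": (65.1, 64.4),
    "full+unet": (69.8, 68.9),
}


def gain(with_module: str, without_module: str) -> tuple:
    """mIoU improvement (percentage points) on (BHSD, BCIHM)."""
    return tuple(round(a - b, 1)
                 for a, b in zip(TABLE4[with_module], TABLE4[without_module]))


GAINS = {
    "SF-CAM": gain("bme+sfcam", "bme"),
    "BME vs ResNet34": gain("bme+sfcam", "r34+sfcam"),
    "BME vs ResNet50": gain("bme+sfcam", "r50+sfcam"),
    "SCF without SF-CAM": gain("bme+scf", "bme"),
    "SCF with SF-CAM": gain("bme+sfcam+scf", "bme+sfcam"),
    "BLA": gain("bme+sfcam+scf+bla", "bme+sfcam+scf"),
    "dCRF": gain("bme+sfcam+scf+bla+dcrf", "bme+sfcam+scf+bla"),
    "U-Net": gain("full+unet", "bme+sfcam+scf+bla+dcrf"),
}
for module, (bhsd, bcihm) in GAINS.items():
    print(f"{module}: +{bhsd} (BHSD), +{bcihm} (BCIHM)")
```

Each printed gain matches a figure reported in the ablation text, which is a quick consistency check between the table and the prose.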

Discussion

ICH segmentation based on medical images is increasingly relevant to quantitative analysis in radiology and surgery, as it can assist radiologists and surgeons in diagnosis and surgical planning. However, as discussed previously, pixel-level annotated ICH datasets for deep learning-assisted diagnosis are scarce because of their high cost, whereas image-level annotated datasets are more abundant. Most current WSSS methods are designed for natural images and are not entirely suitable for medical images, particularly in how they process the feature maps fed into the classification head. For example, Wu et al. (25) directly multiplied the obtained masks by the original image to extract the object to be segmented. However, because medical images have ambiguous boundaries, the classifier struggles to correctly identify the extracted region on its own, without information from the surrounding region. Zhou et al. (23) proposed regional semantic contrast and aggregation to aggregate the features of different classes; in medical images, however, the semantic information of the background and foreground is very similar, unlike the distinct contrast in natural images. Moreover, existing WSSS methods for medical images are not entirely suitable for ICH either. Patel et al. (46) focused on optimizing WSSS results using different modalities of medical images, whereas Chen et al. (47) addressed co-occurrence issues in medical images, which do not arise in ICH datasets. The framework proposed by Ostrowski et al. (42) does not utilize shallow feature information or spatial context information.

To address these problems, we designed a method for ICH segmentation using weak image-level labels. When existing CAM-generation methods are applied directly to ICH, misleading-activation, over-activation, and incomplete-activation arise; we designed the Shallow-Feature CAM module and the SCA module to address these issues. Our results demonstrate that our method not only copes with the limited supervision provided by image-level labels, accurately localizing multiple concurrent hemorrhage sites, but also handles irregularly shaped hemorrhage sites, producing accurate hemorrhage contours. Our method achieved mIoU values of 69.8% and 68.9% on the BHSD and BCIHM datasets, respectively, outperforming the other WSSS methods compared.

However, the proposed method has certain limitations. Because we generate CAMs only from shallow feature maps, the pseudo segmentation masks often fail to fully cover larger target objects. In addition, the quality of the generated CAMs depends largely on the performance of the classification model. Our segmentation model is currently trained on pseudo segmentation masks that our method generated from the BHSD and BCIHM datasets; applying it to a new ICH dataset would require regenerating the corresponding pseudo segmentation masks with our method to train the segmentation model. The weights of the trained segmentation and classification models can also serve as pre-trained weights for fine-tuning on other ICH datasets, reducing the required training time.

From a practical perspective, this study has certain advantages for clinical deployment. The U-Net used as the final segmentation network has modest resource requirements and fast inference. Compared with some more complex deep learning models, U-Net consumes fewer resources, so it can run on existing hospital hardware without significant additional investment in hardware upgrades. Its fast inference can also deliver ICH segmentation results quickly, meeting the need for rapid clinical diagnosis and facilitating deployment.

Using the system does not require in-depth knowledge of artificial intelligence: after brief training on its interface and basic functions, doctors can begin using it. This lowers the barrier to clinical application and allows the system to be integrated quickly into the daily clinical workflow.

In addition, the trained model is expected to be integrated into the hospital’s Picture Archiving and Communication System (PACS), allowing doctors to invoke it directly from PACS during routine diagnosis to obtain ICH segmentation results. This would be convenient for doctors and would support more accurate and efficient diagnoses, ultimately serving patients better.

In future work, we may design a specialized WSSS network that combines CAMs generated from both shallow and deep feature maps and incorporates prior knowledge, to better address both the insufficient supervision inherent in WSSS and the insufficient activation coverage of larger objects in our method. The performance of this study was validated on existing publicly available datasets, whereas ICH in clinical practice is highly diverse in morphology and distribution. We therefore plan to collect CT data from multiple centers and devices for external validation and to explore transfer learning strategies that improve the model’s generalization. To reduce the negative impact of excessive background noise in the shallow feature maps, we will also consider preprocessing the input CT scans with medical image denoising methods, such as that proposed by Naqvi et al. (48), to improve the quality of the generated pseudo segmentation masks.

The feature-preserving semantic segmentation techniques of Imran et al. (49) are similar to our use of shallow features for better boundary detection, and their approach to preserving detailed vascular structures offers new ideas for improving the contour detection of ICH; future research could borrow these methods to further optimize the segmentation results. The discussion of network selection and information fusion by Ali et al. (50) relates to our bi-model ensemble approach and offers an additional perspective on effectively combining information from multiple models, which may help us explore better model fusion strategies. The work of Khan et al. (51) on improving quantum circuit performance holds promise for exploring and deploying more comprehensive, higher-performance models. Moreover, their error mitigation strategies, which reduce measurement errors and improve computational accuracy, also offer insights for further addressing the three types of activation errors in WSSS of ICH.

Conclusions

In this paper, we have proposed a method for WSSS of ICH with image-level annotations that utilizes the fine-grained information in shallow feature maps and the spatial context information across slices. First, we analyzed the limitations of applying popular CAM-generation methods to weakly supervised ICH segmentation. Based on the differences between ICH and other domains, we designed the Shallow-Feature CAM module, which generates CAMs from shallow rather than the deepest feature maps to fully exploit their fine-grained information. This allows more accurate localization of hemorrhage sites and better contour detection for complex-shaped hemorrhage sites, addressing misleading-activation and over-activation. The SCA module ensembles CAMs from different models, aggregates activation regions from different layers, and then uses spatial context information to resolve incomplete-activation and further refine the activation regions. The U-Net segmentation network trained on the pseudo segmentation masks generated by our method achieved state-of-the-art performance for image-level WSSS on the BHSD and BCIHM datasets, demonstrating the effectiveness of our method and its potential to significantly reduce the workload of radiologists in creating pixel-level annotated datasets. In future work, a specialized WSSS network could be designed that takes CAMs from both shallow and deep features as prior knowledge, providing the model with more reliable information. The proposed method could also be extended to other medical image segmentation tasks, such as the detection and segmentation of abdominal trauma, tumors, and lesions.

Acknowledgments

None.

Footnote

Reporting Checklist: The authors have completed the TRIPOD+AI reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-24-1462/rc

Funding: This work was supported by

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-24-1462/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. This study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- McGurgan IJ, Ziai WC, Werring DJ, Al-Shahi Salman R, Parry-Jones AR. Acute intracerebral haemorrhage: diagnosis and management. Pract Neurol 2020; Epub ahead of print.

- Hua W, Chen X, Wang J, Zang W, Jiang C, Ren H, Hong M, Wang J, Wu H, Wang J. Mechanisms and potential therapeutic targets for spontaneous intracerebral hemorrhage. Brain Hemorrhages 2020;1:99-104.

- Dastur CK, Yu W. Current management of spontaneous intracerebral haemorrhage. Stroke Vasc Neurol 2017;2:21-9.

- He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 27-30 June 2016; Las Vegas, NV, USA. IEEE; 2016:770-8.

- Krizhevsky A, Sutskever I, Hinton GE. ImageNet Classification with Deep Convolutional Neural Networks. In: Advances in Neural Information Processing Systems. Curran Associates, Inc.; 2012.

- Liu S, Deng W. Very deep convolutional neural network based image classification using small training sample size. In: 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR); 03-06 November 2015; Kuala Lumpur, Malaysia. IEEE; 2015:730-4.

- Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In: Navab N, Hornegger J, Wells W, Frangi A. editors. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. Cham: Springer International Publishing; 2015:234-41.

- Milletari F, Navab N, Ahmadi SA. V-Net: Fully convolutional neural networks for volumetric medical image segmentation. In: 2016 Fourth International Conference on 3D Vision (3DV). IEEE; 2016:565-71.

- Shelhamer E, Long J, Darrell T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans Pattern Anal Mach Intell 2017;39:640-51.

- Li L, Wei M, Liu B, Atchaneeyasakul K, Zhou F, Pan Z, Kumar SA, Zhang JY, Pu Y, Liebeskind DS, Scalzo F. Deep Learning for Hemorrhagic Lesion Detection and Segmentation on Brain CT Images. IEEE J Biomed Health Inform 2021;25:1646-59.

- Inkeaw P, Angkurawaranon S, Khumrin P, Inmutto N, Traisathit P, Chaijaruwanich J, Angkurawaranon C, Chitapanarux I. Automatic hemorrhage segmentation on head CT scan for traumatic brain injury using 3D deep learning model. Comput Biol Med 2022;146:105530.

- Abramova V, Clèrigues A, Quiles A, Figueredo DG, Silva Y, Pedraza S, Oliver A, Lladó X. Hemorrhagic stroke lesion segmentation using a 3D U-Net with squeeze-and-excitation blocks. Comput Med Imaging Graph 2021;90:101908.

- Khan MM, Chowdhury MEH, Arefin ASMS, Podder KK, Hossain MSA, Alqahtani A, Murugappan M, Khandakar A, Mushtak A, Nahiduzzaman M. A Deep Learning-Based Automatic Segmentation and 3D Visualization Technique for Intracranial Hemorrhage Detection Using Computed Tomography Images. Diagnostics (Basel) 2023;13:2537.

- Wu B, Xie Y, Zhang Z, Ge J, Yaxley K, Bahadir S, Wu Q, Liu Y, To MS. BHSD: A 3D Multi-class Brain Hemorrhage Segmentation Dataset. In: International Workshop on Machine Learning in Medical Imaging. Springer; 2023:147-56.

- Ahn J, Cho S, Kwak S. Weakly Supervised Learning of Instance Segmentation With Inter-Pixel Relations. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2019:2209-18.

- Li W, Yuan Y, Wang S, Zhu J, Li J, Liu J, Zhang L. Point2Mask: Point-supervised panoptic segmentation via optimal transport. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). 2023:572-81.

- Vernaza P, Chandraker M. Learning Random-Walk Label Propagation for Weakly-Supervised Semantic Segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017:7158-66.

- Khoreva A, Benenson R, Hosang J, Hein M, Schiele B. Simple Does It: Weakly Supervised Instance and Semantic Segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017:876-85.

- Zhou B, Khosla A, Lapedriza A, Oliva A, Torralba A. Learning Deep Features for Discriminative Localization. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 27-30 June 2016; Las Vegas, NV, USA. IEEE; 2016:2921-9.

- Singh KK, Lee YJ. Hide-and-seek: Forcing a network to be meticulous for weakly-supervised object and action localization. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV). 2017:3524-33.

- Wei Y, Feng J, Liang X, Cheng MM, Zhao Y, Yan S. Object Region Mining with Adversarial Erasing: A Simple Classification to Semantic Segmentation Approach. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, HI: IEEE; 2017:6488-96.

- Ahn J, Kwak S. Learning Pixel-Level Semantic Affinity With Image-Level Supervision for Weakly Supervised Semantic Segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2018:4981-90.

- Zhou T, Zhang M, Zhao F, Li J. Regional Semantic Contrast and Aggregation for Weakly Supervised Semantic Segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2022:4299-309.

- Sun G, Wang W, Dai J, Van Gool L. Mining Cross-Image Semantics for Weakly Supervised Semantic Segmentation. In: Vedaldi A, Bischof H, Brox T, Frahm JM. editors. Computer Vision – ECCV 2020. Lecture Notes in Computer Science. Cham: Springer International Publishing; 2020:347-65.

- Wu T, Huang J, Gao G, Wei X, Wei X, Luo X, Liu CH. Embedded Discriminative Attention Mechanism for Weakly Supervised Semantic Segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2021:16765-74.

- Wei Y, Liang X, Chen Y, Shen X, Cheng MM, Feng J, Zhao Y, Yan S. STC: A Simple to Complex Framework for Weakly-Supervised Semantic Segmentation. IEEE Trans Pattern Anal Mach Intell 2017;39:2314-20.

- Chen T, Yao Y, Zhang L, Wang Q, Xie GS, Shen F. Saliency guided inter- and intra-class relation constraints for weakly supervised semantic segmentation. IEEE Trans Multimedia 2022;25:1727-37.

- Wang L, Lu H, Wang Y, Feng M, Wang D, Yin B, Ruan X. Learning to Detect Salient Objects with Image-Level Supervision. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 21-26 July 2017; Honolulu, HI, USA. IEEE; 2017:3796-805.

- Chang YT, Wang Q, Hung WC, Piramuthu R, Tsai YH, Yang MH. Weakly-Supervised Semantic Segmentation via Sub-Category Exploration. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2020:8991-9000.

- Jo S, Yu IJ, Kim K. MARS: Model-agnostic Biased Object Removal without Additional Supervision for Weakly-Supervised Semantic Segmentation. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). 2023:614-23.

- Abidin ZU, Naqvi RA, Haider A, Kim HS, Jeong D, Lee SW. Recent deep learning-based brain tumor segmentation models using multi-modality magnetic resonance imaging: a prospective survey. Front Bioeng Biotechnol 2024;12:1392807.

- Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In: 2017 IEEE International Conference on Computer Vision (ICCV). 2017:618-26.

- Krähenbühl P, Koltun V. Efficient inference in fully connected CRFs with Gaussian edge potentials. In: NIPS'11: Proceedings of the 25th International Conference on Neural Information Processing Systems. Curran Associates Inc.; 2011:109-17.

- Pinciroli Vago NO, Milani F, Fraternali P, da Silva Torres R. Comparing CAM Algorithms for the Identification of Salient Image Features in Iconography Artwork Analysis. J Imaging 2021;7:106.

- Wang H, Wang Z, Du M, Yang F, Zhang Z, Ding S, Mardziel P, Hu X. Score-CAM: Score-Weighted Visual Explanations for Convolutional Neural Networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops. 2020.

- Jiang PT, Zhang CB, Hou Q, Cheng MM, Wei Y. LayerCAM: Exploring Hierarchical Class Activation Maps for Localization. IEEE Trans Image Process 2021;30:5875-88.

- Yasuki S, Taki M. CAM Back Again: Large Kernel CNNs from a Weakly Supervised Object Localization Perspective. In: 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 16-22 June 2024; Seattle, WA, USA. IEEE; 2024.

- Woo S, Debnath S, Hu R, Chen X, Liu Z, Kweon IS, Xie S. ConvNeXt V2: Co-designing and scaling ConvNets with masked autoencoders. In: 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 17-24 June 2023; Vancouver, BC, Canada. IEEE; 2023:16133-42.

- Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, Dehghani M, Minderer M, Heigold G, Gelly S, Uszkoreit J, Houlsby N. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In: International Conference on Learning Representations (ICLR). 2021.

- Hssayeni MD, Croock MS, Salman AD, Al-khafaji HF, Yahya ZA, Ghoraani B. Intracranial Hemorrhage Segmentation Using a Deep Convolutional Model. Data 2020;5:14.

- Flanders AE, Prevedello LM, Shih G, Halabi SS, Kalpathy-Cramer J, Ball R, et al. Construction of a Machine Learning Dataset through Collaboration: The RSNA 2019 Brain CT Hemorrhage Challenge. Radiol Artif Intell 2020;2:e190211.

- Ostrowski E, Prabakaran BS, Shafique M. A novel weakly supervised semantic segmentation ensemble framework for medical imaging. In: 2024 International Joint Conference on Neural Networks (IJCNN). 2024:1-10.

- Huang G, Liu Z, Van Der Maaten L, Weinberger KQ. Densely connected convolutional networks. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE; 2017:2261-9.

- Goodfellow IJ, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y. Generative adversarial nets. In: NIPS'14: Proceedings of the 27th International Conference on Neural Information Processing Systems - Volume 2. Cambridge, MA, USA: MIT Press; 2014:2672-80.

- Chollet F. Xception: Deep learning with depthwise separable convolutions. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017:1251-8.

- Patel G, Dolz J. Weakly supervised segmentation with cross-modality equivariant constraints. Med Image Anal 2022;77:102374.

- Chen Z, Tian Z, Zhu J, Li C, Du S. C-CAM: Causal CAM for Weakly Supervised Semantic Segmentation on Medical Image. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2022:11676-85.

- Naqvi RA, Haider A, Kim HS, Jeong D, Lee SW. Transformative Noise Reduction: Leveraging a Transformer-Based Deep Network for Medical Image Denoising. Mathematics 2024;12:2313.

- Imran SMA, Saleem MW, Hameed MT, Hussain A, Naqvi RA, Lee SW. Feature preserving mesh network for semantic segmentation of retinal vasculature to support ophthalmic disease analysis. Front Med (Lausanne) 2022;9:1040562.

- Ali MU, Zafar A, Tanveer J, Khan MA, Kim SH, Alsulami MM, Lee SW. Deep learning network selection and optimized information fusion for enhanced COVID-19 detection. Int J Imaging Syst Technol 2024;34:e23001.

- Khan MU, Kamran MA, Khan WR, Ibrahim MM, Ali MU, Lee SW. Error Mitigation in the NISQ Era: Applying Measurement Error Mitigation Techniques to Enhance Quantum Circuit Performance. Mathematics 2024;12:2235.