Can diffusion-based generated magnetic resonance images predict glioma methylation accurately?

Introduction

Gliomas, which account for a large proportion of primary brain tumors, pose substantial prognostic and survival challenges, with glioblastoma being the most aggressive subtype (1-4). These tumors, including diffuse astrocytic gliomas, display marked intrinsic heterogeneity in both shape and microscopic anatomy and frequently present as a complex mixture of tumor tissue and edema. Precise delineation of these internal structures is vital for accurate diagnosis, prognosis, and treatment planning.

Brain tumors can be evaluated with diverse imaging modalities. Although widely used, computed tomography (CT) is limited by its lower soft tissue contrast, which makes subtle structural details harder to discern. Magnetic resonance imaging (MRI), by contrast, offers superior visualization of soft tissue anatomy and pathology and has become an invaluable asset in diagnostic radiology. MRI nevertheless has a significant drawback: long acquisition times, which stem from the physics of image formation and the limitations of current equipment. These extended scans increase patient discomfort and reduce accessibility. Traditional acceleration techniques, such as compressed sensing (5) and parallel imaging (6), have been used to shorten scan times; however, at higher acceleration factors they often compromise image quality. Consequently, there is a pressing need for methods that improve efficiency while preserving the integrity of MR images in brain tumor diagnostics.

In recent years, deep learning has significantly advanced medical imaging, and generative models have shown considerable clinical promise (7-11). For instance, advances have been made in generating contrast-enhanced CT images from non-contrast scans (12-14), restoring low-dose CT images and generating high-resolution CT slices (15,16), and synthesizing CT angiography from non-contrast CT images (17). In parallel, significant progress has been made in MRI, particularly in improving the reliability of dynamic contrast-enhanced MR images (18) and optimizing reconstruction techniques (9,19,20). Together, these developments improve clinical efficiency and patient comfort while preserving diagnostic accuracy. However, few methods have been proposed in glioma MRI to generate time-consuming, difficult-to-acquire MR sequences [e.g., T2 and fluid attenuated inversion recovery (FLAIR)] from more readily obtainable ones (e.g., T1) (10).

Previous work on generative models has focused predominantly on generative adversarial networks (GANs). Despite their substantial promise, GANs have inherent drawbacks, such as unstable training and difficulty reproducing intricate details (18,20,21). Diffusion models (22,23), a more recently introduced class of generative models, address these limitations with more stable training and superior generation of fine detail. Applied to accelerated MRI, this capability may substantially reduce scan times, improving patient comfort and the efficiency of clinical management for patients with gliomas (9).

This study has two main goals. First, we use diffusion models to generate additional MR sequences (T1CE, T2, and FLAIR) from T1-weighted images and accelerated, undersampled acquisitions of the target sequences, thereby reducing scan duration, and we assess the generated images quantitatively and qualitatively. Second, we evaluate the consistency of glioma MGMT promoter methylation predictions between original and generated images. We aim to show that the generative approach can shorten imaging procedures while maintaining the structural fidelity crucial for precise glioma diagnosis and effective treatment planning.

This manuscript is organized as follows. The Methods section describes the dataset, algorithms, and assessment metrics. The Results section presents the experimental results, including quantitative and qualitative evaluations. The Discussion examines our findings and potential future improvements, and the Conclusions section concludes the study. Appendix 1 presents additional visual and quantitative analyses. We present this article in accordance with the TRIPOD+AI reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-24-1688/rc).

Methods

Dataset

The Brain Tumor Segmentation (BraTS) 2021 dataset (24) comprises 1,480 multi-parametric MRI scans from glioma patients, each with dimensions of 240×240×155 voxels. Each scan includes T1-weighted, T1 contrast-enhanced (T1CE, gadolinium), T2-weighted, and T2 FLAIR images. Of these scans, 1,251 are annotated with masks delineating tumor sub-regions, including enhancing tumor, necrotic tissue, and edema. In addition, the dataset provides binary labels for 695 subjects indicating the methylation status of the MGMT promoter, a molecular marker important for personalized glioma treatment (25,26) that cannot be directly determined from MRI.

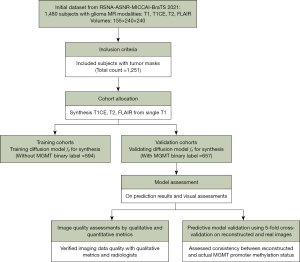

As shown in Figure 1, we developed a diffusion model that reconstructs multi-parametric MR images from a reference T1-weighted image and accelerated (undersampled) T1CE, T2, and FLAIR images. The model was initially trained on 785 scans without MGMT annotations; the acceleration process is detailed in Figure S1. This training set was used to learn the model parameters. Testing and validation were then conducted on the 695 scans annotated with MGMT methylation status, using a 5-fold cross-validation strategy. This assessment was designed to confirm that the synthetic data produced by our model closely mirror real MR images and to validate the model’s predictive reliability.
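The acceleration procedure itself is given only in Figure S1. As a rough illustration, the sketch below assumes retrospective 1D Cartesian undersampling: a 2D slice is Fourier-transformed, a fully sampled band of central phase-encoding lines plus randomly chosen outer lines is kept (about 1/R of all lines for acceleration factor R), and the zero-filled inverse transform yields an aliased "accelerated" image. The mask design, the `center_fraction` default, and both function names are hypothetical and are not the study's settings.

```python
import numpy as np

def cartesian_undersampling_mask(n_rows, n_cols, acceleration, center_fraction, rng):
    """Hypothetical 1D Cartesian mask over phase-encoding rows: a fully sampled
    central band plus randomly chosen outer rows, about n_rows / acceleration
    rows in total. Every column of a kept row is sampled."""
    n_keep = max(1, int(round(n_rows / acceleration)))
    n_center = min(n_keep, int(round(n_rows * center_fraction)))
    start = (n_rows - n_center) // 2
    center = np.arange(start, start + n_center)
    outer = np.setdiff1d(np.arange(n_rows), center)
    n_extra = n_keep - n_center
    extra = (rng.choice(outer, size=n_extra, replace=False)
             if n_extra > 0 else np.array([], dtype=int))
    mask = np.zeros((n_rows, n_cols), dtype=bool)
    mask[np.concatenate([center, extra]), :] = True
    return mask

def simulate_accelerated_slice(image, acceleration, center_fraction=0.04, seed=0):
    """Retrospective undersampling of a 2D magnitude slice: FFT to centred
    k-space, keep only the masked rows, and return the magnitude of the
    zero-filled inverse FFT (an aliased 'accelerated' image) and the mask."""
    rng = np.random.default_rng(seed)
    kspace = np.fft.fftshift(np.fft.fft2(image))
    mask = cartesian_undersampling_mask(image.shape[0], image.shape[1],
                                        acceleration, center_fraction, rng)
    zero_filled = np.fft.ifft2(np.fft.ifftshift(kspace * mask))
    return np.abs(zero_filled), mask
```

For a 240×240 slice, `simulate_accelerated_slice(slice_2d, 4)` keeps 60 of 240 lines, while `simulate_accelerated_slice(slice_2d, 32)` keeps 8, all from the central band; with an acceleration of 1 the original slice is returned unchanged.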

Deep learning algorithm

During preprocessing, the intensities within each cubic region were normalized to the floating-point range [0, 1] by min-max scaling: the minimum voxel intensity in the cube was subtracted, and the result was divided by the cube’s intensity range (maximum minus minimum).
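A minimal sketch of this min-max scaling, assuming each cube is held as a NumPy array; the small `eps` guard against constant regions is our addition and is not described in the study.

```python
import numpy as np

def minmax_normalize(volume, eps=1e-8):
    """Scale the intensities of one cube to [0, 1] using its own extrema."""
    volume = volume.astype(np.float32)
    vmin, vmax = volume.min(), volume.max()
    # eps avoids division by zero when the cube has a single intensity value
    return (volume - vmin) / max(vmax - vmin, eps)
```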

Diffusion-based reconstruction

The diffusion model was trained within the Denoising Diffusion Probabilistic Model (DDPM) (22) framework. As shown in Figure 2A, the network was a 2D U-Net fθ that processes single-channel grayscale 2D slices. It was conditioned on T1-weighted images, tumor masks, and k-space-undersampled target images to produce sequence-specific outputs, i.e., T1CE, T2, or FLAIR; a separate model was trained for each sequence. The encoder of the U-Net (27) began with a base channel width of 64, which was doubled at each level up to 1,024 while the spatial resolution was reduced; the decoder symmetrically increased the resolution while reducing the number of channels.
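The text specifies the channel widths but not how the conditioning images enter the network. The sketch below lists the encoder's channels and resolution per level, assuming one 2× downsampling per level starting from a 240×240 slice, and shows one common but hypothetical way to condition a diffusion U-Net: concatenating the noisy target slice with the T1-weighted slice, tumor mask, and zero-filled undersampled target along the channel axis. Whether the study used concatenation or another mechanism is not stated.

```python
import numpy as np

def unet_encoder_schedule(base_channels=64, max_channels=1024, input_size=240):
    """(channels, spatial size) per encoder level: channels double from
    base_channels to max_channels while the resolution halves per level."""
    levels = []
    channels, size = base_channels, input_size
    while channels <= max_channels:
        levels.append((channels, size))
        channels *= 2
        size //= 2
    return levels

def build_conditioned_input(noisy_target, t1, tumor_mask, undersampled_target):
    """Hypothetical conditioning: stack the noisy target slice x_t with the
    T1-weighted slice, tumor mask and zero-filled undersampled target along
    the channel axis, giving a (4, H, W) network input."""
    stacked = np.stack([noisy_target, t1, tumor_mask, undersampled_target], axis=0)
    return stacked.astype(np.float32)
```

Under this assumed layout, a 240×240 slice reaches 15×15 at the 1,024-channel bottleneck, so the slice size must be divisible by 16.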

For the diffusion process, a linear noise schedule from 0.0001 to 0.02 over 1,000 timesteps was used. Training used a batch size of 16 image patches for 1,000,000 iterations, and a batch size of 4 was used for final sampling. Optimization used the Adam optimizer (28) with a learning rate of 0.00002, β1=0.9, β2=0.99, and ϵ=10e−9. An exponential moving average of the parameters with a decay factor of 0.999 was maintained during training. At inference, the Denoising Diffusion Implicit Models (DDIM) (29) sampler was used to accelerate sampling to 20 steps.
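These settings can be illustrated with a NumPy sketch of the standard DDPM linear schedule and forward noising, a deterministic DDIM sampler (η = 0) over 20 evenly spaced timesteps, and the EMA weight update. `predict_noise` is a hypothetical stand-in for the trained, conditioned U-Net, assumed here to predict the added noise ε as in the original DDPM formulation; the even timestep spacing is also an assumption.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)       # linear schedule, 1,000 timesteps
alphas_bar = np.cumprod(1.0 - betas)     # cumulative product \bar{alpha}_t

def q_sample(x0, t, noise):
    """Forward process: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    return np.sqrt(alphas_bar[t]) * x0 + np.sqrt(1.0 - alphas_bar[t]) * noise

def ddim_timesteps(num_steps=20, total=T):
    """Evenly spaced timestep subsequence, ordered from noisy to clean."""
    return np.linspace(0, total - 1, num_steps).round().astype(int)[::-1]

def ddim_sample(predict_noise, shape, num_steps=20, seed=0):
    """Deterministic DDIM sampling (eta = 0). predict_noise(x_t, t) must
    return an estimate of the noise eps contained in x_t."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(shape)
    steps = ddim_timesteps(num_steps)
    for i, t in enumerate(steps):
        eps = predict_noise(x, t)
        # estimate of the clean image implied by the current noise estimate
        x0_hat = (x - np.sqrt(1.0 - alphas_bar[t]) * eps) / np.sqrt(alphas_bar[t])
        abar_prev = alphas_bar[steps[i + 1]] if i + 1 < len(steps) else 1.0
        x = np.sqrt(abar_prev) * x0_hat + np.sqrt(1.0 - abar_prev) * eps
    return x

def ema_update(ema_params, params, decay=0.999):
    """Exponential moving average of the network weights."""
    return {k: decay * ema_params[k] + (1.0 - decay) * params[k] for k in params}
```

Replacing `predict_noise` with an oracle that returns the true noise makes the sampler recover the clean image exactly, which is a convenient sanity check; 20 DDIM steps require 50 times fewer network evaluations than the full 1,000-step DDPM chain.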

MGMT promoter methylation prediction

To predict MGMT promoter methylation, a three-dimensional (3D) ResNet (30) was trained on the central 64 slices of each MR volume, each slice having a resolution of 240×240 pixels, as shown in Figure 2B. Training used a batch size of 8 for 15 epochs per fold within a 5-fold cross-validation framework. Folds were assigned randomly, with the binary labels balanced across folds. A separate model was trained for each MR sequence. The ResNet was optimized with the Adam optimizer (28) (learning rate 0.0001, β1=0.9, β2=0.99, ϵ=10e−9), and a learning rate scheduler halved the learning rate at the 10th epoch. Cross-entropy loss was used as the training objective. All training was performed on Nvidia V100 GPUs.
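The input cropping and learning rate schedule can be sketched as follows, assuming the slice axis is the last axis of a 240×240×155 BraTS volume and that halving "at the 10th epoch" means the reduced rate applies from 0-based epoch 10 onward (as with PyTorch's `torch.optim.lr_scheduler.MultiStepLR` with `milestones=[10]` and `gamma=0.5`). Both conventions are assumptions.

```python
import numpy as np

def central_slices(volume, n_slices=64, axis=-1):
    """Return the n_slices contiguous slices centred along `axis`
    (for a 155-slice BraTS volume: slices 45 to 108 inclusive)."""
    depth = volume.shape[axis]
    start = max((depth - n_slices) // 2, 0)
    idx = np.arange(start, min(start + n_slices, depth))
    return np.take(volume, idx, axis=axis)

def learning_rate(epoch, base_lr=1e-4, milestone=10, gamma=0.5):
    """Step schedule: base_lr before `milestone`, base_lr * gamma afterwards.
    `epoch` is 0-based."""
    return base_lr * (gamma if epoch >= milestone else 1.0)
```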

Image quality assessment

Image quality was assessed both quantitatively and qualitatively. For quantitative analysis, established metrics, namely root mean square error (RMSE), normalized mean absolute error (NMAE), peak signal-to-noise ratio (PSNR), and the structural similarity index measure (SSIM) (31), were used to evaluate the correspondence between synthesized and real MR images.
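For reference, the four metrics can be computed as in the sketch below. It assumes images scaled to [0, 1] (data range 1), normalizes NMAE by the reference intensity range, and uses a uniform 7×7 window for SSIM. These choices are assumptions, since the exact definitions and intensity scaling used for Table 1 are not stated, so the values need not reproduce the table. Library implementations such as `skimage.metrics.structural_similarity` use slightly different defaults (e.g., sample covariance) and may differ in the last digits.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def rmse(ref, test):
    return float(np.sqrt(np.mean((ref - test) ** 2)))

def nmae(ref, test):
    """Mean absolute error divided by the reference intensity range
    (one common normalization; assumed here)."""
    return float(np.mean(np.abs(ref - test)) / (ref.max() - ref.min()))

def psnr(ref, test, data_range=1.0):
    mse = np.mean((ref - test) ** 2)
    return float("inf") if mse == 0 else float(10 * np.log10(data_range ** 2 / mse))

def ssim(ref, test, data_range=1.0, win=7):
    """SSIM (Wang et al.) with a uniform win x win window, averaged over all
    fully contained window positions."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    x = sliding_window_view(ref.astype(np.float64), (win, win))
    y = sliding_window_view(test.astype(np.float64), (win, win))
    mx, my = x.mean(axis=(-2, -1)), y.mean(axis=(-2, -1))
    vx, vy = x.var(axis=(-2, -1)), y.var(axis=(-2, -1))
    cov = ((x - mx[..., None, None]) * (y - my[..., None, None])).mean(axis=(-2, -1))
    s = ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))
    return float(s.mean())
```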

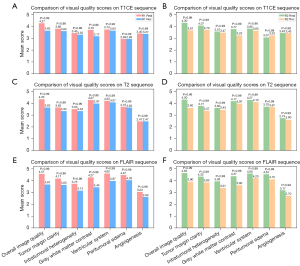

For the qualitative evaluation, a junior and a senior radiologist reviewed a randomly selected set of 50 MR images showing tumor cross-sections in the generated T1CE, T2, and FLAIR sequences. They rated overall image quality, clarity of tumor margins, intratumoral heterogeneity, gray-white matter contrast, quality of the ventricular system, visibility of peritumoral edema, and clarity of angiogenesis on a scale from 1 (lowest quality) to 5 (highest quality), as detailed in Table S1. The radiologists knew that the set contained both real and synthesized MR images; to reduce bias, the images were presented in randomized order, together with three additional real sequences for context. Statistical analysis was then performed to determine the significance and consistency of the radiologists’ evaluations.

Classification prediction assessment

The classification prediction assessment evaluated how closely generated MR images approximate real images in predicting MGMT promoter methylation status, a critical biomarker in neuro-oncology that is difficult to identify from imaging (31,32). For this task, we adopted an established method that achieved top performance in the 2021 RSNA-MICCAI Brain Tumor Radiogenomic Classification Challenge (github.com/FirasBaba/brain-tumor-radiogen), using a 5-fold training and validation protocol. The selected model, based on a 3D ResNet architecture, was applied separately to each of three sequences (T1CE, T2, and FLAIR) and analyzes contiguous slices from the central cerebral region. Despite its simple architecture, the 3D ResNet reportedly outperformed more complex models without requiring sophisticated ensemble techniques (https://www.kaggle.com/c/rsna-miccai-brain-tumor-radiogenomic-classification/discussion/281347). We kept the original model’s hyperparameters throughout training and used the resulting models to measure the area under the receiver operating characteristic curve (AUC) and to estimate each subject’s probability of MGMT promoter methylation.
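The AUC values in Table 2 can be computed without plotting the ROC curve by using its rank interpretation: the probability that a randomly chosen methylated case receives a higher score than a randomly chosen unmethylated one, with ties counted as one half. The pairwise sketch below is our illustration (O(n_pos·n_neg), adequate for a few hundred subjects) and is not taken from the challenge code.

```python
import numpy as np

def roc_auc(labels, scores):
    """AUC as the normalized Mann-Whitney U statistic: the fraction of
    (positive, negative) pairs ranked correctly, ties counting 1/2."""
    labels = np.asarray(labels).astype(bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[labels], scores[~labels]
    if len(pos) == 0 or len(neg) == 0:
        raise ValueError("AUC needs at least one positive and one negative case")
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))
```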

We then applied the validated 5-fold models to the generated images, calculating AUC values and predicted probabilities for each synthetic MR subject. The number of subjects in each fold, which were randomly assigned and balanced by label, is provided in Figure S2. To assess how closely the generated images resemble real clinical images, we statistically evaluated the consistency between the methylation probabilities predicted from generated images and those predicted from the corresponding real images.
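One simple way to obtain random folds balanced by label is to shuffle each class separately and deal its subjects round-robin across the folds. The sketch below shows this stratified assignment; it is not necessarily the procedure that produced the splits in Figure S2.

```python
import numpy as np

def stratified_folds(labels, n_folds=5, seed=0):
    """Randomly assign subjects to folds 0..n_folds-1 while keeping the
    proportion of each label (methylated / unmethylated) similar per fold."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    folds = np.empty(len(labels), dtype=int)
    for value in np.unique(labels):
        idx = np.flatnonzero(labels == value)
        rng.shuffle(idx)                            # random order within the class
        folds[idx] = np.arange(len(idx)) % n_folds  # deal out round-robin
    return folds
```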

Statistical analysis

For the image quality assessment, the visual quality scores of synthetic and real MR images were compared using the χ2 test (33) to evaluate the consistency of image quality ratings across radiologists. A P value of less than 0.05 was considered statistically significant.
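As an illustration of the χ2 comparison, the sketch below applies Pearson's test of independence to a contingency table of rating counts (rows: real vs. synthetic images; columns: scores 1 to 5). The p-value uses the series expansion of the regularized incomplete gamma function so that the example needs only NumPy; `scipy.stats.chi2_contingency` provides the same test but applies Yates' continuity correction to 2×2 tables by default, which this sketch does not. The table layout and any counts are hypothetical.

```python
import math
import numpy as np

def chi2_sf(x, dof):
    """Upper-tail probability P(X >= x) of a chi-square distribution, from
    the series for the regularized lower incomplete gamma function."""
    if x <= 0:
        return 1.0
    a, z = dof / 2.0, x / 2.0
    term = total = 1.0 / a
    n = 0
    while term > 1e-15 * total:
        n += 1
        term *= z / (a + n)
        total += term
    lower = total * math.exp(a * math.log(z) - z - math.lgamma(a))
    return max(0.0, 1.0 - lower)

def chi2_contingency_test(table):
    """Pearson chi-square test of independence (no continuity correction).
    Rating columns that are empty in every row are dropped first."""
    obs = np.asarray(table, dtype=float)
    obs = obs[:, obs.sum(axis=0) > 0]
    dof = (obs.shape[0] - 1) * (obs.shape[1] - 1)
    if dof < 1:
        raise ValueError("need at least a 2 x 2 table with non-empty columns")
    expected = obs.sum(axis=1, keepdims=True) * obs.sum(axis=0, keepdims=True) / obs.sum()
    stat = float(((obs - expected) ** 2 / expected).sum())
    return stat, dof, chi2_sf(stat, dof)
```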

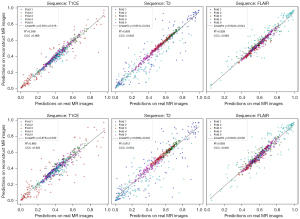

For the classification prediction assessment, the 5-fold validation models were used to run inference on both synthetic and real MR images for binary classification of MGMT methylation status. The agreement between the resulting output probabilities (range 0 to 1) was evaluated using the concordance correlation coefficient (CCC) (34) and the R2 score. A CCC greater than 0.9 was considered to indicate good consistency. The R2 score quantified the proportion of variance in the real-image predictions explained by the synthetic-image predictions; values closer to the maximum of 1 indicate closer agreement.
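Both agreement measures are short to compute. The sketch below implements Lin's CCC with population (1/n) moments and, as an assumption, interprets the "R2 score" as the coefficient of determination with the real-image probabilities as reference (the convention of `sklearn.metrics.r2_score`). The study does not state whether it used this definition or the squared Pearson correlation.

```python
import numpy as np

def concordance_ccc(x, y):
    """Lin's concordance correlation coefficient:
    2 cov(x, y) / (var(x) + var(y) + (mean(x) - mean(y))^2)."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    cov = np.mean((x - x.mean()) * (y - y.mean()))
    return float(2 * cov / (x.var() + y.var() + (x.mean() - y.mean()) ** 2))

def r2_score(y_true, y_pred):
    """Coefficient of determination 1 - SS_res / SS_tot, with y_true the
    real-image probabilities. Can be negative for poor agreement."""
    y_true, y_pred = np.asarray(y_true, dtype=float), np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1 - ss_res / ss_tot)
```

Unlike Pearson correlation, CCC penalizes a systematic offset: shifting every prediction by 0.1 leaves the correlation at 1 but lowers CCC below 1.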

Results

Quantitative evaluation

Table 1 summarizes the quantitative performance of 4-fold and 32-fold reconstructions across MR sequences, using NMAE, RMSE, PSNR, and SSIM. For the T2 sequence, 4-fold reconstructions outperformed 32-fold reconstructions (SSIM 0.963 vs. 0.944; PSNR 33.44 vs. 30.54 dB). Similarly, FLAIR reconstructions were better at 4-fold than at 32-fold acceleration (SSIM 0.963 vs. 0.937; PSNR 34.16 vs. 31.24 dB), indicating greater degradation at higher acceleration. T1CE showed the highest fidelity, with 4-fold reconstructions achieving SSIM 0.972 and PSNR 37.43 dB compared with SSIM 0.954 and PSNR 33.79 dB at 32-fold. This high fidelity for T1CE may be attributed to the close similarity between T1 and T1CE images, which share nearly identical anatomy and structure and differ mainly in vessel enhancement. Overall, 4-fold reconstructions preserve better image quality across all sequences, whereas 32-fold reconstructions are more challenging, particularly for T2 and FLAIR.

Table 1

| Inputs | T1CE NMAE | T1CE RMSE | T1CE PSNR (dB) | T1CE SSIM | T2 NMAE | T2 RMSE | T2 PSNR (dB) | T2 SSIM | FLAIR NMAE | FLAIR RMSE | FLAIR PSNR (dB) | FLAIR SSIM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 4-fold acceleration | 0.107 | 1.354 | 19.61 | 0.660 | 0.080 | 0.913 | 21.97 | 0.664 | 0.115 | 1.234 | 19.00 | 0.654 |
| Reconstruction ×4 | 0.014 | 0.126 | 37.43 | 0.972 | 0.022 | 0.161 | 33.44 | 0.963 | 0.021 | 0.147 | 34.16 | 0.963 |
| 32-fold acceleration | 0.133 | 1.604 | 17.66 | 0.648 | 0.094 | 0.971 | 20.61 | 0.647 | 0.124 | 1.257 | 18.37 | 0.648 |
| Reconstruction ×32 | 0.021 | 0.170 | 33.79 | 0.954 | 0.031 | 0.210 | 30.54 | 0.944 | 0.029 | 0.190 | 31.24 | 0.937 |

Lower values denote superior performance for NMAE and RMSE, while higher values denote superior performance for PSNR and SSIM. FLAIR, fluid attenuated inversion recovery; MR, magnetic resonance; NMAE, normalized mean absolute error; PSNR, peak signal-to-noise ratio; RMSE, root mean square error; SSIM, structural similarity index measure; T1CE, T1 contrast-enhanced.

Visual quality evaluation

The visual evaluation by the senior (R1) and junior (R2) radiologists showed that real MR images generally received higher or very similar scores compared with reconstructed images across all attributes (overall image quality, clarity of tumor margins, intratumoral heterogeneity, gray-white matter contrast, quality of the ventricular system, visibility of peritumoral edema, and clarity of angiogenesis) and sequences (T1CE, T2, and FLAIR). As illustrated in Figure 3, the visual assessments of real and reconstructed images did not differ significantly. Both radiologists rated key aspects, such as the delineation of tumor margins and the depiction of intratumoral heterogeneity, similarly for both image types. Notably, both radiologists rated the reconstructed FLAIR images highest in quality, and peritumoral edema and angiogenesis in the reconstructed T1CE and T2 images were most consistent with the real images. In contrast, gray-white matter contrast and the ventricular system showed the largest differences, although these were not statistically significant. Furthermore, the quantitative scores were positively correlated with the visual scores, reinforcing the high fidelity of the reconstructed images.

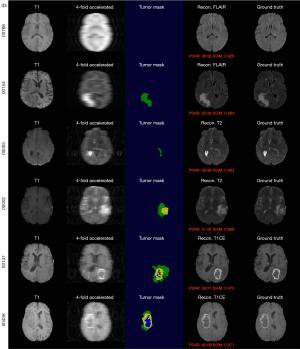

Representative cases in Figure 4 and Figure S3 show that the model reconstructs both normal and pathological brain tissue accurately. Conditioning on the tumor mask, which distinguishes tumor from non-tumor regions, allows the generation of normal and tumorous regions to be controlled precisely. Vascular regions are relatively easy to generate because vessels appear distinctly bright in certain sequences. Accurate reconstruction of the tumor core and necrotic areas, however, is considerably harder and relies heavily on the structural information provided by the tumor mask and the T1-weighted prior. Although 32-fold reconstruction is inherently more challenging than 4-fold reconstruction, the 32-fold results remain visually consistent, which we attribute to the strong generative capability of the diffusion model.

Prediction consistency evaluation

Table 2 presents the AUC for predicting MGMT promoter methylation from T1CE, T2, and FLAIR sequences, for both original and reconstructed images, assessed with 5-fold cross-validation; the corresponding receiver operating characteristic (ROC) curves are shown in Figure S4. FLAIR was the most stable sequence across reconstructions, with mean AUCs of 0.606, 0.603, and 0.601 and overall AUCs of 0.598, 0.596, and 0.594 for original, ×4, and ×32 images, respectively. In some validation folds, the reconstructed images achieved a higher AUC than the original ones, which likely reflects fold-level variability in the data. Figure 5 shows the consistency of MGMT promoter methylation predictions between original and reconstructed MR images for T1CE, T2, and FLAIR across the five folds. Each scatter plot shows the predicted probability for the reconstructed image (y-axis) against that for the original image (x-axis); points close to the diagonal indicate high consistency, whereas points farther from it indicate greater discrepancies. For ×4 acceleration, agreement was highest for T1CE (CCC =0.966, R2=0.938) and FLAIR (CCC =0.963), denoting excellent consistency. Across all sequences, the ×4 reconstructions cluster more tightly around the diagonal than the ×32 reconstructions, which show slightly more dispersion and hence minor discrepancies in MGMT prediction. These results support the robustness and reliability of the reconstruction method across sequences.

Table 2

| Sequence | Fold 1 | Fold 2 | Fold 3 | Fold 4 | Fold 5 | Overall | Mean ± SD |
|---|---|---|---|---|---|---|---|
| T1CE | 0.607 | 0.602 | 0.554 | 0.559 | 0.628 | 0.559 | 0.590±0.029 |
| T1CE (Rec. ×4) | 0.595 | 0.597 | 0.563 | 0.548 | 0.615 | 0.567 | 0.583±0.024 |
| T1CE (Rec. ×32) | 0.590 | 0.604 | 0.529 | 0.528 | 0.617 | 0.553 | 0.574±0.038 |
| T2 | 0.577 | 0.584 | 0.614 | 0.614 | 0.607 | 0.561 | 0.600±0.017 |
| T2 (Rec. ×4) | 0.572 | 0.585 | 0.626 | 0.572 | 0.616 | 0.557 | 0.594±0.023 |
| T2 (Rec. ×32) | 0.555 | 0.588 | 0.615 | 0.565 | 0.597 | 0.552 | 0.584±0.022 |
| FLAIR | 0.582 | 0.657 | 0.575 | 0.558 | 0.658 | 0.598 | 0.606±0.043 |
| FLAIR (Rec. ×4) | 0.583 | 0.670 | 0.565 | 0.526 | 0.671 | 0.596 | 0.603±0.058 |
| FLAIR (Rec. ×32) | 0.590 | 0.659 | 0.561 | 0.525 | 0.669 | 0.594 | 0.601±0.056 |

AUC, area under the curve; FLAIR, fluid attenuated inversion recovery; Rec., reconstructed; SD, standard deviation; T1CE, T1 contrast-enhanced.

Discussion

This research develops a diffusion-based model for reconstructing accelerated brain MR images, with particular emphasis on accurately reconstructing pathological regions. In clinical MRI, accelerated scanning can substantially reduce scan time, by approximately 75% with 4-fold acceleration and by about 96.9% with 32-fold acceleration. However, this speed usually comes at the cost of image quality, mainly because of aliasing artifacts that become more pronounced at higher acceleration factors. Generative models such as diffusion models are promising for reconstructing aliasing-affected images, allowing scan time to be reduced substantially while image quality is preserved. Using the T1-weighted scan as a reference, our approach reconstructs not only T1CE but also FLAIR and the more time-intensive T2 sequences. By simulating k-space undersampling, we emulate faster acquisitions and show that our method restores high-quality images from them. Trained on the well-curated BraTS dataset with tumor masks, our model captures glioma features effectively, providing a robust representation of the disease’s radiological profile.
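The scan-time figures above follow from a simple calculation, assuming acquisition time scales linearly with the number of acquired phase-encoding lines: with acceleration factor R only 1/R of the lines are acquired, so the relative time saving is

```latex
\Delta t = 1 - \frac{1}{R}, \qquad
R = 4:\; 1 - \tfrac{1}{4} = 0.75 = 75\%, \qquad
R = 32:\; 1 - \tfrac{1}{32} = 0.96875 \approx 96.9\%.
```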

We conducted qualitative and quantitative evaluations of image quality as well as predictive consistency assessments. Radiologists’ visual analysis revealed no significant differences between reconstructed and original MR images, and the predictive consistency analysis showed high agreement across all sequences (e.g., for 4-fold acceleration, T1CE: CCC =0.966, R2=0.938; T2: CCC =0.945, R2=0.893; FLAIR: CCC =0.963, R2=0.928). Although the reconstructions performed consistently in both prediction and image quality, predicting MGMT promoter methylation from imaging remains difficult because the relationship between this molecular marker and image features is subtle and complex, as reflected in the modest per-fold AUCs for original images in Table 2 (approximately 0.55 to 0.66). Other biomarkers could therefore also be used to evaluate predictive consistency on reconstructed MR images.

Tumor masks are used to guide the reconstruction of both pathological and non-pathological regions and are crucial for accurately delineating the location and overall structure of the reconstructed tumor. Incorporating the tumor mask provides explicit structural guidance that can substantially improve training. In this study, the masks were taken from the annotated BraTS dataset. In practice, however, a pretrained segmentation model would be needed to generate tumor masks from a reference sequence, i.e., full-resolution T1 images, optionally together with the undersampled T1CE, T2, or FLAIR images; this is a current limitation. That said, glioma segmentation is a well-studied problem with many established methods; for example, one study (35) reported a Dice score of 83.67% using only T1 images and showed that its method can handle missing modalities.

Diffusion models differ markedly from end-to-end generative models: despite their superior generative representation capabilities, they are limited by slow inference. In DDPM, inference requires as many denoising steps as the forward noising process (1,000 timesteps in this study). Although methods such as DDIM (29), which we used with 20 steps, and model distillation (36) can accelerate inference, they often do so at the expense of reconstruction accuracy. Further research is therefore needed to develop more efficient diffusion models optimized for MR image reconstruction.

In this study, we used the publicly available BraTS dataset with simulated acceleration. For practical application, the method must be validated on raw k-space data acquired during scanning, which are subject to real-world perturbations, and real-world tumor datasets must be collected for training. Although this paper focused on four MR sequences (T1, T1CE, T2, and FLAIR), clinical diagnostics commonly use other sequences of significant diagnostic value, e.g., proton density-weighted imaging, diffusion-weighted imaging, and magnetic resonance angiography. These sequences were not explored in this work, but their clinical importance warrants further investigation.

It is important to note that training the diffusion model requires the registration of images across different MR sequences. In this study, we used the preregistered BraTS dataset; however, in real clinical scenarios, ensuring patient immobility during MR scanning is essential. This requirement is relatively manageable for brain imaging, but presents substantial challenges for abdominal organs due to respiratory-induced motion within the abdominal cavity, which complicates the imaging process for these structures (37).

Conclusions

We have proposed a diffusion-based generative model for reconstructing accelerated brain glioma MR images, which demonstrates promising results in both image quality assessment and prediction consistency evaluation.

Acknowledgments

None.

Footnote

Reporting Checklist: The authors have completed the TRIPOD+AI reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-24-1688/rc

Funding: This work was supported in part by

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-24-1688/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Louis DN, Ellison DW, Brat DJ, Aldape K, Capper D, Hawkins C, Paulus W, Perry A, Reifenberger G, Figarella-Branger D, von Deimling A, Wesseling P. cIMPACT-NOW: a practical summary of diagnostic points from Round 1 updates. Brain Pathol 2019;29:469-72.

- Bakas S, Shukla G, Akbari H, Erus G, Sotiras A, Rathore S, Sako C, Min Ha S, Rozycki M, Shinohara RT, Bilello M, Davatzikos C. Overall survival prediction in glioblastoma patients using structural magnetic resonance imaging (MRI): advanced radiomic features may compensate for lack of advanced MRI modalities. J Med Imaging (Bellingham) 2020;7:031505.

- Louis DN, Wesseling P, Aldape K, Brat DJ, Capper D, Cree IA, et al. cIMPACT-NOW update 6: new entity and diagnostic principle recommendations of the cIMPACT-Utrecht meeting on future CNS tumor classification and grading. Brain Pathol 2020;30:844-56.

- Guo X, Shi Y, Liu D, Li Y, Chen W, Wang Y, et al. Clinical updates on gliomas and implications of the 5th edition of the WHO classification of central nervous system tumors. Front Oncol 2023;13:1131642.

- Lustig M, Donoho D, Pauly JM. Sparse MRI: The application of compressed sensing for rapid MR imaging. Magn Reson Med 2007;58:1182-95.

- Deshmane A, Gulani V, Griswold MA, Seiberlich N. Parallel MR imaging. J Magn Reson Imaging 2012;36:55-72.

- Ghodrati V, Shao J, Bydder M, Zhou Z, Yin W, Nguyen KL, Yang Y, Hu P. MR image reconstruction using deep learning: evaluation of network structure and loss functions. Quant Imaging Med Surg 2019;9:1516-27.

- Jayachandran Preetha C, Meredig H, Brugnara G, Mahmutoglu MA, Foltyn M, Isensee F, et al. Deep-learning-based synthesis of post-contrast T1-weighted MRI for tumour response assessment in neuro-oncology: a multicentre, retrospective cohort study. Lancet Digit Health 2021;3:e784-94.

- Gohla G, Hauser TK, Bombach P, Feucht D, Estler A, Bornemann A, Zerweck L, Weinbrenner E, Ernemann U, Ruff C. Speeding Up and Improving Image Quality in Glioblastoma MRI Protocol by Deep Learning Image Reconstruction. Cancers (Basel) 2024;16:1827.

- Ruff C, Bombach P, Roder C, Weinbrenner E, Artzner C, Zerweck L, Paulsen F, Hauser TK, Ernemann U, Gohla G. Multidisciplinary quantitative and qualitative assessment of IDH-mutant gliomas with full diagnostic deep learning image reconstruction. Eur J Radiol Open 2024;13:100617.

- Chukwujindu E, Faiz H, Al-Douri S, Faiz K, De Sequeira A. Role of artificial intelligence in brain tumour imaging. Eur J Radiol 2024;176:111509.

- Choi JW, Cho YJ, Ha JY, Lee SB, Lee S, Choi YH, Cheon JE, Kim WS. Generating synthetic contrast enhancement from non-contrast chest computed tomography using a generative adversarial network. Sci Rep 2021;11:20403.

- Haubold J, Hosch R, Umutlu L, Wetter A, Haubold P, Radbruch A, Forsting M, Nensa F, Koitka S. Contrast agent dose reduction in computed tomography with deep learning using a conditional generative adversarial network. Eur Radiol 2021;31:6087-95.

- Chun J, Chang JS, Oh C, Park I, Choi MS, Hong CS, Kim H, Yang G, Moon JY, Chung SY, Suh YJ, Kim JS. Synthetic contrast-enhanced computed tomography generation using a deep convolutional neural network for cardiac substructure delineation in breast cancer radiation therapy: a feasibility study. Radiat Oncol 2022;17:83.

- Chao HS, Wu YH, Siana L, Chen YM. Generating High-Resolution CT Slices from Two Image Series Using Deep-Learning-Based Resolution Enhancement Methods. Diagnostics (Basel) 2022;12:2725.

- Kim J, Kim J, Han G, Rim C, Jo H. Low-dose CT image restoration using generative adversarial networks. Inform Med Unlocked 2020;21:100468.

- Lyu J, Fu Y, Yang M, Xiong Y, Duan Q, Duan C, Wang X, Xing X, Zhang D, Lin J, Luo C, Ma X, Bian X, Hu J, Li C, Huang J, Zhang W, Zhang Y, Su S, Lou X. Generative Adversarial Network-based Noncontrast CT Angiography for Aorta and Carotid Arteries. Radiology 2023;309:e230681.

- Lee J, Jung W, Yang S, Park JH, Hwang I, Chung JW, Choi SH, Choi KS. Deep learning-based super-resolution and denoising algorithm improves reliability of dynamic contrast-enhanced MRI in diffuse glioma. Sci Rep 2024;14:25349.

- Lee EJ, Hwang J, Park S, Bae SH, Lim J, Chang YW, Hong SS, Oh E, Nam BD, Jeong J, Sung JK, Nickel D. Utility of accelerated T2-weighted turbo spin-echo imaging with deep learning reconstruction in female pelvic MRI: a multi-reader study. Eur Radiol 2023;33:7697-706.

- Almansour H, Herrmann J, Gassenmaier S, Lingg A, Nickel MD, Kannengiesser S, Arberet S, Othman AE, Afat S. Combined Deep Learning-based Super-Resolution and Partial Fourier Reconstruction for Gradient Echo Sequences in Abdominal MRI at 3 Tesla: Shortening Breath-Hold Time and Improving Image Sharpness and Lesion Conspicuity. Acad Radiol 2023;30:863-72.

- Arora S, Risteski A, Zhang Y. Theoretical limitations of encoder-decoder GAN architectures. arXiv 2017:1711.02651.

- Ho J, Jain A, Abbeel P. Denoising diffusion probabilistic models. In: Larochelle H, Ranzato M, Hadsell R, Balcan M, Lin H, editors. Advances in Neural Information Processing Systems; 2020.

- Ozdenizci O, Legenstein R. Restoring Vision in Adverse Weather Conditions With Patch-Based Denoising Diffusion Models. IEEE Trans Pattern Anal Mach Intell 2023;45:10346-57.

- Baid U, Ghodasara S, Mohan S, Bilello M, Calabrese E, Colak E, et al. The RSNA-ASNR-MICCAI BraTS 2021 benchmark on brain tumor segmentation and radiogenomic classification. arXiv 2021:2107.02314.

- Rivera AL, Pelloski CE, Gilbert MR, Colman H, De La Cruz C, Sulman EP, Bekele BN, Aldape KD. MGMT promoter methylation is predictive of response to radiotherapy and prognostic in the absence of adjuvant alkylating chemotherapy for glioblastoma. Neuro Oncol 2010;12:116-21.

- Louis DN, Perry A, Reifenberger G, von Deimling A, Figarella-Branger D, Cavenee WK, Ohgaki H, Wiestler OD, Kleihues P, Ellison DW. The 2016 World Health Organization Classification of Tumors of the Central Nervous System: a summary. Acta Neuropathol 2016;131:803-20.

- Ronneberger O, Fischer P, Brox T. U-net: Convolutional networks for biomedical image segmentation. In: Navab N, Hornegger J, Wells WM III, Frangi AF, editors. International Conference on Medical Image Computing and Computer-Assisted Intervention; 2015:234-41.

- Kingma DP, Ba J. Adam: A method for stochastic optimization. arXiv 2014:1412.6980.

- Song J, Meng C, Ermon S. Denoising diffusion implicit models. In: International Conference on Learning Representations; 2021.

- He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; 2016:770-8.

- Wang Z, Bovik AC, Sheikh HR, Simoncelli EP. Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 2004;13:600-12.

- Qureshi SA, Hussain L, Ibrar U, Alabdulkreem E, Nour MK, Alqahtani MS, Nafie FM, Mohamed A, Mohammed GP, Duong TQ. Radiogenomic classification for MGMT promoter methylation status using multi-omics fused feature space for least invasive diagnosis through mpMRI scans. Sci Rep 2023;13:3291.

- Doi M, Takahashi F, Kawasaki Y. Bayesian noninferiority test for 2 binomial probabilities as the extension of Fisher exact test. Stat Med 2017;36:4789-803.

- Lin LI. A concordance correlation coefficient to evaluate reproducibility. Biometrics 1989;45:255-68.

- Wang Z, Hong Y. A2FSeg: Adaptive multi-modal fusion network for medical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention; 2023:673-81.

- Meng C, Rombach R, Gao R, Kingma D, Ermon S, Ho J, et al. On distillation of guided diffusion models. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; 2023:14297-306.

- Taouli B, Koh DM. Diffusion-weighted MR imaging of the liver. Radiology 2010;254:47-66.