Value of deep learning model for predicting Breast Imaging Reporting and Data System 3 and 4A lesions on mammography

Introduction

Breast cancer is the most commonly diagnosed malignancy in women and a leading threat to women's lives worldwide (1). Mammography, one of the most widely used tools for the early detection of breast cancer, has been shown to reduce breast cancer mortality by 30–40% (2-4). However, dense fibroglandular tissue may obscure lesions and reduce the sensitivity of mammography (5,6); an estimated 12.5% of breast cancers are missed at mammography (7). Moreover, 75% of breast biopsies prompted by a suspicious mammographic finding ultimately prove benign, and these false-positive results expose patients to anxiety, unnecessary biopsy or treatment, and additional medical costs (8).

According to the fifth edition of the American College of Radiology (ACR) Breast Imaging Reporting and Data System (BI-RADS), BI-RADS 3 lesions (likelihood of malignancy ≤2%) require short-interval follow-up (9). In contrast, the likelihood of malignancy of BI-RADS 4 lesions (subdivided into 4A, 4B, and 4C) ranges from >2% to <95%, and histological examination is recommended for these lesions (9). However, mammographic interpretation varies substantially between radiologists (7). This variability may lead to underdiagnosis of high-risk BI-RADS 3 lesions and to overdiagnosis and overtreatment of benign BI-RADS 4A lesions (10). Accurate classification of BI-RADS 3 and 4A lesions is therefore critical for optimizing clinical outcomes: it allows patients with high-risk BI-RADS 3 lesions to receive timely intervention and spares those with benign BI-RADS 4A lesions unnecessary anxiety, biopsies, and interventions.
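For reference, the ACR fifth-edition likelihood-of-malignancy ranges behind these categories can be written as a simple lookup. This is a minimal illustrative sketch: in practice radiologists assign BI-RADS categories from imaging features rather than from a computed probability, the function names are hypothetical, and the 4A/4B/4C subranges (>2–10%, >10–50%, >50 to <95%) come from the BI-RADS atlas rather than from this study.

```python
def birads_from_likelihood(p: float) -> str:
    """Map a likelihood of malignancy (0-1) to a BI-RADS assessment category.

    Hypothetical helper using the ACR BI-RADS 5th-edition ranges:
    3: >0 to <=2%; 4A: >2 to <=10%; 4B: >10 to <=50%;
    4C: >50 to <95%; 5: >=95%. Categories 1 and 2 (essentially 0%)
    are reported together as "1-2".
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("likelihood must be between 0 and 1")
    if p == 0.0:
        return "1-2"
    if p <= 0.02:
        return "3"
    if p <= 0.10:
        return "4A"
    if p <= 0.50:
        return "4B"
    if p < 0.95:
        return "4C"
    return "5"


def recommended_management(category: str) -> str:
    """Simplified management implied by each category (illustrative only)."""
    if category == "3":
        return "short-interval follow-up"
    if category in {"4A", "4B", "4C", "5"}:
        return "histological examination"
    return "routine screening"


for p in (0.01, 0.05, 0.30, 0.90, 0.97):
    category = birads_from_likelihood(p)
    print(p, category, recommended_management(category))
```

The boundary between 3 and 4A (2%) is exactly where the management recommendation switches from follow-up to tissue diagnosis, which is why misclassification across this boundary matters clinically.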

In recent years, deep learning (DL), currently the leading approach to image classification, has attracted considerable attention for its ability to analyze images automatically (11). DL-based artificial intelligence (AI) systems have shown high accuracy in detecting malignant breast lesions on mammography (12-14). However, the ability of AI systems to assist radiologists in assessing or arbitrating BI-RADS 3 and 4A cases has not been extensively examined. This retrospective study therefore evaluated whether a mammography-based DL model improves radiologists' accuracy in differentiating BI-RADS 3 and 4A lesions. We present this article in accordance with the TRIPOD+AI reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-24-1523/rc).

Methods

Study population

This study was conducted in accordance with the Declaration of Helsinki (as revised in 2013) and was approved by the Medical Ethics Committee of Shenzhen People’s Hospital (No. LL-KY-2021624). The requirement for individual consent was waived due to the retrospective nature of the analysis.

We retrospectively collected 9,334 consecutive mammograms from Shenzhen People's Hospital and 4,040 consecutive mammograms from Shenzhen Luohu People's Hospital acquired between January and December 2020. The inclusion criteria were as follows: (I) mammographic identification of BI-RADS 3 or 4A lesions, (II) complete bilateral craniocaudal (CC) and mediolateral oblique (MLO) images, and (III) ≥2-year follow-up or histopathological confirmation. The exclusion criteria were as follows: (I) mammographic images not meeting the study requirements; and (II) a history of breast augmentation, surgery, or trauma. Of an initial cohort of 1,360 patients with BI-RADS 3 or 4A lesions, 536 were excluded (175 for prior breast surgery, 33 for breast augmentation, and 328 for incomplete follow-up). The final cohort comprised 824 patients with 846 lesions: 22 patients had bilateral lesions and 802 had unilateral lesions. All participants were female and aged 21–81 years (mean age 46±9 years).
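The cohort flow can be checked with simple arithmetic. The sketch below only re-adds the counts reported above; it assumes, as the totals imply, that each of the 22 bilateral patients contributed exactly one lesion per breast and each unilateral patient contributed one lesion.

```python
# Counts reported in the Study population paragraph.
initial_patients = 1360
exclusions = {"prior surgery": 175, "augmentation": 33, "incomplete follow-up": 328}
bilateral_patients = 22
unilateral_patients = 802

excluded = sum(exclusions.values())
included_patients = initial_patients - excluded

# Assumption: one lesion per affected breast.
lesions = 2 * bilateral_patients + unilateral_patients

# The included cohort should equal bilateral + unilateral patients.
assert included_patients == bilateral_patients + unilateral_patients

print(excluded, included_patients, lesions)  # 536 824 846
```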

Image acquisition

Mammograms were acquired with the MAMMOMAT Inspiration (Siemens Healthineers, Erlangen, Germany) and the MD full-field digital mammography system (IMS Giotto, Sasso Marconi, Italy). All participants underwent standard bilateral CC and MLO examinations.

Construction and application of the DL model

The DL model (Mammo AI V3), codeveloped by Shenzhen People's Hospital and Ping-An Technology (Shenzhen, China), performs lesion detection, segmentation, and benign-versus-malignant classification. It integrates separate detection submodels for calcified and noncalcified lesions (15). The calcification submodel uses a U-Net-based segmentation network, whereas the noncalcification submodel comprises three modules: an ipsilateral dual-view network, a bilateral dual-view network, and an integrated fusion network. The noncalcification submodel processes multiple views of the same patient, using two high-resolution detection and segmentation networks of different depths for the ipsilateral and contralateral images. By combining a nipple-detection algorithm with an object-detection algorithm, the model detects lesions jointly across views. The model then uses DenseNet-121 to jointly learn and extract lesion features while accounting for the correlation between BI-RADS categories and benign/malignant labels. The classification head outputs a continuous malignancy probability score (0 = benign, 100 = malignant), and empirically optimized thresholds separate benign from malignant findings (noncalcified: 0.60; calcified: 0.80).
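The final decision step described above can be sketched as a lesion-type-specific threshold rule. This is an illustration, not the Mammo AI V3 implementation. Because the text reports scores on a 0–100 scale but thresholds of 0.60 and 0.80, the sketch assumes scores are first normalized to 0–1 (the `scale` parameter is hypothetical). It also assumes that a score exactly equal to the threshold counts as malignant.

```python
# Thresholds reported for the two submodels (assumed to apply to 0-1 scores).
THRESHOLDS = {"noncalcified": 0.60, "calcified": 0.80}


def classify_lesion(score: float, lesion_type: str, scale: float = 1.0) -> str:
    """Return 'malignant' or 'benign' for one lesion.

    score: raw malignancy score from the classification head.
    scale: hypothetical divisor mapping the raw score to 0-1 (e.g. 100 for 0-100 scores).
    Assumption: a normalized score equal to the threshold counts as malignant.
    """
    if lesion_type not in THRESHOLDS:
        raise ValueError(f"unknown lesion type: {lesion_type}")
    normalized = score / scale
    return "malignant" if normalized >= THRESHOLDS[lesion_type] else "benign"


print(classify_lesion(0.65, "noncalcified"))        # malignant
print(classify_lesion(0.65, "calcified"))           # benign
print(classify_lesion(85, "calcified", scale=100))  # malignant
```

With a higher cutoff for calcifications, the same score can be called malignant for a noncalcified lesion but benign for a calcified one, as the first two calls show.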

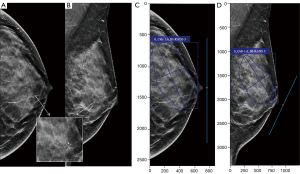

In this study, the DL model used the left-CC, left-MLO, right-CC, and right-MLO views of each patient. Before being input to the model for detection of calcified and noncalcified lesions, each image was preprocessed by removing patient-identifying information (desensitization), adjusting the image window, removing artifacts, and registering the views. Malignancy classification was formulated as a regression task that estimated a cancer risk score for each lesion, and the model then assigned a BI-RADS category from 1 to 5 (Figure 1).
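Two of the preprocessing ideas above (requiring all four standard views and adjusting the image window) can be sketched in a few lines. This is a generic illustration under stated assumptions, not the study's pipeline. The view labels, window center, and window width are hypothetical. The window is a simple linear clip-and-rescale rather than any vendor-specific lookup table.

```python
REQUIRED_VIEWS = {"L-CC", "L-MLO", "R-CC", "R-MLO"}  # hypothetical view labels


def has_four_views(views) -> bool:
    """True if the exam contains all four standard mammographic views."""
    return REQUIRED_VIEWS.issubset(views)


def apply_window(pixels, center: float, width: float, out_max: int = 255):
    """Linearly map raw intensities inside [center - width/2, center + width/2]
    to 0..out_max; values outside the window are clipped to the ends."""
    if width <= 0:
        raise ValueError("window width must be positive")
    low = center - width / 2
    high = center + width / 2
    windowed = []
    for value in pixels:
        clipped = min(max(value, low), high)
        windowed.append(round((clipped - low) / (high - low) * out_max))
    return windowed


print(has_four_views(["L-CC", "L-MLO", "R-CC", "R-MLO"]))  # True
print(has_four_views(["L-CC", "L-MLO", "R-CC"]))           # False
print(apply_window([0, 1000, 2000, 4000], center=2000, width=2000))  # [0, 0, 128, 255]
```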

DL-assisted reader study

All mammograms were anonymized and deidentified before being randomly assigned to the radiologists. Six radiologists specializing in breast imaging were divided into two groups according to experience. The junior group comprised three radiologists with 3, 5, and 6 years of experience in breast radiology (junior radiologists A, B, and C, respectively). The senior group comprised three radiologists with 15, 20, and 21 years of experience in breast imaging (senior radiologists A, B, and C, respectively). Mammograms were retrieved from the picture archiving and communication system (PACS), and the radiologists were blinded to all clinical data, other imaging studies, and pathology results. Using a crossover design, the radiologists evaluated the 846 cases as two distinct subsets, 423 with DL model assistance and 423 without, to mitigate recall bias from repeated interpretation of the same images (Figure 2).
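The case allocation can be sketched as a seeded random split into two equal, non-overlapping subsets. This is a hypothetical illustration, because the source does not describe its randomization procedure; the function name and seed are assumptions. Swapping which half is read with assistance across readers or sessions is one common way to balance a crossover design.

```python
import random


def crossover_split(case_ids, seed: int = 0):
    """Randomly split cases into two equal, non-overlapping halves.

    Returns (assisted, unassisted): one half is read with DL assistance and
    the other without, so the same image is not interpreted under both conditions.
    """
    ids = list(case_ids)
    if len(ids) % 2:
        raise ValueError("expected an even number of cases")
    rng = random.Random(seed)  # local generator for reproducibility
    rng.shuffle(ids)
    half = len(ids) // 2
    return ids[:half], ids[half:]


assisted, unassisted = crossover_split(range(846), seed=2020)
print(len(assisted), len(unassisted))  # 423 423
```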

As shown in Figure 3, the DL model provided the radiologists with four outputs: lesion boundaries, a morphological classification, a malignancy probability score, and a BI-RADS category. In clinical practice, radiologists interpreting screening and diagnostic mammograms can integrate these outputs with their own expertise and with the patient's clinical presentation and medical history to reach a comprehensive BI-RADS assessment. In this study, the radiologists independently reevaluated the mammograms and assigned BI-RADS categories 1–5. Lesions reevaluated as BI-RADS 1–3 were considered negative findings, and those reevaluated as BI-RADS 4A or higher were considered positive findings.
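The dichotomization rule above (BI-RADS 1–3 negative, 4A or higher positive) and the resulting sensitivity, specificity, and accuracy can be sketched as follows. The function names and the toy example are hypothetical. Reference labels are assumed to be 1 for reference-standard positive and 0 for negative.

```python
POSITIVE_CATEGORIES = {"4A", "4B", "4C", "5"}


def is_positive(category: str) -> bool:
    """BI-RADS 4A or higher is a positive call; 1-3 is negative."""
    return category in POSITIVE_CATEGORIES


def diagnostic_metrics(categories, truth):
    """Sensitivity, specificity, and accuracy of dichotomized BI-RADS calls.

    categories: reader BI-RADS categories as strings ("1"-"3", "4A"-"4C", "5").
    truth: reference standard, 1 = positive, 0 = negative.
    """
    tp = fp = tn = fn = 0
    for category, label in zip(categories, truth):
        called_positive = is_positive(category)
        if called_positive and label == 1:
            tp += 1
        elif called_positive:
            fp += 1
        elif label == 1:
            fn += 1
        else:
            tn += 1
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
    }


metrics = diagnostic_metrics(["3", "4A", "2", "4B", "3"], [0, 1, 0, 0, 1])
print(metrics)  # sensitivity 0.5, specificity 2/3, accuracy 0.6
```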

Statistical analysis

Statistical analysis was performed with R software (version 4.2.2; The R Foundation for Statistical Computing; http://www.R-project.org). Categorical data are presented as numbers and percentages and were compared between two groups with the chi-squared test. The diagnostic performance of the DL model and of the six radiologists with and without DL model assistance was evaluated using receiver operating characteristic (ROC) curves and the area under the curve (AUC). AUCs were compared with the DeLong test. P<0.05 was considered statistically significant.
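The AUC and its DeLong variance can be computed from the structural components of the Mann-Whitney statistic. The sketch below is a Python re-expression for illustration, not the authors' R code (in R, the pROC package's `roc.test` function offers a DeLong option). Because each reader's assisted and unassisted readings came from distinct case subsets, the sketch assumes the two AUCs are independent and uses the unpaired form of the test. A paired comparison on the same cases would also need the covariance term. Ordinal BI-RADS categories must first be mapped to numeric scores (for example 1, 2, 3, 4A=4, 4B=5, 4C=6, 5=7, a hypothetical coding).

```python
import math
from statistics import variance


def _psi(x: float, y: float) -> float:
    """Mann-Whitney kernel: 1 if the positive case outranks the negative, 0.5 for ties."""
    if x > y:
        return 1.0
    if x == y:
        return 0.5
    return 0.0


def delong_auc(scores, labels):
    """Return (AUC, DeLong variance) for one reader or model.

    scores: numeric ratings (higher = more suspicious); labels: 1 positive, 0 negative.
    Needs at least two positive and two negative cases.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    m, n = len(pos), len(neg)
    if m < 2 or n < 2:
        raise ValueError("need at least two positive and two negative cases")
    v10 = [sum(_psi(x, y) for y in neg) / n for x in pos]  # per-positive components
    v01 = [sum(_psi(x, y) for x in pos) / m for y in neg]  # per-negative components
    auc = sum(v10) / m
    var = variance(v10) / m + variance(v01) / n  # sample variances (n - 1 denominator)
    return auc, var


def compare_independent_aucs(auc1, var1, auc2, var2):
    """Two-sided z test for two AUCs estimated on independent case sets."""
    z = (auc1 - auc2) / math.sqrt(var1 + var2)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


auc, var = delong_auc([0.9, 0.8, 0.4, 0.3, 0.2, 0.7], [1, 1, 1, 0, 0, 0])
print(round(auc, 4), round(var, 5))  # 0.8889 0.02469
```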

Results

Characteristics of the study population

This study included 824 patients with 846 breast lesions originally classified as BI-RADS 3 or 4A on mammography. Longitudinal follow-up data (≥24 months) were obtained for 578 evaluable lesions. Lesions that remained stable or disappeared during follow-up were defined as follow-up negative (n=554), and those that progressed or were upgraded to suspicious during follow-up were defined as follow-up positive (n=24). The characteristics of the study population are summarized in Table 1.
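The follow-up reference standard can be expressed as a small labeling rule. This sketch is an interpretation under stated assumptions. The course labels are hypothetical. Progression or upgrade is counted as positive whenever it occurs. A stable or resolved lesion is counted as negative only after at least 24 months; otherwise it is treated as not evaluable.

```python
MIN_FOLLOW_UP_MONTHS = 24


def follow_up_label(months: float, course: str):
    """Label a followed-up lesion as 'negative', 'positive', or None (not evaluable).

    course: hypothetical labels 'stable', 'disappeared', 'progressed', 'upgraded'.
    Assumption: progression or upgrade is positive at any time point.
    """
    if course in {"progressed", "upgraded"}:
        return "positive"
    if course not in {"stable", "disappeared"}:
        raise ValueError(f"unknown course: {course}")
    if months < MIN_FOLLOW_UP_MONTHS:
        return None  # too short to confirm that the lesion is benign
    return "negative"


print(follow_up_label(30, "stable"))      # negative
print(follow_up_label(12, "stable"))      # None
print(follow_up_label(10, "progressed"))  # positive
```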

Table 1

| Characteristic | Subgroup | Follow-up negative | Follow-up positive | Histopathology negative | Histopathology positive | Total |
|---|---|---|---|---|---|---|
| Age (years) | ≤40 | 115 | 4 | 79 | 7 | 205 |
| | 41–50 | 300 | 13 | 93 | 29 | 435 |
| | 51–60 | 115 | 4 | 38 | 13 | 170 |
| | ≥61 | 24 | 3 | 2 | 7 | 36 |
| Breast density category | a | 9 | 2 | 2 | 1 | 14 |
| | b | 50 | 2 | 20 | 3 | 75 |
| | c | 334 | 10 | 128 | 37 | 509 |
| | d | 161 | 10 | 62 | 15 | 248 |
| Mammography findings | Calcification | 126 | 10 | 67 | 26 | 229 |
| | Mass | 356 | 10 | 128 | 26 | 520 |
| | Architectural distortion | 5 | 0 | 8 | 0 | 13 |
| | Asymmetries | 67 | 4 | 9 | 4 | 84 |
| BI-RADS category | 3 | 499 | 11 | 100 | 22 | 632 |
| | 4A | 55 | 13 | 112 | 34 | 214 |
| Total | – | 554 | 24 | 212 | 56 | 846 |

Values are numbers of lesions. BI-RADS, Breast Imaging Reporting and Data System.

Lesions suspected to be high risk or malignant were verified histopathologically by percutaneous core needle biopsy or surgical excision. Malignant lesions and high-risk lesions (intraductal papilloma, complex sclerosing adenosis, and borderline phyllodes tumor) were defined as pathologically positive. The remaining biopsy-confirmed benign lesions were considered eligible for imaging surveillance. Of the 846 lesions, 268 had definitive histopathological verification, comprising 56 pathologically positive and 212 pathologically negative lesions. The detailed histopathology results are shown in Table 2.
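Pooling the follow-up and histopathology arms gives the reference standard against which sensitivity, specificity, and AUC are computed. The tally below simply re-adds the Table 1 totals to show the resulting case mix (80 positive and 766 negative lesions). The dictionary layout and helper name are illustrative only.

```python
from collections import Counter

# (reference source, outcome) -> number of lesions, from the Table 1 totals.
TABLE1_TOTALS = {
    ("follow-up", "negative"): 554,
    ("follow-up", "positive"): 24,
    ("histopathology", "negative"): 212,
    ("histopathology", "positive"): 56,
}


def reference_label(outcome: str) -> int:
    """1 = reference-standard positive (progression on follow-up, or malignant/high-risk pathology)."""
    return 1 if outcome == "positive" else 0


by_label = Counter()
by_source = Counter()
for (source, outcome), n in TABLE1_TOTALS.items():
    by_label[reference_label(outcome)] += n
    by_source[source] += n

prevalence = by_label[1] / sum(by_label.values())
print(by_label[1], by_label[0], by_source["histopathology"], by_source["follow-up"])
# 80 766 268 578
print(f"{prevalence:.1%}")  # 9.5%
```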

Table 2

| Histopathology result | Histopathology type | n (%) |
|---|---|---|
| Histopathology positive (n=56) | | |
| Malignant (n=41) | Invasive carcinoma | 21 (37.50) |
| | Ductal carcinoma in situ | 15 (26.79) |
| | Encapsulated papillary carcinoma | 1 (1.79) |
| | Mucinous adenocarcinoma | 4 (7.14) |
| High risk (n=15) | Intraductal papilloma | 12 (21.43) |
| | Complex sclerosing adenosis | 2 (3.57) |
| | Borderline phyllodes tumor | 1 (1.79) |
| Histopathology negative (n=212) | Adenoma | 2 (0.94) |
| | Pyogenic change | 3 (1.42) |
| | Granulomatous lobular mastitis | 3 (1.42) |
| | Cyst | 3 (1.42) |
| | Fibroadenoma | 98 (46.23) |
| | Adenosis | 99 (46.70) |
| | Fibroadenolipoma | 1 (0.47) |
| | Normal breast tissue | 1 (0.47) |
| | Lipoma | 1 (0.47) |
| | Adenomyoepithelioma | 1 (0.47) |

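n, number of lesions. Percentages are calculated within the histopathology-positive (n=56) and histopathology-negative (n=212) groups.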

Comparison of diagnostic performances between the DL model and radiologists with and without DL model assistance

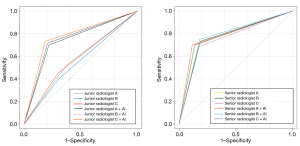

The AUC of the DL model for diagnosing BI-RADS 3 and 4A lesions was 0.74, higher than those of the three junior radiologists (0.57, 0.55, and 0.58) but lower than those of the three senior radiologists (0.78, 0.77, and 0.76). With DL model assistance, sensitivity improved significantly for junior radiologists B (P=0.01) and C (P=0.02), and specificity and accuracy improved significantly for all three junior radiologists (P<0.001). The AUCs of junior radiologists A, B, and C also increased significantly (P=0.009, P<0.001, and P=0.003, respectively).

In contrast, the senior radiologists showed no significant differences in sensitivity, specificity, accuracy, or AUC with DL model assistance (all P>0.05; Table 3 and Figure 4).

Table 3

| Group | Sensitivity | Specificity | Accuracy | AUC (95% CI) | Z value | P value |
|---|---|---|---|---|---|---|
| Junior radiologist A | | | | | 2.61 | 0.009 |
| Without DL model | 0.52 | 0.63 | 0.61 | 0.57 (0.50–0.65) | | |
| With DL model | 0.70 | 0.78 | 0.78 | 0.74 (0.70–0.78) | | |
| Junior radiologist B | | | | | 3.42 | <0.001 |
| Without DL model | 0.40 | 0.69 | 0.68 | 0.55 (0.41–0.68) | | |
| With DL model | 0.72 | 0.79 | 0.78 | 0.77 (0.73–0.81) | | |
| Junior radiologist C | | | | | 3.01 | 0.003 |
| Without DL model | 0.45 | 0.70 | 0.69 | 0.58 (0.44–0.71) | | |
| With DL model | 0.73 | 0.81 | 0.80 | 0.77 (0.73–0.81) | | |
| Senior radiologist A | | | | | 0.23 | 0.819 |
| Without DL model | 0.70 | 0.86 | 0.85 | 0.78 (0.66–0.90) | | |
| With DL model | 0.70 | 0.88 | 0.86 | 0.79 (0.72–0.86) | | |
| Senior radiologist B | | | | | 0.20 | 0.843 |
| Without DL model | 0.73 | 0.81 | 0.80 | 0.77 (0.70–0.84) | | |
| With DL model | 0.75 | 0.82 | 0.82 | 0.78 (0.67–0.90) | | |
| Senior radiologist C | | | | | 0.41 | 0.681 |
| Without DL model | 0.68 | 0.83 | 0.81 | 0.76 (0.68–0.83) | | |
| With DL model | 0.70 | 0.86 | 0.86 | 0.78 (0.66–0.90) | | |
| DL model | 0.66 | 0.81 | 0.80 | 0.74 (0.68–0.80) | – | – |

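Z and P values are from the DeLong test comparing each reader's AUC with and without DL model assistance. AUC, area under the curve; BI-RADS, Breast Imaging Reporting and Data System; CI, confidence interval; DL, deep learning.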

Comparison of diagnostic performance of radiologists with and without DL model assistance for different breast density categories, mammography findings, and BI-RADS categories

For dense breasts, DL assistance significantly increased the AUCs of junior radiologists A and B for diagnosing BI-RADS 3 and 4A lesions (P=0.04 and P<0.001, respectively), whereas the AUCs of junior radiologist C and the three senior radiologists did not change significantly (P≥0.05). For nondense breasts, only junior radiologist A showed a significant AUC improvement (P=0.03), and the other readers showed no significant change with DL assistance (P>0.05).

For noncalcified lesions, DL assistance significantly increased the AUCs of junior radiologists B and C for diagnosing BI-RADS 3 and 4A lesions (P=0.001 and P=0.03, respectively), whereas the other radiologists showed no significant changes (P>0.05). For calcified lesions, DL assistance did not significantly change the AUC of any of the six radiologists (P>0.05).

For lesions originally classified as BI-RADS 3, only junior radiologists B and C showed significant AUC improvements (P=0.01 and P=0.03, respectively). For BI-RADS 4A lesions, the DL model significantly improved the AUCs of junior radiologists A and C and senior radiologist A (P=0.01, P=0.03, and P=0.02, respectively; Figure 4) but not those of the other readers (P>0.05; Table 4).

Table 4

| Group | Dense breasts | Nondense breasts | Calcified lesions | Noncalcified lesions | BI-RADS 3 | BI-RADS 4A |
|---|---|---|---|---|---|---|
| Junior radiologist A | | | | | | |
| Without DL model | 0.58 | 0.56 | 0.56 | 0.57 | 0.58 | 0.52 |
| With DL model | 0.72 | 0.85 | 0.72 | 0.72 | 0.59 | 0.71 |
| Z value | 2.05 | 2.17 | 1.75 | 1.61 | 0.09 | 2.65 |
| P value | 0.04 | 0.03 | 0.08 | 0.11 | 0.93 | 0.01 |
| Junior radiologist B | | | | | | |
| Without DL model | 0.50 | 0.60 | 0.54 | 0.54 | 0.56 | 0.62 |
| With DL model | 0.79 | 0.84 | 0.74 | 0.82 | 0.83 | 0.67 |
| Z value | 4.24 | 1.79 | 1.91 | 3.19 | 2.78 | 0.59 |
| P value | <0.001 | 0.07 | 0.06 | 0.001 | 0.01 | 0.56 |
| Junior radiologist C | | | | | | |
| Without DL model | 0.56 | 0.63 | 0.73 | 0.53 | 0.50 | 0.59 |
| With DL model | 0.70 | 0.72 | 0.75 | 0.68 | 0.72 | 0.78 |
| Z value | 1.95 | 0.44 | 0.78 | 2.19 | 2.22 | 2.12 |
| P value | 0.05 | 0.66 | 0.44 | 0.03 | 0.03 | 0.03 |
| Senior radiologist A | | | | | | |
| Without DL model | 0.79 | 0.73 | 0.81 | 0.75 | 0.65 | 0.59 |
| With DL model | 0.79 | 0.82 | 0.82 | 0.77 | 0.81 | 0.74 |
| Z value | 0.07 | 0.43 | 0.13 | 0.27 | 1.54 | 2.28 |
| P value | 0.94 | 0.67 | 0.90 | 0.79 | 0.12 | 0.02 |
| Senior radiologist B | | | | | | |
| Without DL model | 0.76 | 0.89 | 0.76 | 0.75 | 0.77 | 0.73 |
| With DL model | 0.77 | 0.84 | 0.80 | 0.81 | 0.79 | 0.74 |
| Z value | 0.14 | 0.93 | 0.41 | 0.86 | 0.23 | 0.11 |
| P value | 0.89 | 0.35 | 0.68 | 0.39 | 0.81 | 0.92 |
| Senior radiologist C | | | | | | |
| Without DL model | 0.75 | 0.79 | 0.71 | 0.76 | 0.70 | 0.69 |
| With DL model | 0.76 | 0.90 | 0.80 | 0.79 | 0.78 | 0.73 |
| Z value | 0.09 | 1.02 | 0.94 | 0.42 | 0.77 | 0.53 |
| P value | 0.93 | 0.31 | 0.34 | 0.68 | 0.44 | 0.60 |

Values in the rows without and with the DL model are AUCs; Z and P values are from the DeLong test comparing them. Nondense breasts include categories a and b; dense breasts include categories c and d; noncalcified lesions include masses, architectural distortions, and asymmetries. AUC, area under the curve; BI-RADS, Breast Imaging Reporting and Data System; DL, deep learning.
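The subgroup definitions in this footnote can be sketched as two mapping functions and applied to the Table 1 totals. The function names and finding labels are hypothetical. The sketch simply confirms that the subgroups partition all 846 lesions (757 dense vs. 89 nondense; 229 calcified vs. 617 noncalcified).

```python
from collections import Counter

NONCALCIFIED_FINDINGS = {"mass", "architectural distortion", "asymmetry"}


def density_group(category: str) -> str:
    """ACR density a/b -> nondense; c/d -> dense."""
    if category in {"a", "b"}:
        return "nondense"
    if category in {"c", "d"}:
        return "dense"
    raise ValueError(f"unknown density category: {category}")


def finding_group(finding: str) -> str:
    """Calcification -> calcified; mass, distortion, asymmetry -> noncalcified."""
    if finding == "calcification":
        return "calcified"
    if finding in NONCALCIFIED_FINDINGS:
        return "noncalcified"
    raise ValueError(f"unknown finding: {finding}")


# Lesion totals from Table 1.
density_counts = {"a": 14, "b": 75, "c": 509, "d": 248}
finding_counts = {"calcification": 229, "mass": 520,
                  "architectural distortion": 13, "asymmetry": 84}

by_density = Counter()
for category, n in density_counts.items():
    by_density[density_group(category)] += n

by_finding = Counter()
for finding, n in finding_counts.items():
    by_finding[finding_group(finding)] += n

print(dict(by_density))  # {'nondense': 89, 'dense': 757}
print(dict(by_finding))  # {'calcified': 229, 'noncalcified': 617}
```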

Discussion

The ACR BI-RADS lexicon has established a much-needed standard for mammographic interpretation and malignancy risk stratification (9,16). However, because radiologists apply the BI-RADS criteria inconsistently, biopsy decisions vary considerably, resulting in diagnostic delays for some patients and unnecessary biopsies for others (17). In clinical practice, 0.9–7.9% of lesions assessed as BI-RADS 3 are later upgraded to suspicious and require biopsy (18). Moreover, 70% of false-positive lesions on mammography are classified as BI-RADS 4; among these, BI-RADS 4A lesions have a higher rate of overdiagnosis than 4B and 4C lesions, and most 4A lesions prove benign at biopsy (19). In China, high costs and limited medical resources make it difficult to biopsy all BI-RADS 4A lesions (17,20), so some patients opt for follow-up monitoring, which may delay intervention for breast cancer. In practice, clinical decisions often combine the physician's experience with the patient's clinical condition, physical examination, and imaging results. This study included 632 BI-RADS 3 lesions: 510 were followed up, of which 11 progressed and required subsequent biopsy, and 122 were biopsied, of which 22 were pathologically positive (malignant or high risk). Thus, 5.2% (33/632) of BI-RADS 3 lesions were positive, indicating that these lesions were missed on the initial mammogram. With DL assistance, the three junior radiologists reclassified 42.4% (14/33), 39.4% (13/33), and 33.3% (11/33) of these lesions as BI-RADS 4A or higher, respectively. The study also included 214 BI-RADS 4A lesions: 146 were biopsied, of which only 34 were pathologically positive, and 68 were followed up, of which 55 remained stable or disappeared. The original reports therefore led to 112 biopsies of benign lesions, and 13 BI-RADS 4A lesions progressed during follow-up. The overbiopsy and overdiagnosis rates of BI-RADS 4A lesions were thus 52.3% (112/214) and 25.7% (55/214), respectively. These findings highlight the need for a more effective and accurate method to assist in the diagnosis of BI-RADS 3 and 4A lesions.
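The rates quoted in this paragraph follow directly from the Table 1 counts, and the short calculation below reproduces them. The reader rates are listed in the order the text reports them, and the helper `pct` is hypothetical.

```python
def pct(numerator: int, denominator: int) -> float:
    """Percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


# BI-RADS 3: 11 progressed on follow-up + 22 pathologically positive.
birads3_total = 632
birads3_positive = 11 + 22
missed_rate = pct(birads3_positive, birads3_total)

# Positive BI-RADS 3 lesions reclassified as 4A or higher by each junior reader with DL.
upgraded = [14, 13, 11]
upgrade_rates = [pct(k, birads3_positive) for k in upgraded]

# BI-RADS 4A: 112 benign biopsies and 55 follow-up-negative lesions out of 214.
birads4a_total = 214
overbiopsy_rate = pct(112, birads4a_total)
overdiagnosis_rate = pct(55, birads4a_total)

print(missed_rate, upgrade_rates, overbiopsy_rate, overdiagnosis_rate)
# 5.2 [42.4, 39.4, 33.3] 52.3 25.7
```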

Similarly, in a study of breast ultrasonography, Choi et al. found that a DL-based AI system mainly modified BI-RADS 3 and 4A assessments while leaving assessments of clearly benign (BI-RADS 2) and highly suspicious (BI-RADS 4B–5) lesions stable. For BI-RADS 3 or 4A lesions, for which the need for biopsy is difficult to determine, DL-based AI systems can improve radiologists' diagnostic performance and thus support appropriate biopsy decisions (21). Ouyang et al. constructed a predictive model that combined DL with relevant clinical factors to identify BI-RADS 4A-positive lesions on mammography; the model achieved AUCs of 0.85, 0.82, and 0.84 in the training, validation, and external validation sets, respectively (22), suggesting that it could help radiologists improve diagnostic precision and reduce unnecessary biopsies in patients with BI-RADS 4A lesions. Meng et al. found that the proportion of lesions downgraded by their DL model was 90.73%, 84.76%, and 80.19% for BI-RADS categories 4A, 4B, and 4C, respectively, which could help less experienced residents reduce unnecessary biopsies (20). In line with this, Zhao et al. reported, for ultrasound BI-RADS 4a lesions, that DL integration significantly increased residents' specificity (from 19.47–48.67% to 46.02–76.11%) and overall performance (AUC from 0.62–0.74 to 0.71–0.85; P<0.001), supporting its role as an adjunct that helps residents downgrade 4A lesions and avoid unnecessary biopsies (23).
Our study likewise showed substantial improvements in the junior radiologists' performance with DL assistance: specificity increased from 0.63, 0.69, and 0.70 to 0.78, 0.79, and 0.81, respectively, and the AUC increased from 0.57, 0.55, and 0.58 to 0.74, 0.77, and 0.77, respectively (all P<0.01). These results indicate that the DL model can help junior radiologists classify BI-RADS 3 and 4A lesions more accurately and may reduce missed diagnoses and unnecessary biopsies.

Kim et al. found that AI assistance increased radiologists' sensitivity and specificity in differentiating noncalcified lesions. Without AI, the radiologists showed high sensitivity in fatty breasts, whereas in dense breasts their performance improved markedly with AI assistance (24). This is consistent with Sasaki et al., who reported that the ability of AI to distinguish benign from malignant breast lesions was not affected by breast density (25). In our study, DL assistance significantly improved the performance of two of the three junior radiologists for BI-RADS 3 and 4A lesions in dense breasts (A and B) and for noncalcified lesions (B and C). A plausible explanation is that radiologists' performance declines as breast density increases, because dense parenchyma is more likely to mask cancers on mammograms. The sensitivity of AI differs far less between nondense and dense breasts than does that of radiologists, so AI assistance yields the largest gains in dense breasts (24). The DL model may therefore help radiologists reduce missed diagnoses and misdiagnoses caused by dense breast tissue or inconspicuous lesion features.

This study has several limitations. First, clinical factors such as family history and clinical symptoms were not incorporated into the DL model, which may limit its ability to perform a comprehensive analysis and cause its performance in clinical practice to differ from that observed in this study. Second, patients without follow-up or pathology results were excluded, so this retrospective study may be subject to selection bias. Third, although the mammograms came from two institutions and were acquired with two different devices, which provides some support for the model's generalizability, prospective multicenter studies with larger samples and devices from more manufacturers are still needed to verify the efficacy of the proposed DL model.

Conclusions

The DL model significantly improved the diagnostic performance of junior radiologists in assessing BI-RADS 3 and 4A lesions and may help reduce missed diagnoses, unnecessary biopsies, and the associated medical costs.

Acknowledgments

The authors would like to express their gratitude to Qingshan Chen for his invaluable statistical expertise and guidance throughout the research process.

Footnote

Reporting Checklist: The authors have completed the TRIPOD+AI reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-24-1523/rc

Funding: This study was supported by

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-24-1523/coif). Y.Z. is an employee of Ping-An Technology. The other authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. This study was conducted in accordance with the Declaration of Helsinki (as revised in 2013) and was approved by the Medical Ethics Committee of Shenzhen People’s Hospital (No. LL-KY-2021624). The requirement for individual consent was waived due to the retrospective nature of the analysis.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Bray F, Laversanne M, Sung H, Ferlay J, Siegel RL, Soerjomataram I, Jemal A. Global cancer statistics 2022: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin 2024;74:229-63.

- Duffy SW, Tabár L, Yen AM, Dean PB, Smith RA, Jonsson H, et al. Mammography screening reduces rates of advanced and fatal breast cancers: Results in 549,091 women. Cancer 2020;126:2971-9.

- Tabár L, Yen AM, Wu WY, Chen SL, Chiu SY, Fann JC, Ku MM, Smith RA, Duffy SW, Chen TH. Insights from the breast cancer screening trials: how screening affects the natural history of breast cancer and implications for evaluating service screening programs. Breast J 2015;21:13-20.

- Sechopoulos I, Teuwen J, Mann R. Artificial intelligence for breast cancer detection in mammography and digital breast tomosynthesis: State of the art. Semin Cancer Biol 2021;72:214-25.

- Kerlikowske K, Zhu W, Tosteson AN, Sprague BL, Tice JA, Lehman CD, Miglioretti DL; Breast Cancer Surveillance Consortium. Identifying women with dense breasts at high risk for interval cancer: a cohort study. Ann Intern Med 2015;162:673-81.

- Kwon MR, Chang Y, Park B, Ryu S, Kook SH. Performance analysis of screening mammography in Asian women under 40 years. Breast Cancer 2023;30:241-8.

- Sprague BL, Arao RF, Miglioretti DL, Henderson LM, Buist DS, Onega T, Rauscher GH, Lee JM, Tosteson AN, Kerlikowske K, Lehman CD; Breast Cancer Surveillance Consortium. National Performance Benchmarks for Modern Diagnostic Digital Mammography: Update from the Breast Cancer Surveillance Consortium. Radiology 2017;283:59-69.

- Elmore JG, Nakano CY, Koepsell TD, Desnick LM, D'Orsi CJ, Ransohoff DF. International variation in screening mammography interpretations in community-based programs. J Natl Cancer Inst 2003;95:1384-93.

- Available online: https://www.acr.org/-/media/ACR/Files/RADS/BI-RADS/Mammography-Reporting.pdf

- Yang Y, Hu Y, Shen S, Jiang X, Gu R, Wang H, Liu F, Mei J, Liang J, Jia H, Liu Q, Gong C. A new nomogram for predicting the malignant diagnosis of Breast Imaging Reporting and Data System (BI-RADS) ultrasonography category 4A lesions in women with dense breast tissue in the diagnostic setting. Quant Imaging Med Surg 2021;11:3005-17.

- LeCun Y, Bengio Y, Hinton G. Deep learning. Nature 2015;521:436-44.

- McKinney SM, Sieniek M, Godbole V, Godwin J, Antropova N, Ashrafian H, et al. International evaluation of an AI system for breast cancer screening. Nature 2020;577:89-94.

- Rodríguez-Ruiz A, Krupinski E, Mordang JJ, Schilling K, Heywang-Köbrunner SH, Sechopoulos I, Mann RM. Detection of Breast Cancer with Mammography: Effect of an Artificial Intelligence Support System. Radiology 2019;290:305-14.

- Yoon JH, Kim EK. Deep Learning-Based Artificial Intelligence for Mammography. Korean J Radiol 2021;22:1225-39.

- Yang Z, Cao Z, Zhang Y, Tang Y, Lin X, Ouyang R, Wu M, Han M, Xiao J, Huang L, Wu S, Chang P, Ma J. MommiNet-v2: Mammographic multi-view mass identification networks. Med Image Anal 2021;73:102204.

- Pesce K, Orruma MB, Hadad C, Bermúdez Cano Y, Secco R, Cernadas A. BI-RADS Terminology for Mammography Reports: What Residents Need to Know. Radiographics 2019;39:319-20.

- He T, Puppala M, Ezeana CF, Huang YS, Chou PH, Yu X, Chen S, Wang L, Yin Z, Danforth RL, Ensor J, Chang J, Patel T, Wong STC. A Deep Learning-Based Decision Support Tool for Precision Risk Assessment of Breast Cancer. JCO Clin Cancer Inform 2019;3:1-12.

- Lee KA, Talati N, Oudsema R, Steinberger S, Margolies LR. BI-RADS 3: Current and Future Use of Probably Benign. Curr Radiol Rep 2018;6:5.

- Wang H, Hu Y, Lu Y, Zhou J, Guo Y. The uncertainty of boundary can improve the classification accuracy of BI-RADS 4A ultrasound image. Med Phys 2022;49:3314-24.

- Meng M, Li H, Zhang M, He G, Wang L, Shen D. Reducing the number of unnecessary biopsies for mammographic BI-RADS 4 lesions through a deep transfer learning method. BMC Med Imaging 2023;23:82.

- Choi JS, Han BK, Ko ES, Bae JM, Ko EY, Song SH, Kwon MR, Shin JH, Hahn SY. Effect of a Deep Learning Framework-Based Computer-Aided Diagnosis System on the Diagnostic Performance of Radiologists in Differentiating between Malignant and Benign Masses on Breast Ultrasonography. Korean J Radiol 2019;20:749-58.

- Ouyang R, Liao T, Yang Y, Lin X, Zhou X, Ma J. Novel study on the prediction of BI-RADS 4A positive lesions in mammography using deep learning technology and clinical factors. Quant Imaging Med Surg 2024;14:8864-77.

- Zhao C, Xiao M, Liu H, Wang M, Wang H, Zhang J, Jiang Y, Zhu Q. Reducing the number of unnecessary biopsies of US-BI-RADS 4a lesions through a deep learning method for residents-in-training: a cross-sectional study. BMJ Open 2020;10:e035757.

- Kim HE, Kim HH, Han BK, Kim KH, Han K, Nam H, Lee EH, Kim EK. Changes in cancer detection and false-positive recall in mammography using artificial intelligence: a retrospective, multireader study. Lancet Digit Health 2020;2:e138-48.

- Sasaki M, Tozaki M, Rodríguez-Ruiz A, Yotsumoto D, Ichiki Y, Terawaki A, Oosako S, Sagara Y, Sagara Y. Artificial intelligence for breast cancer detection in mammography: experience of use of the ScreenPoint Medical Transpara system in 310 Japanese women. Breast Cancer 2020;27:642-51.