MHAU-Net: a multi-scale hybrid attention U-shaped network for the segmentation of MRI breast tumors

Introduction

It was estimated that breast cancer accounted for 15.3% of new cancer cases in 2022, 31% of cancers affecting women, and 15% of cancer-related deaths. However, a 5-year survival rate of 99% can be achieved if the disease is detected and treated at an early stage, whereas the survival rate declines to 27% if breast cancer is diagnosed at a late stage (1,2).

Various imaging techniques, including ultrasonography, digital mammography, digital breast tomosynthesis, and breast magnetic resonance imaging (MRI), are used to diagnose breast cancer in clinical practice. Notably, breast MRI enables multi-parametric examination through techniques such as dynamic contrast-enhanced MRI (DCE-MRI) and diffusion-weighted imaging. Over the years, it has been extensively applied in the screening, diagnosis, preoperative prognostication, and evaluation of therapeutic response in breast diseases. Numerous studies have shown that DCE-MRI provides abundant information for differentiating between benign and malignant breast neoplasms, substantially improving the diagnostic accuracy for breast cancer and reducing unnecessary biopsies of benign lesions (3,4). In 2021, the Chinese expert consensus on breast cancer recognized MRI as an important auxiliary diagnostic tool for suspected breast malignancy (5).

MRI employs three-dimensional (3D) scanning, which enables more accurate tumor measurement than digital mammography. Previous studies have shown that precise pre-treatment measurement of the maximum tumor diameter and tumor volume informs the selection of the optimal treatment plan (6,7). Additionally, volumetric change during treatment is an important criterion for assessing therapeutic efficacy. Clinicians therefore need precise tumor measurements. However, current image processing depends on radiologists' expertise and is time consuming, and diagnostic accuracy varies across hospitals. Recently, with the expanding medical applications of computer-aided technologies, deep learning has gained significant momentum. Tumor segmentation is a vital component of deep-learning models for breast cancer, so improving segmentation accuracy is important.

Among the various deep-learning algorithms currently available, U-Net and its variants have made notable advances in breast tumor segmentation (8-10). U-Net is a deep neural network with a U-shaped encoder-decoder structure (11): the encoder extracts features at different levels and transmits them to the decoder through skip connections. After multiple feature fusion and upsampling operations, the decoder generates the segmentation mask for the target. This architecture has proven effective in segmenting various organs, including the pancreas (12), liver (13), and kidney (14). For breast tumor segmentation, Pramanik et al. developed the dual-path DBU-Net, which features two independent encoding paths: one takes the original image as input, and the other processes the edge information of the image, thereby enhancing the extraction of semantic features (15). Additionally, to reduce the interference of background chest tissue with breast tumor recognition in DCE-MRI images, Qin et al. designed a two-stage U-Net segmentation architecture with a dense residual module that automatically extracts regions of interest before the actual segmentation, thereby reducing irrelevant information and further improving segmentation accuracy (16).

However, U-Net still has two primary shortcomings in tumor segmentation on breast MR images. First, it cannot explicitly extract multi-scale contextual information, which limits its ability to segment tumors of varying sizes; this weakness is further exacerbated when distinguishing the tumor from its surrounding tissues (17). Structures for extracting multi-scale context information, such as atrous spatial pyramid pooling (ASPP) in DeepLab V3+ (18), have been shown to be effective and have been widely employed in medical segmentation tasks (19-21). ASPP applies multiple parallel atrous (dilated) convolutions with different dilation rates to analyze the input from different fields of view and fuses the results into multi-scale contextual features (22). This design captures long-range dependencies while maintaining robust local feature extraction and has shown strong tumor segmentation performance (23). Second, U-Net lacks specificity during feature extraction, making it susceptible to interference from irrelevant information outside the tumor.
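To make the field-of-view argument concrete: a convolution with kernel size k and dilation rate d spans k + (k - 1)(d - 1) voxels along each axis while keeping only k weights per axis. The short sketch below evaluates this for 3×3×3 kernels at dilation rates 1 to 4, the rates later used by the four dilated branches of the MHAB. The 3×3×3 kernel size for those branches is an assumption, and the function name is hypothetical.

```python
def effective_kernel(k: int, d: int) -> int:
    """Per-axis extent (in voxels) covered by a k-tap convolution with dilation rate d."""
    # d - 1 gaps separate neighbouring taps, so k taps span k + (k - 1) * (d - 1) voxels
    return k + (k - 1) * (d - 1)

# Per-axis extents of 3x3x3 kernels at dilation rates 1-4 (kernel size assumed)
extents = {d: effective_kernel(3, d) for d in (1, 2, 3, 4)}
print(extents)  # {1: 3, 2: 5, 3: 7, 4: 9}
```

All four branches therefore use the same number of weights, but each sees a progressively wider neighbourhood, which is what lets their fused output carry multi-scale context.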

To address this issue, one viable solution is to incorporate attention mechanisms (24,25). Based on the feature dimension they operate on, attention mechanisms can be categorized into channel attention and spatial attention mechanisms (26,27). Channel attention weights the feature map of each channel; representative networks include SENet (28) and ResNeSt (29). This structure models the interdependencies between channel features, adaptively rescales each channel, and thereby enhances the model's ability to extract effective channel features (30). Spatial attention complements channel attention by using a single-channel attention matrix to prioritize key regions of the image (31,32). Because the two mechanisms optimize feature extraction from different perspectives, a growing number of studies have recently focused on fusing them (33,34). Multi-scale and attention methods have each been applied individually to breast MRI scans (35,36), and some studies have applied both structures to breast ultrasound images (37); however, few studies have integrated both multi-scale and attention structures into U-Net for tumor segmentation in breast MRI scans.
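A minimal NumPy sketch of the two generic mechanisms described above, for a single 3D feature map of shape (C, D, H, W): an SENet-style channel attention (global average pooling, two fully connected layers, sigmoid) and a single-channel spatial attention. For brevity, the spatial map here comes from a 1×1×1 projection, which is a simplification; the function names and weight shapes are illustrative, and this is not the MHAU-Net's own block.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(x, w1, w2):
    """SE-style channel attention. x: (C, D, H, W); w1: (C // r, C); w2: (C, C // r)."""
    z = x.mean(axis=(1, 2, 3))                  # squeeze: global average pooling -> (C,)
    a = sigmoid(w2 @ np.maximum(w1 @ z, 0.0))   # excitation: FC -> ReLU -> FC -> sigmoid -> (C,)
    return x * a[:, None, None, None]           # every channel is rescaled by its own weight

def spatial_attention(x, w):
    """Single-channel spatial attention. w: (C,) acts as a 1x1x1 conv with one output channel."""
    s = np.tensordot(w, x, axes=(0, 0))         # (D, H, W) single-channel map
    return x * sigmoid(s)[None]                 # one weight per voxel, shared by all channels

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 4, 6, 6))
y = channel_attention(x, rng.standard_normal((2, 8)), rng.standard_normal((8, 2)))
y = spatial_attention(y, rng.standard_normal(8))
print(y.shape)  # (8, 4, 6, 6)
```

The channel branch answers "which feature maps matter", and the spatial branch answers "where in the volume to look", which is why the two are often combined.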

Therefore, this study introduced multi-scale and attention structures into U-Net, presenting a novel multi-scale hybrid attention U-shaped network (MHAU-Net) for tumor segmentation in breast MRI scans. Built on the foundation of 3D U-Net, the MHAU-Net incorporates multiple sets of dilated (atrous) convolutions with different dilation rates to achieve multi-scale capability, and it employs global pooling and single-channel convolution to construct hybrid channel and spatial attention structures. Unlike existing methods, the hybrid channel and spatial attention structure of the MHAU-Net independently generates and fuses attention matrices for features from two distinct fields of view to enhance the input features. This structure strengthens the network's ability to express complex multi-scale information, enabling more effective segmentation of breast tumors of varying sizes and shapes. Extensive experiments showed that the MHAU-Net significantly improves breast MRI tumor segmentation. The key contributions of our study are as follows:

- The introduction of the multi-scale hybrid attention block (MHAB), which comprises convolutions with four different fields of view and a dual spatial and channel attention structure. This design enables the segmentation of tumors of different sizes and enhances the extraction of effective information;

- The construction of a new 3D network, the MHAU-Net, based on the MHAB, for the automatic segmentation of breast tumors;

- The establishment of a new clinical 3D MRI breast tumor segmentation dataset comprising 906 3D images, each with a corresponding breast tumor segmentation mask;

- A comparison of the proposed method with seven existing methods using the dataset, and an analysis of different types of breast images to show the superior segmentation performance of the proposed method.

We present this article in accordance with the TRIPOD + AI reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-24-1515/rc).

Methods

In this section, we describe how the proposed MHAU-Net automatically segments breast tumors in breast MRI scans. We first introduce the overall structure and workflow of the method and then detail its core block, the MHAB, and the loss function.

MHAU-Net

The MHAU-Net follows a U-shaped encoder-decoder architecture (Figure 1). The encoder extracts four sets of features at different scales, which are then fed into the decoder to provide spatial details and contextual information about the image, facilitating accurate segmentation of breast tumors. Because 3D networks require substantial computational resources, the input image is first divided into multiple 3D patches of a fixed size before being fed into the network. In the first stage, each patch passes through two MHABs for feature extraction, and the result is then compressed by a downsampling module. The downsampling module consists of a 3D convolution with a stride of 2, which halves the depth, height, and width of the feature. After four stages of MHABs and downsampling modules, four sets of features with 32, 64, 128, and 160 channels are generated. In the decoder, the 160-channel features are upsampled by an upsampling module implemented primarily through deconvolution and concatenated with the 128-channel features. The concatenated features are then processed by an additional MHAB to obtain fused features that retain the original image details. After three rounds of such skip-connection feature fusion, the fused features are mapped to a matrix with 2 channels and the same depth, height, and width as the input patch, which yields the segmentation prediction for that patch. Finally, the predictions for all 3D patches are combined to produce the complete tumor segmentation for the breast MRI scan.
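The resulting feature shapes for one 64×192×192 training patch (the patch size given in the experimental details) can be traced with a few lines of Python. This sketch assumes that each stage's features are taken before that stage's stride-2 downsampling, so the first stage keeps the full patch resolution; the function name is hypothetical.

```python
def encoder_shapes(patch=(64, 192, 192), channels=(32, 64, 128, 160)):
    """(C, D, H, W) of the features produced by each encoder stage (placement of downsampling assumed)."""
    shapes = []
    d, h, w = patch
    for stage, c in enumerate(channels):
        if stage > 0:
            # the preceding stride-2 3D convolution halves depth, height, and width
            d, h, w = d // 2, h // 2, w // 2
        shapes.append((c, d, h, w))
    return shapes

for s in encoder_shapes():
    print(s)
# (32, 64, 192, 192)
# (64, 32, 96, 96)
# (128, 16, 48, 48)
# (160, 8, 24, 24)
```

Because all three patch dimensions are divisible by 8, every halving is exact, so each decoder upsampling step can restore the matching encoder shape for concatenation.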

MHAB

The MHAB serves as the core component of the improved algorithm; its flow and overall structure are illustrated in Figure 2. The input feature $F_1$ is first processed by a 3D convolution block (CB) comprising a 3D convolution layer, an instance normalization layer, and a leaky rectified linear unit (LReLU) activation layer. The CB adjusts the number of feature channels to match the number of output channels of the MHAB, generating the feature $F_2$. Next, $F_2$ is split along the channel dimension into four groups, $F_{s1}$, $F_{s2}$, $F_{s3}$, and $F_{s4}$. Four dilated CBs with dilation rates of 1, 2, 3, and 4 then process these groups, respectively, to generate four features, $F_{d1}$, $F_{d2}$, $F_{d3}$, and $F_{d4}$, containing multi-scale context information.
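The normalization and activation parts of the CB, followed by the four-way channel split, can be sketched in NumPy as below. The 3D convolution is replaced by random stand-in data, and the LReLU negative slope of 0.01, the equal four-way split, and the function names are assumptions for illustration.

```python
import numpy as np

def instance_norm(x, eps=1e-5):
    """Instance normalization for one sample x: (C, D, H, W); each channel uses its own statistics."""
    mean = x.mean(axis=(1, 2, 3), keepdims=True)
    var = x.var(axis=(1, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def leaky_relu(x, slope=0.01):
    """LReLU: identity for positive inputs, small linear slope for negative ones (slope assumed)."""
    return np.where(x > 0, x, slope * x)

rng = np.random.default_rng(0)
conv_out = rng.normal(3.0, 2.0, size=(32, 8, 16, 16))  # stand-in for the 3D convolution output
f2 = leaky_relu(instance_norm(conv_out))
groups = np.split(f2, 4, axis=0)  # one channel group per dilated branch (rates 1, 2, 3, 4)
print([g.shape for g in groups])  # [(8, 8, 16, 16), (8, 8, 16, 16), (8, 8, 16, 16), (8, 8, 16, 16)]
```

Instance normalization computes statistics per sample and per channel, so it does not depend on batch size, which suits 3D training where only a few large patches fit on a GPU at once.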

The multi-scale context features $F_{d1}$ and $F_{d2}$, generated by the dilated blocks with dilation rates of 1 and 2, respectively, are processed by the hybrid channel attention block to generate $F_{c1}$ and $F_{c2}$ as follows:

$$v_i=\mathrm{GAP}(F_{di}),\qquad v_f=\mathrm{FC}\left([v_i,\,v_{3-i}];\,P_{fc}\right),\qquad a_i=\sigma\left(v_f\oplus v_i\right)$$
$$F_{ai}=\mathrm{CB}\left(a_i\otimes F_{di};\,P_{3\times3\times3}\right),\qquad F_{ci}=\mathrm{CB}\left([F_{ai},\,F_{di}];\,P_{1\times1\times1}\right)$$

where $i\in\{1,2\}$ indexes the input feature, $\mathrm{GAP}(\cdot)$ represents global average pooling, $[\cdot,\cdot]$ represents concatenation, $\oplus$ represents element-wise addition, $\otimes$ represents element-wise multiplication, $\sigma$ is the Sigmoid function, $P$ represents the weight parameters, $P_{fc}$ represents the fully connected layer parameters, $P_{3\times3\times3}$ represents the parameters of a 3D CB with a kernel size of 3×3×3, and $P_{1\times1\times1}$ represents the parameters of a 3D CB with a kernel size of 1×1×1. For the input $F_{di}$, the hybrid channel attention block first compresses the feature by global average pooling to generate the vector $v_i$. This vector is concatenated with the vector $v_{3-i}$ of the other branch, and a multi-scale fusion vector $v_f$ is generated through the fully connected layer. The fusion vector and $v_i$ are added together, and the hybrid attention vector $a_i$ is generated through the Sigmoid layer. The attention vector and the input feature are multiplied element-wise, and a 3×3×3 CB then produces the attention-refined feature $F_{ai}$. Finally, $F_{ai}$ is concatenated with $F_{di}$, and a 3D 1×1×1 CB, which fuses only channel-level information, generates the output feature $F_{ci}$ combining the hybrid channel attention information with the context information.
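The channel-attention steps described above can be sketched in NumPy for one pair of dilated-branch features. This is a hedged reading of the text rather than the authors' code: it assumes the second vector concatenated with the pooled vector is the pooled vector of the companion branch (dilation rate 1 paired with rate 2), uses a single fully connected layer that maps 2C values to C, and omits the 3×3×3 and 1×1×1 CBs. The function name and weight shapes are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def hybrid_channel_attention(f_i, f_other, w_fc, b_fc):
    """f_i, f_other: (C, D, H, W) features from two dilated branches; w_fc: (C, 2C); b_fc: (C,)."""
    v_i = f_i.mean(axis=(1, 2, 3))                # global average pooling of this branch -> (C,)
    v_other = f_other.mean(axis=(1, 2, 3))        # pooled vector of the companion branch (assumed)
    v_fused = w_fc @ np.concatenate([v_i, v_other]) + b_fc  # FC multi-scale fusion -> (C,)
    a_i = sigmoid(v_fused + v_i)                  # add the branch's own vector, then Sigmoid
    # channel-wise reweighting; the subsequent 3x3x3 and 1x1x1 CBs are omitted in this sketch
    return f_i * a_i[:, None, None, None], a_i

rng = np.random.default_rng(0)
f1, f2 = rng.standard_normal((2, 4, 3, 5, 5))     # two branches with C=4 channels each
out, a1 = hybrid_channel_attention(f1, f2, rng.standard_normal((4, 8)), rng.standard_normal(4))
print(out.shape, a1.shape)  # (4, 3, 5, 5) (4,)
```

Compared with a plain SE block, the only change is that the excitation sees statistics from both fields of view, so each branch's channel weights are informed by the other scale.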

The hybrid spatial attention block follows a process and structure similar to those of the hybrid channel attention block and is applied to the features $F_{dj}$, where $j = i + 2$ (that is, the features from the dilated blocks with dilation rates of 3 and 4). The main difference lies in the compression step: instead of global average pooling, the hybrid spatial attention block uses a 3D 3×3×3 convolution layer with a single output channel to compress each input feature into a single-channel map, from which the spatial attention matrix is generated. Finally, the output feature $F_{cj}$, which combines the hybrid spatial attention information and the context information, is generated.

After these operations, the features $F_{c1}$, $F_{c2}$, $F_{c3}$, and $F_{c4}$ extracted by the hybrid channel and spatial attention blocks are fused, and the fused feature is joined with $F_2$ through a cross-layer (residual) connection to generate the output feature of the MHAB. In summary, the MHAB not only incorporates hybrid channel and spatial attention mechanisms into the network but also enhances its ability to extract multi-scale context information, enabling the network to adapt to the segmentation of tumors of various sizes and shapes.

Loss function

To suppress the influence of the imbalance between positive and negative samples on model training, we adopted a mixed loss combining weighted cross entropy (WCE) loss and dice loss, which can be expressed as:

$$L_{WCE}=-\frac{1}{N}\sum_{n=1}^{N}\left[w_f\,y_n\log p_n+w_b\,(1-y_n)\log(1-p_n)\right]$$
$$L_{Dice}=1-\frac{2\sum_{n=1}^{N}y_n p_n+\epsilon}{\sum_{n=1}^{N}y_n+\sum_{n=1}^{N}p_n+\epsilon}$$
$$L=\lambda_1 L_{WCE}+\lambda_2 L_{Dice}$$

where $N$ represents the number of voxels in the sample, $y_n$ represents the ground-truth label of voxel $n$, $p_n$ represents the predicted value generated by the network, $w_f$ and $w_b$ are the weights of the foreground and background, $\epsilon$ is a small constant that prevents division by zero and smooths the loss, and $\lambda_1$ and $\lambda_2$ are the weights of the WCE loss and dice loss, respectively. In breast tumor segmentation, negative (background) voxels make up a large proportion of each sample, so training with the traditional cross entropy (CE) loss alone may lead the network to predominantly output 0, so that it fails to learn the tumor class. Unlike CE loss, WCE loss and dice loss can counteract the imbalance between positive and negative samples, allowing the network to focus effectively on the foreground region. However, each has drawbacks. WCE loss is intuitive and stable, but it ignores spatial structure information. Dice loss focuses on contour shapes but is overly sensitive to small targets: for small tumors, even a few mispredicted voxels can disproportionately affect the loss value. To address these challenges and balance efficiency and stability during convergence, a mixed loss combining WCE loss and dice loss was employed for network training. This approach leverages the regional correlation captured by dice loss while mitigating its sensitivity to sample proportions.
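A NumPy sketch of the mixed loss for one patch of foreground probabilities. It assumes the standard forms of binary weighted cross entropy and soft dice loss, and it assumes that the four values reported in the experimental details (3.0, 0.5, 0.6, and 0.4) map to the foreground weight, background weight, WCE weight, and dice weight in that order. The function name and the clipping constant are illustrative.

```python
import numpy as np

def mixed_loss(p, y, w_fg=3.0, w_bg=0.5, lam_wce=0.6, lam_dice=0.4, eps=1e-5):
    """p: predicted foreground probabilities; y: binary labels of the same shape."""
    p = np.clip(p, 1e-7, 1.0 - 1e-7)  # keep log() finite
    # weighted cross entropy: foreground voxels weighted by w_fg, background voxels by w_bg
    wce = -np.mean(w_fg * y * np.log(p) + w_bg * (1 - y) * np.log(1 - p))
    # soft dice loss over all voxels of the patch; eps smooths the ratio and avoids 0/0
    dice = 1.0 - (2.0 * np.sum(p * y) + eps) / (np.sum(p) + np.sum(y) + eps)
    return lam_wce * wce + lam_dice * dice

y = np.array([1, 1, 0, 0, 0, 0, 0, 0], dtype=float)
good = np.array([0.9, 0.8, 0.1, 0.1, 0.0, 0.0, 0.1, 0.0])
bad = np.array([0.2, 0.1, 0.1, 0.1, 0.0, 0.0, 0.1, 0.0])
print(mixed_loss(good, y) < mixed_loss(bad, y))  # True
```

In the `bad` case, the under-predicted foreground is penalized by both terms: the WCE term through the heavy foreground weight and the dice term through the poor overlap.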

Experiments and results

Dataset

This retrospective analysis was conducted in accordance with the principles outlined in the Declaration of Helsinki (as revised in 2013). The study was approved by the Ethics Committee of the Guangxi Medical University Cancer Hospital (No. KY2024395). Given the retrospective nature of the study, the requirement for written informed consent was waived.

In this study, the proposed MHAU-Net was evaluated on a breast tumor MRI dataset. The dataset comprised contrast-enhanced 3D T1-weighted images from patients with breast cancer whose diagnoses had been confirmed by surgery and pathology at the Cancer Hospital affiliated with Guangxi Medical University between January 2018 and April 2021. After low-quality images with noticeable noise artifacts and other interference were excluded, the 3D images of 906 patients remained. Of these images, 901 had a slice thickness of 1.5 mm, and only 5 had a slice thickness of 1 mm. In total, 858 samples had an in-plane spacing of 0.684 mm, and the other 48 had in-plane spacings ranging from 0.527 to 0.879 mm. In total, 817 samples had 144 slices, and 89 had between 112 and 168 slices. All samples had the same in-plane resolution of 512×512. Further details of the dataset attributes are provided in Table 1.

Table 1

| Attribute | Value |
|---|---|
| Sample size | 906 |
| MRI scanner manufacturer | General Electric |
| Field strength (T) | 3 |
| Slice thickness (mm) | 1.5 (n=901), 1.0 (n=5) |
| In-plane spacing, main (mm) | 0.684 (n=858) |
| In-plane spacing, range (mm) | 0.527–0.879 |
| Number of slices, main | 144 (n=817) |
| Number of slices, range | 112–168 |
| Plane resolution | 512×512 |
| Flip angle (°) | 15 |

MRI, magnetic resonance imaging.

All DCE-MRI images were imported into ITK-SNAP 3.6.0 software in Digital Imaging and Communications in Medicine (DICOM) format. A radiologist with 5 years of experience in MRI diagnosis manually delineated each tumor region on the transverse images, using the DCE-MRI phase with the most obvious tumor enhancement (the second phase of the contrast-enhanced scans in this study) and referring to the coronal and sagittal views (Figure 3A). Subsequently, a radiologist with over 15 years of experience in MRI diagnosis reviewed and modified the marked regions, which were ultimately merged into a 3D tumor mask (Figure 3B).

After data acquisition and labeling, a breast MRI dataset containing 906 3D images [129,972 two-dimensional (2D) slices] was generated. A five-fold cross-validation strategy was employed for model training and validation. In each fold, the dataset was randomly split into training (64%), validation (16%), and test (20%) sets. To prevent data leakage, partitioning was performed at the patient level, so that the entire 3D image of each patient belonged to only one set. Additionally, the test sets of the five folds did not overlap, and together they covered the entire dataset.
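The patient-level five-fold protocol can be sketched in plain Python. Each patient ID stands for one complete 3D image, so no patient can appear in more than one subset of a fold. The round-robin fold assignment after shuffling, the seed, and the function name are assumptions for illustration.

```python
import random

def patient_level_folds(patient_ids, n_folds=5, val_frac=0.2, seed=0):
    """Each fold: one fifth of the patients as test; the rest split 80/20 into train/val (64/16/20 overall)."""
    ids = list(patient_ids)
    random.Random(seed).shuffle(ids)
    folds = [ids[k::n_folds] for k in range(n_folds)]  # disjoint test groups covering every patient
    splits = []
    for k in range(n_folds):
        rest = [pid for j, fold in enumerate(folds) if j != k for pid in fold]
        n_val = round(len(rest) * val_frac)
        splits.append({"train": rest[n_val:], "val": rest[:n_val], "test": folds[k]})
    return splits

splits = patient_level_folds(range(906))
print([(len(s["train"]), len(s["val"]), len(s["test"])) for s in splits])
```

Splitting by patient rather than by 2D slice or 3D patch is what prevents neighbouring slices of the same tumor from appearing in both training and test data.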

Baselines and metrics

Next, our MHAU-Net was compared with four existing 2D networks [i.e., U-Net (11), U-Net++ (38), adaptive attention U-Net (AAU-Net) (37), and efficient medical-images-aimed segment anything model (EMedSAM) (39)], and three 3D networks [i.e., voxel segmentation network (VoxSegNet) (40), 3D U-Net (41), and V-Net (42)]. Among them, U-Net stands out as the most commonly used network for medical image segmentation, and the MHAU-Net builds upon the foundational architecture of 3D U-Net. U-Net++, an enhanced variant incorporating a dense connection structure, is rooted in the U-Net framework. EMedSAM is an efficient medical image object segmentation model based on SAM. AAU-Net, which shares a comparable structure with MHAU-Net, is a 2D network that has demonstrated outstanding segmentation results, particularly in the domain of breast ultrasound images. Meanwhile, VoxSegNet and V-Net, which are both 3D segmentation networks, are extensively used in the medical field for volumetric segmentation tasks.

To comprehensively evaluate network performance, sensitivity (SE), positive predictive value (PPV), intersection over union (IoU), and dice similarity coefficient (DSC) were used as the assessment metrics, which can be expressed as:

$$SE=\frac{TP}{TP+FN},\qquad PPV=\frac{TP}{TP+FP},\qquad IoU=\frac{TP}{TP+FP+FN},\qquad DSC=\frac{2TP}{2TP+FP+FN}$$

where samples refer to the category (tumor or background) of each voxel, $TP$ is the number of positive samples correctly predicted, $TN$ is the number of negative samples correctly predicted, $FP$ is the number of negative samples incorrectly predicted as positive, and $FN$ is the number of positive samples incorrectly predicted as negative.
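The four metrics can be computed directly from binary prediction and ground-truth masks by counting voxels. This NumPy sketch is illustrative (the function name is hypothetical), and it does not guard against empty masks, for which a denominator can be zero.

```python
import numpy as np

def segmentation_metrics(pred, gt):
    """pred, gt: binary masks of the same shape (1 = tumor voxel)."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    tp = np.sum(pred & gt)    # tumor voxels predicted as tumor
    fp = np.sum(pred & ~gt)   # background voxels predicted as tumor
    fn = np.sum(~pred & gt)   # tumor voxels predicted as background
    return {
        "SE": tp / (tp + fn),
        "PPV": tp / (tp + fp),
        "IoU": tp / (tp + fp + fn),
        "DSC": 2 * tp / (2 * tp + fp + fn),
    }

m = segmentation_metrics(np.array([1, 1, 0, 0]), np.array([1, 0, 1, 0]))
print(m)  # SE = PPV = DSC = 0.5 and IoU = 1/3 for this toy pair
```

TN does not appear in any of the four formulas, which is why these metrics are not inflated by the large number of correctly predicted background voxels.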

Experimental details

The same training strategy was employed for all networks: 200 training epochs, with the learning rate decreasing from 0.01 to 0.00001. The foreground weight, background weight, WCE loss weight, and dice loss weight of the loss function were set to 3.0, 0.5, 0.6, and 0.4, respectively. Optimization was performed using the AdamW optimizer. All sub-experiments were based on five-fold cross-validation. Each 3D image was cut into patches of 64×192×192 before being fed into the network. During training, positive and negative patches were sampled in equal proportions (50% each). For testing, the entire image was segmented with a sliding window at 50% overlap. Dynamic data augmentation was applied with a probability of 50%.
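A minimal sketch of how the sliding-window start positions could be computed along each axis for 64×192×192 patches with 50% overlap. It assumes the last window is shifted back so that it ends exactly at the volume border (a common convention that the text does not specify); overlapping predictions would then be merged, for example by averaging probabilities. The function name is hypothetical.

```python
def window_starts(size: int, patch: int, overlap: float = 0.5) -> list[int]:
    """Start indices along one axis for a sliding window with the given fractional overlap."""
    if size <= patch:
        return [0]  # a single window covers the whole axis
    step = max(1, int(patch * (1 - overlap)))
    starts = list(range(0, size - patch + 1, step))
    if starts[-1] != size - patch:
        starts.append(size - patch)  # extra window flush with the far border
    return starts

depth = window_starts(144, 64)      # [0, 32, 64, 80]
in_plane = window_starts(512, 192)  # [0, 96, 192, 288, 320]
print(len(depth) * len(in_plane) ** 2)  # 100 patches for a 144x512x512 volume
```

With 50% overlap, most voxels are predicted by several windows, so border effects at patch edges can be averaged out when the patch predictions are combined.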

The experimental setup used an Ubuntu 22.04.1 system with the generic Linux kernel (version 6.2.0-32). CUDA 11.6 served as the parallel computing framework, and CUDNN 8.3.2 functioned as the deep neural network acceleration tool. Python 3.10.4 was employed as the programming language, PyTorch 1.13.0 as the deep-learning tool, and SimpleITK 2.2.1 as the image processing tool. The computational hardware comprised three NVIDIA RTX 3090 graphics processing units (GPU), and two Intel Xeon Gold 6230 central processing units (CPU).

Results

2D vs. 3D

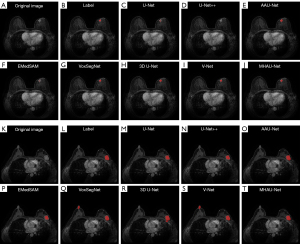

In 3D tumor segmentation, segmentation networks can be broadly categorized into 2D and 3D networks. Figure 4 illustrates the segmentation outcomes of both types. Notably, in the area highlighted by the yellow circle, the 2D networks mistakenly identified small, irrelevant tissues as tumors, producing more false-positive results than their 3D counterparts. One contributing factor is that 2D networks can only access information from single planar slices and thus lack the complete 3D context of a tumor. Near the upper and lower ends of a tumor, its cross-section in each slice is typically small, and such small cross-sections are easily confused with other tissues. This confusion makes it difficult for the network to learn during training whether certain small targets are tumors, which hampers network fitting and leads to the misidentification of small targets. Conversely, 3D networks, which process stacks of adjacent slices together, can capture the complete information of a single tumor in most cases. This considerably mitigates the false-positive segmentation of tiny tissues caused by information loss and yields smoother segmentation in 3D. Consequently, 3D networks have advantages over 2D networks in this tumor segmentation task.

Comparison of existing methods

To assess the efficacy of the MHAU-Net, we compared its segmentation performance with that of seven existing networks: U-Net, U-Net++, AAU-Net, EMedSAM, VoxSegNet, 3D U-Net, and V-Net. The results are presented in Table 2 and show that the 3D networks generally outperformed their 2D counterparts. Compared with 3D U-Net, the MHAU-Net increased the SE by 2.3 percentage points, the PPV by 7.0, the IoU by 7.1, and the DSC by 6.0, with P<0.05 for all four metrics, indicating that the MHAU-Net performed significantly better than 3D U-Net. Among the eight networks, the MHAU-Net achieved the highest SE, IoU, and DSC, exceeding the second-best results by 1.3, 4.9, and 3.9 percentage points, respectively. The PPV of the MHAU-Net was slightly lower than that of U-Net++; however, its other metrics were notably higher, including an SE 11.1 percentage points higher than that of U-Net++. Moreover, the MHAU-Net had the lowest standard deviations for all four metrics, suggesting more stable segmentation performance.

Table 2

| Type | Network | SE (%) | PPV (%) | IoU (%) | DSC (%) |
|---|---|---|---|---|---|
| 2D | U-Net | 85.9±2.6** | 71.2±5.0** | 64.5±5.8** | 76.3±4.2** |
| | U-Net++ | 81.3±3.7** | 82.0±3.9 | 66.5±5.7* | 77.1±4.6** |
| | AAU-Net | 91.1±1.8 | 72.1±5.6** | 66.4±5.2** | 78.2±3.1* |
| | EMedSAM | 89.0±2.1** | 74.1±4.7* | 66.8±4.5** | 77.8±3.7* |
| 3D | VoxSegNet | 88.4±2.4 | 72.5±5.1** | 65.8±4.3** | 76.5±3.8** |
| | 3D U-Net | 90.1±1.6* | 73.9±5.5** | 67.1±3.7* | 78.1±3.3* |
| | V-Net | 89.7±1.8 | 76.8±4.0* | 69.3±3.8* | 80.2±2.4* |
| | MHAU-Net | 92.4±1.5 | 80.9±3.6 | 74.2±3.4 | 84.1±2.1 |

Data are presented as mean ± standard deviation. The Wilcoxon signed-rank test was used to test for differences between the MHAU-Net and the other networks in terms of the SE, PPV, IoU, and DSC. *, P<0.05; **, P<0.001. 2D, two-dimensional; 3D, three-dimensional; AAU-Net, adaptive attention U-Net; DSC, dice similarity coefficient; EMedSAM, efficient medical-images-aimed segment anything model; IoU, intersection over union; MHAU-Net, multi-scale hybrid attention U-shaped network; PPV, positive predictive value; SE, sensitivity; VoxSegNet, voxel segmentation network.
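The gains reported for Table 2 are absolute differences between mean values, that is, percentage points. As a quick arithmetic check, and to show how they differ from relative gains, the sketch below recomputes both from three rows copied from Table 2; the dictionary layout and function name are illustrative.

```python
# Mean values (%) copied from Table 2, in the order SE, PPV, IoU, DSC
table2 = {
    "3D U-Net": (90.1, 73.9, 67.1, 78.1),
    "V-Net": (89.7, 76.8, 69.3, 80.2),
    "MHAU-Net": (92.4, 80.9, 74.2, 84.1),
}
metrics = ("SE", "PPV", "IoU", "DSC")

def gain(model, baseline):
    """Per metric: (absolute gain in percentage points, relative gain in percent)."""
    return {
        m: (round(a - b, 1), round(100 * (a - b) / b, 1))
        for m, a, b in zip(metrics, table2[model], table2[baseline])
    }

print(gain("MHAU-Net", "3D U-Net"))
```

For example, the 6.0-point DSC gain over 3D U-Net corresponds to a relative improvement of about 7.7%, so the two conventions should not be mixed when comparing networks.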

In addition, to provide a more intuitive comparison of the segmentation outcomes, we visualized the segmentation results of the eight networks on two samples (Figure 5). For the small-tumor sample, the 2D networks, which cannot capture 3D information from adjacent slices, produced poorer segmentations, particularly U-Net++. For the large-tumor sample, all networks successfully segmented the corresponding positive regions; however, the false-positive regions on the left side of the VoxSegNet and V-Net outputs highlight a tendency of patch-based 3D networks to misidentify tissues resembling the target tumor, because each patch lacks the complete in-plane (2D) context. The visualizations showed that the MHAU-Net achieved the best segmentation for both samples, providing further evidence of the network's effectiveness.

Network hyperparameter experiment

To validate the network hyperparameter settings, we examined the segmentation performance of the MHAU-Net with different numbers of encoding stages and output channels (i.e., the number of output convolutional kernels). Specifically, we tested commonly used numbers of stages (3, 4, and 5) as well as positive integer powers of 2 for the number of output channels. As detailed in Table 3, the 3-stage network ([32, 64, 128]) performed markedly worse than the 4- and 5-stage networks. The gap between the 4- and 5-stage networks was relatively small, although the 4-stage networks performed slightly better. Furthermore, the configuration using powers of 2 throughout ([32, 64, 128, 256]) showed no clear advantage over the final configuration used in this study ([32, 64, 128, 160]); its SE, PPV, IoU, and DSC were 0.8, 0.4, 0.3, and 0.3 percentage points lower, respectively. Additionally, because the channel count of the fourth stage strongly affects the number of network weights, the final configuration ([32, 64, 128, 160]) offered the best trade-off between segmentation performance and computational resource consumption.

Table 3

| Network shape | SE (%) | PPV (%) | IoU (%) | DSC (%) |
|---|---|---|---|---|
| [32, 64, 128] | 86.2±2.7 | 73.9±5.1 | 69.0±5.3 | 78.7±3.8 |
| [32, 64, 128, 256, 512] | 89.1±2.3 | 79.8±4.4 | 72.8±4.2 | 82.3±2.8 |
| [32, 64, 128, 256] | 91.6±1.6 | 80.5±3.4 | 73.9±3.8 | 83.8±2.2 |
| [32, 64, 128, 160, 240] | 90.5±2.0 | 79.1±4.2 | 72.6±3.9 | 82.3±3.4 |
| [32, 64, 128, 160] | 92.4±1.5 | 80.9±3.6 | 74.2±3.4 | 84.1±2.1 |

Data are presented as mean ± standard deviation. The network shape represents the number of output channels per encoding stage. DSC, dice similarity coefficient; IoU, intersection over union; PPV, positive predictive value; SE, sensitivity.
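The remark about the fourth stage's effect on the number of weights can be made concrete with the standard parameter count of a 3D convolution, k³·C_in·C_out weights plus C_out biases. The two-convolution stage below is a hypothetical stand-in; the actual MHAB contains more layers (dilated branches and attention), so the absolute numbers will differ, but the scaling with channel width is the point.

```python
def conv3d_params(c_in: int, c_out: int, k: int = 3, bias: bool = True) -> int:
    """Number of learnable parameters in a k x k x k 3D convolution layer."""
    return k ** 3 * c_in * c_out + (c_out if bias else 0)

# Hypothetical fourth stage: one 128 -> C convolution followed by one C -> C convolution
for c in (160, 256):
    print(c, conv3d_params(128, c) + conv3d_params(c, c))
```

Because the C→C convolution grows quadratically with C, moving the fourth stage from 160 to 256 channels roughly doubles this stage's parameters (about 1.24 M versus 2.65 M here), while Table 3 shows no accuracy gain.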

Different types of tumors

To provide a comprehensive evaluation of our approach’s performance in tumor segmentation across different types of tumors, we conducted an in-depth analysis focusing on single tumors, multiple tumors, small tumors, large tumors, mass tumors, and non-mass tumors. The dataset, comprising 906 samples, was initially categorized based on the number of tumors, resulting in 774 single-tumor samples and 132 multiple-tumor samples. For the evaluation of single tumors, the samples were further divided into small and large types, where the maximum diameter of the tumor was used as a criterion (≤3 cm for small tumors). This classification resulted in 444 large-tumor samples and 330 small-tumor samples. Additionally, among the 774 single-tumor samples, 619 tumors could be identified as mass or non-mass types (492 mass, and 127 non-mass).

The segmentation results and visualizations of the MHAU-Net for different tumor types are presented in Table 4 and Figure 6. The network performed notably better on single-tumor images than on multiple-tumor images. Compared with single-tumor images, the SE for multiple-tumor images was 15.6 percentage points lower, whereas the PPV was only 5.7 points lower, indicating that the main problem in multiple-tumor segmentation was missed tumor regions. Figure 6J,6O shows substantial missing regions in the top tumor area, further indicating that under-segmentation is the primary challenge in multiple-tumor cases.

Table 4

| Type | Number | SE (%) | PPV (%) | IoU (%) | DSC (%) |
|---|---|---|---|---|---|
| Single | 774 | 94.7±1.4 | 81.9±3.0 | 75.9±2.8 | 85.7±1.6 |
| Small | 330 | 95.2±1.5 | 79.1±3.5 | 74.8±3.4 | 84.3±2.8 |
| Large | 444 | 94.3±1.2 | 83.9±1.9 | 76.8±2.1 | 86.7±1.7 |
| Mass | 492 | 95.3±1.1 | 80.6±2.4 | 77.1±1.8 | 86.3±1.5 |
| Non-mass | 127 | 85.2±2.9 | 79.5±3.7 | 67.3±2.4 | 79.8±2.9 |
| Multiple | 132 | 79.1±6.2 | 76.2±7.1 | 61.1±7.5 | 74.3±5.1 |

Data are presented as mean ± standard deviation. DSC, dice similarity coefficient; IoU, intersection over union; PPV, positive predictive value; SE, sensitivity.

The comparison between small and large tumors revealed a slightly better segmentation effect for large tumors, though the difference was marginal, indicating the network’s relatively consistent SE across tumor sizes. Further, the high SE (95.2%) for small tumors indicated that the network was less prone to missing these smaller tumors.

The segmentation results for mass and non-mass tumors showed that the network performed better on mass tumors. The PPV differed by only 1.1 percentage points between the two subgroups, whereas the SE differed by 10.1 points, suggesting that the network may have missed some tumor boundary voxels owing to characteristics such as the boundary ambiguity of non-mass tumors. This observation is corroborated by Figure 6I,6N, where defects along the left edge of the model segmentation are apparent relative to the labeled image.

Ablation study

The MHAB is the core module of the MHAU-Net and enables the network to perceive tumors of different sizes and shapes; thus, the ablation experiments focused mainly on the MHAB. The MHAB is built on the CB and incorporates a residual structure, a hybrid attention structure, and a multi-scale structure. To verify the rationality of the MHAB design, the CB, a hybrid attention block (HAB), a multi-scale block (MB), and the MHAB were each used as the core module of the complete network, which was trained and tested with the same strategy. The HAB and MB were obtained from the MHAB by retaining only the hybrid attention structure or only the multi-scale structure, respectively.

The segmentation results of the four core modules are shown in Table 5. All metrics of the HAB and MB exceeded those of the baseline CB, indicating that both the hybrid attention and multi-scale mechanisms of the MHAB improved performance. Compared with the CB, the HAB increased the SE, PPV, IoU, and DSC by 3.6, 2.7, 4.0, and 3.3 percentage points, respectively, exceeding the corresponding gains of 1.8, 1.9, 2.8, and 2.3 points for the MB, which suggests that the hybrid attention structure contributed more to tumor feature extraction than the multi-scale structure. Moreover, the MHAB, which integrates the HAB and MB, outperformed both on all metrics, further supporting the effectiveness of its design.

Table 5

| Core block | SE (%) | PPV (%) | IoU (%) | DSC (%) |
|---|---|---|---|---|
| CB (baseline) | 87.4±2.3 | 76.6±5.7 | 67.7±4.7 | 78.6±3.3 |
| HAB | 91.0±1.8 | 79.3±4.1 | 71.7±3.8 | 81.9±2.7 |
| MB | 89.2±1.7 | 78.5±4.6 | 70.5±4.9 | 80.9±3.6 |
| MHAB | 92.4±1.5 | 80.9±3.6 | 74.2±3.4 | 84.1±2.1 |

Data are presented as mean ± standard deviation. CB, convolution block; DSC, dice similarity coefficient; HAB, hybrid attention block; IoU, intersection over union; MB, multi-scale block; MHAB, multi-scale hybrid attention block; PPV, positive predictive value; SE, sensitivity.
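The four metrics in Table 5 follow standard voxel-wise definitions and can be computed per case from binary masks, as in the minimal Python sketch below. It rests on these assumptions:

- masks are given as sets of foreground voxel coordinates;
- mean ± SD is taken over test cases using the sample standard deviation (which needs at least two cases);
- an empty denominator scores 0.

The function names are hypothetical. This section does not describe the study's exact evaluation code, including how it handles empty predictions.

```python
import statistics
from itertools import product
from typing import Dict, Iterable, Set, Tuple

Voxel = Tuple[int, int, int]


def _ratio(num: int, den: int) -> float:
    # Assumption: an empty denominator (e.g., an empty prediction for PPV) scores 0.
    return num / den if den else 0.0


def overlap_metrics(pred: Set[Voxel], truth: Set[Voxel]) -> Dict[str, float]:
    """Voxel-wise SE, PPV, IoU and DSC for one case."""
    tp = len(pred & truth)  # tumor voxels correctly predicted
    fp = len(pred - truth)  # predicted voxels outside the label
    fn = len(truth - pred)  # labeled tumor voxels that were missed
    return {
        "SE": _ratio(tp, tp + fn),
        "PPV": _ratio(tp, tp + fp),
        "IoU": _ratio(tp, tp + fp + fn),
        "DSC": _ratio(2 * tp, 2 * tp + fp + fn),
    }


def summarize(cases: Iterable[Dict[str, float]]) -> Dict[str, Tuple[float, float]]:
    """Mean and sample SD (in %) of each metric across at least two cases."""
    cases = list(cases)
    return {
        k: (100 * statistics.mean(c[k] for c in cases),
            100 * statistics.stdev(c[k] for c in cases))
        for k in cases[0]
    }


# Toy example: a 3x3x3 labeled cube; the prediction misses one face (x == 2)
# and adds two voxels outside the label.
truth = set(product(range(3), repeat=3))
pred = {v for v in truth if v[2] < 2} | {(0, 0, 3), (1, 0, 3)}
print(overlap_metrics(pred, truth))
```

In this toy case TP = 18, FP = 2, and FN = 9, so SE = 18/27 and PPV = 18/20. For each case, DSC = 2·IoU/(1 + IoU). Because this mapping is nonlinear, averages taken across cases need not satisfy it exactly.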

Discussion

In this study, we introduced a novel 3D neural network, the MHAU-Net, for automatic breast tumor segmentation in MRI scans. The MHAU-Net is a U-shaped network with the MHAB as its core. The MHAB uses several sets of 3D convolutions with different dilation rates to extract multi-scale context information. It also incorporates two attention blocks:

- a hybrid channel attention block, composed of global pooling and fully connected layers, that selects the channel features carrying crucial information;
- a hybrid spatial attention block, composed of a 3D convolution module with a single-channel output, that filters out irrelevant spatial interference.
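The following dependency-free Python sketch makes the block structure concrete. It mimics the attention path of an MHAB-like block on a tiny feature map and shows the receptive-field arithmetic behind dilated kernels. It is not the authors' implementation. It rests on these assumptions, which are also labeled in the code:

- features are stored as a list of channels, each a flat list of voxel values;
- channel attention uses global average pooling followed by two bias-free fully connected layers (squeeze-and-excitation style);
- the single-channel spatial convolution is reduced to a 1×1×1 kernel, so no spatial neighborhood is needed;
- channel attention is applied before spatial attention;
- the result is added back to the input as a residual.

The dilated 3D convolutions themselves are not implemented. `dilated_extent` only shows how the kernel span grows with the dilation rate, and the dilation rates and weights are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Assumption: a feature map is a list of channels, each a flat list of voxel values.
# Global pooling and 1x1x1 convolutions ignore spatial layout, so flattening suffices here.
FeatureMap = List[List[float]]
Matrix = Sequence[Sequence[float]]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def dilated_extent(kernel: int, dilation: int) -> int:
    """Voxels spanned along one axis by a kernel of size `kernel` with dilation `dilation`."""
    return dilation * (kernel - 1) + 1


def channel_attention(x: FeatureMap, w1: Matrix, w2: Matrix) -> Tuple[FeatureMap, List[float]]:
    """Assumed SE-style gate: global average pool -> FC -> ReLU -> FC -> sigmoid -> rescale."""
    pooled = [sum(ch) / len(ch) for ch in x]  # one descriptor per channel
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in w1]
    gates = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    return [[g * v for v in ch] for g, ch in zip(gates, x)], gates


def spatial_attention(x: FeatureMap, w: Sequence[float]) -> Tuple[FeatureMap, List[float]]:
    """Single-output-channel convolution (assumed 1x1x1) + sigmoid: one weight per voxel."""
    n_voxels = len(x[0])
    mask = [sigmoid(sum(w[c] * x[c][i] for c in range(len(x)))) for i in range(n_voxels)]
    return [[m * v for m, v in zip(mask, ch)] for ch in x], mask


def hybrid_attention_residual(x: FeatureMap, w1: Matrix, w2: Matrix, ws: Sequence[float]) -> FeatureMap:
    """Channel gate, then spatial gate, then residual addition (ordering assumed)."""
    y, _ = channel_attention(x, w1, w2)
    y, _ = spatial_attention(y, ws)
    return [[a + b for a, b in zip(xc, yc)] for xc, yc in zip(x, y)]


# Toy usage: 2 channels x 4 voxels, channel reduction to 1 hidden unit (hypothetical weights).
x = [[1.0, 2.0, 3.0, 4.0], [0.5, 0.5, 0.5, 0.5]]
out = hybrid_attention_residual(x, w1=[[0.1, 0.2]], w2=[[1.0], [-1.0]], ws=[0.3, -0.2])
print(out)
# Hypothetical dilation rates 1, 2, 3 with 3x3x3 kernels span 3, 5 and 7 voxels per axis.
print([dilated_extent(3, d) for d in (1, 2, 3)])
```

Both gates are sigmoids in (0, 1), so each attention step can only rescale features, never flip their sign. The residual connection keeps the original signal available even when a gate is close to zero.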

These results highlight the advantages of the proposed network for breast tumor segmentation. First, a comparison between 3D and 2D networks showed that 3D networks are superior for this task. Second, a comparison with seven existing segmentation networks demonstrated the advanced segmentation capability of the MHAU-Net. Third, comparing the MHAU-Net's performance across different numbers of stages and output channels supported the rationality of its hyperparameter settings. Next, the segmentation results of the MHAU-Net on various tumor images demonstrated its effectiveness in segmenting individual, large, small, and bulky tumors. Finally, the ablation study confirmed the contribution of each component of the MHAB.

The proposed MHAU-Net offers clinical application value. Its automated tumor segmentation could facilitate the precise diagnosis of breast tumors and assist in delineating target volumes for radiotherapy (43). Furthermore, tumor segmentation is a prerequisite for many downstream classification tasks, including molecular subtype classification (44). Regarding inference speed, the MHAU-Net took approximately 0.091 s for a single 64×192×192 patch and approximately 9.7 s for a 144×512×512 3D image. The baseline 3D U-Net took 0.064 s and 7.1 s, respectively. The MHAU-Net was therefore about 37% slower per image. This speed remains feasible for application, and the network's superior segmentation performance gives it an overall advantage in practical use.
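Per-image times relate to per-patch times through sliding-window inference. The sketch below counts how many 64×192×192 windows tile a 144×512×512 volume for a given stride. This section does not report the stride or overlap used, so the 50% overlap and non-overlapping cases are hypothetical. Multiplying by the per-patch time also ignores any per-image overhead, such as loading or stitching.

```python
import math
from typing import Sequence


def windows_along_axis(length: int, patch: int, stride: int) -> int:
    """Window positions on one axis; the last window is shifted to end flush with the edge."""
    if length <= patch:
        return 1
    return math.ceil((length - patch) / stride) + 1


def count_patches(volume: Sequence[int], patch: Sequence[int], stride: Sequence[int]) -> int:
    """Total number of 3D windows = product of the per-axis window counts."""
    n = 1
    for length, p, s in zip(volume, patch, stride):
        n *= windows_along_axis(length, p, s)
    return n


VOLUME = (144, 512, 512)
PATCH = (64, 192, 192)
SECONDS_PER_PATCH = {"MHAU-Net": 0.091, "3D U-Net": 0.064}  # per-patch times reported above

for label, stride in [("50% overlap (assumed)", (32, 96, 96)), ("no overlap (assumed)", PATCH)]:
    n = count_patches(VOLUME, PATCH, stride)
    estimates = {net: round(n * t, 2) for net, t in SECONDS_PER_PATCH.items()}
    print(f"{label}: {n} patches -> {estimates} s")
```

With 50% overlap, the volume needs 4 × 5 × 5 = 100 patches, or roughly 9.1 s and 6.4 s of patch-level compute. Non-overlapping tiling needs only 27 patches. Because the actual tiling scheme is not specified, comparing these estimates with the measured 9.7 s and 7.1 s is only indicative.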

However, the network's segmentation performance on non-mass and multiple-tumor images could be further improved, especially for multiple tumors. This difficulty may stem from inherent challenges in the images and from potential manual labeling errors.

Some non-mass tumors have unclear boundaries, and where those boundaries lie is itself debatable, which makes segmentation difficult. Images containing multiple tumors, especially many small- and medium-sized ones, are hard to discriminate, which increases the likelihood of missed or misjudged lesions.

Labeling errors add to these difficulties. Because of the large number of 3D scans, manual labeling is very time consuming, and such a prolonged process inevitably introduces errors. A radiologist's labeling proficiency improves as labeling progresses, so the same radiologist may judge the same tissue differently at different times. For single- and mass-tumor images with well-defined targets and boundaries, labeling errors tend to be small. Non-mass and multiple-tumor images are harder to discriminate and label, so labeling errors may be amplified and affect both network training and evaluation.

Our future work will therefore address these challenges, for example by:

- increasing the training penalty on boundary segmentation errors;
- establishing more uniform labeling standards;
- strengthening labeling audits.

In addition, because our dataset was derived from a single center, the network's generalization ability may be limited. A multi-center study is therefore needed to collect breast MRI scans across diverse settings. Training on multi-center data would enhance the network's robustness, and validation on data from an independent center is crucial for assessing its generalization ability.

Conclusions

This study proposed the MHAU-Net, a new deep learning-based 3D network for breast tumor segmentation. The network is built around the MHAB, which is designed to accommodate the diverse sizes and shapes of breast tumors and to enhance the extraction and analysis of effective tumor information. Its multi-scale structure improves adaptability to different tumor sizes and the extraction of context information. Its hybrid attention structure effectively suppresses irrelevant interference at both the spatial and channel levels. We trained and tested the MHAU-Net on a dataset of MRI scans from 906 breast cancer patients and compared it with seven existing networks. The MHAU-Net outperformed the other networks and achieved good segmentation on various tumor types. These findings lay the groundwork for further exploring the application value of deep learning in breast cancer diagnosis.

Acknowledgments

None.

Footnote

Reporting Checklist: The authors have completed the TRIPOD + AI reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-24-1515/rc

Funding: This research was supported by

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-24-1515/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. This retrospective analysis was conducted in accordance with the principles outlined in the Declaration of Helsinki (as revised in 2013). The study was approved by the Ethics Committee of the Guangxi Medical University Cancer Hospital (No. KY2024395). Given the retrospective nature of the study, the requirement for written informed consent was waived.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Siegel RL, Miller KD, Fuchs HE, Jemal A. Cancer statistics, 2022. CA Cancer J Clin 2022;72:7-33. [Crossref] [PubMed]

- Giaquinto AN, Sung H, Miller KD, Kramer JL, Newman LA, Minihan A, Jemal A, Siegel RL. Breast Cancer Statistics, 2022. CA Cancer J Clin 2022;72:524-41. [Crossref] [PubMed]

- Atallah D, Arab W, El Kassis N, Nasser Ayoub E, Chahine G, Salem C, Moubarak M. Breast and tumor volumes on 3D-MRI and their impact on the performance of a breast conservative surgery (BCS). Breast J 2021;27:252-5. [Crossref] [PubMed]

- Parsons MW, Hutten RJ, Tward A, Khouri A, Peterson J, Morrell G, Lloyd S, Cannon DM, Johnson SB. The Effect of Maximum Tumor Diameter by MRI on Disease Control in Intermediate and High-risk Prostate Cancer Patients Treated With Brachytherapy Boost. Clin Genitourin Cancer 2022;20:e68-74. [Crossref] [PubMed]

- The Society of Breast Cancer China Anti-Cancer Association. Guidelines for breast cancer diagnosis and treatment by China Anti-cancer Association (2021 edition). China Oncology 2021;31:954-1040.

- Fusco R, Sansone M, Filice S, Carone G, Amato DM, Sansone C, Petrillo A. Pattern Recognition Approaches for Breast Cancer DCE-MRI Classification: A Systematic Review. J Med Biol Eng 2016;36:449-59. [Crossref] [PubMed]

- Kang SR, Kim HW, Kim HS. Evaluating the Relationship Between Dynamic Contrast-Enhanced MRI (DCE-MRI) Parameters and Pathological Characteristics in Breast Cancer. J Magn Reson Imaging 2020;52:1360-73. [Crossref] [PubMed]

- Alruily M, Said W, Mostafa AM, Ezz M, Elmezain M. Breast Ultrasound Images Augmentation and Segmentation Using GAN with Identity Block and Modified U-Net 3+. Sensors (Basel) 2023;23:8599. [Crossref] [PubMed]

- Zhou J, Hou Z, Lu H, Wang W, Zhao W, Wang Z, Zheng D, Wang S, Tang W, Qu X. A deep supervised transformer U-shaped full-resolution residual network for the segmentation of breast ultrasound image. Med Phys 2023;50:7513-24. [Crossref] [PubMed]

- Drioua WR, Benamrane N, Sais L. Breast Cancer Histopathological Images Segmentation Using Deep Learning. Sensors (Basel) 2023;23:7318. [Crossref] [PubMed]

- Ronneberger O, Fischer P, Brox T. U-net: Convolutional networks for biomedical image segmentation. In: Navab N, Hornegger J, Wells WM, Frangi AF, editors. Medical Image Computing and Computer-Assisted Intervention - MICCAI 2015. Cham: Springer International Publishing; 2015:234-41.

- Chen Y, Xu C, Ding W, Sun S, Yue X, Fujita H. Target-aware U-Net with fuzzy skip connections for refined pancreas segmentation. Applied Soft Computing 2022;131:109818. [Crossref]

- Oh N, Kim JH, Rhu J, Jeong WK, Choi GS, Kim JM, Joh JW. Automated 3D liver segmentation from hepatobiliary phase MRI for enhanced preoperative planning. Sci Rep 2023;13:17605. [Crossref] [PubMed]

- Weng X, Song F, Tang M, Wang K, Zhang Y, Miao Y, Chan LW, Lei P, Hu Z, Yang F. MDM-U-Net: A novel network for renal cancer structure segmentation. Comput Med Imaging Graph 2023;109:102301. [Crossref] [PubMed]

- Pramanik P, Pramanik R, Schwenker F, Sarkar R. DBU-Net: Dual branch U-Net for tumor segmentation in breast ultrasound images. PLoS One 2023;18:e0293615. [Crossref] [PubMed]

- Qin C, Lin J, Zeng J, Zhai Y, Tian L, Peng S, Li F. Joint Dense Residual and Recurrent Attention Network for DCE-MRI Breast Tumor Segmentation. Comput Intell Neurosci 2022;2022:3470764. [Crossref] [PubMed]

- Chowdary GJ, Yogarajah P. EU-Net: Enhanced U-shaped Network for Breast Mass Segmentation. IEEE J Biomed Health Inform 2023; Epub ahead of print. [Crossref] [PubMed]

- Chen LC, Zhu Y, Papandreou G, Schroff F, Adam H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In: Ferrari V, Hebert M, Sminchisescu C, Weiss Y, editors. Computer Vision - ECCV 2018. Cham: Springer International Publishing; 2018:833-51.

- Lee C, Liao Z, Li Y, Lai Q, Guo Y, Huang J, Li S, Wang Y, Shi R. Placental MRI segmentation based on multi-receptive field and mixed attention separation mechanism. Comput Methods Programs Biomed 2023;242:107699. [Crossref] [PubMed]

- Sunnetci KM, Kaba E, Celiker FB, Alkan A. Deep Network-Based Comprehensive Parotid Gland Tumor Detection. Acad Radiol 2024;31:157-67. [Crossref] [PubMed]

- Wang L, Shao A, Huang F, Liu Z, Wang Y, Huang X, Ye J. Deep learning-based semantic segmentation of non-melanocytic skin tumors in whole-slide histopathological images. Exp Dermatol 2023;32:831-9. [Crossref] [PubMed]

- Liu R, Tao F, Liu X, Na J, Leng H, Wu J, Zhou T. RAANet: A Residual ASPP with Attention Framework for Semantic Segmentation of High-Resolution Remote Sensing Images. Remote Sens 2022;14:3109. [Crossref]

- Sun X, Zhang Y, Chen C, Xie S, Dong J. High-order paired-ASPP for deep semantic segmentation networks. Information Sciences 2023;646:11936. [Crossref]

- Yang H, Yang D. CSwin-PNet: A CNN-Swin Transformer combined pyramid network for breast lesion segmentation in ultrasound images. Expert Systems with Applications 2023;213:119024. [Crossref]

- Iqbal A, Sharif M. MDA-Net: Multiscale dual attention-based network for breast lesion segmentation using ultrasound images. Journal of King Saud University-Computer and Information Sciences 2022;34:7283-99. [Crossref]

- Lu E, Hu X. Image super-resolution via channel attention and spatial attention. Applied Intelligence 2022;52:2260-8. [Crossref]

- Ding M, Xiao B, Codella N, Luo P, Wang J, Yuan L. DaViT: Dual Attention Vision Transformers. In: Avidan S, Brostow G, Cissé M, Farinella GM, Hassner T, editors. Computer Vision - ECCV 2022. Cham: Springer Nature Switzerland; 2022:74-92.

- Hu J, Shen L, Albanie S, Sun G, Wu E. Squeeze-and-Excitation Networks. IEEE Trans Pattern Anal Mach Intell 2020;42:2011-23. [Crossref] [PubMed]

- Zhang H, Wu C, Zhang Z, Zhu Y, Lin H, Zhang Z, Sun Y, He T, Mueller J, Manmatha R, Li M, Smola A. ResNeSt: Split-Attention Networks. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW); 19-20 June 2022; New Orleans, LA, USA. IEEE; 2022:2735-45.

- Zhang Y, Li K, Li K, Wang L, Zhong B, Fu Y. Image Super-Resolution Using Very Deep Residual Channel Attention Networks. In: Ferrari V, Hebert M, Sminchisescu C, Weiss Y, editors. Computer Vision - ECCV 2018. Lecture Notes in Computer Science, vol 11211. Cham: Springer International Publishing; 2018:294-310.

- Zhang QL, Yang YB. SA-Net: Shuffle Attention for Deep Convolutional Neural Networks. ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); 06-11 June 2021; Toronto, ON, Canada. IEEE; 2021:2235-9.

- Zhao H, Zhang Y, Liu S, Shi J, Loy CC, Lin D, Jia J. PSANet: Point-wise Spatial Attention Network for Scene Parsing. In: Ferrari V, Hebert M, Sminchisescu C, Weiss Y, editors. Computer Vision - ECCV 2018. Cham: Springer International Publishing; 2018:270-86.

- Zhang Q, Cheng J, Zhou C, Jiang X, Zhang Y, Zeng J, Liu L. PDC-Net: parallel dilated convolutional network with channel attention mechanism for pituitary adenoma segmentation. Front Physiol 2023;14:1259877. [Crossref] [PubMed]

- Zhang S, Liu Z, Chen Y, Jin Y, Bai G. Selective kernel convolution deep residual network based on channel-spatial attention mechanism and feature fusion for mechanical fault diagnosis. ISA Trans 2023;133:369-83. [Crossref] [PubMed]

- Zhang J, Cui Z, Shi Z, Jiang Y, Zhang Z, Dai X, et al. A robust and efficient AI assistant for breast tumor segmentation from DCE-MRI via a spatial-temporal framework. Patterns (N Y) 2023;4:100826. [Crossref] [PubMed]

- Wang H, Cao J, Feng J, Xie Y, Yang D, Chen B. Mixed 2D and 3D convolutional network with multi-scale context for lesion segmentation in breast DCE-MRI. Biomedical Signal Processing and Control 2021;68:102607. [Crossref]

- Chen G, Li L, Dai Y, Zhang J, Yap MH. AAU-Net: An Adaptive Attention U-Net for Breast Lesions Segmentation in Ultrasound Images. IEEE Trans Med Imaging 2023;42:1289-300. [Crossref] [PubMed]

- Zhou Z, Siddiquee MMR, Tajbakhsh N, Liang J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. Deep Learn Med Image Anal Multimodal Learn Clin Decis Support (2018) 2018;11045:3-11. [Crossref] [PubMed]

- Dong G, Wang Z, Chen Y, Sun Y, Song H, Liu L, Cui H. An efficient segment anything model for the segmentation of medical images. Sci Rep 2024;14:19425. [Crossref] [PubMed]

- Wang Z, Lu F. VoxSegNet: Volumetric CNNs for Semantic Part Segmentation of 3D Shapes. IEEE Trans Vis Comput Graph 2020;26:2919-30. [Crossref] [PubMed]

- Çiçek Ö, Abdulkadir A, Lienkamp SS, Brox T, Ronneberger O. 3D U-Net: learning dense volumetric segmentation from sparse annotation. In: Ourselin S, Joskowicz L, Sabuncu MR, Unal G, Wells W, editors. Medical Image Computing and Computer-Assisted Intervention - MICCAI 2016. Cham: Springer International Publishing; 2016:424-32.

- Milletari F, Navab N, Ahmadi SA. V-net: Fully convolutional neural networks for volumetric medical image segmentation. 2016 Fourth International Conference on 3D Vision (3DV); 25-28 October 2016; Stanford, CA, USA. IEEE; 2016:565-71.

- Hou Z, Gao S, Liu J, Yin Y, Zhang L, Han Y, Yan J, Li S. Clinical evaluation of deep learning-based automatic clinical target volume segmentation: a single-institution multi-site tumor experience. Radiol Med 2023;128:1250-61. [Crossref] [PubMed]

- Sun L, Tian H, Ge H, Tian J, Lin Y, Liang C, Liu T, Zhao Y. Cross-attention multi-branch CNN using DCE-MRI to classify breast cancer molecular subtypes. Front Oncol 2023;13:1107850. [Crossref] [PubMed]