Artificial intelligence-assisted tear meniscus height measurement: a multicenter study

Introduction

Dry eye disease (DED) is a multifactorial condition (1). The Tear Film and Ocular Surface Society (TFOS) Dry Eye Workshop (DEWS) II identified the key mechanism of DED as tear film instability, which may lead to damage to the ocular surface with an inflammatory reaction (2). DED is one of the most prevalent diseases in ophthalmology. A global prevalence study by the TFOS DEWS II subcommittee revealed that DED affects 5–50% of the population (3). As the global population continues to age and electronic device usage becomes increasingly widespread, the prevalence of DED has shown a steady rise. This condition, which manifests in varying degrees of severity, causes significant discomfort for those affected. Effective management of DED often requires a combination of treatment strategies (4). Consequently, the need for efficient, accurate, and accessible diagnostic methods has become critically important. Currently, new research is focusing on medications that can effectively prevent and alleviate the signs and symptoms of DED (5,6).

Evaluation of the tear meniscus is currently a widely used and effective approach in the diagnosis of DED. Tear meniscus height (TMH), a key parameter for evaluating the tear meniscus, has been extensively researched in recent years to explore its relationship with DED. In 2007, Uchida et al. used a tear interference device (Tearscope Plus, Keeler, Windsor, UK) to compare TMH in normal individuals and patients with DED, and observed that TMH in patients with DED was generally significantly lower than that in normal individuals (7). In 2010, Yuan et al. used optical coherence tomography (OCT) to measure tear meniscus dynamics in DED patients with aqueous tear deficiency and concluded that TMH in these patients was lower than that in normal individuals under both normal and delayed blinking conditions (8).

Numerous studies have addressed automated TMH measurement. In 2019, Stegmann et al. used a custom OCT system and a threshold-based segmentation algorithm to examine the lower tear meniscus (9). Building on this, in 2020, Stegmann et al. developed a deep learning model trained on tear meniscus images segmented by that threshold-based algorithm (10). Also in 2019, Arita et al. devised and assessed a method for quantitative TMH measurement using the Kowa DR-1α tear interferometer; however, this method requires the operator to select points before calculation (11). In the same year, Yang et al. introduced new automatic image recognition software that uses a threshold-based algorithm for TMH measurement, although image acquisition in this method is somewhat invasive (12). In 2021, Deng et al. proposed a fully convolutional neural network (CNN)-based approach for automatic segmentation of the tear meniscus area and TMH calculation; however, this method used polynomial functions to fit the overall upper and lower boundaries of the tear meniscus, which introduced substantial errors (13). In 2023, Wan et al. designed a tear meniscus segmentation algorithm based on the DeepLabv3 architecture, incorporating elements of the ResNet50, GoogLeNet, and fully convolutional network (FCN) structures (14). In 2024, Borselli et al. used fluorescent-stained images acquired with portable devices to achieve automated TMH measurement (15). In the same year, Nejat et al. used smartphones to acquire data for measuring TMH. The use of smartphones and portable mobile devices is a significant step toward mobile healthcare for this line of research (16). However, there is still room for improvement in performance, and the aforementioned studies do not consider variability across regions or across data modalities.

In this study, we investigate the variability across different regions and data patterns and propose an efficient annotation method that combines edge detection (17,18) with threshold segmentation techniques. We introduce an attention-limiting neural network (ALNN) specifically designed for small-object (19,20) segmentation tasks. By utilizing scale transformation (21,22), ALNN focuses the model’s learning attention, converting small objects into larger ones to achieve precise segmentation. The algorithm’s performance was evaluated by comparing its results with measurements conducted by outpatient specialists during professional examinations. Ultimately, the study developed a robust TMH automated measurement model with strong generalization capabilities across multiple medical centers and two distinct data patterns, providing clinicians with recommendations for selecting the most suitable data collection methods. We present this article in accordance with the TRIPOD reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-24-1948/rc).

Methods

Data acquisition

This retrospective study included a total of 3,894 TMH images collected from five centers across four regions in eastern, southern, and western China (Table 1). All images were obtained between July 2020 and August 2024. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the Institutional Review Board (IRB) of the Eye Hospital, Wenzhou Medical University (IRB approval No. H2023-045-K-42), and the requirement for individual consent was waived owing to the retrospective nature of the analysis. All participating hospitals/institutions were informed of and agreed to the study.

Table 1

| Dataset | Center name | Image type | Quantity | Location |
|---|---|---|---|---|
| Color1 | Eye Hospital, Wenzhou Medical University, Hangzhou Campus | Color | 834 | Eastern China |
| Color2 | Hangzhou Red Cross Hospital | Color | 996 | Eastern China |
| Infrared1 | Shenzhen Eye Institute, Shenzhen Eye Hospital, Jinan University | Infrared | 276 | Southern China |
| Infrared2 | Eye Hospital, Wenzhou Medical University, Wenzhou campus | Infrared | 829 | Eastern China |
| Infrared3 | The First People’s Hospital of Aksu District in Xinjiang | Infrared | 959 | Western China |

All TMH images were captured using the Keratograph 5M (K5M; Oculus, Wetzlar, Germany). Each image had a resolution of 1,024×1,360 pixels and was saved in PNG format. The dataset included both color (RGB mode) and infrared (grayscale mode) images. The hardware used for model training and testing consisted of an Intel(R) Xeon(R) W-2255 CPU @ 3.70 GHz (20 threads) and an NVIDIA RTX A4000 GPU (NVIDIA, Santa Clara, CA, USA). The software environment comprised Ubuntu 22.04.2 LTS (Canonical, London, UK) as the operating system, PyCharm 2023.1.4 Professional Edition (JetBrains, Prague, Czechia) as the development platform, and Python 3.8.17 (Python Software Foundation, Wilmington, DE, USA) as the programming language.

Data annotation

In this study, the upper and lower edges of the stripe-shaped tear film near the lower eyelid margin were defined as the upper and lower boundaries of the tear meniscus, respectively. The center of the innermost Placido ring was defined as the center of the pupil area.

The study obtained 1,830 color images from two different centers. These centers were encoded as Color1 (Eye Hospital, Wenzhou Medical University, Hangzhou Campus) and Color2 (Hangzhou Red Cross Hospital). The 834 images from Color1 were used as the dataset for model development, whereas the 996 images from Color2 were used as the external validation set. Additionally, 2,064 infrared images were collected from three different centers, which were encoded as Infrared1 (Shenzhen Eye Institute, Shenzhen Eye Hospital, Jinan University), Infrared2 (Eye Hospital, Wenzhou Medical University, Wenzhou campus), and Infrared3 (The First People’s Hospital of Aksu District in Xinjiang). The 276 images from Infrared1 and the 829 images from Infrared2 were used as the development dataset, whereas the 959 images from Infrared3 were used as the external validation set.

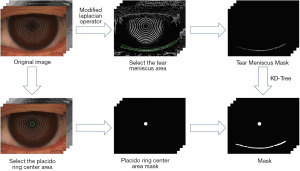

For the development data, tear meniscus masks were generated under the guidance of image gradient information combined with a KD-Tree (23), which improved annotation efficiency. This process is illustrated in Figure 1.

A total of 200 images were randomly selected, and TMH and the horizontal coordinate of the pupil center were measured by two experts. This process was repeated three times, without time constraints, to minimize the influence of prior annotations. The results were used to assess both the agreement between the experts and the repeatability of each expert's measurements. On this basis, the ground truth (GT) TMH annotations for all images were completed jointly by the two experts.

In this study, doctors' measurements refer to TMH values measured by outpatient special inspection doctors (DOC) in routine clinical practice at the participating hospitals. We then compared the accuracy of our model's measurements with that of the DOC measurements.

Gradient information guidance

We designed a modified Laplacian operator (24) to extract gradient information from images. Guided by the gradient information, annotations were generated using ImageJ software (National Institutes of Health, Bethesda, MD, USA) and KD-Tree. The calculation formula is:

$$\mathrm{Out} = \mathrm{Input} \ast L_{m}$$

where Out is the output image, Input is the input image, $L_{m}$ is the modified Laplacian operator, and $\ast$ denotes the convolution operation. In this paper, the parameters of the operator, including its center weight, were chosen empirically.
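The gradient-extraction step can be illustrated with a minimal Python sketch. It assumes a 3×3 Laplacian-style kernel whose eight neighbours are −1 and whose centre weight is a free parameter; the study's actual kernel values are not reproduced here, and `laplacian_kernel` and `gradient_map` are hypothetical helper names. The convolution itself is SciPy's `scipy.ndimage.convolve`.

```python
import numpy as np
from scipy.ndimage import convolve


def laplacian_kernel(center_weight: float = 8.0) -> np.ndarray:
    """Hypothetical 3x3 Laplacian-style kernel: -1 for the 8 neighbours, `center_weight` in the middle.

    With center_weight = 8 the kernel sums to zero (the classic 8-neighbour Laplacian);
    other values add a scaled copy of the input to the edge response.
    """
    kernel = -np.ones((3, 3), dtype=np.float64)
    kernel[1, 1] = center_weight
    return kernel


def gradient_map(image: np.ndarray, center_weight: float = 8.0) -> np.ndarray:
    """Out = Input * L: convolve a 2-D grayscale image with the kernel (reflect padding at borders)."""
    return convolve(image.astype(np.float64), laplacian_kernel(center_weight), mode="reflect")


if __name__ == "__main__":
    # Synthetic image: dark upper half, bright lower half (a horizontal edge between rows 2 and 3).
    img = np.zeros((6, 6))
    img[3:, :] = 1.0
    out = gradient_map(img)
    print(out[:, 0])  # zero away from the edge, -3 and +3 on either side of it
```

With a centre weight of 8, flat regions map to 0 and only intensity transitions, such as the meniscus boundaries, respond; a centre weight of 9 gives the familiar Laplacian sharpening kernel, which is one common way to modify the operator.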

This annotation, guided by image gradient information, is the result of human-computer collaboration, leading us to name the proposed method the Human-Computer Collaboration Method. This approach enhances the efficiency and quality of the generated masks, ensuring strong alignment between the masks and the GT of TMH.
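One plausible way to combine the gradient map with a KD-Tree during annotation is to snap an annotator's rough clicks onto the nearest strong-gradient pixel. The sketch below assumes that workflow; `snap_to_edges` and its `threshold` parameter are hypothetical, and only `scipy.spatial.cKDTree` and its `query` method are real library calls.

```python
import numpy as np
from scipy.spatial import cKDTree


def snap_to_edges(edge_strength: np.ndarray, clicks, threshold: float) -> np.ndarray:
    """Move each annotator click (row, col) to the nearest pixel whose edge strength >= threshold.

    `edge_strength` could be, e.g., the absolute Laplacian response of the image.
    Returns an (N, 2) integer array of snapped (row, col) coordinates.
    """
    rows, cols = np.nonzero(edge_strength >= threshold)
    if rows.size == 0:
        raise ValueError("no pixel reaches the edge threshold")
    edge_points = np.column_stack([rows, cols])
    tree = cKDTree(edge_points)  # spatial index over candidate edge pixels
    _, nearest = tree.query(np.asarray(clicks, dtype=np.float64))  # nearest edge pixel per click
    return edge_points[nearest]


if __name__ == "__main__":
    edges = np.zeros((10, 10))
    edges[4, :] = 1.0  # a synthetic horizontal boundary at row 4
    print(snap_to_edges(edges, [(2, 3), (7, 8)], threshold=0.5))  # both clicks move to row 4
```

The KD-Tree makes each nearest-edge lookup logarithmic in the number of edge pixels, so rebuilding the tree once per image keeps interactive snapping fast.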

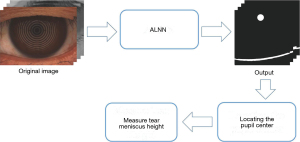

Segmentation models

Segmentation of the tear meniscus and pupil can be regarded as a small-target segmentation task. To improve the stability and accuracy of the segmentation results, this study proposes an ALNN based on CNNs (25) that builds on the classic U-Net (26) architecture. The model consists of three components. The first and third components each contain 9 convolutional blocks, 4 upsampling layers, and 4 downsampling layers. Each convolutional block consists of a 3×3 convolutional layer with a stride of 1, a batch normalization layer, a dropout layer, and a LeakyReLU activation layer, and this sequence is stacked twice. Each upsampling block uses nearest-neighbor interpolation together with a 1×1 convolutional layer with a stride of 1. Each downsampling block consists of a 3×3 convolutional layer, a batch normalization layer, and an activation layer stacked in sequence. The final output is produced by a 3×3 convolutional layer followed by a Sigmoid layer. Reflect padding is used in the convolutional and downsampling blocks. The third component also includes a cascading structure designed to enhance the previously extracted feature information.

The second component is a region of interest (ROI) detection layer that connects the first and third components. Based on the output of the first component, it identifies a square ROI that contains the complete pupil area and the vertically intact tear meniscus region. The ROI extracted from the original image and its label is then fed into the third component for training. The network structure used in this study is illustrated in Figure 2, and the workflow of the ROI detection layer is shown in Figure 3.
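The text does not spell out how the ROI detection layer derives the square box from the first-stage output. A minimal NumPy sketch of one reading follows: take the bounding box of all foreground pixels (pupil and tear meniscus), expand it to a square using its longer side plus a hypothetical `margin`, shift it to stay inside the image, and apply the same crop to the image and its label. `square_roi` and `crop_square` are illustrative names.

```python
import numpy as np


def square_roi(coarse_mask: np.ndarray, margin: int = 0):
    """Return (top, left, side) of a square box enclosing all foreground pixels of a first-stage mask.

    The box is centred on the foreground bounding box, padded by `margin` pixels on each side,
    and shifted (not shrunk) so that it stays inside the image.
    """
    rows, cols = np.nonzero(coarse_mask)
    if rows.size == 0:
        raise ValueError("first-stage mask is empty")
    y0, y1 = rows.min(), rows.max() + 1  # half-open bounding box
    x0, x1 = cols.min(), cols.max() + 1
    height, width = coarse_mask.shape
    side = max(y1 - y0, x1 - x0) + 2 * margin
    side = int(min(side, height, width))  # a square cannot exceed the shorter image side
    cy, cx = (y0 + y1) // 2, (x0 + x1) // 2
    top = int(np.clip(cy - side // 2, 0, height - side))
    left = int(np.clip(cx - side // 2, 0, width - side))
    return top, left, side


def crop_square(array: np.ndarray, top: int, left: int, side: int) -> np.ndarray:
    """Apply the same square crop to an image or its label (works for HxW and HxWxC arrays)."""
    return array[top:top + side, left:left + side]
```

The cropped image and label would then be resized to the third component's input size, which is where the scale transformation enlarges the small targets; that resizing step is omitted here.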

The 834 color images from Color1 and 1,105 infrared images (276 from Infrared1 and 829 from Infrared2) were used as the development set, which was divided into training, validation, and test sets in a ratio of 5:2:3. The training and validation sets were subjected to identical data augmentation procedures. The remaining datasets (Color2 and Infrared3) were used as external validation sets. The workflow of the artificial intelligence (AI) algorithm in this paper is shown in Figure 4.
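A 5:2:3 split of the development set can be sketched as follows, assuming a seeded random shuffle and rounding of the training and validation sizes; the study's seed and rounding rule are not stated, and `split_5_2_3` is a hypothetical helper.

```python
import random


def split_5_2_3(image_ids, seed: int = 0):
    """Shuffle IDs and split them into training/validation/test sets in a 5:2:3 ratio.

    Training and validation sizes are rounded; the test set takes the remainder.
    The seed and rounding rule are assumptions, not details reported in the study.
    """
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)
    n_train = round(len(ids) * 5 / 10)
    n_val = round(len(ids) * 2 / 10)
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]
    return train, val, test


if __name__ == "__main__":
    train, val, test = split_5_2_3(range(834))  # e.g. the 834 Color1 images
    print(len(train), len(val), len(test))      # 417 167 250
```

If several images come from the same patient or eye, splitting on that identifier instead of the image index keeps related images out of different subsets.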

Loss function and optimizer

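The loss function in this study combines binary cross-entropy loss (BCELoss), Dice loss (DiceLoss), and matrix norm loss (MatrixLoss). BCELoss fits the per-pixel predictions to the training labels, DiceLoss directly optimizes the overlap between the predicted and annotated regions, which benefits small targets that occupy few pixels, and MatrixLoss constrains the scale and complexity of the model parameters, improving the model’s stability and generalization ability. The loss function is:

$$\mathcal{L} = \mathcal{L}_{\mathrm{BCE}} + \mathcal{L}_{\mathrm{Dice}} + \mathcal{L}_{\mathrm{Matrix}}$$

$$\mathcal{L}_{\mathrm{BCE}} = -\operatorname{mean}\left[ y_{ij} \log \hat{y}_{ij} + \left(1 - y_{ij}\right) \log\left(1 - \hat{y}_{ij}\right) \right]$$

$$\mathcal{L}_{\mathrm{Dice}} = 1 - \frac{2\left|Y \cap Y'\right|}{\left|Y\right| + \left|Y'\right|}$$

where mean denotes the mean over all pixels, $y_{ij}$ is the value of the annotated mask at coordinate $(i, j)$, $\hat{y}_{ij}$ is the value of the model’s predicted image at coordinate $(i, j)$, Y is the ground-truth mask, Y' is the model’s output prediction mask, $|Y|$ is the number of non-zero elements in Y, $|Y'|$ is the number of non-zero elements in Y', $|Y \cap Y'|$ is the number of intersecting non-zero elements between Y and Y', and $\mathcal{L}_{\mathrm{Matrix}}$ is the matrix norm penalty on the model parameters.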
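The three loss terms can be checked numerically with the NumPy sketch below. It assumes a soft Dice formulation (sums of probabilities in place of counts of non-zero elements), a Frobenius-norm penalty for MatrixLoss with a hypothetical coefficient `coeff`, and an unweighted sum of the terms; during training, the same expressions would be written in the deep learning framework so that gradients flow through them.

```python
import numpy as np


def bce_loss(y_true, y_pred, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy; predictions are clipped to avoid log(0)."""
    y = np.asarray(y_true, dtype=np.float64)
    p = np.clip(np.asarray(y_pred, dtype=np.float64), eps, 1.0 - eps)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))


def dice_loss(y_true, y_pred, smooth: float = 1e-6) -> float:
    """Soft Dice loss 1 - 2|Y n Y'| / (|Y| + |Y'|), with sums standing in for counts."""
    y = np.asarray(y_true, dtype=np.float64)
    p = np.asarray(y_pred, dtype=np.float64)
    intersection = np.sum(y * p)
    return float(1.0 - (2.0 * intersection + smooth) / (np.sum(y) + np.sum(p) + smooth))


def matrix_norm_loss(weights, coeff: float = 1e-4) -> float:
    """Hypothetical parameter penalty: coeff * sum of Frobenius norms of the weight arrays."""
    return float(coeff * sum(np.linalg.norm(w) for w in weights))


def total_loss(y_true, y_pred, weights, coeff: float = 1e-4) -> float:
    """Unweighted sum of BCE, Dice, and the matrix-norm penalty."""
    return bce_loss(y_true, y_pred) + dice_loss(y_true, y_pred) + matrix_norm_loss(weights, coeff)
```

A prediction of 0.5 everywhere on a half-foreground mask gives a BCE of ln 2 and a Dice loss of about 0.5, while a perfect prediction drives both terms to about 0, leaving only the parameter penalty.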

The model was trained with the Adam optimizer, whose adaptive per-parameter learning rates and momentum support rapid and stable training of deep neural networks. The parameters were as follows:

Learning rate (lr) = 0.0001. Adam estimates the first moment (mean) and the second moment (uncentered variance) of the gradient with exponentially decaying moving averages, whose decay rates were β1 = 0.9 and β2 = 0.999, respectively. To ensure numerical stability and avoid division by zero, ε = 1e−8 was added to the denominator.
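These settings map onto the standard Adam update rule. A minimal NumPy version of a single step, using the reported lr, β1, β2, and ε, is shown below for illustration; `adam_step` is a hypothetical helper, not the study's training code.

```python
import numpy as np


def adam_step(theta, grad, m, v, t, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update with the hyperparameters reported in the text.

    m, v: running first- and second-moment estimates; t: 1-based step counter.
    Returns the updated (theta, m, v).
    """
    m = beta1 * m + (1.0 - beta1) * grad          # moving average of gradients
    v = beta2 * v + (1.0 - beta2) * grad ** 2     # moving average of squared gradients
    m_hat = m / (1.0 - beta1 ** t)                # bias correction for zero initialisation
    v_hat = v / (1.0 - beta2 ** t)
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)  # eps keeps the denominator non-zero
    return theta, m, v
```

On the first step the bias-corrected ratio is approximately the sign of the gradient, so each parameter moves by roughly lr = 1e−4. In PyTorch, for example, the same settings would be written `torch.optim.Adam(model.parameters(), lr=1e-4, betas=(0.9, 0.999), eps=1e-8)`; the paper does not state which framework was used.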

Calculation of TMH

TMH was defined as the distance between the upper and lower boundaries of the tear meniscus directly below the pupil center. Deng et al. (13) demonstrated that when the length of the measurement section ranges from 0.5 to 4 mm, the TMH measurements are highly robust. In this study, a mask consisting of the pupil and tear meniscus areas was generated using our model (ALNN). The pupil and tear meniscus are distinguished using positional information. The pupil center is precisely located via Hough circle detection, enabling the subsequent calculation of TMH.
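Separating the pupil from the tear meniscus by position can be sketched with SciPy connected-component labelling, assuming the model outputs a single binary foreground mask. The sketch takes the lower of the two largest components as the tear meniscus and, as a swapped-in simplification, estimates the pupil-center x-coordinate from the pupil component's centroid instead of the Hough circle detection used in the study (OpenCV's `cv2.HoughCircles` is one available Hough implementation). `split_pupil_and_meniscus` is a hypothetical name.

```python
import numpy as np
from scipy import ndimage


def split_pupil_and_meniscus(mask: np.ndarray):
    """Split a binary model output into pupil and tear meniscus masks by vertical position.

    Keeps the two largest connected components; the one whose centroid lies lower in the
    image (larger row index) is taken as the tear meniscus. The pupil-centre x-coordinate is
    approximated by the pupil component's centroid, a stand-in for a Hough circle fit.
    Returns (pupil_mask, meniscus_mask, pupil_center_x).
    """
    foreground = mask > 0
    labeled, n = ndimage.label(foreground)
    if n < 2:
        raise ValueError("expected at least two foreground components")
    weights = foreground.astype(np.float64)
    sizes = np.asarray(ndimage.sum(weights, labeled, index=np.arange(1, n + 1)))
    largest_two = (np.argsort(sizes)[-2:] + 1).tolist()  # labels of the two largest components
    (row_a, col_a), (row_b, col_b) = ndimage.center_of_mass(weights, labeled, index=largest_two)
    if row_a > row_b:  # component a lies lower in the image, so it is the tear meniscus
        meniscus_label, pupil_label, pupil_cx = largest_two[0], largest_two[1], col_b
    else:
        pupil_label, meniscus_label, pupil_cx = largest_two[0], largest_two[1], col_a
    return labeled == pupil_label, labeled == meniscus_label, float(pupil_cx)
```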

As shown in Figure 5, the measurement section in this study is 10 pixels long (0.115 mm) and is taken from the tear meniscus region directly below the pupil center.

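First, let TMR denote the set of coordinates in the tear meniscus region within the measurement section:

$$\mathrm{TMR} = \left\{ (x_i, y) \;\middle|\; M(x_i, y) \neq 0,\ PC - \tfrac{d}{2} \le x_i < PC + \tfrac{d}{2} \right\}$$

where $M(x_i, y)$ is the value of the tear meniscus mask at coordinate $(x_i, y)$, $x_i$ is the x-coordinate of the i-th column of the measurement section, PC is the x-coordinate of the pupil center, and d is the length of the measurement section in pixels.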

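For each column of the measurement section, the difference between the highest and lowest y-coordinates of the tear meniscus was calculated, and these differences were averaged to determine TMH. This is the method commonly used in the literature to measure TMH (13,14), and the formula is:

$$\mathrm{TMH} = \operatorname{mean}_{i = 1, \ldots, d}\left( \max_{(x_i, y) \in \mathrm{TMR}} y - \min_{(x_i, y) \in \mathrm{TMR}} y \right)$$

where mean denotes the average over the d columns, d is the length of the measurement section, max denotes the maximum value, and min denotes the minimum value.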
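The TMR and TMH definitions translate into a short NumPy routine. It assumes a boolean tear meniscus mask and the pupil-center x-coordinate as inputs, a 10-pixel section centred on that coordinate, and a scale of 0.115 mm per 10 pixels taken from the text; skipping columns with no meniscus pixels is an assumption of this sketch, and `tear_meniscus_height` is a hypothetical name.

```python
import numpy as np

MM_PER_PIXEL = 0.115 / 10  # from the text: a 10-pixel section corresponds to 0.115 mm


def tear_meniscus_height(meniscus: np.ndarray, pupil_cx: float, d: int = 10,
                         mm_per_pixel: float = MM_PER_PIXEL) -> float:
    """TMH in mm: mean over the d columns centred below the pupil of (max row - min row).

    Columns of the section that contain no meniscus pixels are skipped.
    """
    start = int(round(pupil_cx - d / 2))
    heights = []
    for x in range(max(start, 0), min(start + d, meniscus.shape[1])):
        rows = np.nonzero(meniscus[:, x])[0]  # y-coordinates of TMR in column x
        if rows.size:
            heights.append(rows.max() - rows.min())
    if not heights:
        raise ValueError("no tear meniscus pixels in the measurement section")
    return float(np.mean(heights)) * mm_per_pixel
```

Following the formula, the per-column height is max − min in pixels, so a meniscus spanning rows 70 to 74 contributes 4 pixels (0.046 mm) per column.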

Results

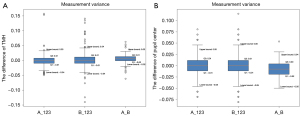

Analysis of annotations

Two experts each measured TMH and the horizontal coordinate of the pupil center three times on the same set of 200 images. Figure 6 shows the intra- and inter-expert measurement deviations as box plots. The Kolmogorov-Smirnov test indicated that all data were non-normally distributed (P<0.001). The reliability of the TMH measurements across all experts was assessed with intraclass correlation coefficients for intra- and inter-expert agreement (ICC = 0.99, P<0.001), and variability was analyzed with the Friedman test (P<0.001). These results indicate that the measurements had good reliability. As shown in Figure 6, the differences in TMH measurements between experts typically ranged from −0.04 to 0.04 mm, and the pupil center measurements of the two experts fell within −0.05 to 0.05 mm, indicating stable measurements, consistent with a previous study (13).
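The text does not state which ICC form was used. As an illustration, the sketch below computes ICC(2,1) (two-way random effects, absolute agreement, single measurement, after Shrout and Fleiss) from a table with one row per image and one column per expert or session; `icc_2_1` is a hypothetical helper.

```python
import numpy as np


def icc_2_1(ratings) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    `ratings` has shape (n_images, k_raters_or_sessions).
    """
    x = np.asarray(ratings, dtype=np.float64)
    n, k = x.shape
    grand = x.mean()
    row_means = x.mean(axis=1)
    col_means = x.mean(axis=0)
    ms_rows = k * np.sum((row_means - grand) ** 2) / (n - 1)  # between-image mean square
    ms_cols = n * np.sum((col_means - grand) ** 2) / (k - 1)  # between-rater mean square
    resid = x - row_means[:, None] - col_means[None, :] + grand
    ms_err = np.sum(resid ** 2) / ((n - 1) * (k - 1))        # residual mean square
    return float((ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n))
```

A constant offset between raters leaves the ranking intact but lowers this absolute-agreement ICC, which is why the choice of form matters. For the repeated-measures comparison, `scipy.stats.friedmanchisquare(*columns)` takes one sequence per rater or session.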

Networks performance

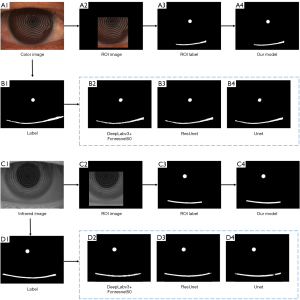

This study evaluated the segmentation results of the model on the development set using four metrics: mean intersection over union (MIoU), recall, precision, and F1-score. Table 2 shows the segmentation results of our model and three other network models, obtained under the same conditions, on the color image test set and the infrared image test set; values in bold indicate the best result for each metric. Our model outperforms the other models on most metrics for both the color and infrared datasets, demonstrating superior segmentation performance. Figure 7 presents the original image, the segmentation labels, the outputs of the different networks, the ROI automatically constrained by the attention mechanism of ALNN, the corresponding ROI labels, and the results generated by our model (ALNN).

Table 2

| Method | Color MIoU | Color recall | Color precision | Color F1-score | Infrared MIoU | Infrared recall | Infrared precision | Infrared F1-score |
|---|---|---|---|---|---|---|---|---|
| Our model | **0.9578** | **0.9648** | **0.9526** | **0.9576** | **0.9290** | **0.9150** | 0.9388 | **0.9249** |
| U-Net (26) | 0.9319 | 0.9264 | 0.9320 | 0.9274 | 0.9283 | 0.9056 | **0.9413** | 0.9209 |
| ResUnet (27) | 0.9185 | 0.9141 | 0.9134 | 0.9120 | 0.9108 | 0.8944 | 0.9169 | 0.9031 |
| DeepLabv3 (28) + fcnresnet50 (29) | 0.8826 | 0.8472 | 0.8954 | 0.8684 | 0.8821 | 0.8499 | 0.8941 | 0.8688 |

MIoU, mean intersection over union.
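For reference, the four segmentation metrics can be computed from binary masks as below. This sketch assumes pixel-wise counts per image, MIoU as the mean of foreground and background IoU, and the convention that an empty denominator scores 1.0; how the study averaged over classes and images is not specified, and `segmentation_metrics` is a hypothetical name.

```python
import numpy as np


def _ratio(num, den) -> float:
    # Convention for this sketch: an empty denominator (e.g. both masks empty) scores 1.0.
    return float(num / den) if den else 1.0


def segmentation_metrics(gt, pred) -> dict:
    """Pixel-wise MIoU (mean of foreground and background IoU), recall, precision, and F1."""
    g = np.asarray(gt).astype(bool)
    p = np.asarray(pred).astype(bool)
    tp = np.sum(g & p)    # foreground predicted as foreground
    fp = np.sum(~g & p)   # background predicted as foreground
    fn = np.sum(g & ~p)   # foreground that was missed
    tn = np.sum(~g & ~p)  # background predicted as background
    iou_fg = _ratio(tp, tp + fp + fn)
    iou_bg = _ratio(tn, tn + fp + fn)
    return {
        "MIoU": (iou_fg + iou_bg) / 2,
        "recall": _ratio(tp, tp + fn),
        "precision": _ratio(tp, tp + fp),
        "F1": _ratio(2 * tp, 2 * tp + fp + fn),  # equals 2PR/(P+R) whenever P+R > 0
    }
```

Writing F1 as 2TP/(2TP + FP + FN) avoids a 0/0 when a prediction misses the target entirely.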

Comparison between AI and DOC

Table 3 presents the descriptive statistics of TMH measurements by DOC and AI, along with the results of correlation tests and non-parametric tests comparing them to the GT. The correlation and non-parametric tests were conducted using Spearman’s rank correlation test (with P<0.05 considered statistically significant) and the Mann-Whitney U test, respectively. The normality of all data was assessed using the Kolmogorov-Smirnov test, which indicated a non-normal distribution (P<0.001). TMH measurements by AI and DOC showed significant correlations with the GT across the datasets—Color1, Color2, Infrared1, Infrared2, and Infrared3—confirmed by the Spearman’s rank correlation test (P<0.001). The Mann-Whitney U test revealed no significant statistical difference between DOC measurements and GT in the Color1 and Infrared3 datasets (P>0.05), whereas significant differences were observed in the other datasets (P<0.05). The AI measurements showed a significant statistical difference with GT only in the Color2 dataset (P<0.05), with no significant differences in the remaining datasets.

Table 3

| Dataset | Source | Median (mm) | IQR (mm) | Spearman r | Spearman P value | Mann-Whitney U test, P value |
|---|---|---|---|---|---|---|
| Color1 | GT | 0.20 | 0.11 | | | |
| | AI | 0.21 | 0.12 | 0.935 | <0.001* | 0.0628 |
| | DOC | 0.22 | 0.12 | 0.803 | <0.001* | 0.7497 |
| Color2 | GT | 0.26 | 0.14 | | | |
| | AI | 0.25 | 0.14 | 0.957 | <0.001* | <0.001* |
| | DOC | 0.28 | 0.13 | 0.872 | <0.001* | <0.05* |
| Infrared1&2 | GT | 0.25 | 0.10 | | | |
| | AI | 0.25 | 0.11 | 0.855 | <0.001* | 0.2707 |
| | DOC | 0.23 | 0.10 | 0.762 | <0.001* | <0.001* |
| Infrared3 | GT | 0.25 | 0.11 | | | |
| | AI | 0.25 | 0.11 | 0.803 | <0.001* | 0.7497 |
| | DOC | 0.24 | 0.10 | 0.742 | <0.001* | 0.2230 |

TMH values are provided in mm and described by median value and IQR. Infrared1&2 refers to the Infrared1 and Infrared2 datasets used together as the training and internal test sets; GT represents the GT of the TMH; AI represents the AI measurement results; and DOC represents the measurements by clinicians from the specialized examination department. The r value represents the Spearman correlation coefficient between the measurements and the GT. *, P<0.05 is considered a significant difference. AI, artificial intelligence; DOC, outpatient special inspection doctor; IQR, interquartile range; GT, ground truth; TMH, tear meniscus height.
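The Table 3 statistics can be computed for any pair of measurement vectors with SciPy, as in the minimal sketch below. `compare_with_gt` is a hypothetical wrapper; `scipy.stats.spearmanr` and `scipy.stats.mannwhitneyu` are the library functions, and the demo values are illustrative, not study data.

```python
import numpy as np
from scipy.stats import mannwhitneyu, spearmanr


def compare_with_gt(measured, gt) -> dict:
    """Spearman rank correlation and two-sided Mann-Whitney U test of measurements versus GT."""
    measured = np.asarray(measured, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    r, p_corr = spearmanr(measured, gt)
    _, p_mwu = mannwhitneyu(measured, gt, alternative="two-sided")
    return {"spearman_r": float(r), "spearman_p": float(p_corr), "mannwhitney_p": float(p_mwu)}


if __name__ == "__main__":
    gt = [0.10, 0.20, 0.30, 0.40, 0.50]  # illustrative TMH values in mm
    ai = [0.11, 0.19, 0.32, 0.41, 0.39]
    print(compare_with_gt(ai, gt))
```

The sketch mirrors the tests named in the text. Because AI, DOC, and GT values are measured on the same images, `scipy.stats.wilcoxon` is the paired counterpart to the Mann-Whitney U test.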

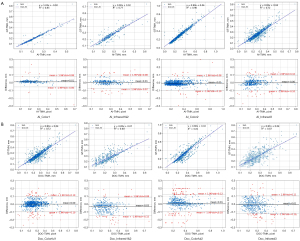

All measurement results were compared directly using linear regression (Figure 8). Both AI_TMH and DOC_TMH showed high consistency across all datasets. Figure 8 also shows Bland-Altman plots comparing the AI and DOC measurements with GT across the datasets. The mean error of AI measurements across the four datasets ranged from −0.01 to 0.01 mm, with 91.13% (1,007/1,105) to 97.80% (974/996) of points falling within the 95% limits of agreement. For DOC, the mean error ranged from −0.03 to 0.01 mm, with 91.06% (907/996) to 95.2% (238/250) of points within the 95% limits of agreement.
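The Bland-Altman quantities in the text (the mean error and the proportion of points inside the 95% bounds) can be sketched as follows, assuming limits defined as the mean difference ± 1.96 sample standard deviations of the differences; `bland_altman` is a hypothetical helper.

```python
import numpy as np


def bland_altman(measured, gt):
    """Return bias, 95% limits of agreement, and the fraction of differences inside those limits.

    Differences are measured - gt; limits are bias +/- 1.96 x the sample SD of the differences.
    """
    diff = np.asarray(measured, dtype=np.float64) - np.asarray(gt, dtype=np.float64)
    bias = diff.mean()
    sd = diff.std(ddof=1)  # sample standard deviation of the differences
    lower, upper = bias - 1.96 * sd, bias + 1.96 * sd
    inside = np.mean((diff >= lower) & (diff <= upper))
    return float(bias), (float(lower), float(upper)), float(inside)
```

The third return value corresponds to proportions such as 1,007/1,105 (91.13%) reported above.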

Discussion

DED is a prevalent eye disease that significantly impairs patients’ visual function, and its detrimental effects should not be underestimated (30). Research indicates that TMH is a key parameter for assessing the tear meniscus and is important in diagnosing DED. However, TMH measurements by doctors may be influenced by various factors, and there is no standardized method for TMH measurement. Stegmann et al. (9,10) and Yang et al. (12) used OCT and fluorescein-stained images; data acquisition with these modalities is considerably more complex and costly, and the calculations relied on traditional segmentation approaches (31). In contrast, this study used more accessible anterior segment photography and proposes a gradient-guided annotation method, the Human-Computer Collaboration Method, which helps to reduce the subjectivity and instability of purely manual annotation.

This study employed a U-Net-based deep learning framework, an architecture with well-established advantages in medical image segmentation, and built on it a deep learning model with constrained attention. The first component of the model, together with the ROI detection layer, extracts ROIs, substantially reducing the model’s attention to irrelevant information and thereby enhancing its discriminative ability. In addition, our model captures multi-scale features (19,20), enabling precise segmentation of small targets (21,22), while different padding strategies preserve high-resolution details. On the development dataset, the model achieved MIoUs of 0.9578 on the color dataset and 0.9290 on the infrared dataset. The correlation coefficients between the measured TMH and the GT were 0.935 (Color1) and 0.855 (Infrared1&2), demonstrating the strong performance of our model in both segmentation and TMH measurement. For TMH, we also tested the model on two external validation sets: the correlation coefficients between the AI measurements and the GT were 0.957 (Color2) and 0.803 (Infrared3), with mean deviations ranging from −0.01 to 0.01 mm. For the DOC measurements, the correlation coefficients with the GT were 0.872 and 0.742, with mean deviations ranging from −0.03 to 0.01 mm. Comparing the AI measurements with those of DOCs (13) showed that the range of mean measurement error was smaller for AI than for DOCs. These results suggest that the AI measurements outperformed those of DOCs, consistent with the view that manual measurements are more susceptible to various factors and therefore more prone to error.

During the research process, it was found that the color image dataset was more advantageous for accurate measurement of TMH. However, capturing color images requires the use of white light, which may stimulate tear secretion. Therefore, it is recommended to first use the infrared mode for initial focusing, and then switch to white light for rapid focusing and capturing TMH images, in order to reduce tear stimulation and minimize errors. We also observed that some images exhibited abnormally wide regions on both sides of the tear film. This variability may be associated with conditions such as lid-parallel conjunctival folds and conjunctivochalasis. Automating the segmentation and measurement of TMH can facilitate research into the correlation between different tear meniscus shapes and ocular diseases (32), support the investigation of drug efficacy (5), and guide subsequent treatment strategies (33). By integrating TMH with other related indicators, a more comprehensive multimodal AI system can be developed for the auxiliary diagnosis of DED. The significance of this work lies in providing recommendations for data collection and measurement standardization, enhancing the reliability of indicators, and offering practical support for the clinical diagnosis, evaluation, classification, and treatment guidance of DED.

However, there are still some limitations in our study: (I) although the data were collected from multiple centers, the overall quantity remains insufficient. In particular, there is a relative scarcity of data with TMHs greater than 0.4 mm, which results in a lack of robustness in the model when handling such cases. (II) We recognize that the findings may be influenced by the specific characteristics of the Keratograph 5M (Oculus, Wetzlar, Germany), which limits the wider application of this model. (III) For cases with abnormal tear meniscus shape or excessively high TMH, we found associations with certain diseases, such as conjunctivochalasis and eyelid-parallel conjunctival folds. However, the current model is unable to provide more detailed and accurate alerts and recommendations.

Conclusions

In this study, we proposed a two-stage deep learning approach that integrates data acquired through K5M in two different modes and incorporates a gradient-guided human-computer collaborative method for annotation. The model demonstrated good generalization performance on both the development and external datasets, with AI-measured TMH showing a high correlation with the GT. Compared to infrared images, color images are more conducive to accurate TMH measurement by both DOC and AI. This finding can guide clinicians in better capturing ocular image data, leading to more efficient and accurate TMH measurements, and ultimately enhancing the efficiency of clinical dry eye screening.

Acknowledgments

None.

Footnote

Reporting Checklist: The authors have completed the TRIPOD reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-24-1948/rc

Funding: This research was funded by

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-24-1948/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the Institutional Review Board (IRB) of the Eye Hospital, Wenzhou Medical University (IRB approval No. H2023-045-K-42), and the requirement for individual consent was waived owing to the retrospective nature of the analysis. All participating hospitals/institutions were informed of and agreed to the study.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Craig JP, Nichols KK, Akpek EK, Caffery B, Dua HS, Joo CK, Liu Z, Nelson JD, Nichols JJ, Tsubota K, Stapleton F. TFOS DEWS II Definition and Classification Report. Ocul Surf 2017;15:276-83.

- Bron AJ, de Paiva CS, Chauhan SK, Bonini S, Gabison EE, Jain S, Knop E, Markoulli M, Ogawa Y, Perez V, Uchino Y, Yokoi N, Zoukhri D, Sullivan DA. TFOS DEWS II pathophysiology report. Ocul Surf 2017;15:438-510.

- Stapleton F, Alves M, Bunya VY, Jalbert I, Lekhanont K, Malet F, Na KS, Schaumberg D, Uchino M, Vehof J, Viso E, Vitale S, Jones L. TFOS DEWS II Epidemiology Report. Ocul Surf 2017;15:334-65.

- Jones L, Downie LE, Korb D, Benitez-Del-Castillo JM, Dana R, Deng SX, Dong PN, Geerling G, Hida RY, Liu Y, Seo KY, Tauber J, Wakamatsu TH, Xu J, Wolffsohn JS, Craig JP. TFOS DEWS II Management and Therapy Report. Ocul Surf 2017;15:575-628.

- Ballesteros-Sánchez A, Sánchez-González JM, Tedesco GR, Rocha-de-Lossada C, Murano G, Spinelli A, Mazzotta C, Borroni D. Evaluating GlicoPro Tear Substitute Derived from Helix aspersa Snail Mucus in Alleviating Severe Dry Eye Disease: A First-in-Human Study on Corneal Esthesiometry Recovery and Ocular Pain Relief. J Clin Med 2024;13:1618.

- Borroni D, Mazzotta C, Rocha-de-Lossada C, Sánchez-González JM, Ballesteros-Sanchez A, García-Lorente M, Zamorano-Martín F, Spinelli A, Schiano-Lomoriello D, Tedesco GR. Dry Eye Para-Inflammation Treatment: Evaluation of a Novel Tear Substitute Containing Hyaluronic Acid and Low-Dose Hydrocortisone. Biomedicines 2023;11:3277.

- Uchida A, Uchino M, Goto E, Hosaka E, Kasuya Y, Fukagawa K, Dogru M, Ogawa Y, Tsubota K. Noninvasive interference tear meniscometry in dry eye patients with Sjögren syndrome. Am J Ophthalmol 2007;144:232-7.

- Yuan Y, Wang J, Chen Q, Tao A, Shen M, Shousha MA. Reduced tear meniscus dynamics in dry eye patients with aqueous tear deficiency. Am J Ophthalmol 2010;149:932-938.e1.

- Stegmann H, Aranha Dos Santos V, Messner A, Unterhuber A, Schmidl D, Garhöfer G, Schmetterer L, Werkmeister RM. Automatic assessment of tear film and tear meniscus parameters in healthy subjects using ultrahigh-resolution optical coherence tomography. Biomed Opt Express 2019;10:2744-56.

- Stegmann H, Werkmeister RM, Pfister M, Garhöfer G, Schmetterer L, Dos Santos VA. Deep learning segmentation for optical coherence tomography measurements of the lower tear meniscus. Biomed Opt Express 2020;11:1539-54.

- Arita R, Yabusaki K, Hirono T, Yamauchi T, Ichihashi T, Fukuoka S, Morishige N. Automated Measurement of Tear Meniscus Height with the Kowa DR-1α Tear Interferometer in Both Healthy Subjects and Dry Eye Patients. Invest Ophthalmol Vis Sci 2019;60:2092-101.

- Yang J, Zhu X, Liu Y, Jiang X, Fu J, Ren X, Li K, Qiu W, Li X, Yao J. TMIS: a new image-based software application for the measurement of tear meniscus height. Acta Ophthalmol 2019;97:e973-80.

- Deng X, Tian L, Liu Z, Zhou Y, Jie Y. A deep learning approach for the quantification of lower tear meniscus height. Biomedical Signal Processing and Control 2021;68:102655.

- Wan C, Hua R, Guo P, Lin P, Wang J, Yang W, Hong X. Measurement method of tear meniscus height based on deep learning. Front Med (Lausanne) 2023;10:1126754.

- Borselli M, Toro MD, Rossi C, Taloni A, Khemlani R, Nakayama S, Nishimura H, Shimizu E, Scorcia V, Giannaccare G. Feasibility of Tear Meniscus Height Measurements Obtained with a Smartphone-Attachable Portable Device and Agreement of the Results with Standard Slit Lamp Examination. Diagnostics (Basel) 2024;14:316.

- Nejat F, Eghtedari S, Alimoradi F. Next-Generation Tear Meniscus Height Detecting and Measuring Smartphone-Based Deep Learning Algorithm Leads in Dry Eye Management. Ophthalmol Sci 2024;4:100546.

- Ma P, Yuan H, Chen YY, Chen HT, Weng GR, Liu Y. A Laplace operator-based active contour model with improved image edge detection performance. Digital Signal Processing 2024;151:104550.

- Orhei C, Bogdan V, Bonchis C, Vasiu R. Dilated Filters for Edge-Detection Algorithms. Appl Sci 2021;11:10716.

- Cheng G, Yuan X, Yao X, Yan K, Zeng Q, Xie X, Han J. Towards Large-Scale Small Object Detection: Survey and Benchmarks. IEEE Trans Pattern Anal Mach Intell 2023;45:13467-88.

- Sang S, Zhou Y, Islam MT, Xing L. Small-Object Sensitive Segmentation Using Across Feature Map Attention. IEEE Trans Pattern Anal Mach Intell 2023;45:6289-306.

- Zheng Z, Zhong Y, Wang J, Ma A. Foreground-aware relation network for geospatial object segmentation in high spatial resolution remote sensing imagery. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2020:4096-105.

- Zhang J, Lei J, Xie W, Fang Z, Li Y, Du Q. SuperYOLO: Super Resolution Assisted Object Detection in Multimodal Remote Sensing Imagery. IEEE Transactions on Geoscience and Remote Sensing 2023;61:1-15.

- Moore AW. An introductory tutorial on kd-trees. IEEE Colloquium on Quantum Computing: Theory, Applications & Implications; 1991.

- Wang X. Laplacian operator-based edge detectors. IEEE Trans Pattern Anal Mach Intell 2007;29:886-90.

- Huang G, Liu Z, Van Der Maaten L, Weinberger KQ. Densely Connected Convolutional Networks. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2017:4700-8.

- Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. Medical Image Computing and Computer-Assisted Intervention – MICCAI; 2015:234-41.

- He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016:770-8.

- Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z. Rethinking the Inception Architecture for Computer Vision. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016:2818-26.

- Long J, Shelhamer E, Darrell T. Fully convolutional networks for semantic segmentation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2015:3431-40.

- The epidemiology of dry eye disease: report of the Epidemiology Subcommittee of the International Dry Eye WorkShop (2007). Ocul Surf 2007;5:93-107.

- Tung CI, Perin AF, Gumus K, Pflugfelder SC. Tear meniscus dimensions in tear dysfunction and their correlation with clinical parameters. Am J Ophthalmol 2014;157:301-310.e1.

- García-Resúa C, Santodomingo-Rubido J, Lira M, Giraldez MJ, Vilar EY. Clinical assessment of the lower tear meniscus height. Ophthalmic Physiol Opt 2009;29:487-96.

- Ballesteros-Sánchez A, Sánchez-González JM, Borrone MA, Borroni D, Rocha-de-Lossada C. The Influence of Lid-Parallel Conjunctival Folds and Conjunctivochalasis on Dry Eye Symptoms with and Without Contact Lens Wear: A Review of the Literature. Ophthalmol Ther 2024;13:651-70.