Development and validation of the Artificial Intelligence-Proliferative Vitreoretinopathy (AI-PVR) Insight system for deep learning-based diagnosis and postoperative risk prediction in proliferative vitreoretinopathy using multimodal fundus imaging

Introduction

The aging of the global population and the rising prevalence of myopia are contributing to an increase in retinal detachment (1-3), a condition in which the sensory retina separates from the underlying retinal pigment epithelium. The retina converts light into electrical signals that are transmitted to the central nervous system, and its detachment disrupts this function and impairs vision. Primary rhegmatogenous retinal detachment (RRD) requires early surgical intervention to prevent vision loss or blindness (4). Proliferative vitreoretinopathy (PVR) is a major complication of retinal detachment and a common cause of surgical failure (5). It is characterized by cellular proliferation on the retinal surface or in the vitreous cavity, leading to contraction and foreshortening of the retina, traction, and recurrent retinal detachment (6). PVR develops in 5% to 10% of all retinal detachments (7-9). Despite major advances in vitreoretinal surgery over the past 25 years, including valved trocars and smaller-gauge instrumentation, the incidence of PVR has remained stable (7,10-13), and there remains an unmet need for effective pharmacological treatments. Approximately 95% of postoperative PVR cases develop within the first 45 days after retinal detachment surgery, and reported anatomical success rates range from 45% to 85% (7,14-16).

Artificial intelligence (AI) is a branch of computer science that aims to build machines capable of tasks traditionally associated with human intelligence, such as perception, decision-making, and problem-solving. Machine learning (ML), a core subfield of AI, uses algorithms that learn from training data to perform tasks such as prediction, dimensionality reduction, and clustering, and it has been widely adopted in image processing, medical diagnostics, cybersecurity, and predictive analytics (17,18). In ophthalmology, AI has been applied to the diagnosis and management of vision-threatening conditions including macular holes (MH), age-related macular degeneration (AMD), diabetic retinopathy, glaucoma, and cataracts, with much of this development driven by the high prevalence of these diseases (19-21).

Given the complexity of PVR, conventional diagnosis relies heavily on the clinical expertise and subjective judgment of the examining physician, which can introduce inconsistency and reduce diagnostic precision. Deep learning offers an alternative: algorithms that analyze medical images directly could improve the precision and consistency of PVR detection and risk stratification. Predictive models for PVR based on genetic and clinical variables have been developed, but their predictive performance is generally poor and falls short of the standard required for clinical application (22-24). Consequently, existing predictive methods are not widely used in clinical practice.

The aim of this study was to develop an AI system, AI-PVR Insight, that integrates three commonly used clinical imaging modalities, namely B-scan ultrasound (B-scan), optical coherence tomography (OCT), and ultra-widefield (UWF) retinal imaging, to automatically diagnose and grade PVR and to assess the risk of postoperative PVR. The system is intended to give clinicians diagnostic support for evaluating PVR progression, thereby informing postoperative management and treatment strategies and improving the accuracy of clinical decision-making. We present this article in accordance with the TRIPOD + AI reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-24-1644/rc).

Methods

The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the ethics committee of the Affiliated Eye Hospital of Nanchang University (No. YLP20220802), and the requirement for individual consent for this retrospective analysis was waived. The Jiangxi Provincial People’s Hospital was also informed and agreed to the conduct of the study. The diagnostic prediction system addresses three tasks: Task 1, automatic identification of PVR; Task 2, automatic grading of PVR according to the PVR grading standards (25); and Task 3, automatic prediction of the risk of postoperative PVR in patients undergoing vitrectomy for retinal detachment, based on a PVR risk prediction formula. The flow chart of the study design is shown in Figure 1.

Clinical information and image acquisition

We retrospectively included 1,274 eyes from 1,274 patients who underwent vitrectomy at the Eye Hospital affiliated with Nanchang University between June 2015 and December 2023 as the primary cohort to develop a diagnostic prediction system. Concurrently, an external test cohort was established, comprising 213 eyes from 213 patients who underwent vitrectomy at Jiangxi Provincial People’s Hospital over the same period.

The inclusion criteria were as follows:

- Patients diagnosed with RRD.

- Patients assessed by a physician as eligible for vitreoretinal surgery who had already undergone vitrectomy. Indications for vitrectomy included, but were not limited to:

  - Retinal detachment due to a retinal break, associated with vitreous traction or a preretinal membrane that impedes retinal reattachment;

  - Extensive retinal detachment or retinal tear for which conventional treatments (such as laser photocoagulation or cryotherapy) are ineffective;

  - Vision-threatening intraocular complications, such as severe vitreous hemorrhage or macular traction, for which surgery is the only viable means of preventing further vision loss.

- Patients with complete clinical data and preoperative fundus image data available (including UWF images, B-scan images, and OCT images).

Exclusion criteria:

- Patients with severe ocular comorbidities, such as corneal disease, intraocular infection, or intraocular tumors;

- Patients with intraocular implants (e.g., intraocular lenses or intraocular drug delivery devices) or history of other ophthalmic surgeries that may affect the quality of fundus images or interfere with image analysis;

- Patients for whom sufficient fundus image data cannot be obtained (e.g., due to abnormal ocular structure, poor image quality, or poor patient cooperation resulting in unclear images).

For each patient, clinical data and preoperative fundus images were collected. Clinical data included age, gender, lens status (phakic or aphakic), and the presence or absence of uveitis, vitreous hemorrhage, and prior cryotherapy retinopexy. Three types of images were collected: UWF images, which provide a panoramic view of the retina; B-scan ultrasound images, which display longitudinal sections of the eye; and OCT images, which provide a cross-sectional view through the maximum horizontal diameter of the retinal detachment. For each modality, the clearest image was retained for each patient.

For the primary cohort, UWF images were acquired using the Optomap Daytona device from Optos plc (Dunfermline, UK). OCT images were captured with the Cirrus HD-OCT 500 system (ZEISS, Oberkochen, Germany). B-scan images were obtained using the ODM-2100 system (MEDA Co., Ltd., Tianjin, China). In the external test cohort, UWF images were similarly captured using the Optomap Daytona. For OCT imaging, the Heidelberg Spectralis device (Heidelberg Engineering, Heidelberg, Germany) was used. B-scan ultrasound examinations were conducted with the SOUER SW-2100 system (Opthalmika, Ajman, UAE).

Image preprocessing

We formed a labeling team of four ophthalmologists: a retinal expert with over 20 years of clinical experience, an attending physician with over 10 years of clinical experience, a resident physician with 5 years of clinical experience, and a medical intern with 1 year of clinical experience. All team members underwent standardized training and testing before labeling. The assessors had not been involved in earlier stages of the study, so that outcome evaluation was blinded. For PVR identification and grading, each image was independently reviewed and classified by the medical intern, the attending physician, and the retinal expert according to the PVR grading standards (25). When all three agreed, the corresponding label was assigned to the image. When their classifications differed, the three doctors, together with the retinal specialist, discussed the discrepancies and assigned the final label based on the outcome of the discussion.
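
The labeling rule above (a label is accepted only when all three independent readers agree; otherwise the image goes to group discussion) can be sketched as a small routing function. This is a minimal illustration, not the authors' tooling: the function names and the dictionary-of-reader-labels input format are hypothetical.

```python
from typing import Dict, List, Optional, Sequence, Tuple


def consensus_label(reader_labels: Sequence[str]) -> Optional[str]:
    """Return the shared label if every reader agrees, otherwise None.

    None means the image must go to the discussion (adjudication) step.
    """
    if not reader_labels:
        raise ValueError("at least one reader label is required")
    return reader_labels[0] if len(set(reader_labels)) == 1 else None


def route_images(
    readings: Dict[str, Sequence[str]],
) -> Tuple[Dict[str, str], List[str]]:
    """Split images into unanimously labeled ones and ones needing discussion.

    readings maps a (hypothetical) image ID to the labels given by the
    independent readers, e.g. intern, attending physician and retinal expert.
    """
    labeled: Dict[str, str] = {}
    to_discuss: List[str] = []
    for image_id, labels in readings.items():
        label = consensus_label(labels)
        if label is None:
            to_discuss.append(image_id)
        else:
            labeled[image_id] = label
    return labeled, to_discuss


# Example: the second image has a disagreement and is sent for discussion.
readings = {
    "img_001": ["Grade C", "Grade C", "Grade C"],
    "img_002": ["Grade B", "Grade C", "Grade B"],
}
labeled, to_discuss = route_images(readings)
print(labeled)     # {'img_001': 'Grade C'}
print(to_discuss)  # ['img_002']
```

Requiring unanimity rather than a 2-of-3 majority mirrors the text: any disagreement, even a single dissenting reader, triggers discussion.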

All images were then labeled as non-PVR or PVR for training Task 1 (PVR identification). Images labeled as PVR were further classified as Grade A, Grade B, or Grade C for training Task 2 (PVR severity grading). For Task 3 (postoperative risk prediction), samples whose clinical data were insufficient to compute the postoperative PVR risk with the PVR risk prediction formula (26) were excluded. The remaining samples were scored with the formula: a score above 6.33 indicated a higher risk of PVR and was labeled high risk for Task 3, whereas a score below 6.33 indicated a lower risk and was labeled low risk. The prediction formula was as follows:

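$$
\text{PVR risk score} = 2.88 \times (\text{grade C PVR}) + 1.85 \times (\text{grade B PVR}) + 2.92 \times (\text{aphakia}) + 1.77 \times (\text{anterior uveitis}) + 1.23 \times (\text{quadrants of detachment}) + 0.83 \times (\text{vitreous hemorrhage}) + 1.23 \times (\text{previous cryotherapy})
$$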

Each binary risk factor takes the value 1 if present and 0 if absent; the number of detached quadrants takes an integer value from 1 to 4. A total score above 6.33 indicates that the patient is at high risk.
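
The scoring rule above translates directly into code. The sketch below assumes that Grade B and Grade C PVR are mutually exclusive indicators and that a score of exactly 6.33 counts as low risk, since the text only defines scores above and below the threshold; the function and argument names are illustrative.

```python
THRESHOLD = 6.33  # scores above this value are labeled high risk


def pvr_risk_score(
    *,
    grade_c_pvr: bool,
    grade_b_pvr: bool,
    aphakia: bool,
    anterior_uveitis: bool,
    quadrants_detached: int,
    vitreous_hemorrhage: bool,
    previous_cryotherapy: bool,
) -> float:
    """Weighted sum from the PVR risk prediction formula (coefficients as given above)."""
    if not 1 <= quadrants_detached <= 4:
        raise ValueError("quadrants_detached must be between 1 and 4")
    if grade_c_pvr and grade_b_pvr:
        # Assumption: an eye carries a single PVR grade, so B and C cannot both be 1.
        raise ValueError("grade B and grade C PVR are mutually exclusive")
    return (
        2.88 * int(grade_c_pvr)
        + 1.85 * int(grade_b_pvr)
        + 2.92 * int(aphakia)
        + 1.77 * int(anterior_uveitis)
        + 1.23 * quadrants_detached
        + 0.83 * int(vitreous_hemorrhage)
        + 1.23 * int(previous_cryotherapy)
    )


def risk_label(score: float) -> str:
    # Assumption: a score exactly equal to the threshold is treated as low risk.
    return "high risk" if score > THRESHOLD else "low risk"


# Grade C PVR with all four quadrants detached: 2.88 + 4 * 1.23 = 7.80 -> high risk
s1 = pvr_risk_score(grade_c_pvr=True, grade_b_pvr=False, aphakia=False,
                    anterior_uveitis=False, quadrants_detached=4,
                    vitreous_hemorrhage=False, previous_cryotherapy=False)
# Grade B PVR, two quadrants, vitreous hemorrhage: 1.85 + 2.46 + 0.83 = 5.14 -> low risk
s2 = pvr_risk_score(grade_c_pvr=False, grade_b_pvr=True, aphakia=False,
                    anterior_uveitis=False, quadrants_detached=2,
                    vitreous_hemorrhage=True, previous_cryotherapy=False)
print(round(s1, 2), risk_label(s1))  # 7.8 high risk
print(round(s2, 2), risk_label(s2))  # 5.14 low risk
```

Keyword-only arguments make it hard to swap two binary factors by accident, which matters because several coefficients are of similar size.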

Development of AI-PVR Insight

We developed a system named AI-PVR Insight comprising three models: Model 1 for automatic detection of PVR; Model 2 for grading PVR severity; and Model 3 for predicting the risk of postoperative PVR in patients who underwent vitrectomy for retinal detachment, based on a prognostic formula.
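
At inference time, the three models could be wired together as sketched below. The chaining of Model 1 into Model 2 follows the cascade described in this section; running Model 3 on every eye regardless of Model 1's output, the `PVRReport` structure, and the callable-per-model interface are assumptions for illustration, with trivial stand-in functions in place of the trained networks.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

# Hypothetical interface: each model maps one eye's images (keyed by modality) to a label.
Images = Dict[str, object]
Model = Callable[[Images], str]


@dataclass
class PVRReport:
    pvr_present: bool
    grade: Optional[str]     # "A", "B" or "C"; None when Model 1 finds no PVR
    postoperative_risk: str  # "high" or "low"


def run_ai_pvr(images: Images, model1: Model, model2: Model, model3: Model) -> PVRReport:
    has_pvr = model1(images) == "PVR"
    # Cascade: Model 2 only grades eyes that Model 1 flagged as PVR.
    grade = model2(images) if has_pvr else None
    # Assumption: Model 3 is run on every eye scheduled for vitrectomy.
    risk = model3(images)
    return PVRReport(pvr_present=has_pvr, grade=grade, postoperative_risk=risk)


def grader_must_not_run(images: Images) -> str:
    raise AssertionError("Model 2 should not be called for non-PVR eyes")


eye = {"bscan": None, "oct": None, "uwf": None}  # placeholders for image arrays
positive = run_ai_pvr(eye, lambda x: "PVR", lambda x: "C", lambda x: "high")
negative = run_ai_pvr(eye, lambda x: "non-PVR", grader_must_not_run, lambda x: "low")
print(positive)  # PVRReport(pvr_present=True, grade='C', postoperative_risk='high')
print(negative)  # PVRReport(pvr_present=False, grade=None, postoperative_risk='low')
```

Passing the models as plain callables keeps the routing logic testable without loading any network weights.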

Models 1 and 2 form a cascaded deep learning architecture. Model 1, built on the TwinsSVT architecture, detects PVR in the input images; Model 2, built on DenseNet-121, then grades the severity of PVR in the images that Model 1 identified as PVR. For both tasks, the dataset was divided into training, validation, and internal test sets in an 8:2:2 (i.e., 4:1:1) ratio, and an external test set was used to further validate model performance. The backbone models were trained separately on the B-scan, OCT, and UWF image datasets. Data augmentation (rotation, translation, scaling, and flipping) was applied to increase the diversity of the training samples and improve generalization, and the Synthetic Minority Over-sampling Technique (SMOTE) was used to oversample minority classes so that all classes were balanced. Images were then resized to 256×256 pixels for TwinsSVT and 224×224 pixels for DenseNet-121, and pixel values were normalized to the range 0–1. Each model was trained for 200 epochs and evaluated on the validation set after every epoch; the epoch with the highest validation accuracy was taken as the model's optimal state. The optimal models were then used to extract features from their respective imaging modalities. Principal component analysis (PCA) was applied to these features to reduce their dimensionality while retaining the most informative components, and the reduced features from the three modalities were concatenated into a combined feature set.
The combined features, as well as the reduced features from each individual modality (B-scan, OCT, and UWF), were then input into a multi-layer perceptron (MLP) classifier for the downstream tasks of PVR identification and grading. The performance of the multimodal model was compared with that of the three single-modality models on the test set, and the best-performing model was selected as the final model.
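
The feature-fusion step can be sketched with NumPy: PCA is fitted separately on each modality's deep features, each feature set is projected onto its leading components, and the projections are concatenated. This is an illustration under stated assumptions rather than the authors' pipeline: the feature dimension (512), the number of retained components (16), fitting PCA on the training split only, and the random arrays standing in for TwinsSVT/DenseNet-121 features are all hypothetical. The fused matrix would then be passed to a classifier such as an MLP (or, for Model 3, an SVM).

```python
import numpy as np


def fit_pca(train: np.ndarray, n_components: int):
    """Fit PCA via SVD of the mean-centred training features."""
    mean = train.mean(axis=0)
    # Rows of vt are principal axes, sorted by decreasing explained variance.
    _, _, vt = np.linalg.svd(train - mean, full_matrices=False)
    return mean, vt[:n_components]


def project(x: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    return (x - mean) @ components.T


def fuse_modalities(train_feats, test_feats, n_components):
    """Reduce each modality with its own PCA, then concatenate along the feature axis."""
    train_parts, test_parts = [], []
    for modality in sorted(train_feats):  # fixed order keeps columns aligned
        mean, comps = fit_pca(train_feats[modality], n_components)
        train_parts.append(project(train_feats[modality], mean, comps))
        # The test set is projected with the PCA fitted on training data only.
        test_parts.append(project(test_feats[modality], mean, comps))
    return np.concatenate(train_parts, axis=1), np.concatenate(test_parts, axis=1)


rng = np.random.default_rng(0)
modalities = ("bscan", "oct", "uwf")
train_feats = {m: rng.normal(size=(60, 512)) for m in modalities}  # stand-in deep features
test_feats = {m: rng.normal(size=(20, 512)) for m in modalities}
fused_train, fused_test = fuse_modalities(train_feats, test_feats, n_components=16)
print(fused_train.shape, fused_test.shape)  # (60, 48) (20, 48)
```

A single-modality baseline corresponds to using just one of the per-modality projections instead of the concatenation. Fitting PCA only on training data avoids leaking test-set statistics into the reduced features.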

Model 3 predicts the risk of postoperative PVR. It uses the same TwinsSVT architecture as Model 1 but is trained to predict postoperative risk. Its dataset was divided into training, validation, and internal test sets in an 8:2:2 ratio and supplemented by an external test set to assess model performance. Image standardization and training followed the same procedure as for Model 1; after 200 epochs, the optimal model was selected for feature extraction, and the features were reduced by PCA and integrated. Unlike Model 1, Model 3 used a support vector machine (SVM) as the classifier to predict the level of postoperative PVR risk. As before, the multimodal model was compared with the single-modality models on the test set, and the best-performing model was chosen as the final model.
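
An 8:2:2 split is the same as 4:1:1, i.e., two thirds for training and one sixth each for validation and internal testing. A minimal patient-level split is sketched below; shuffling patient IDs rather than individual images keeps all three modality images of an eye in the same subset. The function name, the seed, and the use of a plain unstratified shuffle are assumptions, since the text does not state how the split was randomized; with a class imbalance like that of Task 3, a stratified split may be preferable.

```python
import random
from typing import List, Sequence, Tuple


def split_8_2_2(patient_ids: Sequence[str], seed: int = 0) -> Tuple[List[str], List[str], List[str]]:
    """Shuffle patient IDs and split them 8:2:2 into train/validation/test lists."""
    ids = list(patient_ids)
    random.Random(seed).shuffle(ids)  # deterministic for a given seed
    n_train = round(len(ids) * 8 / 12)
    n_val = round(len(ids) * 2 / 12)
    # Any rounding remainder goes to the test subset.
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]


patients = [f"P{i:04d}" for i in range(1274)]  # hypothetical IDs, primary-cohort size
train, val, test = split_8_2_2(patients)
print(len(train), len(val), len(test))  # 849 212 213
```

For the 1,274-patient primary cohort this yields 849, 212, and 213 patients; the reported 849/213/212 for Task 1 differs only in which subset receives the rounding remainder.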

Performance evaluation and statistical analysis

All data preprocessing, model training, and statistical analyses were performed in Python 3.9.7 (www.python.org). To evaluate model performance quantitatively, we constructed receiver operating characteristic (ROC) curves and calculated the area under the curve (AUC). We also report accuracy, the proportion of all predictions that are correct; precision, the proportion of predicted positives that are truly positive; and recall (sensitivity), the proportion of actual positives correctly identified by the model. In addition, we calculated the accuracy and AUC of PVR identification by interns, residents, and attending physicians with and without AI assistance, to assess whether AI assistance improves the diagnostic accuracy of ophthalmologists. The formulas for accuracy, precision, and recall are as follows:

$$
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}
$$

Here, true positive (TP) is the number of correctly predicted positive instances, true negative (TN) is the number of correctly predicted negative instances, false positive (FP) is the number of incorrectly predicted positive instances, and false negative (FN) is the number of incorrectly predicted negative instances.
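
The metric definitions above can be checked with a small standard-library sketch. The AUC is computed as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one (ties count one half), which equals the area under the empirical ROC curve; it is a simple O(n²) illustration, not the implementation used in the study, and it does not compute the 95% confidence intervals reported in the tables. For the three-class grading task, per-grade values such as those in Table 3 would presumably be obtained one-vs-rest, treating one grade as positive and the others as negative; this is an assumption.

```python
from typing import Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (TP, TN, FP, FN) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    return (tp + tn) / (tp + tn + fp + fn)


def precision(tp: int, fp: int) -> float:
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    return tp / (tp + fn) if tp + fn else 0.0


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as P(score of a random positive > score of a random negative)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.6, 0.1, 0.2, 0.7]
y_pred = [int(s >= 0.5) for s in scores]  # assumed decision threshold of 0.5
tp, tn, fp, fn = confusion_counts(y_true, y_pred)
print(tp, tn, fp, fn)                                                # 3 3 1 1
print(accuracy(tp, tn, fp, fn), precision(tp, fp), recall(tp, fn))  # 0.75 0.75 0.75
print(roc_auc(y_true, scores))                                       # 0.9375
```

The same functions could be applied to reader predictions with and without AI assistance. Note that AUC uses the continuous scores, whereas accuracy, precision, and recall depend on the chosen decision threshold.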

Results

Baseline characteristics of patients

The development and validation of AI-PVR Insight involved 1,487 samples comprising 4,461 images, 1,487 for each imaging modality (OCT, UWF, and B-scan). The flowchart of sample selection is presented in Figure 2. For Task 1, the training, validation, internal test (Affiliated Eye Hospital of Nanchang University), and external test (Jiangxi Provincial People’s Hospital) sets contained 849, 213, 212, and 213 samples, respectively. For Task 2, these sets contained 287, 72, 72, and 72 samples, and for Task 3, 583, 147, 145, and 149 samples, respectively. The clinical baseline characteristics of the Task 3 samples, stratified by risk group, are detailed in Table 1.

Table 1

| Characteristics | Training set (N=583): low risk (N=495) | Training set: high risk (N=88) | P value | Validation set (N=147): low risk (N=118) | Validation set: high risk (N=29) | P value | Internal test set (N=145): low risk (N=114) | Internal test set: high risk (N=31) | P value | External test set (N=149): low risk (N=122) | External test set: high risk (N=27) | P value |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Age (years) | 59.96±6.737 | 59.45±7.166 | 0.518 | 59.53±6.442 | 59.28±5.993 | 0.845 | 59.98±6.509 | 59.32±7.045 | 0.624 | 59.04±6.304 | 59.93±7.082 | 0.520 |
| Gender | | | 0.425 | | | >0.999 | | | 0.443 | | | >0.999 |
| Male | 268 | 43 | | 64 | 16 | | 55 | 18 | | 60 | 13 | |
| Female | 227 | 45 | | 54 | 13 | | 59 | 13 | | 62 | 14 | |
| PVR grading | | | <0.001 | | | <0.001 | | | <0.001 | | | <0.001 |
| Grade A or below | 380 | 1 | | 87 | 0 | | 89 | 0 | | – | – | |
| Grade B | 83 | 13 | | 18 | 3 | | 14 | 5 | | – | – | |
| Grade C | 32 | 74 | | 13 | 26 | | 11 | 26 | | – | – | |
| Aphakia | | | 0.151 | | | >0.999 | | | 0.045 | | | >0.999 |
| Negative | 482 | 70 | | 118 | 29 | | 114 | 29 | | 122 | 27 | |
| Positive | 13 | 18 | | 0 | 0 | | 0 | 2 | | 0 | 0 | |
| Vitreous haemorrhage | | | <0.001 | | | 0.079 | | | 0.127 | | | <0.001 |
| Negative | 482 | 70 | | 114 | 25 | | 105 | 25 | | 117 | 20 | |
| Positive | 13 | 18 | | 4 | 4 | | 9 | 6 | | 5 | 7 | |
| Quadrants of detachment | | | <0.001 | | | <0.001 | | | <0.001 | | | <0.001 |
| 1 | 305 | 0 | | 68 | 0 | | 68 | 0 | | 70 | 0 | |
| 2 | 136 | 0 | | 43 | 0 | | 32 | 1 | | 42 | 0 | |
| 3 | 48 | 18 | | 6 | 5 | | 12 | 4 | | 10 | 6 | |
| 4 | 6 | 70 | | 1 | 24 | | 2 | 26 | | 0 | 21 | |

Data are presented as mean ± standard deviation or number. PVR, proliferative vitreoretinopathy.

PVR identification model performance

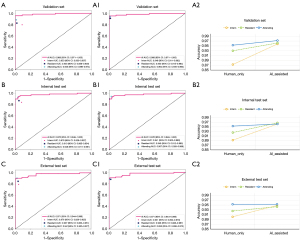

The multimodal model for PVR identification achieved an AUC, accuracy, precision, and recall of 0.976, 0.948, 0.913, and 0.926, respectively, in the internal test set, and 0.971, 0.958, 0.985, and 0.890 in the external test set. The corresponding values were 0.959, 0.930, 0.921, and 0.853 (internal) and 0.916, 0.854, 0.744, and 0.877 (external) for the OCT model; 0.953, 0.897, 0.774, and 0.956 (internal) and 0.956, 0.877, 0.764, and 0.932 (external) for the B-scan model; and 0.913, 0.920, 0.981, and 0.765 (internal) and 0.911, 0.873, 0.819, and 0.808 (external) for the UWF model. The multimodal model therefore achieved the highest AUC and accuracy in both test sets, although some unimodal models had higher precision (OCT and UWF in the internal test set) or recall (B-scan in both test sets), as detailed in Table 2 and illustrated in Figure 3A.

Table 2

| Model | Internal test set: AUC (95% CI) | Internal test set: accuracy | Internal test set: precision | Internal test set: recall | External test set: AUC (95% CI) | External test set: accuracy | External test set: precision | External test set: recall |
|---|---|---|---|---|---|---|---|---|
| BUS | 0.953 (0.925–0.982) | 0.897 | 0.774 | 0.956 | 0.956 (0.933–0.980) | 0.877 | 0.764 | 0.932 |
| OCT | 0.959 (0.927–0.991) | 0.930 | 0.921 | 0.853 | 0.916 (0.874–0.958) | 0.854 | 0.744 | 0.877 |
| UWF | 0.913 (0.859–0.966) | 0.920 | 0.981 | 0.765 | 0.911 (0.863–0.958) | 0.873 | 0.819 | 0.808 |
| Multimodal | 0.976 (0.949–1.000) | 0.948 | 0.913 | 0.926 | 0.971 (0.944–0.998) | 0.958 | 0.985 | 0.890 |

AUC, area under the curve; BUS, B-scan model; CI, confidence interval; Multimodal, multimodal model; OCT, optical coherence tomography model; PVR, proliferative vitreoretinopathy; UWF, ultra-widefield model.

PVR grading model performance

The multimodal model for PVR grading outperformed the unimodal models for every grade in terms of AUC and accuracy. It performed best in identifying PVR Grade A (class 0), with an AUC of 0.985 in both the internal and external test sets, accuracy above 0.95, and precision and recall between 0.867 and 0.929. For PVR Grades B (class 1) and C (class 2), it achieved AUCs above 0.95 in both test sets, accuracy above 0.9, and precision and recall above 0.85. The unimodal models performed less consistently across grades, and their AUC and accuracy were lower than those of the multimodal model in both test sets. Further details are provided in Table 3 and Figure 3B,3C.

Table 3

| Model | Grade | Internal test set: AUC (95% CI) | Internal test set: accuracy | Internal test set: precision | Internal test set: recall | External test set: AUC (95% CI) | External test set: accuracy | External test set: precision | External test set: recall |
|---|---|---|---|---|---|---|---|---|---|
| BUS | A | 0.733 (0.522–0.945) | 0.806 | 1.000 | 1.000 | 0.855 (0.756–0.953) | 0.806 | 0.571 | 0.571 |
| | B | 0.802 (0.700–0.905) | 0.736 | 1.000 | 0.989 | 0.782 (0.664–0.899) | 0.792 | 0.706 | 0.545 |
| | C | 0.821 (0.724–0.918) | 0.764 | 0.992 | 1.000 | 0.766 (0.656–0.876) | 0.681 | 0.667 | 0.722 |
| OCT | A | 0.750 (0.622–0.877) | 0.750 | 0.267 | 0.364 | 0.777 (0.651–0.903) | 0.819 | 0.600 | 0.214 |
| | B | 0.700 (0.567–0.832) | 0.708 | 0.667 | 0.444 | 0.774 (0.653–0.894) | 0.722 | 0.536 | 0.682 |
| | C | 0.738 (0.622–0.853) | 0.653 | 0.615 | 0.706 | 0.812 (0.712–0.913) | 0.736 | 0.718 | 0.778 |
| UWF | A | 0.803 (0.682–0.925) | 0.806 | 0.400 | 0.545 | 0.798 (0.654–0.942) | 0.806 | 0.500 | 0.571 |
| | B | 0.845 (0.746–0.944) | 0.806 | 0.783 | 0.667 | 0.885 (0.785–0.986) | 0.819 | 0.680 | 0.773 |
| | C | 0.831 (0.739–0.923) | 0.722 | 0.706 | 0.706 | 0.885 (0.801–0.969) | 0.819 | 0.871 | 0.750 |
| Multimodal | A | 0.985 (0.955–1.000) | 0.972 | 0.909 | 0.909 | 0.985 (0.960–1.000) | 0.958 | 0.867 | 0.929 |
| | B | 0.957 (0.902–1.000) | 0.931 | 0.958 | 0.852 | 0.978 (0.947–1.000) | 0.958 | 1.000 | 0.864 |
| | C | 0.989 (0.974–1.000) | 0.931 | 0.892 | 0.971 | 0.976 (0.941–1.000) | 0.944 | 0.921 | 0.972 |

AUC, area under the curve; BUS, B-scan model; CI, confidence interval; Multimodal, multimodal model; OCT, optical coherence tomography model; PVR, proliferative vitreoretinopathy; UWF, ultra-widefield model.

Assessment of PVR risk model performance

On the internal test set, the multimodal model achieved an AUC of 0.857, accuracy of 0.834, precision of 0.459, and recall of 0.810, outperforming the single-modality B-scan and OCT models but not the UWF model. On the external test set, its AUC of 0.827 was slightly lower than those of the UWF (0.841) and B-scan (0.849) models. Its external accuracy (0.779), precision (0.408), and recall (0.833) were identical to those of the UWF model; compared with the B-scan model, its accuracy and precision were marginally lower (0.785 and 0.413) but its recall was higher (0.833 vs. 0.792). These discrepancies may stem from imbalance among modalities, in which a dominant modality suppresses the optimization of the others and prevents the multimodal model from fully exploiting its integrative advantage. Further details are provided in Table 4 and Figure 3D.

Table 4

| Model | Internal test set: AUC (95% CI) | Internal test set: accuracy | Internal test set: precision | Internal test set: recall | External test set: AUC (95% CI) | External test set: accuracy | External test set: precision | External test set: recall |
|---|---|---|---|---|---|---|---|---|
| BUS | 0.752 (0.618–0.885) | 0.772 | 0.357 | 0.714 | 0.849 (0.750–0.948) | 0.785 | 0.413 | 0.792 |
| OCT | 0.812 (0.689–0.936) | 0.759 | 0.354 | 0.810 | 0.722 (0.606–0.839) | 0.711 | 0.321 | 0.708 |
| UWF | 0.924 (0.834–1.000) | 0.903 | 0.621 | 0.857 | 0.841 (0.762–0.920) | 0.779 | 0.408 | 0.833 |
| Multimodal | 0.857 (0.750–0.963) | 0.834 | 0.459 | 0.810 | 0.827 (0.737–0.916) | 0.779 | 0.408 | 0.833 |

AUC, area under the curve; BUS, B-scan model; CI, confidence interval; Multimodal, multimodal model; OCT, optical coherence tomography model; PVR, proliferative vitreoretinopathy; UWF, ultra-widefield model.

Identification of PVR by ophthalmologists with AI assistance versus without AI assistance

As shown in Figure 4, AI assistance improved the accuracy and AUC of PVR identification by interns, residents, and attending physicians in the validation set, with the largest gains among interns. In the internal test set, the improvement with AI assistance was less pronounced than in the validation set but still discernible. In the external test set, interns and residents showed some increase in accuracy and AUC with AI assistance, whereas attending physicians showed no significant change in either measure.

Discussion

The AI-PVR Insight system uses multimodal feature fusion to identify PVR, grade its severity, and predict the risk of PVR after vitrectomy. It performed strongly in identifying and grading PVR. In addition, using an established risk formula as the reference standard, the model estimated the risk of postoperative PVR from fundus images alone. By providing a comprehensive assessment of PVR status, AI-PVR Insight could be integrated into the clinical workflow to help physicians formulate more targeted treatment plans and to inform the prevention and management of PVR progression.

In PVR identification and severity grading, the AI-PVR Insight system achieved higher AUC and accuracy than the unimodal models in both the internal and external test sets, demonstrating its ability to identify PVR and stratify its severity, which is crucial for personalized treatment (9). This performance can be attributed to the integration of OCT, UWF, and B-scan imaging, which together characterize retinal pathology more comprehensively than any single modality. Combining these complementary modalities appears to help the system detect subtle PVR features and thereby improves diagnostic precision (27,28).

Traditional methods for assessing PVR risk rely on a set of clinical indicators, including PVR grade, aphakia, anterior uveitis, the extent of retinal detachment, vitreous hemorrhage, and prior cryotherapy (22,29). In contrast, our model uses only fundus images as input and achieved AUCs above 0.8 in both the internal and external test sets. This indicates that the model can extract features related to PVR risk from fundus images and map them to the predefined risk levels. This finding is important because fundus imaging is non-invasive and readily available, and its widespread clinical use suggests broad application potential for image-based predictive models (30). The image recognition and feature extraction capabilities of deep learning allow the model to detect subtle risk-related changes without requiring a full set of clinical indicators. Although the model's predictions do not fully agree with the formula-derived labels, this does not by itself indicate that the model is ineffective; rather, such disagreements may prompt a re-examination and refinement of existing risk assessment methods. Combined with follow-up outcomes, they may help build a more comprehensive and precise PVR risk assessment system.

We also assessed how ophthalmologists at different career stages benefit from AI assistance. With AI support, the accuracy of PVR identification increased markedly among early-career doctors, particularly interns and residents, whereas the improvement among attending physicians was smaller. This suggests that AI can improve diagnostic accuracy and support the training of early-career ophthalmologists. Several factors may explain this pattern. Interns and residents have limited experience in diagnosing PVR, and the objective pattern recognition of an AI system can compensate for this inexperience and for variability in their clinical decisions, substantially improving their diagnostic accuracy (31,32). Attending physicians, by contrast, have extensive clinical experience and well-honed diagnostic skills that may already give them high accuracy in identifying PVR (33,34), and their diagnostic reasoning may draw on a broader range of factors and subjective assessments than AI algorithms capture (35). Overall, the data suggest that AI can serve as a useful adjunct in training novice ophthalmologists by providing an objective reference for skill development, while offering experienced practitioners a complementary analytical perspective. Integrating AI into ophthalmic practice may therefore help improve clinical outcomes and education across all levels of professional experience.

This study had several limitations. First, the postoperative risk labels were derived from a traditional risk assessment formula rather than from observed postoperative outcomes. Although the model performed promisingly against this benchmark, suggesting that PVR risk assessment from fundus images is feasible, follow-up studies are needed to evaluate its applicability and efficacy in clinical practice. Second, although the multimodal model outperformed the unimodal models in PVR detection and severity grading, its AUC for predicting postoperative PVR risk was slightly lower than that of the UWF model and, on the external test set, the B-scan model. How the OCT, B-scan, and UWF data interact to produce these outcomes requires further investigation. Future work will therefore quantify specific image-derived indices and examine their correlation with PVR to improve the interpretability of the model. We will also incorporate follow-up data to compare the model with traditional formulas in assessing PVR risk in real clinical settings, which may help refine the model and develop a more accurate and clinically relevant PVR risk assessment strategy.

Conclusions

The AI-PVR Insight system developed in this study integrates multiple imaging modalities to automatically identify and grade PVR and to assess postoperative PVR risk. It can help clinicians devise more targeted treatment plans and inform the prevention and management of PVR progression, thereby improving the quality of patient care.

Acknowledgments

Some of our experiments were carried out on the OnekeyAI platform. We thank OnekeyAI and its developers for their help with this research project.

Footnote

Reporting Checklist: The authors have completed the TRIPOD + AI reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-24-1644/rc

Funding: This work was supported by a grant from

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-24-1644/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the ethics committee of the Affiliated Eye Hospital of Nanchang University (No. YLP20220802) and the requirement for individual consent for this retrospective analysis was waived. The Jiangxi Provincial People's Hospital was also informed and agreed to the conduct of the study.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Castro-Luna G, Sánchez-Liñán N, Alaskar H, Pérez-Rueda A, Nievas-Soriano BJ. Comparison of Iris-Claw Phakic Lens Implant versus Corneal Laser Techniques in High Myopia: A Five-Year Follow-Up Study. Healthcare (Basel) 2022.

- Ikuno Y. Overview of the complications of high myopia. Retina 2017;37:2347-51.

- Lingham G, Yazar S, Lucas RM, Milne E, Hewitt AW, Hammond CJ, MacGregor S, Rose KA, Chen FK, He M, Guggenheim JA, Clarke MW, Saw SM, Williams C, Coroneo MT, Straker L, Mackey DA. Time spent outdoors in childhood is associated with reduced risk of myopia as an adult. Sci Rep 2021;11:6337.

- Sella R, Sternfeld A, Budnik I, Axer-Siegel R, Ehrlich R. Epiretinal membrane following pars plana vitrectomy for rhegmatogenous retinal detachment repair. Int J Ophthalmol 2019;12:1872-7.

- Xiao W, Chen X, Liu X, Luo L, Ye S, Liu Y. Trichostatin A, a histone deacetylase inhibitor, suppresses proliferation and epithelial-mesenchymal transition in retinal pigment epithelium cells. J Cell Mol Med 2014;18:646-55.

- Papastavrou VT, Chatziralli I, McHugh D. Gas Tamponade for Retinectomy in PVR-Related Retinal Detachments: A Retrospective Study. Ophthalmol Ther 2017;6:161-6.

- Idrees S, Sridhar J, Kuriyan AE. Proliferative Vitreoretinopathy: A Review. Int Ophthalmol Clin 2019;59:221-40.

- Pastor JC, de la Rúa ER, Martín F. Proliferative vitreoretinopathy: risk factors and pathobiology. Prog Retin Eye Res 2002;21:127-44.

- Smith JM, Steel DH. Anti-vascular endothelial growth factor for prevention of postoperative vitreous cavity haemorrhage after vitrectomy for proliferative diabetic retinopathy. Cochrane Database Syst Rev 2011;(5):CD008214. Update in Cochrane Database Syst Rev 2015;CD008214.

- Pennock S, Haddock LJ, Mukai S, Kazlauskas A. Vascular endothelial growth factor acts primarily via platelet-derived growth factor receptor α to promote proliferative vitreoretinopathy. Am J Pathol 2014;184:3052-68.

- Leiderman YI, Miller JW. Proliferative vitreoretinopathy: pathobiology and therapeutic targets. Semin Ophthalmol 2009;24:62-9.

- Heimann H, Bartz-Schmidt KU, Bornfeld N, Weiss C, Hilgers RD, Foerster MH; Scleral Buckling versus Primary Vitrectomy in Rhegmatogenous Retinal Detachment Study Group. Scleral buckling versus primary vitrectomy in rhegmatogenous retinal detachment: a prospective randomized multicenter clinical study. Ophthalmology 2007;114:2142-54.

- Oellers P, Stinnett S, Hahn P. Valved versus nonvalved cannula small-gauge pars plana vitrectomy for repair of retinal detachments with Grade C proliferative vitreoretinopathy. Clin Ophthalmol 2016;10:1001-6.

- Blumenkranz MS, Azen SP, Aaberg T, Boone DC, Lewis H, Radtke N, Ryan SJ. Relaxing retinotomy with silicone oil or long-acting gas in eyes with severe proliferative vitreoretinopathy. Silicone Study Report 5. The Silicone Study Group. Am J Ophthalmol 1993;116:557-64.

- Charteris DG, Sethi CS, Lewis GP, Fisher SK. Proliferative vitreoretinopathy-developments in adjunctive treatment and retinal pathology. Eye (Lond) 2002;16:369-74.

- Grigoropoulos VG, Benson S, Bunce C, Charteris DG. Functional outcome and prognostic factors in 304 eyes managed by retinectomy. Graefes Arch Clin Exp Ophthalmol 2007;245:641-9.

- Han Z, Gong Q, Huang S, Meng X, Xu Y, Li L, Shi Y, Lin J, Chen X, Li C, Ma H, Liu J, Zhang X, Chen D, Si J. Machine learning uncovers accumulation mechanism of flavonoid compounds in Polygonatum cyrtonema Hua. Plant Physiol Biochem 2023;201:107839.

- Helm JM, Swiergosz AM, Haeberle HS, Karnuta JM, Schaffer JL, Krebs VE, Spitzer AI, Ramkumar PN. Machine Learning and Artificial Intelligence: Definitions, Applications, and Future Directions. Curr Rev Musculoskelet Med 2020;13:69-76.

- Balyen L, Peto T. Promising Artificial Intelligence-Machine Learning-Deep Learning Algorithms in Ophthalmology. Asia Pac J Ophthalmol (Phila) 2019;8:264-72.

- Grzybowski A, Brona P, Lim G, Ruamviboonsuk P, Tan GSW, Abramoff M, Ting DSW. Artificial intelligence for diabetic retinopathy screening: a review. Eye (Lond) 2020;34:451-60.

- Popescu Patoni SI, Muşat AAM, Patoni C, Popescu MN, Munteanu M, Costache IB, Pîrvulescu RA, Mușat O. Artificial intelligence in ophthalmology. Rom J Ophthalmol 2023;67:207-13.

- Antaki F, Kahwati G, Sebag J, Coussa RG, Fanous A, Duval R, Sebag M. Predictive modeling of proliferative vitreoretinopathy using automated machine learning by ophthalmologists without coding experience. Sci Rep 2020;10:19528.

- Asaria RH, Kon CH, Bunce C, Charteris DG, Wong D, Luthert PJ, Khaw PT, Aylward GW. How to predict proliferative vitreoretinopathy: a prospective study. Ophthalmology 2001;108:1184-6.

- Ricker LJ, Kessels AG, de Jager W, Hendrikse F, Kijlstra A, la Heij EC. Prediction of proliferative vitreoretinopathy after retinal detachment surgery: potential of biomarker profiling. Am J Ophthalmol 2012;154:347-354.e2.

- Machemer R, Aaberg TM, Freeman HM, Irvine AR, Lean JS, Michels RM. An updated classification of retinal detachment with proliferative vitreoretinopathy. Am J Ophthalmol 1991;112:159-65.

- Kon CH, Asaria RH, Occleston NL, Khaw PT, Aylward GW. Risk factors for proliferative vitreoretinopathy after primary vitrectomy: a prospective study. Br J Ophthalmol 2000;84:506-11.

- Xie X, Yang L, Zhao F, Wang D, Zhang H, He X, Cao X, Yi H, He X, Hou Y. A deep learning model combining multimodal radiomics, clinical and imaging features for differentiating ocular adnexal lymphoma from idiopathic orbital inflammation. Eur Radiol 2022;32:6922-32.

- Jin K, Yan Y, Chen M, Wang J, Pan X, Liu X, Liu M, Lou L, Wang Y, Ye J. Multimodal deep learning with feature level fusion for identification of choroidal neovascularization activity in age-related macular degeneration. Acta Ophthalmol 2022;100:e512-20.

- Lei H, Velez G, Hovland P, Hirose T, Kazlauskas A. Plasmin is the major protease responsible for processing PDGF-C in the vitreous of patients with proliferative vitreoretinopathy. Invest Ophthalmol Vis Sci 2008;49:42-8.

- Foo LL, Lim GYS, Lanca C, Wong CW, Hoang QV, Zhang XJ, Yam JC, Schmetterer L, Chia A, Wong TY, Ting DSW, Saw SM, Ang M. Deep learning system to predict the 5-year risk of high myopia using fundus imaging in children. NPJ Digit Med 2023;6:10.

- Kinoshita M, Ueda D, Matsumoto T, Shinkawa H, Yamamoto A, Shiba M, Okada T, Tani N, Tanaka S, Kimura K, Ohira G, Nishio K, Tauchi J, Kubo S, Ishizawa T. Deep Learning Model Based on Contrast-Enhanced Computed Tomography Imaging to Predict Postoperative Early Recurrence after the Curative Resection of a Solitary Hepatocellular Carcinoma. 2023;15:2140.

- Tong T, Gu J, Xu D, Song L, Zhao Q, Cheng F, Yuan Z, Tian S, Yang X, Tian J, Wang K, Jiang T. Deep learning radiomics based on contrast-enhanced ultrasound images for assisted diagnosis of pancreatic ductal adenocarcinoma and chronic pancreatitis. BMC Med 2022;20:74.

- Wang J, Ji J, Zhang M, Lin JW, Zhang G, Gong W, Cen LP, Lu Y, Huang X, Huang D, Li T, Ng TK, Pang CP. Automated Explainable Multidimensional Deep Learning Platform of Retinal Images for Retinopathy of Prematurity Screening. JAMA Netw Open 2021;4:e218758.

- Tang VL, Shi Y, Fung K, Tan J, Espaldon R, Sudore R, Wong ML, Walter LC. Clinician Factors Associated With Prostate-Specific Antigen Screening in Older Veterans With Limited Life Expectancy. JAMA Intern Med 2016;176:654-61.

- Tai HC, Chen KY, Wu MH, Chang KJ, Chen CN, Chen A. Assessing Detection Accuracy of Computerized Sonographic Features and Computer-Assisted Reading Performance in Differentiating Thyroid Cancers. Biomedicines 2022;10:1513.