Fine-grained pathomorphology recognition of cervical lesions with a dropped multibranch Swin Transformer

Introduction

Cervical cancer is the fourth most common gynecological malignancy worldwide, posing a serious threat to women’s health (1). Early screening is the most effective means of preventing cervical cancer: it allows lesions to be graded, and the grade guides the selection of appropriate treatment, which is crucial to reducing the associated incidence and mortality (2).

The ThinPrep cytologic test (TCT) is one of the most widely used and effective early screening methods (3). Based on different cellular tumor reactions, such as nuclear atypia and the nuclear-cytoplasmic ratio, lesions can be classified as atypical squamous cells of undetermined significance (ASC-US), atypical squamous cells, cannot exclude high-grade squamous intraepithelial lesion (ASCH), low-grade squamous intraepithelial lesion (LSIL), high-grade squamous intraepithelial lesion (HSIL), squamous cell carcinoma (SCC), and atypical glandular cells (AGC) (2). Certain pathogenic infections, including trichomoniasis (TRICH), vulvovaginal candidiasis (CAND), bacterial flora (FLORA), genital herpes (HERPS), and actinomycosis (ACTIN) (Figure 1) (2), may also increase women’s susceptibility to human papillomavirus infection and potentially affect the progression of cervical lesions. However, owing to the diversity of cytological reactions, cells within the female genital tract, including normal metaplastic cells and cells with atrophic changes, can present tumor-like characteristics (2). Degeneration and artifactual changes can also make cellular lesions difficult to detect microscopically, further increasing diagnostic difficulty. Consequently, the diagnosis of cellular tumor reactions relies on the experience and expertise of pathologists and has low reproducibility, which impedes the early and rapid clinical diagnosis of cervical cancer.

With the rapid development of artificial intelligence and deep learning technologies, object detection can be used to localize and grade pathological cells in large-scale pathological images, which has improved the accuracy of early cervical cancer diagnosis (3,4). However, automatic diagnosis in cervical cytology has proven challenging because of the subtle morphological and color variations among cervical cells and the scarcity of cervical cytology datasets, which often contain inconsistent annotations. Previous studies in this area have focused primarily on automated cell classification and segmentation. For instance, Zhao et al. proposed a cervical cytology image generation (CCG) model based on taming transformers (CCG-taming transformers), achieving high accuracy on various public datasets (5). Cao et al. proposed an attention-guided convolutional neural network (CNN) based on DenseNet to identify abnormal cells in Pap cervical images, achieving an accuracy of up to 98% (6). Zhao et al. also proposed a lightweight feature attention network (LFANet), achieving higher segmentation accuracy and stronger generalization at a lower cost than other methods (7).

An increasing number of efficient and accurate object detection methods are being developed, including detection transformer (DETR) (8), faster region-based CNN (R-CNN) (9), and mask R-CNN (10). These methods have been widely adopted in cervical cell diagnosis for end-to-end detection (or segmentation) and classification (11-13). Transformers, which better capture the deep features of a region of interest, have led to the development of additional methods such as the vision transformer (14) and Swin Transformer (15), which combine the strengths of CNNs and transformers for multilevel semantic information extraction. These methods perform well on large-scale general-purpose datasets but are suboptimal for detecting uncommon objects, analyzing long-tailed datasets, and distinguishing objects with small differences between them (16-18).

To overcome the challenge posed by the distinct characteristics of cervical cells, which require fine-grained feature detection, we developed the YoGaNet platform based on YOLOv5l with a dropped multibranch Swin Transformer (DMBST) to detect the fine-grained pathomorphological changes of cervical lesions. The primary objectives of this study were as follows: (I) to design a YOLOv5l model with DMBST to improve the fine-grained pathomorphological recognition of cervical lesions in large volumes of TCT images; and (II) to evaluate the performance of our proposed method on a large public dataset and a clinical dataset. We present this article in accordance with the TRIPOD + AI reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-24-1590/rc).

Methods

Overview

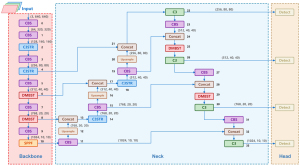

In this section, we introduce our proposed method, YoGaNet, which is based on the YOLOv5l network and incorporates DMBST modules and a multiscale feature fusion small-object detection head (Figure 2). The DMBST module (Figure 3) enables the model to learn fine-grained features and improves the discriminability of visually similar objects. Accordingly, the DMBST module was incorporated into the deeper layers of YOLOv5l. A DMBST module was also incorporated into the neck of YOLOv5l to reduce the classification loss. Additionally, the bottleneck in the shallow and deep C3 modules of the YOLOv5l backbone was replaced by a Swin Transformer block to enhance the network’s global feature extraction capability and reduce computational complexity. Finally, to improve small-object detection, we added a multiscale feature fusion module to the neck and a detection head to the head of YOLOv5l; we refer to this combined structure as the multiscale feature fusion small-object detection head.

Algorithm structure

In our proposed network, the backbone contains 11 modules, while the neck and head, which follow the YOLOv5l design, contain 34 modules. A clustered blueprint separable (CBS) convolutional layer followed by batch normalization was also applied, with the sigmoid linear unit (SiLU) serving as the activation function.
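The convolution, batch normalization, and SiLU sequence described above can be illustrated on a single-channel 1D signal. In this minimal pure-Python sketch, the kernel values, the identity scale and shift (gamma = 1, beta = 0), and the use of statistics from one short signal rather than from a mini-batch are simplifying assumptions; it shows only the order of operations, not the 2D layer used in the network.

```python
import math


def conv1d(signal, kernel, bias=0.0):
    """Valid 1D cross-correlation, the operation deep-learning 'convolution' layers compute."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k)) + bias
            for i in range(len(signal) - k + 1)]


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize to zero mean and unit variance, then apply scale gamma and shift beta."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


def silu(x):
    """Sigmoid linear unit x * sigmoid(x), written to avoid overflow for large |x|."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    e = math.exp(x)
    return x * e / (1.0 + e)


def conv_bn_silu(signal, kernel):
    """Convolution, then batch normalization, then SiLU."""
    return [silu(v) for v in batch_norm(conv1d(signal, kernel))]


out = conv_bn_silu([1.0, 2.0, 3.0, 4.0, 5.0], kernel=[1.0, 0.5])
print([round(v, 3) for v in out])
```

SiLU is smooth and lets small negative values pass through attenuated, which is why the first (most negative) normalized response stays slightly below zero.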

DMBST

This module was primarily designed to improve the recognition of objects that are difficult to distinguish without hard labels. Swin Transformers provide global attention similar to that of vision transformers while requiring less computation, whereas CNNs rely heavily on local attention. Therefore, we proposed the DMBST module.

For DMBST, a feature map of size (c, h, w) served as the input, and its channels were split into n equal parts of size (c/n, h, w), one per branch. This reduced the computational complexity and allowed the different branches to generate distinct feature maps during training. Each branch then used a Swin Transformer to extract global attention features, and a lightweight network, LA Net, was applied to compress the number of channels. LA Net is a CNN with only three layers: a convolution layer compresses the input to r channels, a second convolution layer further compresses it to one channel, and a sigmoid activation layer finally produces a single attention map. Hence, there are as many attention maps as there are branches. To drive the network to automatically learn new, finer features that improve classification and enhance model robustness, we used a random branch dropout function, which sets the map of one randomly selected branch to zero, as given by Eq. [1]:

$$A_{d} = \mathbf{0}_{h \times w}, \quad d \in \{1, 2, \ldots, n\} \text{ selected at random} \qquad [1]$$

where $A_{d}$ represents the map of the dropped branch $d$; it is an $h \times w$ (height × width) matrix whose elements are all zero.
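The branch split, LA Net, and random branch dropout described above can be sketched in pure Python on nested lists. This is a toy illustration under stated assumptions, not the authors' implementation: the per-branch Swin Transformer is omitted (each branch goes straight into LA Net), both LA Net convolutions are assumed to be 1×1 with no bias and no activation between them, the weights are arbitrary placeholders, and the dropped branch is drawn uniformly at random. As with ordinary dropout, we assume this is only active during training.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic sigmoid."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def split_channels(feature, n):
    """Split a (c, h, w) feature map into n branches of shape (c/n, h, w)."""
    c = len(feature)
    if c % n != 0:
        raise ValueError("the channel count c must be divisible by the branch count n")
    step = c // n
    return [feature[k * step:(k + 1) * step] for k in range(n)]


def conv1x1(feature, weights):
    """1x1 convolution: output channel o at (y, x) is sum_ch weights[o][ch] * feature[ch][y][x]."""
    h, w = len(feature[0]), len(feature[0][0])
    return [[[sum(wo[ch] * feature[ch][y][x] for ch in range(len(feature)))
              for x in range(w)] for y in range(h)] for wo in weights]


def la_net(branch, w_reduce, w_out):
    """Hypothetical LA Net: conv to r channels, conv to 1 channel, sigmoid -> (h, w) map."""
    hidden = conv1x1(branch, w_reduce)   # (r, h, w); w_reduce has r rows
    logits = conv1x1(hidden, w_out)[0]   # (h, w); w_out has one row of length r
    return [[sigmoid(v) for v in row] for row in logits]


def drop_random_branch(attention_maps, rng):
    """Eq. [1]: replace the (h, w) map of one randomly chosen branch d with zeros."""
    d = rng.randrange(len(attention_maps))
    h, w = len(attention_maps[0]), len(attention_maps[0][0])
    dropped = [[[0.0] * w for _ in range(h)] if k == d else m
               for k, m in enumerate(attention_maps)]
    return dropped, d


# Toy (c=4, h=2, w=2) input split into n=2 branches; weights are arbitrary placeholders.
feature = [[[float(c + y + x) for x in range(2)] for y in range(2)] for c in range(4)]
branches = split_channels(feature, n=2)
w_reduce = [[0.1, 0.1], [0.2, -0.1]]  # r = 2 hidden channels
w_out = [[0.5, 0.5]]
maps = [la_net(b, w_reduce, w_out) for b in branches]
dropped, d = drop_random_branch(maps, random.Random(0))
```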

Because each branch may attend to a different region, the branch attention maps must be merged. We therefore employed channel maximization to combine the attention regions of all branches into a single attention map: at each spatial position, the maximum value across all branch attention maps was taken. This identified the regions on which the model should focus. Channel maximization is given by Eq. [2]:

$$M(i, j) = \max_{k \in \{1, \ldots, n\}} A_{k}(i, j) \qquad [2]$$

where $M(i, j)$ represents the merged attention map at position $(i, j)$, obtained by applying channel maximization across all branches, and $A_{k}(i, j)$ represents the map of the $k$-th branch at position $(i, j)$. The maximum value across all branches at this position is selected.
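Channel maximization is a position-wise maximum over the branch maps. The short sketch below applies it to three hypothetical 2×2 maps, one of which has been zeroed by branch dropout, to show that a dropped branch simply stops contributing while the other branches still determine the merged map.

```python
def channel_max(attention_maps):
    """Eq. [2]: M(i, j) = max over branches k of A_k(i, j)."""
    h, w = len(attention_maps[0]), len(attention_maps[0][0])
    return [[max(a[i][j] for a in attention_maps) for j in range(w)]
            for i in range(h)]


a1 = [[0.2, 0.9], [0.4, 0.1]]
a2 = [[0.0, 0.0], [0.0, 0.0]]   # a branch zeroed by random branch dropout
a3 = [[0.7, 0.3], [0.5, 0.05]]
merged = channel_max([a1, a2, a3])
print(merged)  # [[0.7, 0.9], [0.5, 0.1]]
```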

After obtaining the merged attention map, we multiplied it element-wise with the input feature map, applying the single-channel map to every channel, which resulted in a feature map of size (c, h, w). In this way, the module enhanced the regions on which the model was focused.
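Because the output keeps the (c, h, w) shape, the combination of the input features with the single (h, w) attention map is read here as an element-wise (Hadamard) product broadcast across channels; that reading is our assumption. A minimal sketch with toy values:

```python
def apply_attention(feature, attention):
    """Scale every channel of a (c, h, w) feature map by the same (h, w) attention map."""
    return [[[v * a for v, a in zip(row, att_row)]
             for row, att_row in zip(channel, attention)]
            for channel in feature]


feature = [[[1.0, 2.0], [3.0, 4.0]], [[10.0, 20.0], [30.0, 40.0]]]  # (c=2, h=2, w=2)
attention = [[1.0, 0.5], [0.0, 0.25]]                                # (h=2, w=2)
out = apply_attention(feature, attention)
print(out)  # [[[1.0, 1.0], [0.0, 1.0]], [[10.0, 10.0], [0.0, 10.0]]]
```

Positions with attention near zero are suppressed in every channel, while positions near one pass through unchanged.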

Multiscale feature fusion small-object detection head

For the multiscale feature fusion small-object detection head, we found that the feature extraction layers of YOLOv5l were relatively shallow, which may limit the extraction of fine-grained details and consequently the detection of small objects. Therefore, we first added CBS and DMBST modules with an output size of 762×20×20 (channels × height × width) after the sixth layer of the backbone to enhance feature extraction in subsequent layers. We then added a 762×20×20 feature map fusion in the neck and a 762×20×20 detection head in the detection layer. As the network depth increased, more refined features could be extracted.
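For a 640×640 input, a 20×20 feature map corresponds to a downsampling stride of 32. The sketch tabulates grid sizes for the strides of the three default YOLOv5 detection heads (8, 16, and 32); it only illustrates the spatial arithmetic and does not model channel counts or the specific layer positions described above.

```python
def grid_size(image_size, stride):
    """Side length of the detection grid for a square input downsampled by `stride`."""
    if image_size % stride != 0:
        raise ValueError("image size must be a multiple of the stride")
    return image_size // stride


INPUT_SIZE = 640  # training input size given in Implementation details
for stride in (8, 16, 32):  # strides of the default YOLOv5 P3, P4, and P5 heads
    side = grid_size(INPUT_SIZE, stride)
    print(f"stride {stride:>2}: {side}x{side} grid")
```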

Dataset

We validated the effectiveness of YoGaNet on two datasets: the Comparison Detector dataset and a clinical dataset.

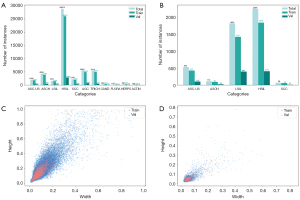

- The Comparison Detector dataset (12) comprises 7,410 TCT smears with 48,587 objects in 11 categories: ASC-US, ASCH, LSIL, HSIL, SCC, AGC, TRICH, CAND, FLORA, HERPS, and ACTIN. All TCT smears in this database were prepared using the Papanicolaou staining method. The whole-slide images (WSIs) were obtained with a Panoramic MIDI II digital slide scanner and then cropped into images with a standardized size of 1,175×581 pixels.

- For the creation of the clinical dataset, 117 cases were retrospectively collected from Daping Hospital, Army Medical University (Chongqing, China) in 2020. The inclusion criteria were as follows: (i) patients aged ≥18 years; and (ii) a TCT diagnosis of intraepithelial neoplasia or cervical cancer. The exclusion criteria were as follows: (i) poor image quality; and (ii) TCT smears containing fewer than 2,000 well-defined squamous epithelial cells. A total of 12 patients, comprising 1,514 images and 4,698 instances, were enrolled in the analysis. The mean age of the patients was 39.91 years, with a standard deviation of 8.90 years. The cases were divided into five categories: ASC-US, ASCH, LSIL, HSIL, and SCC (Figure 4A,4B). Two pathologists annotated the cervical lesions using labelImg (https://github.com/HumanSignal/labelImg). To ensure interannotator agreement, the two pathologists annotated the data together rather than independently and resolved any disagreement through discussion until reaching a consensus. Finally, a senior pathologist reviewed the pathological cell information in the database to ensure data accuracy.

This study was approved by the Ethics Committee of the Army Medical University (approval No. KY201774) and was conducted in accordance with the Declaration of Helsinki (as revised in 2013). As this study used retrospectively collected anonymous data and the sensitive information of patients was anonymized, the requirement for patient consent was waived.

The heights of all cells in the two datasets were visualized in scatter plots (Figure 4C,4D). Most normalized cell heights in the Comparison Detector dataset fell in the 0.1–0.6 range, whereas those in our clinical dataset were concentrated in the 0–0.1 range, indicating that most objects in our dataset were small.
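Assuming the plotted heights are box heights normalized by the image height (as in YOLO-format labels), the quantity summarized in Figure 4C,4D can be computed as below. The three boxes are hypothetical, and the 581-pixel image height is taken from the Comparison Detector image size.

```python
def normalized_heights(boxes, image_height):
    """Box heights divided by the image height; boxes are (x1, y1, x2, y2) in pixels."""
    return [(y2 - y1) / image_height for (_x1, y1, _x2, y2) in boxes]


def fraction_below(values, threshold):
    """Share of values strictly below a threshold."""
    return sum(v < threshold for v in values) / len(values)


# Hypothetical boxes in an image 581 pixels high.
boxes = [(10, 100, 40, 129), (50, 200, 90, 258), (100, 150, 400, 440)]
heights = normalized_heights(boxes, image_height=581)
print([round(h, 3) for h in heights])
print(fraction_below(heights, 0.1))
```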

Implementation details

We randomly divided the Comparison Detector dataset into training and validation datasets in a 9:1 ratio. The training dataset comprised 6,666 images, and the validation dataset comprised 774 images. Additionally, we randomly divided our dataset into training and validation datasets at the same 9:1 ratio.
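A random 9:1 split of image identifiers can be sketched as follows. The seed and the rounding rule are assumptions, so exact subset sizes depend on them and on how the split was actually carried out.

```python
import random


def split_train_val(items, train_fraction=0.9, seed=0):
    """Shuffle a copy of the items and split them into training and validation subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(train_fraction * len(shuffled))
    return shuffled[:n_train], shuffled[n_train:]


train, val = split_train_val(range(7410))  # one ID per Comparison Detector image
print(len(train), len(val))
```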

We implemented our proposed method in PyTorch on four Titan Xp GPUs (NVIDIA, Santa Clara, CA, USA), each with 12 GB of memory. The batch size was 16, the initial learning rate was 0.02, and the of the cosine annealing learning rate scheduler was set to 3.0. To achieve faster convergence, we trained the model using the stochastic gradient descent optimizer with a weight decay of 5×10⁻⁴ and a momentum of 0.937. We employed mosaic, hue-saturation-value augmentation, rotation, translation, flipping, and mix-up to prevent overfitting during training. Additionally, to improve the model’s ability to extract generalizable features, accelerate convergence, and optimize training performance, we pretrained the YOLOv5l model on the Common Objects in Context (COCO) dataset and used an input size of 640×640 pixels to maintain consistency with the pretrained model. Finally, the mean average precision at an intersection over union (IoU) threshold of 0.50 (mAP50), precision, recall, and F1 score were calculated for each category in ablation experiments to evaluate model performance.
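A cosine-annealed learning rate starting from the stated initial value of 0.02 can be sketched as below. The total epoch count and the final learning-rate fraction are hypothetical (they are not reported here), warmup is not modeled, and the scheduler parameter set to 3.0 above is not represented.

```python
import math


def cosine_lr(epoch, total_epochs, lr0=0.02, final_fraction=0.01):
    """Cosine-annealed learning rate decaying from lr0 to lr0 * final_fraction."""
    progress = epoch / total_epochs
    cosine = (1.0 + math.cos(math.pi * progress)) / 2.0  # 1 at the start, 0 at the end
    return lr0 * (final_fraction + (1.0 - final_fraction) * cosine)


TOTAL_EPOCHS = 100  # hypothetical; the epoch count is not reported
schedule = [cosine_lr(e, TOTAL_EPOCHS) for e in range(TOTAL_EPOCHS + 1)]
print(f"start {schedule[0]:.4f}, middle {schedule[50]:.4f}, end {schedule[-1]:.4f}")
```

The cosine shape keeps the learning rate high early, when the COCO-pretrained weights are being adapted, and decays it smoothly toward the end of training.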

Results

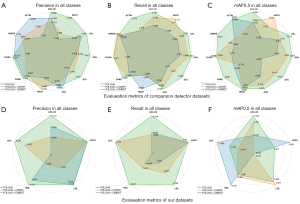

Comparison Detector dataset detection

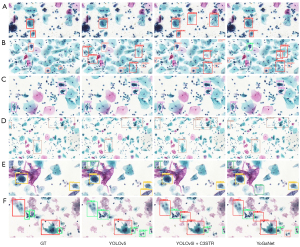

The mAP50 and recall of YoGaNet on the Comparison Detector dataset reached 68.6% and 66.7%, respectively. Compared with the values reported for this dataset by Liang et al. (12), our method improved the mAP50 and recall by 22.8 and 3.2 percentage points, respectively (Table 1). Compared with the baseline model (YOLOv5l), our method improved the mAP50 by 6.2 percentage points and the recall by 3.3 percentage points. Moreover, our method showed clear advantages in detecting ASC-US, ASCH, LSIL, HSIL, SCC, Candida, and flora cells (Table 2, Figure 5). In our experiments, the average inference time was 0.0913 s per image, indicating high efficiency.
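The gains reported in Table 1 are absolute differences between percentages. The sketch separates the absolute change (percentage points) from the relative change, using the mAP50 values of YoGaNet and YOLOv5l on the Comparison Detector dataset, and converts the reported per-image inference time into an approximate throughput, ignoring batching and data-loading effects.

```python
def improvement(new, old):
    """Absolute gain in percentage points and relative gain in percent."""
    absolute = new - old
    return absolute, 100.0 * absolute / old


abs_gain, rel_gain = improvement(68.6, 62.4)  # mAP50, YoGaNet vs YOLOv5l (Table 1)
print(f"mAP50: +{abs_gain:.1f} percentage points ({rel_gain:.1f}% relative)")
throughput = 1.0 / 0.0913  # reported mean inference time in seconds per image
print(f"about {throughput:.1f} images per second")
```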

Table 1

| Methods | Dataset | mAP50 | mAP50:95 | Recall | Precision | F1 |
|---|---|---|---|---|---|---|
| Comparison Detector | Comparison | 45.9 | – | 63.5 | – | – |
| YOLOv5l | Comparison | 62.4 | 35.2 | 63.4 | 58.8 | 61.0 |
| | Our dataset | 33.3 | 18.5 | 38.5 | 52.9 | 17.9 |
| YOLOv5l + C3STR | Comparison | 66.2 | 36.3 | 68.0† | 57.8 | 61.0 |
| | Our dataset | 35.6 | 19.9 | 38.5 | 53.4 | 22.4 |
| YoGaNet (proposed) | Comparison | 68.6† | 37.1† | 66.7 | 60.7 | 62.0† |
| | Our dataset | 36.8† | 21.2† | 58.5† | 48.1 | 22.6† |

Data are presented as percentage (%). †, the best results. C3STR, cross stage partial network with 3 convolutions and a residual block; mAP50, mean average precision calculated at an intersection over union (IoU) threshold of 0.50; mAP50:95, mean average precision averaged over IoU thresholds from 0.50 to 0.95 with a step size of 0.05.
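The metrics in Table 1 rest on the intersection over union (IoU) between a predicted and a ground-truth box: mAP50 counts a detection as correct when IoU ≥ 0.50, and mAP50:95 averages over the ten thresholds 0.50, 0.55, …, 0.95. The sketch computes IoU for axis-aligned boxes, lists those thresholds, and computes F1 as the harmonic mean of precision and recall; the boxes and the precision/recall values are illustrative.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


# IoU thresholds averaged by mAP50:95
thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]

pred, gt = (0, 0, 10, 10), (5, 0, 15, 10)
print(iou(pred, gt))  # 50 / 150: not a true positive at the 0.50 threshold
print(f1_score(0.6, 0.4))
```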

Table 2

| Methods | Metric | ASC-US (n=212) | ASC-H (n=402) | LSIL (n=162) | HSIL (n=2,792) | SCC (n=228) | AGC (n=653) | TRICH (n=477) | CAND (n=26) | FLORA (n=24) | HERPS (n=35) | ACTIN (n=16) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5l | mAP50 | 52.2 | 28.8 | 57.4 | 54.9 | 33.3 | 69.0 | 68.4† | 80.6 | 75.4 | 80.8 | 87.4† |
| | Recall | 48.5 | 26.9 | 59.4 | 53.7 | 37.7 | 65.8 | 68.8† | 80.8 | 79.2 | 77.1 | 75.0 |
| | Precision | 56.9 | 34.2 | 53.4 | 52.9 | 44.1 | 68.2† | 63.5 | 83.6 | 53.0 | 73.3† | 66.5† |
| YOLOv5l + C3STR | mAP50 | 54.4 | 34.7 | 57.7 | 59.2 | 41.2 | 73.3 | 67.7 | 77.0 | 84.8 | 83.7† | 74.6 |
| | Recall | 52.8 | 31.3† | 64.2 | 58.6 | 41.7 | 69.8 | 59.3 | 84.6 | 91.7† | 80.0† | 75.0 |
| | Precision | 55.1 | 38.2 | 52.4 | 54.3 | 47.1 | 63.1 | 70.9† | 80.8 | 46.7 | 69.1 | 59.0 |
| YoGaNet (proposed) | mAP50 | 57.7† | 35.5† | 66.0† | 62.9† | 46.9† | 77.9† | 67.9 | 90.7† | 86.8† | 82.5 | 82.3 |
| | Recall | 55.6† | 30.3 | 66.7† | 60.1† | 46.2† | 70.9† | 58.7 | 86.8† | 91.7† | 79.1 | 87.5† |
| | Precision | 60.2† | 48.0† | 56.4† | 61.1† | 51.5† | 66.2† | 69.4† | 84.9† | 53.7† | 66.4† | 50.2† |

Data are presented as percentage (%). †, the best results. ACTIN, actinomycosis; AGC, atypical glandular cell; ASC-H, atypical squamous cells, cannot exclude high-grade squamous intraepithelial lesion; ASC-US, atypical squamous cells of undetermined significance; C3STR, cross stage partial network with 3 convolutions and a residual block; CAND, vulvovaginal candidiasis; FLORA, bacterial flora; HERPS, genital herpes; HSIL, high-grade squamous intraepithelial lesion; LSIL, low-grade squamous intraepithelial lesion; mAP50, mean average precision calculated at an intersection over union threshold of 0.50; SCC, squamous cell carcinoma; TRICH, trichomoniasis.

Clinical dataset detection

Our clinical dataset included five types of squamous epithelial cell lesions. The mAP50 and recall of YoGaNet on our clinical dataset were 36.8% and 58.5%, respectively. Compared with the baseline model (YOLOv5l), YoGaNet improved the mAP50, recall, and F1 by 3.5, 20.0, and 4.7 percentage points, respectively (Table 1), whereas its precision was 4.8 percentage points lower. At the category level, our method improved the mAP50, recall, and precision for ASC-US and improved recall and precision for the other categories; however, the mAP50 decreased for several of these other categories (Table 3, Figure 5).

Table 3

| Methods | Metric | ASC-US (n=103) | ASC-H (n=22) | LSIL (n=399) | HSIL (n=410) | SCC (n=10) |
|---|---|---|---|---|---|---|
| YOLOv5l | mAP50 | 33.2 | 36.0† | 3.1 | 44.7† | 45.9† |
| | Recall | 60.2 | – | 52.1 | 42.7 | – |
| | Precision | 30.2 | 1 | 41.2 | 40.1 | 1 |
| YOLOv5l + C3STR | mAP50 | 39.7 | 2.5 | 51.7† | 40.2 | 34.4 |
| | Recall | 58.3 | 0 | 67.2 | 47.5 | 30.5 |
| | Precision | 25.8 | 1 | 32.1 | 27.9 | 43.7 |
| YoGaNet (ours) | mAP50 | 42.2† | 30.1 | 50.0 | 39.1 | 40.0 |
| | Recall | 72.8† | – | 78.8† | 62.7† | 30.2† |
| | Precision | 33.7† | 1† | 41.3† | 42.2† | 48.5† |

Data are presented as percentage (%). †, the best results. ASC-H, atypical squamous cells, cannot exclude high-grade squamous intraepithelial lesion; ASC-US, atypical squamous cells of undetermined significance; C3STR, cross stage partial network with 3 convolutions and a residual block; HSIL, high-grade squamous intraepithelial lesion; LSIL, low-grade squamous intraepithelial lesion; mAP50, mean average precision calculated at an intersection over union threshold of 0.50; SCC, squamous cell carcinoma.

Robustness of the models

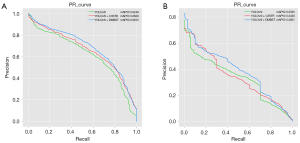

We plotted precision-recall (PR) curves to compare the detection capabilities of the different methods (Figure 6). On both datasets, YoGaNet showed clear advantages over YOLOv5l and over the YOLOv5l + cross stage partial network with 3 convolutions and a residual block (C3STR) model. In the ablation experiments, in which a C3STR module was simply added to YOLOv5l, our algorithm also performed better than YOLOv5l + C3STR, highlighting the robustness of the proposed approach.
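Average precision (AP) summarizes a PR curve as a single number, and mAP50 averages the per-class AP at an IoU threshold of 0.50. The sketch below uses PASCAL VOC-style all-point interpolation: precision is replaced by its running maximum from the right, and the area under that envelope is summed. The PR points are illustrative, and the evaluation code used for the reported results may interpolate differently.

```python
def average_precision(recalls, precisions):
    """All-point interpolated AP; recalls must be in ascending order (detections ranked by score)."""
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [0.0] + list(precisions) + [0.0]
    # Precision envelope: make precision non-increasing from right to left
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Area under the step function; zero-width steps contribute nothing
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


print(average_precision([0.5, 1.0], [1.0, 0.5]))              # 0.75
print(average_precision([0.25, 0.5, 0.75], [1.0, 0.5, 0.75]))  # 0.625
```

The envelope step means that a dip in precision followed by a recovery (as in the second example) is scored by the later, higher precision.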

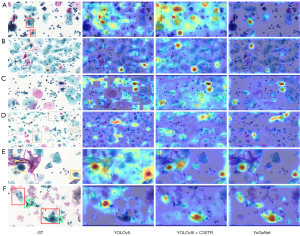

Discriminative localization of YoGaNet

Based on the detection results (Figure 7) and the feature maps generated with gradient-weighted class activation mapping (Grad-CAM) (Figure 8), YoGaNet localized regions of pathological cells more clearly and accurately than YOLOv5l or YOLOv5l + C3STR, demonstrating superior detection performance for small objects.
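Grad-CAM weights each channel of a chosen feature map by the spatial average of the gradient of a class score with respect to that channel, sums the weighted channels, and applies a ReLU. In this sketch the activations and gradients are given as toy lists; in practice they come from automatic differentiation on the trained detector at a chosen layer (not specified here), and upsampling to the image size and normalization for display are omitted.

```python
def grad_cam(activations, gradients):
    """Grad-CAM map from (k, h, w) activations and the class-score gradients w.r.t. them."""
    h, w = len(activations[0]), len(activations[0][0])
    # alpha_k: global-average-pooled gradient of channel k
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    # ReLU of the alpha-weighted sum of activation channels at each position
    return [[max(0.0, sum(a * act[i][j] for a, act in zip(alphas, activations)))
             for j in range(w)] for i in range(h)]


activations = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]
gradients = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
print(grad_cam(activations, gradients))  # [[1.0, 0.0], [0.0, 1.0]]
```

Channels whose gradients are negative on average push the map down, so only regions supporting the class survive the ReLU.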

Discussion

YoGaNet, based on YOLOv5l and DMBST modules, can efficiently detect ASC-US, ASCH, LSIL, HSIL, SCC, AGC, and pathogenic infections with fine-grained pathomorphology in large volumes of TCT images. Our end-to-end detection model simplifies the cumbersome cervical cell detection process presented in previous studies (11,12). More specifically, YoGaNet achieved higher mAP50 and recall than both the YOLOv5l baseline model examined in the ablation experiments and the approach described by Liang et al. (12). In general, our findings demonstrate the value of artificial intelligence technology in cervical cytology screening. YoGaNet showed improved sensitivity and may simplify cytopathology detection tasks and achieve accurate classification of various types of pathological cells. The proposed approach could alleviate the workload of pathologists in clinical examinations and provide effective assistance to less experienced medical professionals and those in resource-limited areas, increasing the possibility of early diagnosis and intervention for cervical cancer (19). It could therefore contribute to the modernization of the healthcare system and promote medical equity.

Grad-CAM visualization of TCT images confirmed that YoGaNet more readily localizes and classifies cervical lesions, particularly small ones. YoGaNet exploits the respective strengths of CNNs and transformers: CNNs excel at extracting local semantic information, whereas transformers efficiently extract global semantic information. Moreover, randomly dropping one branch drives the model to learn alternative feature representations. Finally, the generated attention maps enhance the regions of interest while suppressing the others, achieving better localization. In contrast, YOLOv5 pays excessive attention to the image background, which is undesirable.

Overall, our proposed algorithm performed better on the Comparison Detector dataset, which contains 11 types of lesion cells, than on our clinical dataset, which contains five. Although the Comparison Detector dataset has more categories, its overall and per-category data volumes are almost six times larger than those of the clinical dataset, resulting in better accuracy. Specifically, the ASCH and SCC categories in our dataset had few samples, with only 116 and 69 cases in the training dataset, respectively, leading to lower results than for the other lesion cell types. These findings highlight the importance of data volume for the effectiveness of deep learning. Additionally, the smaller size of the cells in our dataset likely hindered localization. In the Comparison Detector dataset, although squamous epithelial cell lesions outnumbered TRICH, CAND, FLORA, HERPS, ACTIN, and other cell types, their detection performance was poor. This may be because the cytological changes in squamous epithelial lesions do not have clear boundaries, causing the categories to overlap, and because these lesions can resemble normal cells. The dataset is also long-tailed, and the cytological changes of squamous lesions are diverse, whereas the morphological characteristics of biological pathogens are typically more overt, resulting in good detection performance.

Most diagnostic studies on cervical cytology are based on the segmentation or classification of cervical cells (20-22), which requires pathologists to manually delineate cell boundaries. This is time-consuming and labor-intensive, and the classification accuracy depends on the segmentation accuracy. Although Cao et al. (6) achieved a high cell detection accuracy of 95% in large-scale TCT images, they focused solely on distinguishing lesions from normal cells. In contrast, our study detected multiclass lesion cells in the Comparison Detector dataset and a clinical dataset, which better aligns with clinical needs. Another study (23) demonstrated the feasibility of using YOLO to detect cervical lesion cells in WSIs of cervical cytology. That study used an extensive dataset for testing and validation and achieved state-of-the-art results, suggesting that further research in this field should collect additional data for in-depth analysis. However, it did not assess pathogenic infections related to TRICH, CAND, FLORA, HERPS, or ACTIN. By identifying these infections, physicians can assess the state of cervical inflammation, predict the risk of cervical cancer, and guide further screening and therapeutic decisions accordingly. Our study addressed this aspect, enabling a more precise characterization of the patient’s cervical environment and thereby a more comprehensive diagnosis and monitoring of cervical cancer progression.

The proposed method has certain limitations. First, although our algorithm improved on the baseline, its accuracy does not yet meet the requirements for clinical use. Therefore, additional data should be collected and better transfer-learning methods applied to improve the detection ability and robustness of the model. Second, compared with YOLOv5l, the proposed model contains more parameters, which increases the computational complexity and inference time. Although we consider these trade-offs acceptable for improving detection accuracy, computational complexity must be considered in subsequent research. Finally, the proposed algorithm is built on YOLOv5l, which relies on nonmaximum suppression, and a gap in accuracy remains between our model and the current most advanced models.
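Nonmaximum suppression (NMS) removes duplicate detections by keeping the highest-scoring box and discarding lower-scoring boxes that overlap it beyond an IoU threshold. The greedy, class-agnostic sketch below illustrates the idea; the 0.45 threshold and the boxes are illustrative assumptions, and production implementations such as YOLOv5's typically also filter by confidence and suppress per class.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.45):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap any already-kept box too much
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 0, 11, 10), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]: box 1 overlaps box 0 with IoU ~0.82
```

Because this post-processing step is hand-designed and sequential, it sits outside the trainable network, which is one reason end-to-end detectors that avoid it are an active research direction.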

Conclusions

In this study, the proposed YoGaNet achieved end-to-end intelligent detection of multiclass cervical lesion cells in large-scale TCT images with improved performance in the Comparison Detector dataset and a clinical dataset. Compared with the baseline model and methods proposed elsewhere, YoGaNet focused more on recognizing the pathological morphology of small cells, indicating that this proposed method is effective for cell detection with fine morphological features. In future research, we will collect additional clinical data to validate our method and improve the clinical generalizability of the model.

Acknowledgments

None.

Footnote

Reporting Checklist: The authors have completed the TRIPOD + AI reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-24-1590/rc

Funding: This work was supported by the Natural Science Foundation of Chongqing (No. cstc2021jcyj-msxmX0965).

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-24-1590/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. This study was approved by the Ethics Committee of the Army Medical University (approval No. KY201774) and was conducted in accordance with the Declaration of Helsinki (as revised in 2013). As this study used retrospectively collected anonymous data and the sensitive information of patients was anonymized, the requirement for patient consent was waived.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Small W Jr, Bacon MA, Bajaj A, Chuang LT, Fisher BJ, Harkenrider MM, Jhingran A, Kitchener HC, Mileshkin LR, Viswanathan AN, Gaffney DK. Cervical cancer: A global health crisis. Cancer 2017;123:2404-12. [Crossref] [PubMed]

- Nayar R, Wilbur DC. The Bethesda system for reporting cervical cytology: definitions, criteria, and explanatory notes. 3rd ed. New York: Springer; 2015.

- Zhao M, Wu A, Song J, Sun X, Dong N. Automatic screening of cervical cells using block image processing. Biomed Eng Online 2016;15:14. [Crossref] [PubMed]

- Liu L, Wang Y, Wu D, Zhai Y, Tan L, Xiao J. Multitask learning for pathomorphology recognition of squamous intraepithelial lesion in thinprep cytologic test. In: Proceedings of the 2nd International Symposium on Image Computing and Digital Medicine. 2018:73-7.

- Zhao C, Shuai R, Ma L, Liu W, Wu M. Improving cervical cancer classification with imbalanced datasets combining taming transformers with T2T-ViT. Multimed Tools Appl 2022;81:24265-300. [Crossref] [PubMed]

- Cao L, Yang J, Rong Z, Li L, Xia B, You C, Lou G, Jiang L, Du C, Meng H, Wang W, Wang M, Li K, Hou Y. A novel attention-guided convolutional network for the detection of abnormal cervical cells in cervical cancer screening. Med Image Anal 2021;73:102197. [Crossref] [PubMed]

- Zhao Y, Fu C, Xu S, Cao L, Ma HF. LFANet: Lightweight feature attention network for abnormal cell segmentation in cervical cytology images. Comput Biol Med 2022;145:105500. [Crossref] [PubMed]

- Carion N, Massa F, Synnaeve G, Usunier N, Kirillov A, Zagoruyko S. End-to-end object detection with transformers. In: European Conference on Computer Vision. Cham: Springer International Publishing; 2020:213-29.

- Ren S, He K, Girshick R, Sun J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans Pattern Anal Mach Intell 2017;39:1137-49. [Crossref] [PubMed]

- He K, Gkioxari G, Dollár P, Girshick R. Mask R-CNN. IEEE Trans Pattern Anal Mach Intell 2020;42:386-97. [Crossref] [PubMed]

- Wang W, Tian Y, Xu Y, Zhang XX, Li YS, Zhao SF, Bai YH. 3cDe-Net: a cervical cancer cell detection network based on an improved backbone network and multiscale feature fusion. BMC Med Imaging 2022;22:130. [Crossref] [PubMed]

- Liang Y, Tang Z, Yan M, Chen J, Liu Q, Xiang Y. Comparison-based convolutional neural networks for cervical cell/clumps detection in the limited data scenario. arXiv:1810.05952 [Preprint]. 2018. Available online: https://arxiv.org/abs/1810.05952

- Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, Kaiser Ł, Polosukhin I. Attention is all you need. In: Advances in Neural Information Processing Systems 30 (NIPS 2017) 2017:6000-10.

- Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, Dehghani M, Minderer M, Heigold G, Gelly S, Uszkoreit J, Houlsby N. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv:2010.11929 [Preprint]. 2020. Available online: https://arxiv.org/pdf/2010.11929

- Liu Z, Lin Y, Cao Y, Hu H, Wei Y, Zhang Z, Lin S, Guo B. Swin transformer: Hierarchical vision transformer using shifted windows. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. 2021:10012-22.

- Li LH, Zhang P, Zhang H, Yang J, Li C, Zhong Y, Wang L, Yuan L, Zhang L, Hwang JN, Chang KW, Gao J. Grounded language-image pre-training. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2022:10965-75.

- Zhu X, Lyu S, Wang X, Zhao Q. TPH-YOLOv5: Improved YOLOv5 based on transformer prediction head for object detection on drone-captured scenarios. In: Proceedings of the IEEE/CVF International Conference on Computer Vision 2021:2778-88.

- Xue F, Wang Q, Guo G. TransFER: Learning relation-aware facial expression representations with transformers. In: Proceedings of the IEEE/CVF International Conference on Computer Vision 2021:3601-10.

- Bao H, Bi H, Zhang X, Zhao Y, Dong Y, Luo X, Zhou D, You Z, Wu Y, Liu Z, Zhang Y, Liu J, Fang L, Wang L. Artificial intelligence-assisted cytology for detection of cervical intraepithelial neoplasia or invasive cancer: A multicenter, clinical-based, observational study. Gynecol Oncol 2020;159:171-8. [Crossref] [PubMed]

- Saha R, Bajger M, Lee G. Circular shape constrained fuzzy clustering (CiscFC) for nucleus segmentation in Pap smear images. Comput Biol Med 2017;85:13-23. [Crossref] [PubMed]

- Zhang L, Kong H, Chin CT, Liu S, Chen Z, Wang T, Chen S. Segmentation of cytoplasm and nuclei of abnormal cells in cervical cytology using global and local graph cuts. Comput Med Imaging Graph 2014;38:369-80. [Crossref] [PubMed]

- Zhang L, Kong H, Liu S, Wang T, Chen S, Sonka M. Graph-based segmentation of abnormal nuclei in cervical cytology. Comput Med Imaging Graph 2017;56:38-48. [Crossref] [PubMed]

- Wang J, Yu Y, Tan Y, Wan H, Zheng N, He Z, et al. Artificial intelligence enables precision diagnosis of cervical cytology grades and cervical cancer. Nat Commun 2024;15:4369. [Crossref] [PubMed]