Multi-scale perceptual modulation network for low-dose computed tomography denoising

Introduction

Computed tomography (CT) is an important imaging modality used for clinical diagnosis and radiation treatment planning. However, the widespread use of CT raises serious concerns about the potential cancer risks of low-level X-ray radiation. Therefore, a low-dose computed tomography (LDCT) technique is urgently needed in clinical practice. The most common method for reducing the radiation dose is to lower the X-ray tube current (or voltage). However, as a consequence, the resulting LDCT images contain severe noise leading to degraded image quality, which affects the accuracy of clinical diagnosis. To effectively improve the image quality of LDCT, a large number of algorithms have been developed that can be broadly categorized into the following three types: (I) sinogram filtering; (II) iterative reconstruction; and (III) post-processing methods.

Sinogram domain methods operate on the original projection data. These methods typically filter the raw data to smooth the sinogram before the image is reconstructed, for example by filtered back-projection. Representative denoising methods in the sinogram domain include bilateral filtering (1), structural adaptive filtering (2), and the penalized weighted least-squares approach (3). However, these methods often lead to edge blurring or a loss of spatial resolution. In recent decades, iterative reconstruction algorithms have been used for LDCT denoising. These methods describe the CT imaging process with a mathematical model that integrates prior image information as a penalty term in the objective function, and then reconstruct images by iteratively solving the objective function. Representative penalty terms include total variation (TV) (4,5), dictionary learning (6), and low rank (7). Nevertheless, these algorithms are very time consuming because of the large number of projection and back-projection operations in the iterative process, which hinders their clinical application.

In recent years, many post-processing methods (8-11) based on deep-learning frameworks have been proposed for image domain denoising in LDCT. Chen et al. developed a residual encoder-decoder convolutional neural network (RED-CNN) to reduce noise in LDCT images and achieved superior outcomes (9). Kang et al. proposed a deep residual learning convolutional neural network (CNN) architecture to remove noise with the aid of directional wavelets (12), showing the potential of deep learning in LDCT denoising. With the advancement of generative adversarial networks, Wolterink et al. trained a generator CNN together with a discriminator CNN to extract normal-dose computed tomography (NDCT) from the LDCT, and thus achieved noise reduction (13). Wu et al. improved denoising performance in LDCT by iteratively building a cascade of CNNs (14). Shan et al. presented a modularized adaptive processing neural network model that applies end-to-process denoising mapping to guide the LDCT denoising process in a task-specific fashion (10).

Since 2020, transformers (15) that rely on self-attention mechanisms have shown superior performance compared to CNNs in LDCT denoising. Zhang et al. were the first to apply transformers to LDCT denoising, dividing LDCT images into high- and low-frequency parts, and using transformers for composite inference (16). Wang et al. presented a convolution-free transformer that enhances feature extraction and noise reduction by introducing dilated tokenization and circular shifting (17). Yang et al. designed a sinogram inner-structure transformer that introduces the inner-structure of the original projection data to improve LDCT denoising performance in the image domain (18). However, these existing transformer-based methods have a number of limitations. First, the complexity of the vanilla Transformer is quadratic with respect to the input size, which is computationally expensive for high-resolution LDCT inputs. Second, some transformers reduce the complexity by adopting a hybrid approach (e.g., by introducing a “sliding-window” strategy and applying self-attention in local image regions), which makes their behavior more similar to that of CNNs. Advanced methods, such as cyclic shifting, can optimize speed; however, these methods also increase the complexity of the system design. Ironically, a CNN can achieve numerous desired properties with a straightforward and succinct approach.

Recently, some studies have shown that CNN models with large kernels and advanced components, such as the Gaussian error linear unit activation, can compete with transformers on tasks such as classification and detection. However, simply increasing the kernel size to improve performance also greatly increases the computational burden. Moreover, applying convolutions directly to feature maps falls short of the desired performance. Inspired by recent convolutional modulation networks (19,20), we found that convolutional modulation has stronger modeling capability than standalone convolutional blocks. Therefore, we propose a multi-scale perceptual modulation network (MSPMnet) and evaluate it on the Mayo Clinic dataset. Both the quantitative and qualitative results show that the proposed method suppresses the noise and artifacts in LDCT images more effectively than the compared methods while preserving details. The contributions of this study are as follows:

- It proposed the multi-scale perceptual modulation (MSPM) module that incorporates a powerful multi-head decomposable convolution (MHDC) and a 1×1 omni-dimensional dynamic convolution (ODDC) to enhance the receptive field and facilitate the integration of multi-scale contexts;

- It proposed a novel receptive field-ramp mechanism that gradually increases the receptive field as the depth of the network increases to improve model performance;

- It validated the effectiveness of the proposed modules through ablation experiments, and compared the impact of three training paradigms on the denoising performance of the model.

The remaining sections of this article are organized as follows: the “Methods” section describes the proposed network structure in detail; the “Results” section evaluates and validates the proposed model; and the “Discussion” and “Conclusions” sections summarize the main findings and contributions of this study.

Methods

In this section, the overall pipeline of our MSPMnet architecture is first described. Second, we elaborate on the proposed MSPM block. Third, we describe the proposed receptive field-ramp mechanism. Fourth, we present the learning paradigm of our model. Finally, we provide details of the experimental datasets and network training.

Network architecture

The overall pipeline of the proposed MSPMnet is depicted in Figure 1. An LDCT image $I_{\mathrm{LDCT}}$ is first processed by a convolution operation to generate low-level feature embeddings $F_0 \in \mathbb{R}^{H \times W \times C}$, where $H \times W$ denotes the spatial dimensions and $C$ the number of channels. Next, the low-level features are transformed into deep features by a four-level encoder-decoder. Each level contains a stack of well-designed convolutional blocks. Starting from the high-resolution input, the encoder shrinks the spatial size hierarchically while enlarging the channel capacity. The decoder takes the low-resolution latent feature produced by the fourth level of the encoder as input and progressively restores the high-resolution representation. Feature down-sampling between encoder levels and feature up-sampling between decoder levels are performed using pixel-unshuffle and pixel-shuffle, respectively. The encoder features are concatenated with the decoder features through skip connections, followed by 1×1 convolutions that halve the number of channels at all levels except level one (L1). At L1, the low-level features from the encoder and the high-level features from the decoder are aggregated by concatenation only. Next, the deep features pass through a convolution layer to generate the residual image $R$. Finally, the restored CT image $\hat{I}$ is obtained by adding the residual image to the input LDCT image: $\hat{I} = I_{\mathrm{LDCT}} + R$.
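The pixel-unshuffle and pixel-shuffle resampling mentioned above can be illustrated with a small, dependency-free sketch. It assumes a feature map stored as a nested list of shape (C, H, W) and a scale factor `r`; the function names and the toy 4×4 input are illustrative and are not taken from the authors' code.

```python
def pixel_unshuffle(x, r):
    """Rearrange a (C, H, W) nested list into (C*r*r, H//r, W//r)."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    assert h % r == 0 and w % r == 0, "H and W must be divisible by r"
    out = []
    for ch in range(c):
        for dy in range(r):
            for dx in range(r):
                # Each output channel collects one position of every r x r patch.
                out.append([[x[ch][i * r + dy][j * r + dx]
                             for j in range(w // r)]
                            for i in range(h // r)])
    return out


def pixel_shuffle(x, r):
    """Inverse rearrangement: (C*r*r, H, W) -> (C, H*r, W*r)."""
    c, h, w = len(x) // (r * r), len(x[0]), len(x[0][0])
    out = [[[0] * (w * r) for _ in range(h * r)] for _ in range(c)]
    for ch in range(c):
        for dy in range(r):
            for dx in range(r):
                src = x[ch * r * r + dy * r + dx]
                for i in range(h):
                    for j in range(w):
                        out[ch][i * r + dy][j * r + dx] = src[i][j]
    return out


def shape(x):
    """Return the (C, H, W) shape of a nested list."""
    return (len(x), len(x[0]), len(x[0][0]))


# Toy single-channel 4x4 "image" with values 0..15.
image = [[[row * 4 + col for col in range(4)] for row in range(4)]]
down = pixel_unshuffle(image, 2)   # spatial size halves, channels x4
restored = pixel_shuffle(down, 2)  # exact inverse, no information lost
```

Because both operations only rearrange values, down-sampling followed by up-sampling is lossless, unlike pooling or strided convolution.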

MSPM

The structures of the proposed modules are shown in Figure 2, and discussed in detail below.

MHDC

The proposed MHDC incorporates multiple convolutions with various kernel sizes, and a maximum-pooling operation to capture features across multiple scales. Further, the MHDC is able to expand the receptive field with large convolutional kernels, enhancing its ability to model long-range dependencies. Since conventional depth-wise convolutions with large kernels greatly increase the computational burden of the model, we split the two large two-dimensional kernels with sizes k×k and (k+2) × (k+2), respectively, into two sets of cascaded one-dimensional kernels to reduce the computational cost. The value of k is given in the subsequent section. In addition, considering the sharp sensitivity of the maximum filter, we introduce a maximum-pooling layer as one of the branches. As Figure 2B shows, the MHDC divides the input into four groups along the channel, with two groups flowing into a maximum-pooling layer and a 3×3 depth-wise convolution, respectively, while the remaining two groups pass through different sets of large decomposable kernel convolutions, reducing the parameter size and computational cost. Finally, the outputs from all branches are concatenated. Our proposed MHDC is expressed as follows:

where $k$ denotes the band kernel size.
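The four-branch computation described above can be written compactly as follows. This is a sketch in assumed notation rather than the exact published form of Eq. [1]: $X_1,\dots,X_4$ denote the four channel groups, $\mathrm{DW}$ denotes a depth-wise convolution, and the one-dimensional band kernels are assumed to be depth-wise.

```latex
\begin{aligned}
[X_1, X_2, X_3, X_4] &= \mathrm{Split}(X),\\
Y_1 &= \mathrm{MaxPool}(X_1),\\
Y_2 &= \mathrm{DW}_{3\times 3}(X_2),\\
Y_3 &= \mathrm{DW}_{1\times k}\bigl(\mathrm{DW}_{k\times 1}(X_3)\bigr),\\
Y_4 &= \mathrm{DW}_{1\times (k+2)}\bigl(\mathrm{DW}_{(k+2)\times 1}(X_4)\bigr),\\
\mathrm{MHDC}(X) &= \mathrm{Concat}(Y_1, Y_2, Y_3, Y_4).
\end{aligned}
```

Under this reading, each large branch costs on the order of $2k$ multiplications per channel and pixel instead of $k^2$, which is the source of the savings described in the text.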

Multi-scale aggregation

The most popular method for enhancing the channel-wise interaction of features is the multi-layer perceptron (MLP) with a 4× channel amplification factor. However, such an MLP carries a considerable computational cost. Therefore, we introduce a 1×1 ODDC (21) to enhance the channel-wise interaction among multi-scale features. The ODDC integrates a squeeze-excitation (SE) module (22) with multiple heads to compute weight factors along the following four dimensions of the convolutional kernel space: spatial dimension, input channel dimension, output channel dimension, and kernel dimension. To balance performance and efficiency, we employ a 1×1 kernel size and discard the weights of the spatial and kernel dimensions, focusing solely on the input and output channel dimensions to enhance the channel interactions. Here, the modulator is obtained by applying the 1×1 ODDC to the multi-scale features produced by the MHDC.
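As a rough illustration of the channel-only weighting just described, the sketch below rescales a static 1×1 kernel along its input and output channel dimensions using gates computed from globally pooled features. It is a simplification under stated assumptions: each gate is a single linear map followed by a sigmoid (the SE module in the ODDC is more elaborate), and all names and shapes are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def channel_gates(pooled, gate_weights):
    """SE-style gate (assumed form): linear map of pooled features, then sigmoid."""
    return [sigmoid(s) for s in matvec(gate_weights, pooled)]


def dynamic_conv1x1(x, weight, gate_in_w, gate_out_w):
    """Apply a 1x1 convolution whose kernel is rescaled for each input.

    x:          feature map as a list of pixels, each a list of C_in values
    weight:     static 1x1 kernel of shape (C_out, C_in)
    gate_in_w:  hypothetical gate weights of shape (C_in, C_in)
    gate_out_w: hypothetical gate weights of shape (C_out, C_in)
    """
    c_in = len(weight[0])
    n = len(x)
    # Squeeze: global average pooling over all pixels.
    pooled = [sum(p[c] for p in x) / n for c in range(c_in)]
    a_in = channel_gates(pooled, gate_in_w)    # one factor per input channel
    a_out = channel_gates(pooled, gate_out_w)  # one factor per output channel
    # Modulate the kernel along its input and output channel dimensions only.
    dyn = [[a_out[o] * weight[o][i] * a_in[i] for i in range(c_in)]
           for o in range(len(weight))]
    return [matvec(dyn, p) for p in x]


# Toy example: two pixels, two input channels, three output channels.
x = [[1.0, 2.0], [3.0, 4.0]]
weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
zeros_in = [[0.0, 0.0], [0.0, 0.0]]
zeros_out = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
y = dynamic_conv1x1(x, weight, zeros_in, zeros_out)
```

With all-zero gate weights every gate equals 0.5, so the kernel is uniformly scaled by 0.25; with learned gate weights the scaling would differ per channel and per input.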

Multi-scale modulation

As Figure 2A shows, after capturing and aggregating multi-scale spatial features, we obtain an output feature map, denoted as the modulator $M$, which is then used to modulate the values via the Hadamard product. Given the input $X$, the output can be calculated as follows:

where ⊙ denotes the Hadamard product, and the two weight matrices are those of two linear layers. The modulator is computed by Eq. [3], which allows it to change dynamically with the input, thereby enabling adaptive self-modulation. Moreover, self-attention calculates an $N \times N$ similarity score matrix, where $N = H \times W$ is the sequence length; this discards the channel dimension and causes the computational complexity to increase quadratically with $N$. In contrast, the modulator preserves the channel dimension, allowing for both spatial- and channel-specific modulation of the feature maps after the Hadamard product.
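To make the contrast with self-attention concrete, the sketch below applies a Hadamard product to a tiny feature map and compares the number of entries in an attention score matrix with the number in a channel-preserving modulator. The feature-map size and channel count are hypothetical values chosen only for illustration.

```python
def hadamard(a, b):
    """Element-wise product of two (N, C) feature maps stored as nested lists."""
    return [[p * q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def attention_score_entries(n_tokens):
    """Entries in a self-attention similarity matrix (N x N)."""
    return n_tokens * n_tokens


def modulator_entries(n_tokens, channels):
    """Entries in a modulator that keeps the channel dimension (N x C)."""
    return n_tokens * channels


values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]     # N = 3 pixels, C = 2 channels
modulator = [[0.5, 1.0], [1.0, 0.0], [2.0, 0.5]]  # same shape as the values
modulated = hadamard(modulator, values)

n = 64 * 64  # hypothetical number of pixels in a feature map
c = 48       # hypothetical channel count
ratio = attention_score_entries(n) / modulator_entries(n, c)
```

The modulated output keeps the (N, C) shape of the values, whereas the attention score matrix grows with N squared; for the sizes assumed here it holds roughly 85 times more entries than the modulator.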

Receptive field-ramp mechanism

In general hierarchical vision frameworks, the input images are down-sampled (i.e., the resolution is reduced) multiple times from the top levels to the bottom levels, resulting in a loss of detail information. Previous research reported that top levels tend to capture local information, while bottom levels tend to model relatively long-range dependencies (23). Based on this, we propose a receptive field-ramp mechanism that gradually increases the receptive field from the top to the bottom level to enhance the modeling of long-range dependencies. As Figure 1 shows, our network has four levels with different channel capacities and spatial resolutions. For each level, we design a specific kernel size. Specifically, we define a level-dependent kernel size $k_i$ and let $k = k_i$ in Eq. [1], where $i$ is the level index of the network. Smaller kernels are used in the top levels to better capture the local features of the image; for example, L1 uses the smallest kernel. Larger kernels are introduced in the lower levels to allow the network to better learn the long-range dependencies; that is, $k_i$ gradually increases as the network level increases, and level four uses the largest kernel. Through this flexible receptive field-ramp mechanism, the MSPMnet can improve its ability to model long-range dependencies, enhancing the overall performance of the model.
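The effect of such a ramp can be sketched with a simple receptive-field calculation. The example assumes one stride-1 convolution per level and a 2× down-sampling between levels, which is a deliberate simplification of the actual network; the schedule [7, 9, 11, 13] is the ramp configuration listed in Table 5, and the function names are illustrative.

```python
def ramp_kernel_sizes(num_levels, first_kernel, step):
    """Kernel size per level, growing from the top (level 1) to the bottom."""
    if first_kernel % 2 == 0 or step % 2 != 0:
        raise ValueError("use an odd first kernel and an even step so kernels stay odd")
    return [first_kernel + step * level for level in range(num_levels)]


def receptive_field(kernel_sizes, downsample=2):
    """Receptive field in input pixels, assuming one convolution per level.

    Each level is assumed to run at a resolution reduced by `downsample`
    relative to the previous one, so one step at level i spans
    downsample**i input pixels.
    """
    rf, jump = 1, 1
    for k in kernel_sizes:
        rf += (k - 1) * jump
        jump *= downsample
    return rf


sizes = ramp_kernel_sizes(num_levels=4, first_kernel=7, step=2)
rf_ramp = receptive_field(sizes)                 # increasing kernels
rf_flat = receptive_field([7, 7, 7, 7])          # constant kernels
rf_reversed = receptive_field([13, 11, 9, 7])    # decreasing kernels
```

Under these assumptions, placing the largest kernels at the coarsest levels yields the widest receptive field for the same set of kernel sizes, because each step at a deeper level covers more input pixels.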

Learning paradigm

Currently, there are two popular learning paradigms in deep-learning networks: direct mapping and residual learning. In direct mapping, the network directly learns the complete mapping function from the input LDCT to the output denoised image (24). Conversely, in residual learning, the network learns residuals; that is, it attempts to capture the difference (the residual noise) between the input LDCT and desired denoised output rather than learning the entire mapping function directly. Residual learning has been shown to be effective for restoration, and to outperform the direct mapping paradigm in detail recovery (25). Therefore, we adopt the residual learning paradigm in the proposed network, as described in “Network architecture” section. Recently, Zhu et al. (26) introduced an intriguing paradigm in which the residual noise is extracted prior to image denoising, and then concatenated with the noisy image for input into the network, which directly outputs the denoised image. We refer to this paradigm as residual extraction + direct mapping. To evaluate the impact of these learning paradigms on the network performance, comparative experiments were conducted.
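The three paradigms differ only in how the network output is combined with the input, which the following sketch makes explicit. Images are flattened to lists of pixel values, list concatenation stands in for channel concatenation, and the toy "networks" are hypothetical placeholders rather than trained models.

```python
def direct_mapping(net, ldct):
    """The network outputs the denoised image itself."""
    return net(ldct)


def residual_learning(net, ldct):
    """The network outputs a residual that is added back to the input."""
    residual = net(ldct)
    return [x + r for x, r in zip(ldct, residual)]


def residual_extraction_direct_mapping(extractor, net, ldct):
    """Noise is estimated first, concatenated with the input, then mapped directly."""
    noise = extractor(ldct)
    return net(ldct + noise)  # list concatenation stands in for channel concat


# Toy placeholders: every pixel is assumed to carry +1.0 of noise.
def toy_direct_net(x):
    return [v - 1.0 for v in x]


def toy_residual_net(x):
    return [-1.0 for _ in x]


def toy_extractor(x):
    return [1.0 for _ in x]


def toy_fusion_net(stacked):
    half = len(stacked) // 2
    return [stacked[i] - stacked[half + i] for i in range(half)]


ldct = [10.0, 12.0, 9.0]
out_direct = direct_mapping(toy_direct_net, ldct)
out_residual = residual_learning(toy_residual_net, ldct)
out_extract = residual_extraction_direct_mapping(toy_extractor, toy_fusion_net, ldct)
```

In this toy setting all three paradigms reach the same result; in practice they differ in what the network has to learn, which is what the comparative experiments examine.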

Experimental datasets and network training

Dataset

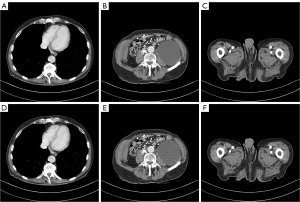

To assess the performance of the model, we employed a classic dataset from the Mayo Clinic released for the 2016 LDCT Grand Challenge. This dataset comprises a total of 2,378 CT image pairs from 10 patients, collected at 120 kV, with the low-dose image of each pair corresponding to a quarter dose and each image having a size of 512×512. The data of nine patients were used as the training set for the model, while the data of the remaining patient (L506), comprising 211 images, were used as the testing set. Sample image pairs from the Mayo Clinic dataset are presented in Figure 3. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The patient images used in this study are publicly available, and they were retrospectively obtained after approval by the Institutional Review Board of the Mayo Clinic.

Network training

We used an NVIDIA RTX 4090 (24 GB) GPU to train the models with the AdamW optimizer (weight decay 1e-4). The learning rate was initialized at 3e-4 and gradually decayed to 1e-5 using cosine annealing. During training, data augmentation was employed to enhance the diversity of the training data. More training images were generated by randomly rotating (by 90°, 180°, or 270°) and flipping (horizontally and vertically) the original images.
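The learning-rate schedule described above can be sketched with the standard cosine annealing formula. The start and end values (3e-4 and 1e-5) come from the text; the total number of steps is not stated there, so `total_steps` is a hypothetical parameter.

```python
import math


def cosine_annealed_lr(step, total_steps, lr_max=3e-4, lr_min=1e-5):
    """Learning rate at `step` under cosine annealing from lr_max to lr_min."""
    progress = min(max(step / total_steps, 0.0), 1.0)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


total_steps = 1000  # hypothetical training length
lr_start = cosine_annealed_lr(0, total_steps)
lr_middle = cosine_annealed_lr(total_steps // 2, total_steps)
lr_end = cosine_annealed_lr(total_steps, total_steps)
```

The schedule decays slowly at first, fastest around the midpoint, and flattens out as it approaches the final learning rate.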

Results

The peak signal-to-noise ratio (PSNR), root mean-square error (RMSE), structural similarity index (SSIM) (27), and feature similarity index (FSIM) (28) were used to quantify the quality of the denoised images. We compared our method with five state-of-the-art methods: TV, RED-CNN (9), wavelet residual network (WavResNet) (12), dual-path transformer for low-dose computed tomography (TransCT) (16), and convolution-free token2token dilated vision transformer (CTformer) (17). TV is a conventional image domain-based denoising algorithm. RED-CNN and WavResNet are two classical CNN-based methods. TransCT and CTformer are transformer-based methods. All the selected methods are popular LDCT denoising methods that have been published in flagship journals, and we retrained the learning-based models with their officially released code.
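For reference, RMSE and PSNR can be computed as in the following sketch. Images are flattened to lists of pixel values, and `data_range` (the maximum possible intensity span) is an assumption that must match the intensity scaling used in the evaluation; the value used in the paper is not stated in the text.

```python
import math


def rmse(pred, ref):
    """Root mean-square error between two equally sized pixel lists."""
    n = len(pred)
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / n)


def psnr(pred, ref, data_range):
    """Peak signal-to-noise ratio in dB for a given intensity range."""
    err = rmse(pred, ref)
    if err == 0:
        return math.inf
    return 20.0 * math.log10(data_range / err)


reference = [0.0, 100.0, 200.0, 300.0]
denoised = [10.0, 90.0, 210.0, 290.0]
toy_rmse = rmse(denoised, reference)
toy_psnr = psnr(denoised, reference, data_range=1000.0)
```

Because PSNR is a logarithmic function of RMSE for a fixed data range, the two metrics always rank a pair of results on the same image in the same order.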

Experimental results

Visual evaluation

To evaluate the denoising ability of the proposed MSPMnet, we provide the results for two representative slices: a pelvic slice and an abdominal slice. Figure 4 compares the MSPMnet with the baseline methods for the pelvic slice. All methods suppressed noise and artifacts to varying degrees, but the denoised image obtained by the MSPMnet was the clearest. Specifically, the image processed by TV retained noticeable noise, with low image contrast and excessively blurred edges. The RED-CNN overly smoothed the image details, hindering the differentiation of crucial structural edges. The WavResNet, TransCT, and CTformer removed image noise and artifacts more effectively, but their edge preservation was inadequate, making it challenging to distinguish the edges of structures with similar tissue densities. In contrast, the MSPMnet preserved the edges of different tissues and was visually closest to NDCT. To illustrate image details in low-contrast regions and facilitate further analysis, we zoomed in on the region of interest (ROI) indicated by the yellow box in the image. The magnified ROI is shown in the lower left corner of Figure 4. In the magnified image, TV, RED-CNN, and TransCT smoothed the edges of the lesion, making it difficult to differentiate between the lesion edges and normal tissue. The WavResNet and CTformer retained these edge structures, but exhibited a degree of oversmoothing and introduced additional artifacts near the lesion. In contrast, the MSPMnet removed noise and artifacts while preserving the edges of the lesion, which are clear and easy to distinguish.

Figure 6 shows the difference maps of the denoised images obtained with the different methods relative to NDCT. The more structure that is visible in a difference map, the larger the deviation from NDCT. The images obtained by the MSPMnet exhibited the smallest deviation from the ground truth, suggesting that the MSPMnet recovered the fine structures of the images more effectively and substantially reduced the noise and artifacts.

Comparative results for the representative abdominal slice are illustrated in Figure 7. Notably, the MSPMnet generated the clearest image, which was closest to NDCT. TV partially mitigated noise and artifacts, but a certain degree of noise persisted, leading to low image contrast and unclear edges. RED-CNN removed noise and artifacts but excessively smoothed the low-contrast structures, making it challenging to discern certain crucial structural edges. The WavResNet, TransCT, and CTformer effectively eliminated the image noise and artifacts, but still lost image details in organ regions. In contrast, the proposed MSPMnet performed well in both denoising and structural preservation, yielding images that showed structural details not recovered by the competing methods. A further performance analysis was conducted by examining magnified ROI images. In the magnified ROI images, only the MSPMnet recovered the edges of the caudate lobe and left lateral segment, achieving the best visual resemblance to NDCT.

The difference maps relative to NDCT for different methods are shown in Figure 8. Notably, the images obtained by the MSPMnet exhibited the least deviation from the reference images, indicating that the MSPMnet preserved the majority of image details and significantly reduced noise and artifacts.

Quantitative evaluation

To better evaluate differences in the CT numbers in the denoised images, Figure 5 provides profile plots of the two slices marked in Figure 4A and Figure 7A with red lines. Notably, the CT numbers restored by the MSPMnet were considerably closer to those of NDCT.

Table 1 presents the quantitative metrics of LDCT, and the denoised images obtained by various processing methods. The values (mean ± standard deviation) were obtained from a single evaluation of each model on the L506 test set, reflecting variation across the 211 images. The proposed MSPMnet had the lowest RMSE of 8.3094±1.9325 and the highest PSNR of 33.8525±1.8213 dB, SSIM of 0.9309±0.0272, and FSIM of 0.9699±0.0113, indicating the superior performance of the proposed MSPMnet compared to the other competing methods.

Table 1

| Methods | PSNR (dB)↑ | RMSE↓ | SSIM↑ | FSIM↑ |
|---|---|---|---|---|
| LDCT | 29.2489±2.1151 | 14.2416±3.9617 | 0.8891±0.0354 | 0.9548±0.0153 |
| TV | 31.6660±2.1929 | 10.8123±3.1717 | 0.9173±0.0314 | 0.9648±0.0131 |
| RED-CNN | 32.3290±1.6569 | 9.8630±2.0890 | 0.9194±0.0257 | 0.9667±0.0090 |
| WavResNet | 32.7731±1.5689 | 9.3513±1.8500 | 0.9205±0.0253 | 0.9621±0.0097 |
| TransCT | 32.5506±1.5984 | 9.6015±1.9563 | 0.9206±0.0270 | 0.9672±0.0096 |
| CTformer | 33.1307±1.7781 | 9.0200±2.0536 | 0.9247±0.0272 | 0.9681±0.0099 |
| MSPMnet | 33.8525±1.8213 | 8.3094±1.9325 | 0.9309±0.0272 | 0.9699±0.0113 |

↑ indicates that a higher value corresponds to a better result; ↓ indicates that a lower value corresponds to a better result. SD, standard deviation; LDCT, low-dose computed tomography; TV, total variation; RED-CNN, residual encoder-decoder convolutional neural network; WavResNet, wavelet residual network; TransCT, dual-path transformer for low-dose computed tomography; CTformer, convolution-free token2token dilated vision transformer; MSPMnet, multi-scale perceptual modulation network; PSNR, peak signal-to-noise ratio; dB, decibel; RMSE, root mean-square error; SSIM, structural similarity index; FSIM, feature similarity index.

Analytical experiments

Impact of MSPM

To improve the capability of the model to capture multi-scale spatial features and expand the receptive field effectively while remaining lightweight, we proposed the MSPM, the key component of which is the MHDC. To evaluate the effects of the proposed MSPM, we replaced the MSPM module with a Resblock (29) (composed of conventional convolutions) and with a ConvNeXt (30) block (mainly powered by depth-wise convolution), respectively. The results are shown in Table 2. Compared to the conventional convolution-based module and the depth-wise convolution-based module, the MSPM showed the best denoising performance, with the lowest RMSE and the highest PSNR, SSIM, and FSIM. In terms of complexity, the MSPM had the fewest trainable parameters and multiply-accumulate operations (MACs), which were 65.60% and 77.61% lower than those of the Resblock, respectively. Further, to demonstrate the advantage of the MHDC in terms of computational cost, we present a comparison of the MACs for different convolutions in Figure 9. Notably, the proposed MHDC requires far fewer MACs than both conventional convolution and depth-wise convolution as the kernel size increases.

Table 2

| Convolution type | Parm (M) | MACs (G) | PSNR (dB) ↑ | RMSE↓ | SSIM↑ | FSIM↑ |
|---|---|---|---|---|---|---|
| Conventional conv. | 35.53 | 35.78 | 33.8506±1.8289 | 8.3129±1.9407 | 0.9304±0.0273 | 0.9690±0.0119 |
| Depth-wise conv. | 19.97 | 19.01 | 33.8355±1.8285 | 8.3272±1.9432 | 0.9307±0.0276 | 0.9694±0.0119 |
| MSPM | 12.22 | 8.01 | 33.8525±1.8213 | 8.3094±1.9325 | 0.9309±0.0272 | 0.9699±0.0113 |

↓ indicates that a lower value corresponds to a better result. MSPM, multi-scale perceptual modulation; SD, standard deviation; conv., convolution; Parm, trainable parameters; M, millions; MACs, multiply-accumulate operations; G, giga; PSNR, peak signal-to-noise ratio; dB, decibel; RMSE, root mean-square error; SSIM, structural similarity index; FSIM, feature similarity index; ↑ indicates that a higher value corresponds to a better result.
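The complexity figures can be checked with a short calculation. The sketch below recomputes the relative reductions from the parameter and MAC counts in Table 2 and gives a back-of-the-envelope per-pixel MAC count for the three convolution types. The per-pixel formulas assume equal input and output channel counts, a four-way channel split, depth-wise band kernels, and no bias terms; they are an approximation, not the authors' accounting.

```python
def conventional_macs(k, channels):
    """Per-pixel MACs of a k x k convolution with `channels` in and out."""
    return k * k * channels * channels


def depthwise_macs(k, channels):
    """Per-pixel MACs of a k x k depth-wise convolution."""
    return k * k * channels


def mhdc_macs(k, channels):
    """Per-pixel MACs of the four-branch layout sketched in the text."""
    assert channels % 4 == 0, "channels are split into four equal groups"
    group = channels // 4
    pooling = 0                    # max pooling needs no multiplications
    dw3 = 3 * 3 * group            # 3 x 3 depth-wise branch
    band_k = 2 * k * group         # k x 1 followed by 1 x k
    band_k2 = 2 * (k + 2) * group  # (k+2) x 1 followed by 1 x (k+2)
    return pooling + dw3 + band_k + band_k2


def percent_reduction(baseline, value):
    """Relative reduction of `value` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - value) / baseline


# Values from Table 2: Resblock (conventional conv.) versus MSPM.
param_reduction = percent_reduction(35.53, 12.22)
mac_reduction = percent_reduction(35.78, 8.01)

# Per-pixel cost for a hypothetical 64-channel layer with k = 11.
cost_mhdc = mhdc_macs(11, 64)
cost_depthwise = depthwise_macs(13, 64)
cost_conventional = conventional_macs(13, 64)
```

The recomputed reductions agree with the reported 65.60% and 77.61% up to rounding of the table entries. The per-pixel counts grow linearly in k for the decomposed kernels and quadratically for the two-dimensional ones, which is consistent with the widening gap described for Figure 9.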

Impact of internal components in MHDC

The proposed MHDC enhances multi-scale feature learning by fusing multiple branches. To evaluate different branch combinations, we replaced the component in each branch with an alternative module in turn. The experimental results are presented in Table 3, where A → B denotes that component A in the MHDC is replaced by module B. The MHDC achieved the best PSNR, RMSE, and FSIM, and its SSIM was within 0.0001 of the best variant, indicating the best overall performance among the combinations tested.

Table 3

| Components | PSNR (dB) ↑ | RMSE↓ | SSIM↑ | FSIM↑ |
|---|---|---|---|---|
| Maximum pooling → average pooling | 33.8422±1.8223 | 8.3196±1.9360 | 0.9306±0.0273 | 0.9696±0.0114 |
| dwconv 3×3 → maximum pooling | 33.8333±1.8195 | 8.3274±1.9330 | 0.9310±0.0270 | 0.9698±0.0114 |
| C(k) → dwconv 3×3 | 33.8371±1.8271 | 8.3255±1.9427 | 0.9298±0.0278 | 0.9682±0.0124 |
| C(k+2) → dwconv 3×3 | 33.8084±1.8136 | 8.3501±1.9324 | 0.9310±0.0264 | 0.9698±0.0106 |
| MHDC | 33.8525±1.8213 | 8.3094±1.9325 | 0.9309±0.0272 | 0.9699±0.0113 |

A → B denotes replacing component A in MHDC with module B; dwconv 3×3, depth-wise convolution with kernel size of 3×3; C(k), cascade of k×1 and 1×k kernels; C(k+2), cascade of (k+2)×1 and 1×(k+2) kernels. ↑ indicates that a higher value corresponds to a better result; ↓ indicates that a lower value corresponds to a better result. MHDC, multi-head decomposable convolution; SD, standard deviation; PSNR, peak signal-to-noise ratio; dB, decibel; RMSE, root mean-square error; SSIM, structural similarity index; FSIM, feature similarity index.

Impact of 1×1 ODDC

We enhanced the channel interaction through the ODDC1×1, which integrates a multi-head SE module to calculate the weight factors along different dimensions of the convolution kernel space. To evaluate the effectiveness of the ODDC1×1, we replaced it with a 1×1 convolution and with an SE block, respectively. The results are presented in Table 4. The ODDC1×1 achieved the lowest RMSE and the highest PSNR and FSIM, and matched the SE module on SSIM.

Table 4

| Modules | PSNR (dB) ↑ | RMSE↓ | SSIM↑ | FSIM↑ |
|---|---|---|---|---|
| 1×1 convolution | 33.8263±1.8339 | 8.3371±1.9508 | 0.9300±0.0283 | 0.9687±0.0125 |
| SE module | 33.8413±1.8245 | 8.3208±1.9380 | 0.9309±0.0274 | 0.9694±0.0118 |
| ODDC1×1 | 33.8525±1.8213 | 8.3094±1.9325 | 0.9309±0.0272 | 0.9699±0.0113 |

↑ indicates that a higher value corresponds to a better result; ↓ indicates that a lower value corresponds to a better result. ODDC1×1, 1×1 omni-dimensional dynamic convolution; SD, standard deviation; SE module, squeeze-excitation module; PSNR, peak signal-to-noise ratio; dB, decibel; RMSE, root mean-square error; SSIM, structural similarity index; FSIM, feature similarity index.

Impact of receptive field-ramp mechanism

A previous study reported that the upper levels tend to capture local information while the lower levels tend to capture relatively long-range dependencies (23). Therefore, we hypothesized that a receptive field-ramp mechanism, in which the receptive field is hierarchically expanded from the top to the bottom levels, would achieve better denoising performance. To validate this hypothesis, we investigated the effect of the kernel size setting on the denoising performance of the model. The results are summarized in Table 5. The model with kernel sizes of [7, 9, 11, 13] achieved the highest PSNR and the lowest RMSE among the tested settings, with SSIM and FSIM close to the best values, supporting the effectiveness of the proposed receptive field-ramp mechanism.

Table 5

| Kernel size | PSNR (dB) ↑ | RMSE↓ | SSIM↑ | FSIM↑ |
|---|---|---|---|---|
| [7, 7, 7, 7] | 33.8491±1.8223 | 8.3129±1.9337 | 0.9304±0.0270 | 0.9702±0.0113 |
| [9, 9, 9, 9] | 33.8498±1.8252 | 8.3129±1.9379 | 0.9307±0.0273 | 0.9693±0.0117 |
| [11, 11, 11, 11] | 33.8492±1.8192 | 8.3391±1.9375 | 0.9303±0.0268 | 0.9701±0.0109 |
| [13, 13, 13, 13] | 33.8495±1.8266 | 8.3135±1.9387 | 0.9309±0.0275 | 0.9696±0.0118 |
| [13, 11, 9, 7] | 33.8420±1.8225 | 8.3197±1.9355 | 0.9307±0.0273 | 0.9694±0.0115 |
| [7, 9, 11, 13] | 33.8525±1.8213 | 8.3094±1.9325 | 0.9309±0.0272 | 0.9699±0.0113 |

↑ indicates that a higher value corresponds to a better result; ↓ indicates that a lower value corresponds to a better result. SD, standard deviation; PSNR, peak signal-to-noise ratio; dB, decibel; RMSE, root mean-square error; SSIM, structural similarity index; FSIM, feature similarity index.

Impact of learning paradigms

To evaluate the impact of different learning paradigms on the image denoising task, we examined three learning paradigms: direct mapping, residual extraction + direct mapping, and residual learning. The results of the quantitative comparison are shown in Table 6. Compared to direct mapping, residual extraction + direct mapping improved SSIM and FSIM but yielded a slightly lower PSNR and a slightly higher RMSE. The model with the residual learning paradigm performed the best on all four metrics, yielding the highest PSNR, SSIM, and FSIM, and the lowest RMSE.

Table 6

| Learning paradigm | PSNR (dB) ↑ | RMSE↓ | SSIM↑ | FSIM↑ |
|---|---|---|---|---|
| Direct mapping | 33.7813±1.8059 | 8.3745±1.9298 | 0.9297±0.0271 | 0.9683±0.0116 |
| Residual extraction + direct mapping | 33.7771±1.8051 | 8.3786±1.9318 | 0.9307±0.0267 | 0.9698±0.0108 |
| Residual learning | 33.8525±1.8213 | 8.3094±1.9325 | 0.9309±0.0272 | 0.9699±0.0113 |

↑ indicates that a higher value corresponds to a better result; ↓ indicates that a lower value corresponds to a better result. MSPMnet, multi-scale perceptual modulation network; SD, standard deviation; PSNR, peak signal-to-noise ratio; dB, decibel; RMSE, root mean-square error; SSIM, structural similarity index; FSIM, feature similarity index.

Discussion

We proposed an MSPMnet that can effectively suppress noise and artifacts while preserving image details. The proposed MSPM in the MSPMnet includes an MHDC for efficient receptive field expansion and multi-scale feature capture, along with a 1×1 ODDC for enhancing the channel-wise interactions of features. Further, a receptive field-ramp mechanism was proposed to model the shift from capturing local dependencies to capturing relatively long-range dependencies as the network gets deeper. Both the qualitative and quantitative results showed that the performance of the proposed MSPMnet was comparable to or better than that of well-designed CNNs and transformer-based methods in LDCT denoising, while also maintaining the conciseness of convolutional networks.

We improved the LDCT image denoising performance through multi-scale learning, enhanced channel interactions, and effective receptive field expansion. Kadimesetty et al. drew inspiration from natural image restoration and proposed a denoising CNN for LDCT perfusion maps, pioneering the direct mapping of low-dose cerebral blood flow (CBF) maps obtained from LDCT perfusion data to high-dose CBF maps (31). Recent advancements in image restoration provided valuable insights for our work. IRNeXt effectively handles degradations of different scales by incorporating a multi-stage mechanism in a U-shaped network (32). The omni-kernel network employs an omni-kernel module composed of global, large, and local branches to efficiently learn global-to-local features (33). The selective frequency network decouples and recalibrates frequency components via multi-scale average pooling, enabling large receptive fields to address large-scale degradation (34). Similarly, the strip attention network integrates multi-scale information by applying strip attention in different directions (35). The ChaIR network enhances channel interactions via channel attention mechanisms in both the spatial and implicit frequency domains (36). Additionally, the focal network emphasizes critical information through a dual-domain selection mechanism during the restoration process (37). These recent advancements motivate further exploration of large and multi-scale receptive fields in LDCT denoising.

Another key design in our study is the receptive field-ramp mechanism. In a recent study (38), Wang et al. adapted the kernel size to the network depth in medical image segmentation tasks to enhance the recognition of complex structures, an approach similar to our receptive field-ramp mechanism, and reported favorable segmentation results. In our study, we proposed the receptive field-ramp mechanism for LDCT denoising. The experimental results showed that the mechanism also improved LDCT denoising performance, suggesting that it is effective across medical image processing tasks.
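The effect of ramping the receptive field with depth can be sketched with the standard receptive-field recurrence for stride-1 convolutions, in which each layer adds (k - 1) × d pixels. The dilation schedules below are hypothetical and are not the settings used in the MSPMnet; they only contrast a ramped stack with a fixed one.

```python
def receptive_fields(kernel_size, dilations):
    """Cumulative receptive field after each stride-1 convolution layer."""
    rf = 1
    history = []
    for dilation in dilations:
        rf += (kernel_size - 1) * dilation  # growth contributed by this layer
        history.append(rf)
    return history


# Hypothetical schedules for six 3x3 layers (assumed values for illustration).
ramp_schedule = [1, 1, 2, 2, 3, 3]  # local first, longer range in deeper layers
flat_schedule = [1, 1, 1, 1, 1, 1]  # same dilation at every depth

ramp_rf = receptive_fields(3, ramp_schedule)
flat_rf = receptive_fields(3, flat_schedule)
print(ramp_rf)  # [3, 5, 9, 13, 19, 25]
print(flat_rf)  # [3, 5, 7, 9, 11, 13]
```

Under these assumed schedules, the early layers of both stacks see the same small neighborhoods, whereas the ramped stack reaches roughly twice the receptive field by the last layer without adding parameters.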

Our method exhibited excellent LDCT denoising performance; however, it has a number of limitations. First, the proposed MSPMnet is a supervised learning network that requires pairs of noisy and clean images for training. In clinical practice, it is challenging to collect such paired training images due to patient movement during data acquisition and radiation-dose limitations. In our future research, we intend to explore semi-supervised learning or blind denoising strategies to leverage the available noisy or clean images, thereby overcoming the limitations associated with noisy/clean image pairing. Second, clinical CT images are typically three-dimensional volumes, but we only used two-dimensional slices of CT volumes for model training and did not consider inter-slice spatial information, which might have led to incoherence between denoised images along the slice direction. In our future work, we will attempt to fully use the spatial information between different CT slices to further improve the restoration of spatial details. Further, we will explore the possibilities of extending this work to other imaging modalities, such as optical coherence tomography and magnetic resonance imaging, and applying it to a broader range of pathology images in the clinic to aid in disease diagnosis.
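One common way to give a two-dimensional network some inter-slice context is to feed each slice together with its neighbors as extra input channels (often called a 2.5D input). The sketch below illustrates that general idea only; it is an assumption for illustration and not the strategy the authors plan to adopt. The helper name, the radius, and the toy volume are hypothetical.

```python
def neighbor_stack(volume, index, radius=1):
    """Return slices index-radius..index+radius, repeating the edge slice
    when a neighbor would fall outside the volume."""
    last = len(volume) - 1
    return [
        volume[min(max(i, 0), last)]
        for i in range(index - radius, index + radius + 1)
    ]


# Toy volume: 4 slices of 2x2 pixels, each filled with its own slice index.
volume = [[[z, z], [z, z]] for z in range(4)]

first = neighbor_stack(volume, 0)   # edge case: lower neighbor is clamped
middle = neighbor_stack(volume, 2)  # interior slice with both neighbors
print([s[0][0] for s in first])     # [0, 0, 1]
print([s[0][0] for s in middle])    # [1, 2, 3]
```

Each stack would then be treated as a multi-channel image whose center channel is the slice to be denoised, so the network can compare a structure with its appearance in adjacent slices.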

Conclusions

The proposed MSPMnet effectively removes noise and artifacts from LDCT images while preserving edge detail. Compared to state-of-the-art CNNs and transformer models, the MSPMnet delivered superior performance in both the qualitative and quantitative assessments, offering a promising solution for LDCT denoising.

Acknowledgments

Funding: None.

Footnote

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-24-1145/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The patient images used in this study are publicly available, and they were retrospectively obtained after approval by the Institutional Review Board of the Mayo Clinic.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Manduca A, Yu L, Trzasko JD, Khaylova N, Kofler JM, McCollough CM, Fletcher JG. Projection space denoising with bilateral filtering and CT noise modeling for dose reduction in CT. Med Phys 2009;36:4911-9. [Crossref] [PubMed]

- Balda M, Hornegger J, Heismann B. Ray contribution masks for structure adaptive sinogram filtering. IEEE Trans Med Imaging 2012;31:1228-39. [Crossref] [PubMed]

- Wang J, Li T, Lu H, Liang Z. Penalized weighted least-squares approach to sinogram noise reduction and image reconstruction for low-dose X-ray computed tomography. IEEE Trans Med Imaging 2006;25:1272-83. [Crossref] [PubMed]

- Zhang Y, Wang Y, Zhang W, Lin F, Pu Y, Zhou J. Statistical iterative reconstruction using adaptive fractional order regularization. Biomed Opt Express 2016;7:1015-29. [Crossref] [PubMed]

- Kudo H, Yamazaki F, Nemoto T, Takaki K. A very fast iterative algorithm for TV-regularized image reconstruction with applications to low-dose and few-view CT. Developments in X-Ray Tomography X; 2016: SPIE. DOI: 10.1117/12.2236788

- Xu Q, Yu H, Mou X, Zhang L, Hsieh J, Wang G. Low-dose X-ray CT reconstruction via dictionary learning. IEEE Trans Med Imaging 2012;31:1682-97. [Crossref] [PubMed]

- Cai JF, Jia X, Gao H, Jiang SB, Shen Z, Zhao H. Cine cone beam CT reconstruction using low-rank matrix factorization: algorithm and a proof-of-principle study. IEEE Trans Med Imaging 2014;33:1581-91. [Crossref] [PubMed]

- Zhang Z, Han X, Pearson E, Pelizzari C, Sidky EY, Pan X. Artifact reduction in short-scan CBCT by use of optimization-based reconstruction. Phys Med Biol 2016;61:3387-406. [Crossref] [PubMed]

- Chen H, Zhang Y, Kalra MK, Lin F, Chen Y, Liao P, Zhou J, Wang G. Low-Dose CT With a Residual Encoder-Decoder Convolutional Neural Network. IEEE Trans Med Imaging 2017;36:2524-35. [Crossref] [PubMed]

- Shan H, Padole A, Homayounieh F, Kruger U, Khera RD, Nitiwarangkul C, Kalra MK, Wang G. Competitive performance of a modularized deep neural network compared to commercial algorithms for low-dose CT image reconstruction. Nat Mach Intell 2019;1:269-76. [Crossref] [PubMed]

- Zhang Y, Hao D, Lin Y, Sun W, Zhang J, Meng J, Ma F, Guo Y, Lu H, Li G, Liu J. Structure-preserving low-dose computed tomography image denoising using a deep residual adaptive global context attention network. Quant Imaging Med Surg 2023;13:6528-45. [Crossref] [PubMed]

- Kang E, Chang W, Yoo J, Ye JC. Deep Convolutional Framelet Denosing for Low-Dose CT via Wavelet Residual Network. IEEE Trans Med Imaging 2018;37:1358-69. [Crossref] [PubMed]

- Wolterink JM, Leiner T, Viergever MA, Isgum I. Generative Adversarial Networks for Noise Reduction in Low-Dose CT. IEEE Trans Med Imaging 2017;36:2536-45. [Crossref] [PubMed]

- Wu D, Kim K, Fakhri GE, Li Q. A Cascaded Convolutional Neural Network for X-ray Low-dose CT Image Denoising. arXiv:1705.04267 2017. DOI: 10.48550/arXiv.1705.04267

- Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, Kaiser Ł, Polosukhin I. Attention is all you need. Proceedings of the 31st International Conference on Neural Information Processing Systems 2017:6000-10.

- Zhang Z, Yu L, Liang X, Zhao W, Xing L. TransCT: dual-path transformer for low dose computed tomography. Medical Image Computing and Computer Assisted Intervention-MICCAI 2021: 24th International Conference, Strasbourg, France, September 27–October 1, 2021, Proceedings, Part VI 24; 2021: Springer. DOI: 10.1007/978-3-030-87231-1_6

- Wang D, Fan F, Wu Z, Liu R, Wang F, Yu H. CTformer: convolution-free Token2Token dilated vision transformer for low-dose CT denoising. Phys Med Biol 2023; [Crossref]

- Yang L, Li Z, Ge R, Zhao J, Si H, Zhang D. Low-Dose CT Denoising via Sinogram Inner-Structure Transformer. IEEE Trans Med Imaging 2023;42:910-21. [Crossref] [PubMed]

- Yang J, Li C, Dai X, Gao J. Focal modulation networks. Advances in Neural Information Processing Systems 2022;35:4203-17.

- Hou Q, Lu CZ, Cheng MM, Feng J. Conv2Former: A Simple Transformer-Style ConvNet for Visual Recognition. IEEE Trans Pattern Anal Mach Intell 2024;46:8274-83. [Crossref] [PubMed]

- Li C, Zhou A, Yao A, editors. Omni-Dimensional Dynamic Convolution. International Conference on Learning Representations; 2022. Available online: https://openreview.net/forum?id=DmpCfq6Mg39

- Hu J, Shen L, Sun G. Squeeze-and-excitation networks. Proceedings of the IEEE conference on computer vision and pattern recognition; 2018. DOI: 10.1109/CVPR.2018.00745

- Raghu M, Unterthiner T, Kornblith S, Zhang C, Dosovitskiy A. Do vision transformers see like convolutional neural networks? Advances in Neural Information Processing Systems 2021;34:12116-28.

- Wang G, Hu X. Low-dose CT denoising using a Progressive Wasserstein generative adversarial network. Comput Biol Med 2021;135:104625. [Crossref] [PubMed]

- Zhang Y, Tian Y, Kong Y, Zhong B, Fu Y. Residual Dense Network for Image Restoration. IEEE Trans Pattern Anal Mach Intell 2021;43:2480-95. [Crossref] [PubMed]

- Zhu L, Han Y, Xi X, Fu H, Tan S, Liu M, Yang S, Liu C, Li L, Yan B. STEDNet: Swin transformer‐based encoder–decoder network for noise reduction in low‐dose CT. Medical Physics 2023;50:4443-58. [Crossref] [PubMed]

- Wang Z, Bovik AC, Sheikh HR, Simoncelli EP. Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 2004;13:600-12. [Crossref] [PubMed]

- Zhang L, Zhang L, Mou X, Zhang D. FSIM: a feature similarity index for image quality assessment. IEEE Trans Image Process 2011;20:2378-86. [Crossref] [PubMed]

- Lim B, Son S, Kim H, Nah S, Mu Lee K. Enhanced deep residual networks for single image super-resolution. Proceedings of the IEEE conference on computer vision and pattern recognition workshops; 2017. DOI: 10.1109/CVPRW.2017.151

- Zhang SY, Wang ZX, Yang HB, Chen YL, Li Y, Pan Q, Wang HK, Zhao CX. Hformer: highly efficient vision transformer for low-dose CT denoising. Nuclear Science Techniques 2023;34:61.

- Kadimesetty VS, Gutta S, Ganapathy S, Yalavarthy PK. Convolutional neural network-based robust denoising of low-dose computed tomography perfusion maps. IEEE Transactions on Radiation and Plasma Medical Sciences 2018;3:137-52.

- Cui Y, Ren W, Yang S, Cao X, Knoll A. IRNeXt: Rethinking convolutional network design for image restoration. International Conference on Machine Learning; 2023;261:6545-64.

- Cui Y, Ren W, Knoll A. Omni-Kernel Network for Image Restoration. Proceedings of the AAAI Conference on Artificial Intelligence 2024;38:1426-34.

- Cui Y, Tao Y, Bing Z, Ren W, Gao X, Cao X, Huang K, Knoll A. Selective frequency network for image restoration. The Eleventh International Conference on Learning Representations; 2023. Available online: https://openreview.net/pdf?id=tyZ1ChGZIKO

- Cui Y, Tao Y, Jing L, Knoll A. Strip attention for image restoration. International Joint Conference on Artificial Intelligence. IJCAI; 2023. DOI: 10.24963/ijcai.2023/72

- Cui Y, Knoll A. Exploring the potential of channel interactions for image restoration. Knowledge-Based Systems 2023;282:111156.

- Cui Y, Ren W, Cao X, Knoll A. Focal network for image restoration. Proceedings of the IEEE/CVF International Conference on Computer Vision; 2023. DOI: 10.1109/ICCV51070.2023.01195

- Wang B, Qin J, Lv L, Cheng M, Li L, He J, Li D, Xia D, Wang M, Ren H, Wang S. DSML-UNet: Depthwise separable convolution network with multiscale large kernel for medical image segmentation. Biomedical Signal Processing and Control 2024;97:106731.