Measuring the binary thickness of buccal bone of anterior maxilla in low-resolution cone-beam computed tomography via a bilinear convolutional neural network

Introduction

The morphology of the buccal bone wall of the anterior maxilla is an important factor in the aesthetic prognosis of immediate dental implants and in the associated decision-making (1). According to its thickness, the buccal bone can be classified into the thick type (thickness ≥1 mm) and the thin type (thickness <1 mm) (2). Because the buccal bone supports the overlying soft tissue, a thin buccal bone carries a high aesthetic risk after implant placement (3), whereas a thick buccal bone is considered favorable for immediate implant placement (4). Therefore, the thickness of the buccal bone should be precisely evaluated before implant surgery to prevent incorrect treatment decisions and the accompanying aesthetic complications.

Accurate measurement of the binary thickness of the buccal bone of the anterior maxilla remains very challenging in the clinic. The buccal bone wall is a micro-scale structure with a mean thickness of 0.75–1.05 mm (5), and it is traditionally measured by dentists on cone-beam computed tomography (CBCT). However, the limit of human visual resolution (approximately 100 microns) restricts the accurate measurement, and even the detection, of such a fine structure (6). Another concern is that primary care dentists with insufficient background knowledge and clinical experience are prone to misdiagnosing the buccal bone (7). Because there are numerous edentulous patients, even a low error rate may result in extensive misdiagnosis and excessive economic loss. Worse still, in low-resolution CBCT the buccal bone consists of only a few pixels, so fine spatial information may be lost. Therefore, it is of great clinical significance to develop a highly accurate quantitative analysis tool for the micro-scale buccal bone, even in low-resolution CBCT.

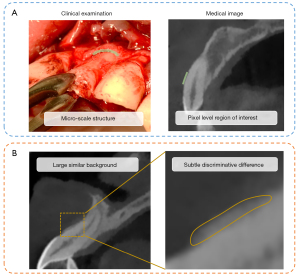

Deep learning has achieved some success in detecting large-scale physiological and pathological structures (8-10); however, it encounters difficulties in the automatic quantitative analysis of micro-scale structures such as the buccal bone. As noted above, the micro-scale buccal bone manifests as a pixel-level region, and minimal errors in feature derivation (i.e., missing one or several pixels) may result in wide divergence in its quantitative description (11). In addition, the subtle but discriminative inter-class visual features (i.e., the subtle difference in thickness between the thick and thin buccal bone types) may be overwhelmed by large but similar intra-class factors (i.e., the large similar background, tooth position, and so on) (12) (Figure 1). Further, traditional supervised learning relies on segmentation-based or key point-based strategies, which are time- and labor-intensive because they require abundant manual annotations of microstructures.

The difficulty of deep learning-assisted analysis of the micro-scale buccal bone calls for a network that can perform quantitative subtle feature extraction in an end-to-end manner. Previous research has provided some strategies that enhance subtle feature analysis, including the attention mechanism (13), the capsule neural network (14), and the integration of external information (11). The attention module is a submodule that attends to loosely defined fine-grained objects by adjusting weights (15), although it tends to overfit, especially on small datasets (11). The capsule network uses vectors as inputs and conveys the spatial relationships between different objects across the capsules (14). However, it mainly focuses on making full use of spatial relationships rather than on extracting subtle features. Moreover, the integration of external information such as multi-modality data requires additional manual annotations (11). High-order feature encoding can produce orderless, translationally invariant texture descriptors that improve fine-grained classification. The bilinear convolutional neural network (BCNN) model exploits this by combining the features from 2 feature extractors through the outer product to produce a high-dimensional covariance matrix, and it therefore has a strong ability to magnify the most discriminative region and reduce the weight of the large similar factors (12). Furthermore, the architecture of the BCNN allows end-to-end gradient computation without manual annotation (11). Consequently, the BCNN is well suited to the quantitative analysis of the micro-scale buccal bone.

In this study, we aimed to precisely analyze the binary thickness of the buccal bone of the anterior maxilla via the BCNN model and to compare the accuracy and efficiency between the BCNN and the dentists. This work provides a paradigm for the deep learning-assisted quantitative analysis of micro-scale structures in low-resolution medical images.

Methods

Ethical approval and data collection

This study received ethical approval (No. KQEC-2020-29-04) from the Medical Ethics Committee of the Hospital of Stomatology of Sun Yat-sen University. The requirement for informed consent from participants was waived due to the retrospective nature of the study. CBCT scans (NewTom VG; QR s.r.l., Verona, Italy) performed from 1 October 2019 to 10 July 2020 in the Hospital of Stomatology of Sun Yat-sen University were collected. The inclusion criteria were as follows: (I) patients older than 18 years; (II) natural left and right central and lateral incisors (#12–#22 teeth). The exclusion criteria were as follows: (I) tooth and supporting structure deformities, including severe alveolar defect, periodontitis, crowded dentition, root resorption, fracture, and periapical periodontitis; (II) severe motion artifact and metal artifact caused by an orthodontic appliance or restoration such that the buccal bone could not be manually identified. The included CBCT scans were imported into coDiagnostiX™ (DentalWings, Montreal, Canada), and the anterior tooth axial images of #12–#22 were obtained (Figure 2).

The thickness of the buccal bone wall was measured at the level 2 mm apical to the cementoenamel junction (CEJ), chosen for its clinical significance, using Adobe Illustrator (Adobe Systems Inc., San Jose, CA, USA). Each image was measured by 2 independent researchers who were well trained in dentistry (having completed dentistry courses and with around 3 years of experience in dental imaging analysis) and familiar with Adobe Illustrator (having been trained by experienced dentists), with an intraclass correlation coefficient (ICC) of 0.899 (Table S1). The 2 researchers then reached an agreement on the measurement results. According to the measured thickness, the buccal bone walls were classified into the thick type (≥1 mm) and the thin type (<1 mm) (Figure S1).

Construction and training of the deep learning models

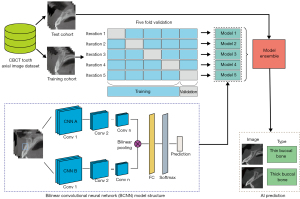

The images from the anterior tooth axial image dataset were first cropped to 187×187 pixels to contain the tooth and alveolar bone, and were then resized to 224×224 pixels as inputs to the following networks. The cropped images were divided into a training cohort (900 patients, 3,600 cropped images) and a test cohort (100 patients, 400 cropped images) in a patient-wise fashion (16). K-fold cross-validation was adopted during the training process. The training cohort was split patient-wise into 5, 8, and 10 folds. In every iteration, K-1 folds were used to train the model and the remaining fold was used for validation. The iteration was repeated K times, and K models were saved. The performance metrics of the models on their respective validation sets were averaged.
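The patient-wise K-fold split described above can be sketched as follows. This is a minimal illustration rather than the released code: the function name `patient_wise_kfold`, the integer patient identifiers, and the fixed seed are assumptions made for the example.

```python
import random

def patient_wise_kfold(patient_ids, k, seed=0):
    """Yield (train_ids, val_ids) so that no patient appears in both sets."""
    ids = sorted(set(patient_ids))
    random.Random(seed).shuffle(ids)          # reproducible shuffle of patients
    folds = [ids[i::k] for i in range(k)]     # k near-equal folds of patients
    for i in range(k):
        val = sorted(folds[i])
        train = [p for j, fold in enumerate(folds) if j != i for p in fold]
        yield train, val

# 900 training patients, as in the text (hypothetical integer IDs).
patients = list(range(900))
splits = list(patient_wise_kfold(patients, k=5))
```

Splitting on patients rather than on images keeps all 4 incisor images of one patient in the same fold, so a validation fold never contains images from a patient seen during training.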

The BCNN architecture consists of a quadruple $(f_A, f_B, P, C)$, comprising 2 parallel feature extractors as feature functions ($f_A$, $f_B$), a bilinear pooling function ($P$), and a classification function ($C$). In the bilinear pooling module $P$, the outer product of the features extracted by the 2 backbones $f_A$ and $f_B$ at each location was calculated to produce a covariance matrix. The bilinear feature was then pooled and flattened into a bilinear vector, followed by the signed square root step and L2 normalization (12). The classifier $C$ predicted the probability of each class from the bilinear feature. This study adopted pretrained visual geometry group 16 (VGG16), ResNet 18, ResNet 34, ResNet 50, ResNet 101, and ResNeXt 50 as backbones of the BCNN (Figure 3). The model complexity was reduced by restructuring the VGG16 backbone to retain only 1 classification head with 1 linear layer. Further, mimicking the second-order pooling method, the 2 feature extractors were identical (i.e., fully shared parameters). The outer product of the 2 identical feature maps was calculated to enrich the local feature representation and improve computational efficiency (17). At the training stage, conventional convolutional neural networks (CNNs) pretrained on ImageNet 1K were also applied to our dataset as baselines, including VGG16, ResNet 18, ResNet 34, ResNet 50, ResNet 101, and ResNeXt 50.
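The bilinear pooling step can be illustrated with a small NumPy sketch. It is an assumption-laden example, not the authors' PyTorch implementation: the function name `bilinear_pool`, the toy feature sizes, the use of sum pooling over locations, and the small `eps` term are all choices made here for illustration.

```python
import numpy as np

def bilinear_pool(feat_a, feat_b, eps=1e-12):
    """feat_a: (L, C_a) and feat_b: (L, C_b) features at L spatial locations."""
    # Sum of the per-location outer products gives a (C_a, C_b) matrix.
    bilinear = feat_a.T @ feat_b
    vec = bilinear.reshape(-1)                      # flatten to a bilinear vector
    vec = np.sign(vec) * np.sqrt(np.abs(vec))       # signed square root
    return vec / (np.linalg.norm(vec) + eps)        # L2 normalization

rng = np.random.default_rng(0)
feats = rng.standard_normal((49, 8))   # toy sizes: a 7x7 map with 8 channels
z = bilinear_pool(feats, feats)        # shared extractor, so both inputs are identical
```

With fully shared parameters the two inputs coincide, so the pooled matrix is symmetric and only one backbone forward pass is needed per image.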

Evaluation of the performance of the deep learning models

Model ensembling was used at the test stage. All K models saved during the K-fold cross-validation output their predictions on the test cohort, and these predictions were averaged to produce the final outcome. Furthermore, the loss reweighting method was applied to the best model to address the sample imbalance. The models were optimized by stochastic gradient descent (SGD). The initial learning rate was 0.05 with a weight decay of 1e−4, and the batch size was 32. All experiments were performed in PyTorch on a workstation with an Nvidia A30 GPU (Nvidia, Santa Clara, CA, USA). The code was released at https://github.com/xiaohhuiiiii/Project_code.
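Averaging the predictions of the K saved models can be sketched as below. The function name `ensemble_average`, the toy probabilities, and the 0.5 decision threshold are assumptions for illustration; the thick type is treated as the positive class.

```python
def ensemble_average(fold_probs):
    """fold_probs: K lists, each holding one model's P(thick) for every test image."""
    k = len(fold_probs)
    n = len(fold_probs[0])
    return [sum(model[i] for model in fold_probs) / k for i in range(n)]

# Toy example: K = 3 hypothetical models and 2 test images.
probs = ensemble_average([[0.9, 0.2], [0.7, 0.4], [0.8, 0.3]])
# Assumed 0.5 threshold on the averaged probability.
labels = ["thick" if p >= 0.5 else "thin" for p in probs]
```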

The performance metrics of the buccal bone type prediction included accuracy, precision, sensitivity, specificity, F1 score, area under the receiver operating characteristic (ROC) curve (AUC), and area under the precision-recall curve (AUPRC). The detailed formulas are listed below. TP, TN, FP, and FN refer to true positive, true negative, false positive, and false negative, respectively.
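In terms of these counts, the threshold-based metrics follow the standard confusion-matrix definitions, sketched here in Python. The function name `binary_metrics` and the counts are made up for illustration and are not taken from the study; the sketch also assumes that no denominator is zero.

```python
def binary_metrics(tp, tn, fp, fn):
    """Standard confusion-matrix metrics; assumes every denominator is non-zero."""
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)          # also called recall
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }

# Made-up counts for a 400-image set (not the study's confusion matrix).
m = binary_metrics(tp=70, tn=270, fp=10, fn=50)
```

AUC and AUPRC are not covered by this sketch because they are computed from the predicted probabilities across all thresholds rather than from a single confusion matrix.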

The bootstrap method was used to calculate the 95% confidence interval (95% CI). Sampling with replacement was repeated for 1,000 iterations with a sample size of 400 images.
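A bootstrap interval of this kind can be sketched as follows. The percentile method, the function names, the seed, and the toy labels are assumptions; the text does not state which bootstrap interval was used.

```python
import random

def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def bootstrap_ci(y_true, y_pred, metric, n_iter=1000, seed=0):
    """Percentile 95% CI from n_iter resamples drawn with replacement."""
    rng = random.Random(seed)
    n = len(y_true)
    stats = []
    for _ in range(n_iter):
        idx = [rng.randrange(n) for _ in range(n)]   # resample n images
        stats.append(metric([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    stats.sort()
    return stats[int(0.025 * n_iter)], stats[int(0.975 * n_iter) - 1]

# Toy labels for 400 images (1 = thick, 0 = thin); 348 of 400 are correct.
y_true = [1] * 120 + [0] * 280
y_pred = [1] * 84 + [0] * 36 + [0] * 264 + [1] * 16
lo, hi = bootstrap_ci(y_true, y_pred, accuracy)
```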

Visualization of the model outputs

This study applied post hoc visualization methods, including gradient-weighted class activation mapping (Grad-CAM), Guided Grad-CAM, and layer-wise relevance propagation (LRP), to show the regions on which the models focused (18). Grad-CAM weights the feature maps by their gradients and highlights the area to which the model attends. Guided Grad-CAM combines Grad-CAM with guided backpropagation and displays the more specific pixels that influence the model. Instead of using gradients, LRP relies on the relevance scores between neurons (18). This study presented the 3 visualization results together with their corresponding original images. To further analyze the model’s activation level toward different categories, an activation analysis was conducted by normalizing the activation scores of a pair of correctly or incorrectly predicted images (e.g., a TP and TN pair, or an FP and FN pair) by the larger maximum score of the 2 images, rather than by each image’s own maximum score. The Grad-CAM heatmaps were then drawn from the adjusted normalized activation scores of the different categories.
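The pairwise normalization used in the activation analysis can be sketched as below. The function name `normalize_pair` and the activation values are hypothetical, and the sketch assumes that at least one score is positive.

```python
def normalize_pair(cam_a, cam_b):
    """Scale two activation maps (nested lists) by the larger of their two maxima."""
    shared_max = max(max(map(max, cam_a)), max(map(max, cam_b)))

    def scale(cam):
        return [[v / shared_max for v in row] for row in cam]

    return scale(cam_a), scale(cam_b)

# Made-up 2x2 activation scores for a TP image and a TN image.
tp_cam = [[0.0, 4.0], [1.0, 2.0]]
tn_cam = [[0.0, 1.0], [0.5, 2.0]]
tp_norm, tn_norm = normalize_pair(tp_cam, tn_cam)
```

Dividing both maps by the same value keeps their relative strengths comparable; normalizing each map by its own maximum would force both to peak at 1 and hide the difference.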

The comparison between dental practitioners, BCNN, and human-machine fusion

This study invited 6 dental practitioners, including 2 senior dentists (more than 5 years of clinical experience), 2 junior dentists (less than 5 years of clinical experience), and 2 dental students (without clinical experience), to diagnose the buccal bone wall type in the test cohort through visual estimation. Expertise in and familiarity with dental imaging increase with the practitioner level. To further validate the effect of the deep learning models in clinical application, human-machine fusion experiments were carried out using the ‘Or’ strategy (19); that is, the buccal bone was diagnosed as the thick type when either the practitioner or the deep learning model judged it to be thick, and as the thin type only when both the practitioner and the deep learning model judged it to be thin (Table S2). The performance of the dentists and of the human-machine fusion was compared with that of the deep learning models.
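The ‘Or’ strategy amounts to a logical OR over the two binary decisions. A minimal sketch, with the function name `or_fusion` and the toy labels as assumptions (1 = thick, 0 = thin):

```python
def or_fusion(practitioner, model):
    """Fused label is thick (1) if either reader says thick, else thin (0)."""
    return [int(p == 1 or m == 1) for p, m in zip(practitioner, model)]

# Toy decisions for 4 images.
fused = or_fusion([1, 0, 0, 1], [0, 0, 1, 1])
```

Because the fused thick calls are a superset of each reader's thick calls, this rule can only keep or raise sensitivity and can only keep or lower specificity relative to either reader alone.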

Results

The demographic characteristics of the dataset

The dataset contained CBCT from 1,000 patients, among which 388 were males and 612 were females. The average age of the included patients was 36.15±13.09 years. There were 4,000 teeth images in total including left and right central and lateral incisors (1,000 images for each type of tooth). The thick bone wall type included 1,181 teeth (29.5%) and the thin bone wall type included 2,819 teeth (70.5%) (Figure 2).

The construction of BCNN and its performance on the training cohort

We constructed the BCNN with 2 identical parallel CNN backbones, followed by a bilinear pooling module and a classification module. The BCNN with the VGG16 backbone (BCNN-VGG16) reached the best overall performance in the training cohort, with an AUC of 0.916 (95% CI: 0.903–0.929), AUPRC of 0.833 (95% CI: 0.806–0.858), and accuracy of 0.869 (95% CI: 0.855–0.883). The BCNN-VGG16 outperformed all the other backbones except in sensitivity, which was lower than that of the BCNN-ResNet18 (Table S3). The loss and accuracy of BCNN-VGG16 converged at approximately epoch 40 during the training process (Figure S2).

Compared with the conventional CNNs, the BCNN-VGG16 had a comparable training cost yet better performance. The floating-point operations (FLOPs) of BCNN-VGG16 and VGG16 were 15.38 and 15.53 GFLOPs, respectively (Table S4). The AUCs of the conventional CNN models were around 0.90, with the highest AUC of 0.908 (95% CI: 0.894–0.921) and AUPRC of 0.821 (95% CI: 0.793–0.847) achieved by ResNet 18 at the training stage, both lower than those of the BCNN-VGG16 (Table S5).

The performance of BCNN on the test cohort

The BCNN-VGG16 achieved the best overall performance on the test cohort on almost all metrics. The accuracy, precision, sensitivity, specificity, F1 score, AUC, and AUPRC of BCNN-VGG16 were 0.870 (95% CI: 0.838–0.902), 0.843 (95% CI: 0.776–0.906), 0.701 (95% CI: 0.617–0.783), 0.943 (95% CI: 0.914–0.968), 0.765 (95% CI: 0.700–0.825), 0.924 (95% CI: 0.896–0.948), and 0.859 (95% CI: 0.803–0.903), respectively. However, the sensitivity of the BCNN-VGG16 (0.701) was slightly lower than that of BCNN-ResNet50 (0.706) (Table 1). The results of the different K-fold cross-validation and loss reweighting settings indicated that 5-fold cross-validation with a 1:1 loss weight ratio achieved the best AUC (Tables S6,S7). Meanwhile, approximately 45% of the misclassified cases (FN and FP) were related to poor image quality, including blurriness and motion artifact, whereas the remaining cases were mainly due to abnormal anatomical structures or an actual buccal bone thickness close to the cut-off point of the binary classification (Table S8, Figure S3).

Table 1

| Model | Accuracy (95% CI) | Precision (95% CI) | Sensitivity (95% CI) | Specificity (95% CI) | F1 score (95% CI) | AUC (95% CI) | AUPRC (95% CI) |
|---|---|---|---|---|---|---|---|
| BCNN-VGG16 | 0.870 (0.838, 0.902) | 0.843 (0.776, 0.906) | 0.701 (0.617, 0.783) | 0.943 (0.914, 0.968) | 0.765 (0.700, 0.825) | 0.924 (0.896, 0.948) | 0.859 (0.803, 0.903) |
| BCNN-Resnet18 | 0.857* (0.825, 0.890) | 0.803* (0.734, 0.868) | 0.702 (0.617, 0.783) | 0.926* (0.893, 0.954) | 0.748* (0.681, 0.811) | 0.906* (0.871, 0.937) | 0.829* (0.769, 0.885) |
| BCNN-Resnet34 | 0.858* (0.825, 0.887) | 0.808* (0.737, 0.878) | 0.691* (0.608, 0.767) | 0.929* (0.896, 0.957) | 0.744* (0.684, 0.804) | 0.911* (0.877, 0.943) | 0.843* (0.788, 0.892) |
| BCNN-Resnet50 | 0.854* (0.818, 0.885) | 0.782* (0.712, 0.850) | 0.706* (0.625, 0.792) | 0.915* (0.882, 0.946) | 0.741* (0.678, 0.802) | 0.906* (0.872, 0.937) | 0.837* (0.785, 0.884) |
| BCNN-Resnet101 | 0.855* (0.820, 0.885) | 0.810* (0.741, 0.875) | 0.674* (0.592, 0.750) | 0.932* (0.904, 0.957) | 0.735* (0.670, 0.796) | 0.914* (0.881, 0.944) | 0.851* (0.791, 0.903) |
| BCNN-ResNeXt50 | 0.850* (0.820, 0.880) | 0.813* (0.745, 0.883) | 0.648* (0.567, 0.733) | 0.936* (0.907, 0.964) | 0.721* (0.654, 0.785) | 0.900* (0.866, 0.934) | 0.830* (0.776, 0.880) |

*, the result of BCNN-VGG16 is statistically significantly different from the result of all contrast models with t-test P<0.05. BCNN, bilinear convolutional neural network; CI, confidence interval; AUC, area under curve; AUPRC, area under the precision-recall curve; BCNN-VGG16, bilinear convolutional neural network with VGG16 as its backbone; VGG, visual geometry group; BCNN-Resnet18, bilinear convolutional neural network with Resnet18 as its backbone; BCNN-Resnet34, bilinear convolutional neural network with Resnet34 as its backbone; BCNN-Resnet50, bilinear convolutional neural network with Resnet50 as its backbone; BCNN-Resnet101, bilinear convolutional neural network with Resnet101 as its backbone; BCNN-ResNeXt50, bilinear convolutional neural network with ResNeXt50 as its backbone.

Visualization of the outcome of the BCNN

The visualization of the conventional CNNs and BCNN was performed on the fine-tuned VGG16 and BCNN-VGG16. The Grad-CAM, Guided Grad-CAM, and LRP results of VGG16 all showed large focused regions, including the tooth, alveolar bone, soft tissue, and background. In contrast, the visualization results of BCNN-VGG16 showed small, local regions that were precisely focused on the buccal bone regardless of the predicted outcome (Figure 4).

Besides, the activation analysis of feature maps showed that the difference in activation score was somewhat large between TP and TN, yet small between FP and FN. The heatmap of the normalized activation score showed that the attention strength of TP was greater than TN, whereas the attention strength of FP and FN was similar (Figure S4).

The comparison between dental practitioners, BCNN, and human-machine fusion

The BCNN surpassed all 3 levels of dental practitioners on almost all performance metrics. The exception was sensitivity, for which the BCNN was lower than the junior dentists (Table 2). Performance differed among the dental practitioners, as the senior dentists performed better than the other practitioners except in sensitivity. The time cost of the BCNN was far lower than that of the dental practitioners (Table 3). Further, within the different intervals of the confusing range of buccal bone thickness, where all practitioners predicted poorly, the accuracy of the BCNN surpassed that of all levels of practitioners in both the narrow and the wide intervals (Table 4).

Table 2

| Predictor | Fusion | Accuracy | Precision | Sensitivity | Specificity | F1 score |
|---|---|---|---|---|---|---|
| Dental student | Pre | 0.71 | 0.51 | 0.73 | 0.70 | 0.60 |
| Dental student | Post | 0.74 | 0.54 | 0.87 | 0.69 | 0.67 |
| Junior dentist | Pre | 0.73 | 0.54 | 0.84 | 0.68 | 0.65 |
| Junior dentist | Post | 0.74 | 0.55 | 0.89 | 0.68 | 0.67 |
| Senior dentist | Pre | 0.80 | 0.69 | 0.62 | 0.87 | 0.64 |
| Senior dentist | Post | 0.83 | 0.69 | 0.80 | 0.85 | 0.74 |
| BCNN-VGG16 | – | 0.87 | 0.84 | 0.70 | 0.94 | 0.77 |

BCNN-VGG16, bilinear convolutional neural network with VGG16 as its backbone; VGG, visual geometry group.

Table 3

| Predictor | Time cost per image (s) |
|---|---|
| Dental student | 109 |
| Junior dentist | 113 |
| Senior dentist | 163 |
| BCNN-VGG16 | 0.06 |

BCNN, bilinear convolutional neural network; BCNN-VGG16, bilinear convolutional neural network with VGG16 as its backbone; VGG, visual geometry group.

Table 4

| Intervals of confusing realm | Included patients, n (%) | Predictor | Accuracy | Precision | Sensitivity | Specificity | F1 score |
|---|---|---|---|---|---|---|---|
| 0.9–1.1 mm | 40 (10.00%) | Dental student | 0.54 | 0.71 | 0.52 | 0.58 | 0.60 |
| 0.9–1.1 mm | 40 (10.00%) | Junior dentist | 0.58 | 0.72 | 0.65 | 0.42 | 0.67 |
| 0.9–1.1 mm | 40 (10.00%) | Senior dentist | 0.54 | 0.81 | 0.44 | 0.73 | 0.54 |
| 0.9–1.1 mm | 40 (10.00%) | BCNN-VGG16 | 0.67 | 0.82 | 0.52 | 0.86 | 0.64 |
| 0.8–1.2 mm | 88 (22.00%) | Dental student | 0.64 | 0.70 | 0.57 | 0.72 | 0.63 |
| 0.8–1.2 mm | 88 (22.00%) | Junior dentist | 0.64 | 0.67 | 0.70 | 0.56 | 0.67 |
| 0.8–1.2 mm | 88 (22.00%) | Senior dentist | 0.64 | 0.79 | 0.46 | 0.85 | 0.56 |
| 0.8–1.2 mm | 88 (22.00%) | BCNN-VGG16 | 0.72 | 0.87 | 0.55 | 0.90 | 0.68 |
| 0.7–1.3 mm | 128 (32.00%) | Dental student | 0.66 | 0.68 | 0.59 | 0.72 | 0.63 |
| 0.7–1.3 mm | 128 (32.00%) | Junior dentist | 0.66 | 0.67 | 0.73 | 0.59 | 0.68 |
| 0.7–1.3 mm | 128 (32.00%) | Senior dentist | 0.67 | 0.78 | 0.50 | 0.85 | 0.59 |
| 0.7–1.3 mm | 128 (32.00%) | BCNN-VGG16 | 0.75 | 0.84 | 0.63 | 0.87 | 0.72 |
| 0.6–1.4 mm | 157 (39.25%) | Dental student | 0.67 | 0.66 | 0.62 | 0.71 | 0.64 |
| 0.6–1.4 mm | 157 (39.25%) | Junior dentist | 0.72 | 0.66 | 0.76 | 0.59 | 0.69 |
| 0.6–1.4 mm | 157 (39.25%) | Senior dentist | 0.71 | 0.80 | 0.54 | 0.86 | 0.63 |
| 0.6–1.4 mm | 157 (39.25%) | BCNN-VGG16 | 0.78 | 0.86 | 0.66 | 0.90 | 0.75 |
| 0.5–1.5 mm | 191 (47.75%) | Dental student | 0.68 | 0.66 | 0.64 | 0.72 | 0.65 |
| 0.5–1.5 mm | 191 (47.75%) | Junior dentist | 0.70 | 0.67 | 0.78 | 0.63 | 0.71 |
| 0.5–1.5 mm | 191 (47.75%) | Senior dentist | 0.72 | 0.80 | 0.55 | 0.87 | 0.64 |
| 0.5–1.5 mm | 191 (47.75%) | BCNN-VGG16 | 0.81 | 0.88 | 0.68 | 0.92 | 0.77 |

BCNN, bilinear convolutional neural network; BCNN-VGG16, bilinear convolutional neural network with VGG16 as its backbone; VGG, visual geometry group.

After human-machine fusion, all the practitioners showed marked improvement in sensitivity, AUC, and AUPRC, whereas their specificity decreased mildly (Table 2, Figure 5). The ROC and precision-recall (P-R) curves revealed that the BCNN outperformed all the practitioners, both alone and after human-machine fusion, indicating that the model performed at the expert level (Figure 5).

Discussion

In this study, we introduced the BCNN to the analysis of micro-scale structures in medical imaging and validated it in the semi-quantitative analysis of the buccal bone from low-resolution CBCT. The best performance was achieved by BCNN-VGG16, with an AUC of 0.924 (95% CI: 0.896–0.948). The visualization showed that the BCNN precisely distinguished the buccal bone wall region from the tooth or alveolar bone region. The BCNN performed at the expert level, and the dentists’ overall diagnostic performance improved when combined with the BCNN.

The application of fine-grained image recognition network to quantitative micro-scale structures analysis

The primary contribution of this study is the application of a fine-grained image recognition network to the analysis of binary buccal bone thickness in low-resolution CBCT. Fine-grained image recognition networks had previously been validated in a series of natural image identification competitions (11), whereas no attempt had been made to apply them to quantitative analysis. In this study, the BCNN performed end-to-end encoding with minimal annotations and training cost, located the micro-scale region even in low-resolution medical images, and yielded satisfactory performance.

The bilinear-based high-order feature interactions enable end-to-end encoding for quantitative feature analysis with few annotations and training prerequisites. Classical texture representation methods [e.g., scale invariant feature transform (SIFT) and CNN] achieve only passable results in fine-grained feature analysis because they fail to learn the underlying features in an end-to-end manner. In contrast, the bilinear architecture is a directed acyclic graph, which enables better back propagation (12). In this way, the BCNN facilitates end-to-end learning of the discriminative local textures (20). To alleviate the potential explosion of computational cost incurred by high-order encoding, the parameters of the 2 identical backbones are fully shared, which improves efficiency by reducing the number of parameters while achieving results comparable to those of 2 different backbones without parameter sharing (12,21). The simplification of the backbones, for example, the pruning of the classification head, is another contributor to the reduced training cost. In this way, the BCNN achieves high efficiency in an end-to-end manner.

The BCNN exhibits precise micro-scale feature learning even in low-resolution medical images. Even though CBCT is widely used in dentistry with irreplaceable advantages including lower radiation dose, lower cost, and shorter scanning time (22), its main drawback is its lower spatial resolution compared with planning computed tomography (CT) (23,24). In this study, the resolution of CBCT was as low as 196.5 µm per pixel and the region of buccal bone accounted for only 20×5 pixels. The covariance matrix-based representation of the BCNN generates numerous high-order features in a non-linear manner. The combination of 2 feature maps allows specific features to be derived regardless of the pixel size and generates more features that improve the classification performance. As the visualization results showed, the BCNN concentrated on the buccal bone wall rather than on the whole tooth, as the conventional CNNs did. The activation analysis also confirmed that the BCNN paid more attention to the region of interest in correctly predicted cases and less attention in incorrectly predicted cases. This means that the model makes its prediction based on the identification and extraction of the buccal bone wall region rather than on irrelevant features.
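A short calculation shows how coarse this resolution is relative to the 1 mm cut-off. It assumes that the reported 196.5 µm pixel size applies to the images on which the buccal bone region was counted and ignores any rescaling from the 187×187 to 224×224 resize; the variable names are illustrative.

```python
PIXEL_SIZE_MM = 0.1965   # 196.5 µm per pixel, as reported in the text
THRESHOLD_MM = 1.0       # thick/thin cut-off

threshold_px = THRESHOLD_MM / PIXEL_SIZE_MM           # pixels spanned by 1 mm
one_pixel_share = PIXEL_SIZE_MM / THRESHOLD_MM        # fraction of the cut-off per pixel
region_mm = (20 * PIXEL_SIZE_MM, 5 * PIXEL_SIZE_MM)   # the 20x5-pixel region in mm

print(f"{threshold_px:.2f} px per mm cut-off, region {region_mm[0]:.2f} x {region_mm[1]:.2f} mm")
```

Under these assumptions the cut-off spans only about 5 pixels, so an error of a single pixel corresponds to roughly one fifth of the threshold.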

The BCNN in this study achieved performance comparable to that of related work. Mastouri et al. used a BCNN with 2 streams (VGG16 and VGG19) followed by a support vector machine (SVM) to classify lung nodules on CT (512×512 pixels) into non-nodules, micro-nodules (<3 mm), and masses (≥3 mm). The accuracy of their study reached 0.9199 and the AUC reached 0.959 (25). Zhao et al. applied BCNN and fast BCNN to assess the wound depth and granulation tissue amount from pictures of diabetes patients and achieved a best accuracy of 0.846 (26). Huang et al. developed the bilinear MobileNet-V3 model to diagnose breast cancer from histopathological slide images and achieved an accuracy of 0.88 (27). In this study, the top-performing model (BCNN-VGG16) achieved an accuracy of 0.870 (95% CI: 0.838–0.902), an AUC of 0.924 (95% CI: 0.896–0.948), and an AUPRC of 0.859 (95% CI: 0.803–0.903). A potential reason for this performance is that the backbones of the BCNN in this study were fully fine-tuned, which gave better generalization ability than the transfer learning methods (28). In addition, the VGG backbone may have outperformed the more advanced CNN backbones because its simple and straightforward architecture facilitates fine-grained and local feature representation.

The significant potential for the clinical application of BCNN

The overall performance of the BCNN is equivalent to the expert level, with higher efficiency. A recent meta-analysis reported that deep learning models possess higher sensitivity and specificity than doctors in diagnosis from medical images (29). In this study, the BCNN-VGG16 achieved markedly higher accuracy (0.87 vs. 0.80), precision (0.84 vs. 0.69), specificity (0.94 vs. 0.87), and F1 score (0.77 vs. 0.65) than all levels of dental practitioners, whereas its time cost was thousands of times lower than that of humans (Tables 2,3). In the dental scenario, 38.9% and 47.7% of patients in the 55–64 and 65–74 years groups in China, respectively, have unrestored missing teeth (30), and 96% of the elderly (≥60 years) in southern Vietnam have missing teeth (31). The BCNN may accelerate the clinical workflow and reduce the time-associated costs of mechanically repeated medical image analysis for so many patients.

Another interesting finding is that human-machine fusion is promising for improving the dentist’s diagnostic ability, especially for the thick buccal bone type. The fusion increased sensitivity by as much as 0.18 (from 0.62 to 0.80), indicating that the machine rescued many thick samples missed by the human. The slight decrease in specificity (from 0.87 to 0.85) suggested that the fusion did not substantially compromise the prediction of thin samples. Notably, the human-machine fusion narrowed the differences caused by individual and experience-related factors and produced consistent results regardless of the operator. This would help numerous junior and primary care dentists to reduce disparities and limit the risk of misdiagnosis.

Interestingly, the BCNN-VGG16 obtained high specificity (0.94) yet somewhat inferior sensitivity (0.70), which was slightly lower than the mean sensitivity of the dental practitioners (0.73). This gap reflects both the inherent trade-off between sensitivity and specificity and the imbalance between the thin and thick types (around 2.4:1), and it can be adjusted through the loss reweighting method. Increasing the loss weight ratio from 1:1 to 1:3 increased sensitivity (from 0.719 to 0.749) and decreased specificity (from 0.944 to 0.922) at a minor cost in AUC (from 0.925 to 0.920) (Table S7). From the clinical aspect, the higher specificity is helpful in excluding the thin buccal bone type, which is not suitable for immediate implant placement. The dentist can use the loss reweighting method to adjust the preference towards the thin or thick type according to clinical demand.
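The effect of loss reweighting can be illustrated with a class-weighted binary cross-entropy. This is a sketch under stated assumptions: the text does not specify the loss function, reading the weight ratio as thin:thick is an assumption, and the function name `weighted_bce` is hypothetical.

```python
import math

def weighted_bce(p_thick, label, w_thin=1.0, w_thick=1.0):
    """Cross-entropy for one image; label 1 = thick (positive), 0 = thin."""
    if label == 1:
        return -w_thick * math.log(p_thick)
    return -w_thin * math.log(1.0 - p_thick)

# The same uncertain prediction on a thick sample under 1:1 and 1:3 weights.
loss_1_1 = weighted_bce(0.4, 1)
loss_1_3 = weighted_bce(0.4, 1, w_thin=1.0, w_thick=3.0)
```

With the assumed 1:3 weighting, an error on a thick sample costs three times as much as before while the loss on thin samples is unchanged, which pushes training towards higher sensitivity at some cost in specificity.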

Implications of BCNN-based micro-scale structure analysis and limitations

The success of BCNN-based buccal bone wall classification suggests that fine-grained image recognition networks have potential in the quantitative analysis of other important millimeter-to-micron structures, including abnormalities of small arteries (internal diameter smaller than 100 µm) (32), small bronchioles (internal diameter less than 2 mm) (33), and so forth. Other kinds of medical images, such as X-ray, ultrasound, and magnetic resonance imaging (MRI), may also be quantitatively analyzed regardless of whether they are of high or low resolution. However, this study turned a regression task into a classification task. In the future, more deep learning methods can be explored for regression-based micro-scale structure analysis to achieve better performance.

Conclusions

We applied the BCNN to the quantitative analysis of the binary thickness of the buccal bone wall and obtained clinically acceptable performance with high specificity. The BCNN precisely located the buccal bone wall region and identified the subtle difference in its thickness. The model generally outperformed the human experts, and the diagnostic ability of all levels of dentists improved with the assistance of the BCNN. This study took the automatic classification of buccal bone as a paradigm and laid a stepping-stone towards the quantitative micro-scale structure analysis of low-resolution medical images.

Acknowledgments

The authors wish to thank Shangyou Wen, Jiayu Li, Jiaxin Xie, Wei Wei, Chenghao Zhang, Xinyi Yang, and Diallo Mariama from Guanghua School of Stomatology, Sun Yat-sen University for their contribution in data collection.

Funding: This project was supported by

Footnote

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-23-744/coif). The authors have no conflicts of interest.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. This study was conducted in accordance with the Declaration of Helsinki (as revised in 2013) and received ethical approval (No. KQEC-2020-29-04) from the Medical Ethics Committee of the Hospital of Stomatology of Sun Yat-sen University. The requirement of informed consent from participants was exempted due to the retrospective nature of the study.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Tsigarida A, Toscano J, de Brito Bezerra B, Geminiani A, Barmak AB, Caton J, Papaspyridakos P, Chochlidakis K. Buccal bone thickness of maxillary anterior teeth: A systematic review and meta-analysis. J Clin Periodontol 2020;47:1326-43.

- Ferrus J, Cecchinato D, Pjetursson EB, Lang NP, Sanz M, Lindhe J. Factors influencing ridge alterations following immediate implant placement into extraction sockets. Clin Oral Implants Res 2010;21:22-9.

- Chappuis V, Araújo MG, Buser D. Clinical relevance of dimensional bone and soft tissue alterations post-extraction in esthetic sites. Periodontol 2000 2017;73:73-83.

- Morton D, Chen ST, Martin WC, Levine RA, Buser D. Consensus statements and recommended clinical procedures regarding optimizing esthetic outcomes in implant dentistry. Int J Oral Maxillofac Implants 2014;29:216-20.

- Rojo-Sanchis J, Soto-Peñaloza D, Peñarrocha-Oltra D, Peñarrocha-Diago M, Viña-Almunia J. Facial alveolar bone thickness and modifying factors of anterior maxillary teeth: a systematic review and meta-analysis of cone-beam computed tomography studies. BMC Oral Health 2021;21:143.

- Westheimer G. Visual acuity: information theory, retinal image structure and resolution thresholds. Prog Retin Eye Res 2009;28:178-86.

- Zhang LJ, Wang Y, Schoepf UJ, Meinel FG, Bayer RR 2nd, Qi L, Cao J, Zhou CS, Zhao YE, Li X, Gong JB, Jin Z, Lu GM. Image quality, radiation dose, and diagnostic accuracy of prospectively ECG-triggered high-pitch coronary CT angiography at 70 kVp in a clinical setting: comparison with invasive coronary angiography. Eur Radiol 2016;26:797-806.

- Wang G, Liu X, Shen J, Wang C, Li Z, Ye L, et al. A deep-learning pipeline for the diagnosis and discrimination of viral, non-viral and COVID-19 pneumonia from chest X-ray images. Nat Biomed Eng 2021;5:509-21.

- Yue W, Zhang H, Zhou J, Li G, Tang Z, Sun Z, Cai J, Tian N, Gao S, Dong J, Liu Y, Bai X, Sheng F. Deep learning-based automatic segmentation for size and volumetric measurement of breast cancer on magnetic resonance imaging. Front Oncol 2022;12:984626.

- Huang Y, Bert C, Sommer P, Frey B, Gaipl U, Distel LV, Weissmann T, Uder M, Schmidt MA, Dörfler A, Maier A, Fietkau R, Putz F. Deep learning for brain metastasis detection and segmentation in longitudinal MRI data. Med Phys 2022;49:5773-86.

- Wei XS, Song YZ, Aodha OM, Wu J, Peng Y, Tang J, Yang J, Belongie S. Fine-Grained Image Analysis With Deep Learning: A Survey. IEEE Trans Pattern Anal Mach Intell 2022;44:8927-48.

- Lin TY, RoyChowdhury A, Maji S. Bilinear CNN Models for Fine-Grained Visual Recognition. 2015 IEEE International Conference on Computer Vision (ICCV). Santiago: IEEE; 2015:1449-57.

- Brauwers G, Frasincar F. A General Survey on Attention Mechanisms in Deep Learning. IEEE Trans Knowl Data Eng 2023;35:3279-98.

- Sabour S, Frosst N, Hinton GE. Dynamic routing between capsules. Proceedings of the 31st International Conference on Neural Information Processing Systems. Long Beach, CA, USA: Curran Associates Inc.; 2017:3859-69.

- Hu J, Shen L, Albanie S, Sun G, Wu E. Squeeze-and-Excitation Networks. IEEE Trans Pattern Anal Mach Intell 2020;42:2011-23.

- Askar H, Krois J, Rohrer C, Mertens S, Elhennawy K, Ottolenghi L, Mazur M, Paris S, Schwendicke F. Detecting white spot lesions on dental photography using deep learning: A pilot study. J Dent 2021;107:103615.

- Carreira J, Caseiro R, Batista J, Sminchisescu C. Semantic Segmentation with Second-Order Pooling. In: Fitzgibbon A, Lazebnik S, Perona P, Sato Y, Schmid C, editors. Computer Vision – ECCV 2012. Lecture Notes in Computer Science. Berlin, Heidelberg: Springer; 2012.

- de Vries BM, Zwezerijnen GJC, Burchell GL, van Velden FHP, Menke-van der Houven van Oordt CW, Boellaard R. Explainable artificial intelligence (XAI) in radiology and nuclear medicine: a literature review. Front Med (Lausanne) 2023;10:1180773.

- Zheng X, Wang R, Zhang X, Sun Y, Zhang H, Zhao Z, et al. A deep learning model and human-machine fusion for prediction of EBV-associated gastric cancer from histopathology. Nat Commun 2022;13:2790.

- Lin TY, RoyChowdhury A, Maji S. Bilinear Convolutional Neural Networks for Fine-grained Visual Recognition. IEEE Trans Pattern Anal Mach Intell 2018;40:1309-22.

- Liu W, Juhas M, Zhang Y. Fine-Grained Breast Cancer Classification With Bilinear Convolutional Neural Networks (BCNNs). Front Genet 2020;11:547327.

- Hou X, Xu X, Zhao M, Kong J, Wang M, Lee ES, Jia Q, Jiang HB. An overview of three-dimensional imaging devices in dentistry. J Esthet Restor Dent 2022;34:1179-96.

- Oyama A, Kumagai S, Arai N, Takata T, Saikawa Y, Shiraishi K, Kobayashi T, Kotoku J. Image quality improvement in cone-beam CT using the super-resolution technique. J Radiat Res 2018;59:501-10.

- Liu J, Yan H, Cheng H, Liu J, Sun P, Wang B, Mao R, Du C, Luo S. CBCT-based synthetic CT generation using generative adversarial networks with disentangled representation. Quant Imaging Med Surg 2021;11:4820-34.

- Mastouri R, Khlifa N, Neji H, Hantous-Zannad S. A bilinear convolutional neural network for lung nodules classification on CT images. Int J Comput Assist Radiol Surg 2021;16:91-101.

- Zhao X, Liu Z, Agu E, Wagh A, Jain S, Lindsay C, Tulu B, Strong D, Kan J. Fine-grained diabetic wound depth and granulation tissue amount assessment using bilinear convolutional neural network. IEEE Access 2019;7:179151-62.

- Huang J, Mei L, Long M, Liu Y, Sun W, Li X, Shen H, Zhou F, Ruan X, Wang D, Wang S, Hu T, Lei C. BM-Net: CNN-Based MobileNet-V3 and Bilinear Structure for Breast Cancer Detection in Whole Slide Images. Bioengineering (Basel) 2022;9:261.

- Mastouri R, Khlifa N, Neji H, Hantous-Zannad S. Transfer Learning Vs. Fine-Tuning in Bilinear CNN for Lung Nodules Classification on CT Scans. Proceedings of the 2020 3rd International Conference on Artificial Intelligence and Pattern Recognition 2020:99-103.

- Liu X, Faes L, Kale AU, Wagner SK, Fu DJ, Bruynseels A, Mahendiran T, Moraes G, Shamdas M, Kern C, Ledsam JR, Schmid MK, Balaskas K, Topol EJ, Bachmann LM, Keane PA, Denniston AK. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: a systematic review and meta-analysis. Lancet Digit Health 2019;1:e271-97.

- Sun H, Du M, Tai B, Chang S, Wang Y, Jiang H. Prevalence and associated factors of periodontal conditions among 55- to 74-year-old adults in China: results from the 4th National Oral Health Survey. Clin Oral Investig 2020;24:4403-12.

- Nguyen TC, Witter DJ, Bronkhorst EM, Truong NB, Creugers NH. Oral health status of adults in Southern Vietnam - a cross-sectional epidemiological study. BMC Oral Health 2010;10:2.

- Bosetti F, Galis ZS, Bynoe MS, Charette M, Cipolla MJ, Del Zoppo GJ, Gould D, Hatsukami TS, Jones TL, Koenig JI, Lutty GA, Maric-Bilkan C, Stevens T, Tolunay HE, Koroshetz W. "Small Blood Vessels: Big Health Problems" Workshop Participants. "Small Blood Vessels: Big Health Problems?": Scientific Recommendations of the National Institutes of Health Workshop. J Am Heart Assoc 2016;5:e004389.

- Ikezoe K, Hackett TL, Peterson S, Prins D, Hague CJ, Murphy D, LeDoux S, Chu F, Xu F, Cooper JD, Tanabe N, Ryerson CJ, Paré PD, Coxson HO, Colby TV, Hogg JC, Vasilescu DM. Small Airway Reduction and Fibrosis Is an Early Pathologic Feature of Idiopathic Pulmonary Fibrosis. Am J Respir Crit Care Med 2021;204:1048-59.